How do I load a large amount of JSON data from an API into database tables?

I'm not very good with SQL, so I'm asking for guidance on the best approach to this problem:

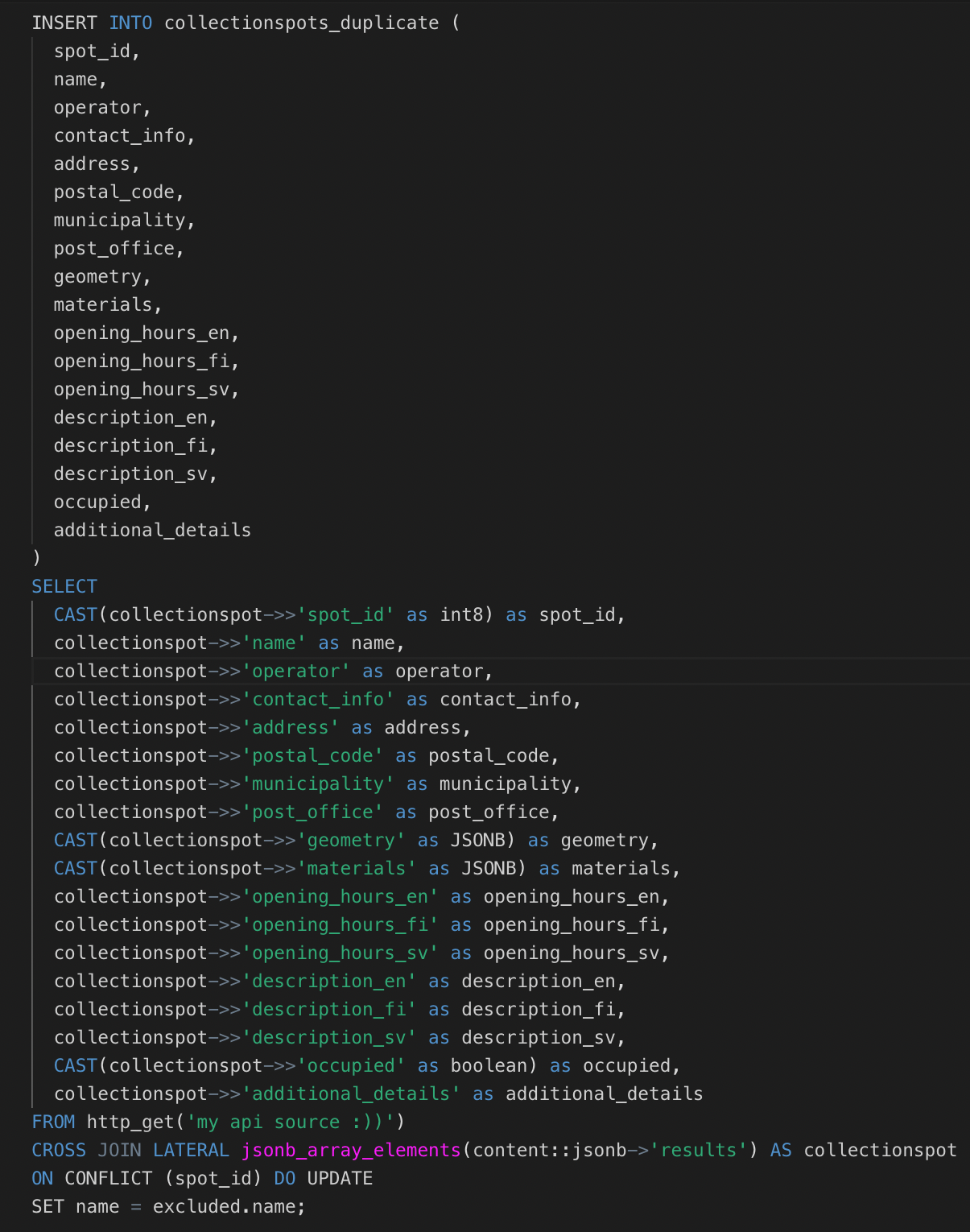

Imagine you have access to an API that returns an array of 10k+ values, each of which is an object. Does it make sense to consume everything the API returns and insert that data into tables? Or are there limitations or challenges I should know about? To my eyes, inserting 10k rows at the same time sounds risky.
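To make the "10k rows at once" concern concrete, here is a minimal sketch of one common way to handle it: split the array into batches and build one parameterized multi-row upsert per batch. The `items` table, its `id` and `name` columns, the `Item` shape, and the batch size are all assumptions for illustration, not the real API data. The statement text uses PostgreSQL's `INSERT ... ON CONFLICT` syntax and would be passed to whatever database client the Node.js server uses.

```typescript
// Hypothetical row shape; the real API objects will have other fields.
interface Item {
  id: number;
  name: string;
}

// Split an array into batches of at most `size` elements.
function chunk<T>(rows: T[], size: number): T[][] {
  if (size <= 0) throw new Error("size must be positive");
  const batches: T[][] = [];
  for (let i = 0; i < rows.length; i += size) {
    batches.push(rows.slice(i, i + size));
  }
  return batches;
}

// Build one parameterized multi-row upsert for a non-empty batch.
// Table and column names are assumed for this sketch.
function buildUpsert(batch: Item[]): { text: string; values: (number | string)[] } {
  if (batch.length === 0) throw new Error("batch must not be empty");
  const values: (number | string)[] = [];
  const tuples = batch.map((row, i) => {
    values.push(row.id, row.name);
    // Two placeholders per row: $1, $2 for the first row, $3, $4 for the next...
    return `($${i * 2 + 1}, $${i * 2 + 2})`;
  });
  const text =
    `INSERT INTO items (id, name) VALUES ${tuples.join(", ")} ` +
    `ON CONFLICT (id) DO UPDATE SET name = EXCLUDED.name ` +
    // Skip the write entirely when the stored value is already the same.
    `WHERE items.name IS DISTINCT FROM EXCLUDED.name`;
  return { text, values };
}
```

Each batch would then be sent as a single statement, ideally with all batches inside one transaction so a failure halfway through does not leave the table partially updated. Batching also keeps each statement under PostgreSQL's limit of 65535 bind parameters, which matters once the rows have many columns.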

I also want to automate this with a cron job (I saw this article: https://supabase.com/blog/postgres-as-a-cron-server) and update the tables every day or every week (not sure yet), so I also need to build logic that checks whether the JSON data is the same as what is already in the database and, if so, doesn't update anything.
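The "don't update if nothing changed" check I have in mind could look roughly like the sketch below, which compares incoming objects against the stored ones by `id` and sorts them into new, changed, and unchanged. It assumes every object has a unique numeric `id` and that the current rows have already been read from the database into a `Map`; the names `Rec`, `stableStringify`, and `diffById` are made up for this example.

```typescript
type Json = string | number | boolean | null | Json[] | { [key: string]: Json };

// Hypothetical record shape: any JSON object that carries a numeric id.
type Rec = { id: number; [key: string]: Json };

// Serialize with object keys sorted, so key order does not affect equality.
function stableStringify(value: Json): string {
  if (value === null || typeof value !== "object") return JSON.stringify(value);
  if (Array.isArray(value)) return `[${value.map((v) => stableStringify(v)).join(",")}]`;
  const keys = Object.keys(value).sort();
  const parts = keys.map((k) => `${JSON.stringify(k)}:${stableStringify(value[k] as Json)}`);
  return `{${parts.join(",")}}`;
}

// Classify incoming records against what is already stored.
function diffById(
  existing: Map<number, Rec>,
  incoming: Rec[]
): { toInsert: Rec[]; toUpdate: Rec[]; unchanged: Rec[] } {
  const result = { toInsert: [] as Rec[], toUpdate: [] as Rec[], unchanged: [] as Rec[] };
  for (const rec of incoming) {
    const current = existing.get(rec.id);
    if (current === undefined) {
      result.toInsert.push(rec); // id not in the database yet
    } else if (stableStringify(current) !== stableStringify(rec)) {
      result.toUpdate.push(rec); // same id, different content
    } else {
      result.unchanged.push(rec); // identical, nothing to write
    }
  }
  return result;
}
```

With this shape, the cron job would only write `toInsert` and `toUpdate` and could exit early when both are empty. It does not handle rows that disappear from the API; that would need a separate pass over ids present in `existing` but missing from `incoming`.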

Also (not sure if it matters), the database will be connected to a Node.js server, which in turn serves a React Native app.
