Serverless Worker Fails: “cuda>=12.6” Requirement Error on RTX 4090 (with Network Storage)

Hi team,

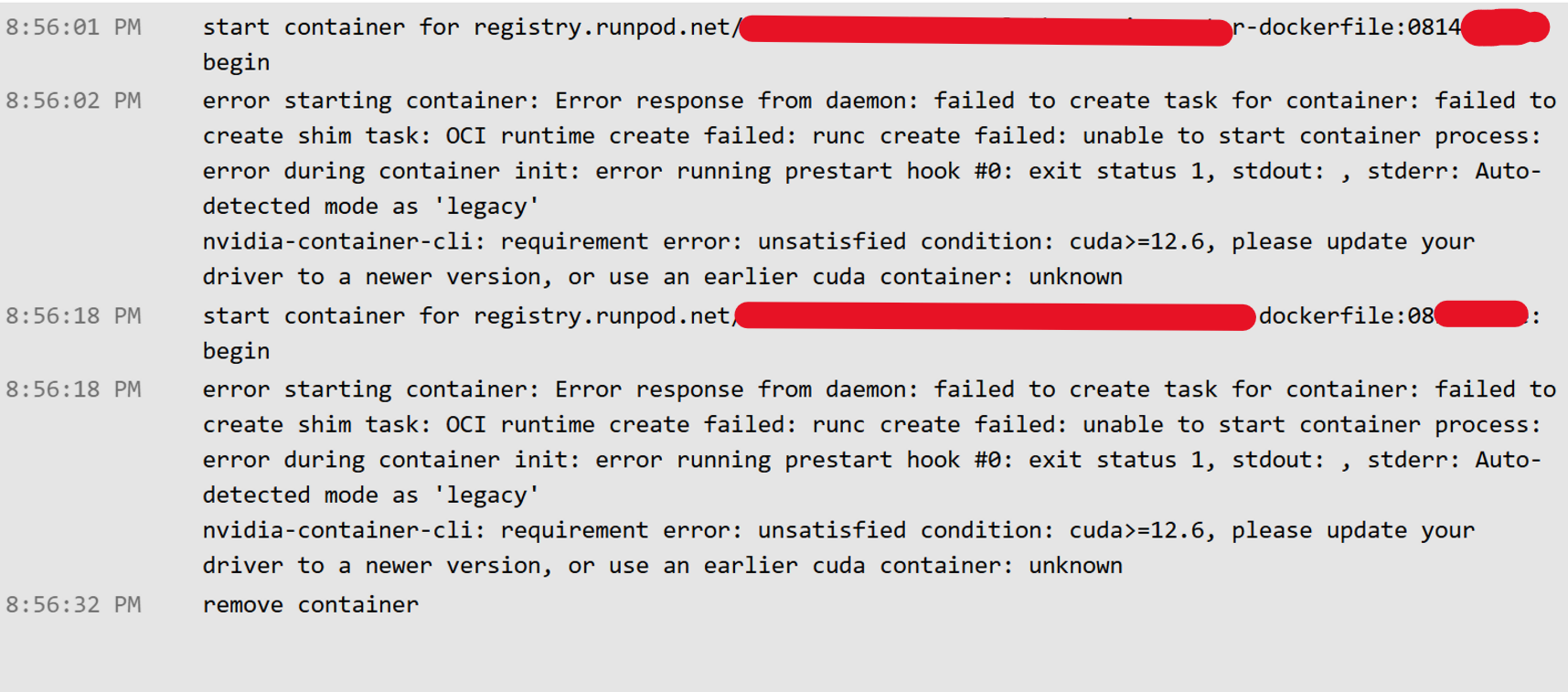

I’m deploying a serverless worker that requires CUDA 12.6 or higher. My endpoint is set to use RTX 4090 GPUs, and I’m using a network volume for model storage (models are not baked into the image). However, when the worker starts, I get this error:

nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.6, please update your driver to a newer version, or use an earlier cuda container: unknown

My endpoint is configured to require CUDA 12.6+.

I’m using network storage for models, as recommended for large model workflows.

The error persists even though the GPU should be compatible.
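In case it helps with diagnosis, here is a minimal sketch of how I could check which CUDA version a host's driver reports. It assumes a container that starts without the `cuda>=12.6` constraint and has `nvidia-smi` on the PATH. It reads the `CUDA Version: X.Y` field from the `nvidia-smi` banner. The function names and the `(12, 6)` default are my own, not part of any platform API.

```python
import re
import subprocess


def parse_cuda_version(smi_output):
    """Return (major, minor) from the 'CUDA Version: X.Y' banner field, or None."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def meets_requirement(version, required=(12, 6)):
    """Compare as integer tuples so that 12.10 correctly ranks above 12.6."""
    return version is not None and version >= required


def host_cuda_version():
    """Run nvidia-smi and parse its banner; None if the tool is missing or fails."""
    try:
        result = subprocess.run(
            ["nvidia-smi"], capture_output=True, text=True, check=True
        )
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None
    return parse_cuda_version(result.stdout)


if __name__ == "__main__":
    version = host_cuda_version()
    if version is None:
        print("Could not determine the driver's CUDA version")
    else:
        status = "meets" if meets_requirement(version) else "does NOT meet"
        print(f"Driver reports CUDA {version[0]}.{version[1]}, which {status} cuda>=12.6")
```

Running this on a worker that lands on the same host pool would show whether the driver's reported CUDA version is below 12.6, which is what the `nvidia-container-cli` message suggests.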

Questions:

Is this due to the host’s NVIDIA driver version being too old, even though the GPU is compatible?

How can I ensure my jobs are only scheduled on hosts with drivers that support CUDA 12.6 or higher?

Is there a way to avoid this error or improve scheduling reliability for CUDA 12.6+ workloads with network storage?

Thanks for your help!
