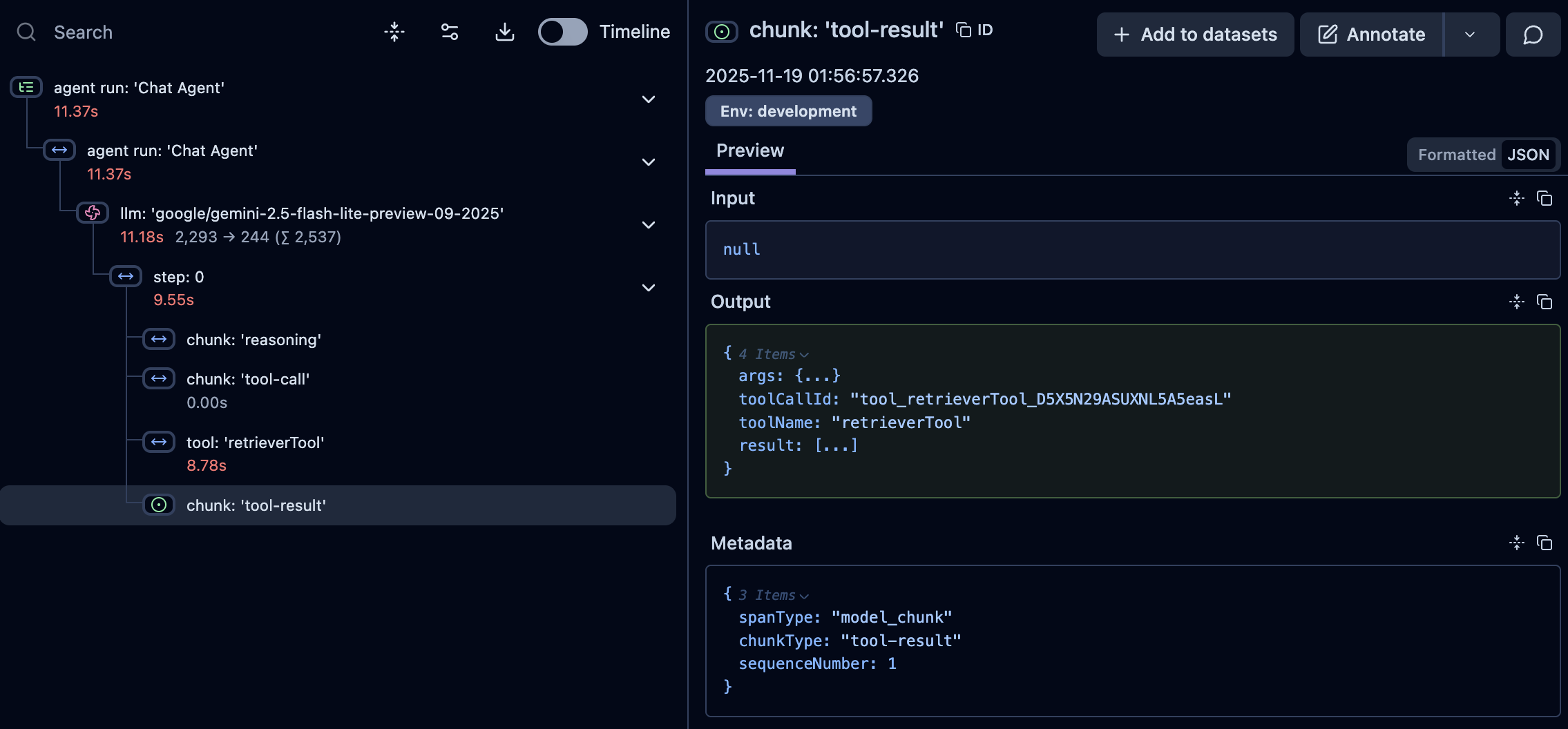

Tool output not being passed back to agent

Hey everyone,

I’m running into a consistent issue with Mastra (latest stable version

- The tool executes normally and produces the correct output.

- But the agent never receives the tool result—the process simply ends quietly with no error.

- No follow-up actions, no continuation, no reasoning loop—just a silent stop after the tool finishes. What I’ve Checked - Tool schema & return types are correct

- Agent is properly configured with the tool

- Clear instructions for returning text output

- Logging shows the tool’s return payload is generated

- Happens across multiple tools

- Using latest Mastra version

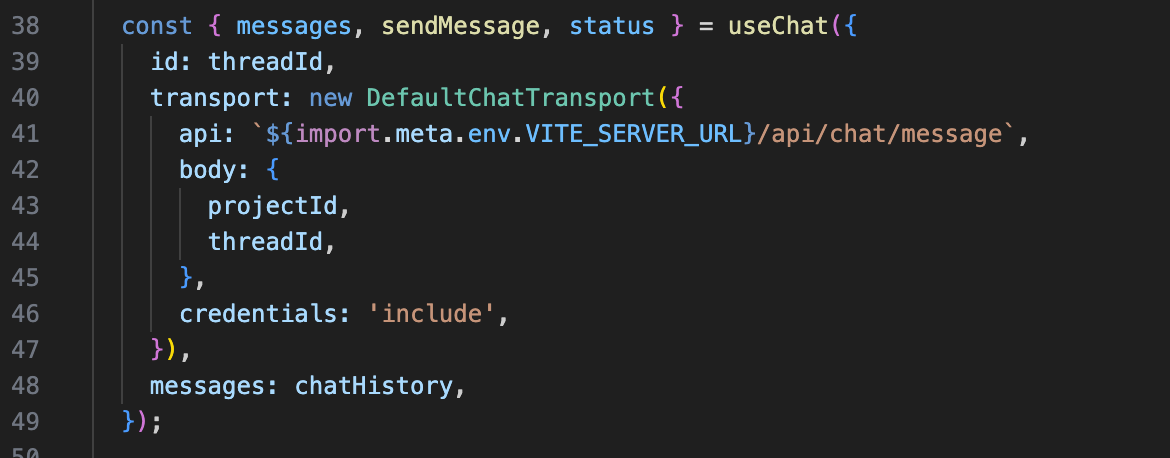

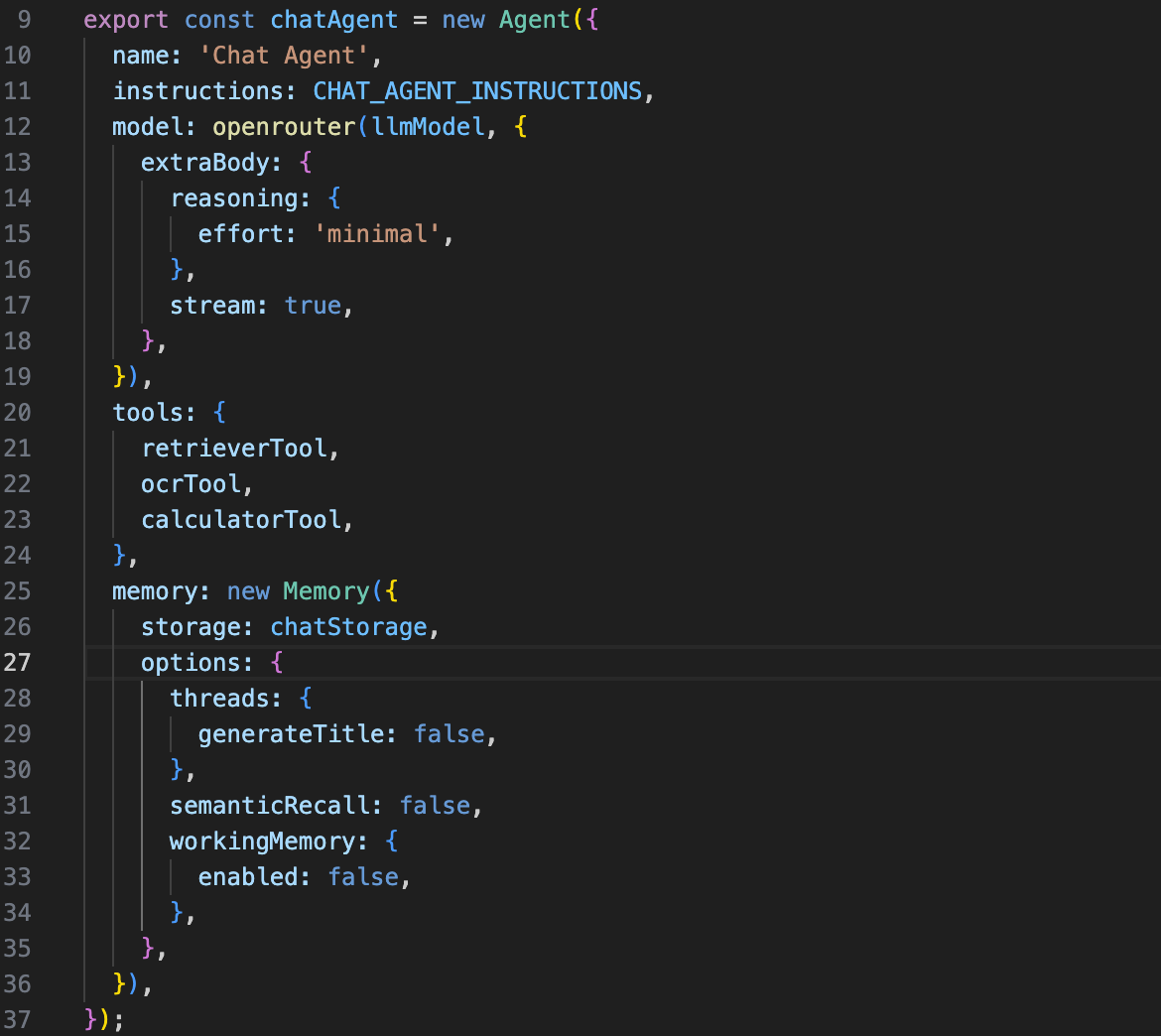

- Setup: Conversation (RAG) Agent + OpenRouter + AI SDK 5 + useChat hook + fastify server What I Expected The agent should consume the tool result and continue its thought/action chain, but the callback never triggers. --- Code Example screenshot attached Attached langufse screenshot PS: it was happening on previous version of Mastra as well, but I updated it to see if anything changes.

0.24.1) where a tool’s output isn’t being passed back to the agent during execution.

What’s Happening:

- The agent successfully calls the tool.- The tool executes normally and produces the correct output.

- But the agent never receives the tool result—the process simply ends quietly with no error.

- No follow-up actions, no continuation, no reasoning loop—just a silent stop after the tool finishes. What I’ve Checked - Tool schema & return types are correct

- Agent is properly configured with the tool

- Clear instructions for returning text output

- Logging shows the tool’s return payload is generated

- Happens across multiple tools

- Using latest Mastra version

- Setup: Conversation (RAG) Agent + OpenRouter + AI SDK 5 + useChat hook + fastify server What I Expected The agent should consume the tool result and continue its thought/action chain, but the callback never triggers. --- Code Example screenshot attached Attached langufse screenshot PS: it was happening on previous version of Mastra as well, but I updated it to see if anything changes.
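For anyone trying to picture the expected behavior, here's a minimal, framework-free sketch of a multi-step tool loop in TypeScript. It is not Mastra's implementation: `runAgentLoop`, `mockModel`, the `searchDocs` tool, and the `maxSteps` parameter are hypothetical names for illustration only. The key step is pushing the tool result back into the history before calling the model again.

```typescript
// Hypothetical sketch of a multi-step agent loop (NOT Mastra's implementation).
// A tool's output is appended to the conversation and the model is called
// again until it answers with text or the step budget runs out.

type Message =
  | { role: "user" | "assistant"; content: string }
  | { role: "tool"; toolName: string; content: string };

// One model "step" either requests a tool call or returns final text.
type ModelStep =
  | { kind: "tool-call"; toolName: string; args: Record<string, unknown> }
  | { kind: "text"; text: string };

type Model = (history: Message[]) => Promise<ModelStep>;
type Tool = (args: Record<string, unknown>) => Promise<string>;

interface LoopResult {
  history: Message[];
  finalText: string | undefined; // undefined => stopped before any text
  steps: number;
}

async function runAgentLoop(
  model: Model,
  tools: Record<string, Tool>,
  prompt: string,
  maxSteps: number, // hypothetical step budget for this sketch
): Promise<LoopResult> {
  const history: Message[] = [{ role: "user", content: prompt }];
  for (let step = 1; step <= maxSteps; step++) {
    const out = await model(history);
    if (out.kind === "text") {
      history.push({ role: "assistant", content: out.text });
      return { history, finalText: out.text, steps: step };
    }
    const tool = tools[out.toolName];
    if (!tool) throw new Error(`Unknown tool: ${out.toolName}`);
    // Key step: feed the tool result back so the next model call can see it.
    const result = await tool(out.args);
    history.push({ role: "tool", toolName: out.toolName, content: result });
  }
  // Budget exhausted right after a tool call: no error, and no text either.
  return { history, finalText: undefined, steps: maxSteps };
}

// Mock model: calls the hypothetical `searchDocs` tool first, then answers
// using the tool output once it appears in the history.
const mockModel: Model = async (history) => {
  const last = history[history.length - 1];
  if (last.role === "tool") {
    return { kind: "text", text: `Answer based on: ${last.content}` };
  }
  return { kind: "tool-call", toolName: "searchDocs", args: { query: "refunds" } };
};

const tools: Record<string, Tool> = {
  searchDocs: async (args) => `doc snippet for "${String(args.query)}"`,
};
```

With `maxSteps` set to 1, the loop returns right after the tool runs, with no error and `finalText` undefined, which looks much like the silent stop described above. If your agent call sets a step limit, it may be worth confirming it allows more than one step; that's something to rule out, not a confirmed cause.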

3 Replies

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/10327

🔍 If you're experiencing an error, please provide a minimal reproducible example to help us resolve it quickly.

🙏 Thank you @expiredMedicine for helping us improve Mastra!

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/10328

🔍 If you're experiencing an error, please provide a minimal reproducible example to help us resolve it quickly.

🙏 Thank you @expiredMedicine for helping us improve Mastra!

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/10329

🔍 If you're experiencing an error, please provide a minimal reproducible example to help us resolve it quickly.

🙏 Thank you @expiredMedicine for helping us improve Mastra!

Hi @expiredMedicine !

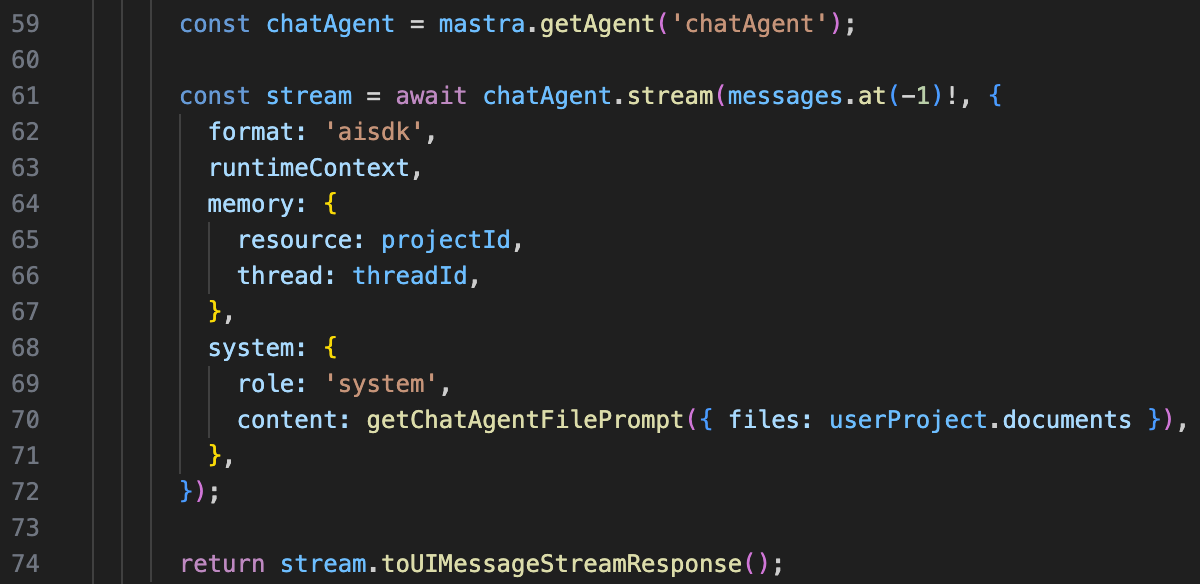

Could you try updating your API endpoint code to use this pattern instead, we've made a lot of changes/fixes to our stream transformer (remove the

format: 'aisdk' and use toAISdkFormat instead:

Also, is the tool-result generated via human-in-the-loop, or is it a tool that doesn't require human interaction?

Thanks! 🙏
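To illustrate what the stream transformer mentioned above does, here's a hypothetical sketch (not Mastra's `toAISdkFormat`) that converts an agent's internal chunks into UI stream parts. The input chunk shapes and the `toUIChunks` name are invented for illustration; the output part names are loosely modeled on AI SDK 5's UI message stream and may not match it exactly. A transformer that skipped the `tool-result` case would lose the tool output without raising any error.

```typescript
// Hypothetical stream transformer sketch (NOT Mastra's toAISdkFormat).
// Input chunk shapes are invented; output shapes are loosely modeled on
// AI SDK 5 UI message stream parts.

type AgentChunk =
  | { type: "text-delta"; id: string; text: string }
  | { type: "tool-call"; toolCallId: string; toolName: string; args: unknown }
  | { type: "tool-result"; toolCallId: string; result: unknown }
  | { type: "finish" };

type UIChunk =
  | { type: "text-delta"; id: string; delta: string }
  | { type: "tool-input-available"; toolCallId: string; toolName: string; input: unknown }
  | { type: "tool-output-available"; toolCallId: string; output: unknown }
  | { type: "finish" };

// Maps each internal chunk to exactly one UI chunk, preserving order.
async function* toUIChunks(source: AsyncIterable<AgentChunk>): AsyncGenerator<UIChunk> {
  for await (const chunk of source) {
    switch (chunk.type) {
      case "text-delta":
        yield { type: "text-delta", id: chunk.id, delta: chunk.text };
        break;
      case "tool-call":
        yield {
          type: "tool-input-available",
          toolCallId: chunk.toolCallId,
          toolName: chunk.toolName,
          input: chunk.args,
        };
        break;
      case "tool-result":
        // Dropping this case would silently hide the tool output downstream.
        yield { type: "tool-output-available", toolCallId: chunk.toolCallId, output: chunk.result };
        break;
      case "finish":
        yield { type: "finish" };
        break;
    }
  }
}
```

Feeding it a `tool-call`, `tool-result`, `text-delta`, `finish` sequence yields one UI part per input chunk, in the same order.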