MastraAI

The TypeScript Agent Framework

From the team that brought you Gatsby: prototype and productionize AI features with a modern JavaScript stack.

Cannot use networks in playground due to resourceId missing

AI tracing instance already registered

Distinguish Step Level Retries / Workflow Level Retries

Disable thinking/reasoning with Ollama provider (+ Ollama cloud)

type annotation error and property 'suspend' missing

"@mastra/core": "0.17.1", to "@mastra/core": "0.18.0", and getting the errors

```
Property 'suspend' is missing in type '{ context: { industry: string; }; runtimeContext: RuntimeContext<unknown>; }' but required in type 'ToolExecutionContext<ZodObject<{ industry: ZodString; ...
```

Has anyone had success setting up Sessions & Users in Langfuse using Mastra

How is writer.write supposed to be used?

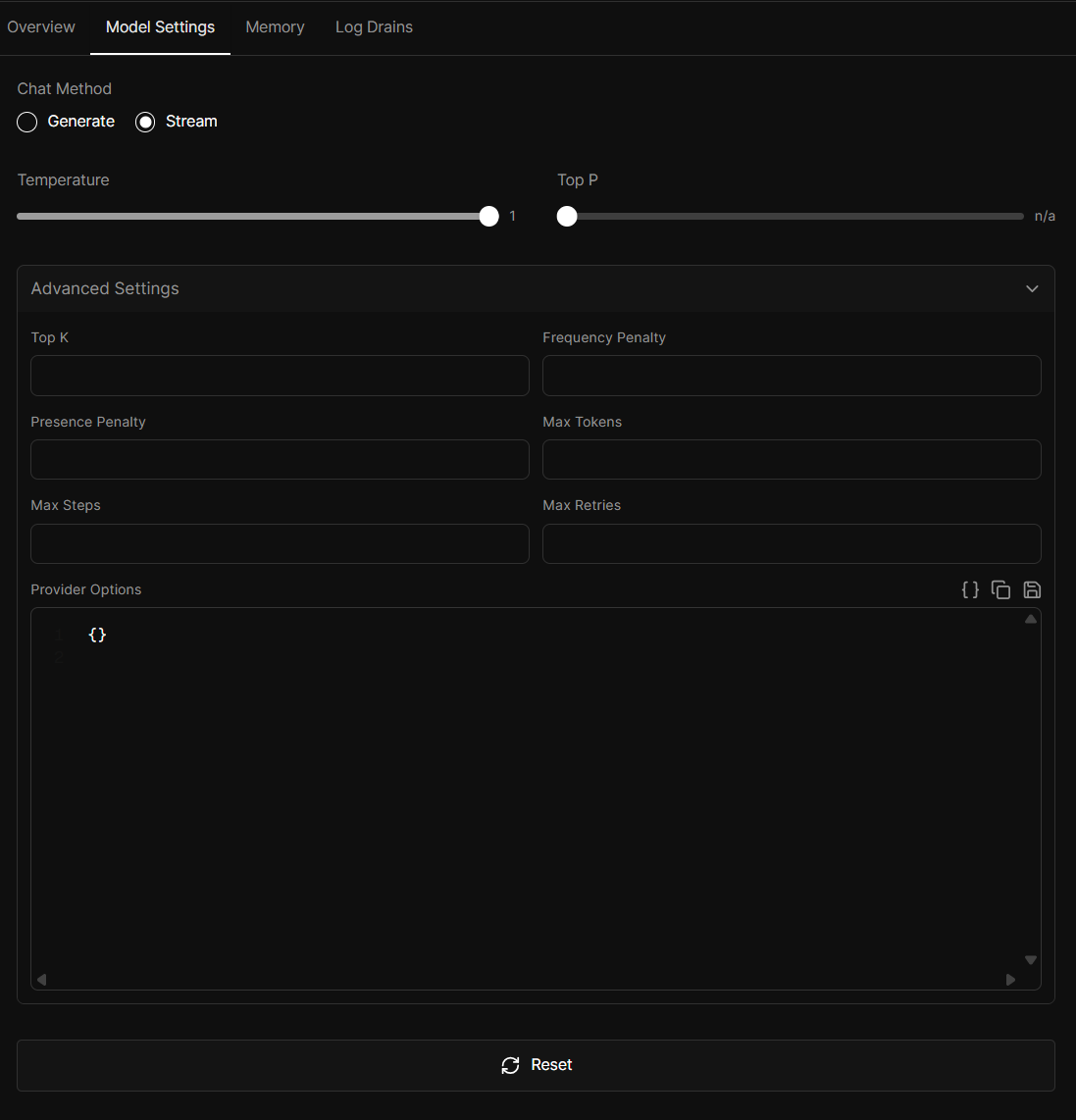

Can't configure model settings when using GPT-5

useChat tool streaming

Traces of structured output parser end up in different tracing context

tracingContext to call an agent's generateVNext, the agent's span is nicely put within the workflow tracing context in Langfuse. However, if you use structured output, the trace ends up as a separate trace in Langfuse. I would prefer (and I think most if not all people would) if the structured output parser trace ended up within the context of the agent run span.

Can I assign provider options for input/output processors?

TypeScript Error while creating MCPServer with workflows

server.ts

then I'm registering everything in index.ts

...

Mastra Cloud Deployment Failing Due to @mastra/cloud Version Incompatibility

@mastra/cloud requires @mastra/core versions 0.10.7-0.14.0 ...

AI Tracing Storage Issues

AI tracing issue with Langfuse: prompt tracing not working

Put Agent Memory on a Different Model than Response?

streamVNext converting image URLs to embedded content - need URLs as strings for tools

Mastra client js SDK types export missing

Mastra React SDK