Setting a cookie in Cheerio before the page request

Resume after crash

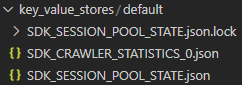

key_value_stores

enqueueLinks with a selector doesn't work?

await enqueueLinks({
    selector: '.pagination li:last-child > a',
    label: 'LIST',
});

How to clear the request queue without stopping the crawler

Requesting proxy rotation for an individual organization

Google shopping

How to scrape emails to one level of nesting and send the results to an API

There is a major problem: Crawlee is unable to bypass the Cloudflare protecti...

useChrome method was tried and failed.

Manual login was successful when done in Chrome (outside Node; also tried with incognito mode, etc.)

https://abrahamjuliot.github.io/creepjs/ Despite Crawlee receiving a higher trust score than the Chrome browser I am currently using, it is unable to pass the Cloudflare page....

Waiting for CF bot check

errorHandler to wait out the bot check, but now I can't find a way to keep the CF cookies for the session. If I call session.setCookies(...) inside the errorHandler, nothing gets stored and the retry uses a new session. I also tried session.markGood(), but that didn't help.

Any ideas?...

How to add data to the SDK_CRAWLER_STATISTICS

await KeyValueStore.setValue("statistics", statistics); but I would prefer to add it to the existing statistics

How to increase memory of PuppeteerCrawler

Use page.on('request') in PuppeteerCrawler

Bet 365 crawler

Is there a way to close the browser in PuppeteerCrawler?

Error while trying to use Apify

Custom storage provider for RequestQueue?

Exclude query parameter URLs from crawl jobs