GitHub Serverless build takes too long

WebSocket Connection to Serverless Failing

wss://<pod_id>-<port>.proxy.runpod.net/ws and expect this to be translated to wss://localhost:<port>/ws. The WebSocket server is started in a thread just before the HTTP server starts. The HTTP server works fine: I can communicate with it via the regular https://api.runpod.ai/v2/<pod_id> URL. The expected port is exposed in the Docker config, as per https://docs.runpod.io/pods/configuration/expose-ports. Any ideas what the issue is?...

Pulling from the wrong cache when multiple Dockerfiles are in the same GitHub repo

Serverless confusion

How to pass parameters to DeepSeek R1?

Job stuck in queue and workers are sitting idle

Endpoint/webhook to automatically update docker image tags?

What is the expected continuous delivery (CD) setup for serverless endpoints for private models?

InvokeAI to Runpod serverless

ComfyUI: from pod to serverless

Is the MASSIVE serverless network volume lag fixed? Is it now usable as a model store?

Serverless with network storage

Workers keep respawning and requests queue indefinitely

Handler output logs

The default steps on the website for serverless create broken containers that I am charged for.

GraphQL Issue

You do not have permission to perform this action.

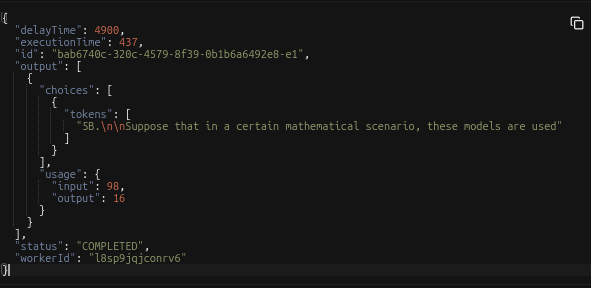

vLLM serverless output cutoff

"worker exited with exit code 1" in my serverless workloads

"Error decoding stream response" on completed OpenAI-compatible streaming requests