Haven't been able to use Stable Diffusion for 2 days.

Hello. I'm trying to use my pod to run Stable Diffusion, but I keep getting a message that I have no GPUs available. I was able to get on briefly today, and then I got kicked off because the GPUs disappeared. I haven't been able to use Stable Diffusion for 2 days. Please fix this as soon as possible.

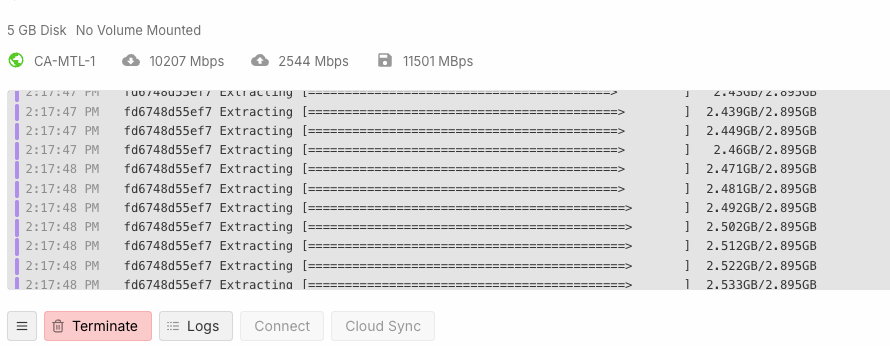

Pod Running

I’m having a problem running a program. I create the pod, connect, the desktop opens, I set the parameters I need and start the job. It begins to “work”, but when it reaches “Cache Latent” the desktop disconnects. I reload the page and the desktop appears again, but after a few seconds it disconnects again. When I reload the page once more, it says it's impossible to connect, forcing me to reconnect from scratch and re-enter all the parameters.

I’ve tried several different GPUs but nothing changes. I also tried using the “web terminal” but when I paste the link into the browser, it says it can’t connect.

I’ve already spent several dollars without even managing to complete 1% of the job. How can I fix this issue and actually get the job to run?

Thank you....

vLLM containers serving LLM streaming requests abruptly stop streaming tokens

This has been an issue for a while. I thought it was a vLLM problem, but I've deployed the same image to AWS and these issues have never occurred there. It happens on A40s and is not region-specific, whereas the A10 equivalent on AWS doesn't have these issues. I've looked at the logs, and there isn't anything unusual in the RunPod container logs.
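To gather evidence for a stall like this (for example, to compare A40 pods with AWS), one option is a client-side watchdog around whatever iterator yields the streamed chunks, such as the one returned by your HTTP or OpenAI-compatible client talking to vLLM. The sketch below is an assumption-laden illustration, not part of vLLM or RunPod: `watch_stream`, `StreamStalled`, and the 30-second default are hypothetical names and values. A background thread drains the stream into a queue so the consumer can time out even if the network read itself hangs.

```python
import queue
import threading
import time
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


class StreamStalled(RuntimeError):
    """Raised when no new item arrives within the allowed gap (hypothetical)."""


def watch_stream(source: Iterable[T], max_gap_s: float = 30.0) -> Iterator[T]:
    """Yield items from `source`, raising StreamStalled after a long silence.

    A daemon thread drains `source` into a queue, so the consumer can wait
    with a timeout even if the underlying network read blocks forever.
    """
    q: "queue.Queue" = queue.Queue()

    def pump() -> None:
        try:
            for item in source:
                q.put((False, item))
        except Exception as exc:  # forward errors from the source to the consumer
            q.put((True, exc))
        else:
            q.put((True, None))  # normal end of stream

    threading.Thread(target=pump, daemon=True).start()
    received = 0
    started = time.monotonic()
    while True:
        try:
            finished, payload = q.get(timeout=max_gap_s)
        except queue.Empty:
            raise StreamStalled(
                f"no item for {max_gap_s:.1f}s after {received} items "
                f"({time.monotonic() - started:.1f}s into the stream)"
            ) from None
        if finished:
            if payload is not None:
                raise payload  # re-raise the source's own error
            return
        received += 1
        yield payload
```

Logging the `StreamStalled` message (token count and elapsed time) on both providers gives a concrete comparison to attach to a report.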

Unable to Mount tmpfs Filesystem as Root in Container Environment

Issue Description

When attempting to mount a tmpfs filesystem to the directory ./mem_disk as the root user inside a container, the operation fails with a "permission denied" error.

Command Executed:

```bash...

CORS Issue

So I set up a few endpoints and they work great. Ironically, the simplest thing eludes me: my Node.js app. I deployed a CPU pod, connected it to storage as needed, deployed the container, and... oof.

https://t1vks417dcde8m-3000.proxy.runpod.net/

{"level":50,"time":1752575170368,"pid":35,"hostname":"8833baa5d40d","locals":{"sessionId":"4f56ab53468849baae365477000dce885cda2bd9c919f740324af98cb1c80bef","isAdmin":false},"url":"https://100.65.14.188:60285/","params":{},"request":{},"message":"Internal Error","error":{},"errorId":"39a1a186-e93e-4cff-938b-6d730cff0d7c","status":500,"stack":"Error: CORS error: Incorrect 'Access-Control-Allow-Origin' header is present on the requested resource\n at universal_fetch (file:///app/build/server/index.js:2564:17)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)"}

...
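The error says the Access-Control-Allow-Origin value does not fit the requesting origin. Under the Fetch standard's CORS check, a non-credentialed request passes only if the header is `*` or exactly equals the serialized origin (scheme, host, and non-default port, no path or trailing slash), and `*` is refused for credentialed requests. A minimal sketch of that comparison follows; `serialize_origin` and `cors_allows` are hypothetical helpers for checking what your server sends. Note also that the logged url is an internal address (https://100.65.14.188:60285/) rather than the proxy hostname, so the origin your app compares against may not be the one the browser shows.

```python
from typing import Optional
from urllib.parse import urlsplit


def serialize_origin(url: str) -> str:
    """Reduce a URL to its origin string: scheme://host[:port].

    Default ports (80 for http, 443 for https) are omitted, as browsers do.
    IPv6 literal hosts are not handled in this sketch.
    """
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    port = parts.port
    if port is None or port == {"http": 80, "https": 443}.get(scheme):
        return f"{scheme}://{host}"
    return f"{scheme}://{host}:{port}"


def cors_allows(page_url: str, allow_origin: Optional[str],
                with_credentials: bool = False) -> bool:
    """Would this Access-Control-Allow-Origin value admit requests from page_url?"""
    if allow_origin is None:
        return False  # header absent
    value = allow_origin.strip()
    if value == "*":
        # The wildcard is not accepted for credentialed requests.
        return not with_credentials
    # Otherwise the header must equal the serialized origin exactly,
    # so a trailing slash or a different port makes it fail.
    return value == serialize_origin(page_url)
```

For example, `cors_allows("https://t1vks417dcde8m-3000.proxy.runpod.net/", "https://t1vks417dcde8m-3000.proxy.runpod.net/")` is False because of the trailing slash, a common cause of this exact message.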

Cannot set up a PyTorch 2.8.0 pod on a 4090 GPU after several attempts

start container for runpod/pytorch:2.8.0-py3.11-cuda12.8.1-cudnn-devel-ubuntu22.04: begin

error starting container: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy'

nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.8, please update your driver to a newer version, or use an earlier cuda container: unknown

start container for runpod/pytorch:2.8.0-py3.11-cuda12.8.1-cudnn-devel-ubuntu22.04: begin

error starting container: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy'

nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.8, please update your driver to a newer version, or use an earlier cuda container: unknown

Solution:

Filtering by CUDA version works, so the pod lands on a host whose driver satisfies the image's cuda>=12.8 requirement.
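The hook fails because the host driver supports an older CUDA version than the image requires. As a rough pre-flight check, assuming you can read the "CUDA Version: X.Y" field in nvidia-smi's header (the highest CUDA version the installed driver supports), you can compare it with the CUDA version embedded in the image tag. The helper names are hypothetical, and parsing nvidia-smi's human-readable output is inherently fragile.

```python
import re
from typing import Optional, Tuple

Version = Tuple[int, int]


def parse_version(text: str) -> Version:
    """Turn '12.8' or '12.8.1' into (12, 8); the patch level is ignored."""
    major, minor = text.split(".")[:2]
    return int(major), int(minor)


def driver_cuda_version(nvidia_smi_output: str) -> Optional[Version]:
    """Extract the 'CUDA Version: X.Y' field from nvidia-smi's header."""
    match = re.search(r"CUDA Version:\s*(\d+\.\d+)", nvidia_smi_output)
    return parse_version(match.group(1)) if match else None


def image_cuda_version(image: str) -> Optional[Version]:
    """Pull the CUDA version out of a tag like '...-cuda12.8.1-cudnn-...'."""
    match = re.search(r"cuda(\d+\.\d+)", image)
    return parse_version(match.group(1)) if match else None


def host_can_run(image: str, nvidia_smi_output: str) -> bool:
    """True if the driver's supported CUDA version is at least the image's."""
    need = image_cuda_version(image)
    have = driver_cuda_version(nvidia_smi_output)
    if need is None or have is None:
        raise ValueError("could not determine one of the CUDA versions")
    return have >= need  # tuples compare (major, minor) in order
```

Filtering by CUDA version when creating the pod, as in the solution, avoids landing on such a host in the first place; this check is only useful when you want to verify a host yourself.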

ComfyUI Template Trouble

I can't figure out what's going on when I try to use the ComfyUI template from RunPod.

I'm running a basic 4090 with no extra storage, so I'm relying on the 50 GB included in the workspace. I thought I could use my own models by adding them to the /workspace/comfyui/models/checkpoints/ directory (as stated in the extra info tab), but whenever I add my .safetensors file, it never appears in ComfyUI when I try to use it.

Is there something simple I'm missing? I've watched many videos and asked Grok and ChatGPT, and neither gave me an answer. Any help is appreciated!...
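A quick way to confirm that the file landed where ComfyUI is expected to look, assuming the template's checkpoint folder is /workspace/comfyui/models/checkpoints/ as the extra info tab says, is to list the model files there with their sizes. A zero-byte or truncated upload, a file in a differently cased folder (Linux paths are case-sensitive), or an unexpected extension would show up immediately. `list_checkpoints` is a hypothetical helper; you may also need to refresh the ComfyUI page or restart ComfyUI after adding files.

```python
from pathlib import Path
from typing import List, Tuple

# Assumed location from the template's extra info tab; adjust if yours differs.
CHECKPOINT_DIR = Path("/workspace/comfyui/models/checkpoints")
MODEL_SUFFIXES = {".safetensors", ".ckpt"}


def list_checkpoints(directory: Path = CHECKPOINT_DIR) -> List[Tuple[str, int]]:
    """Return sorted (filename, size in bytes) for model files directly in `directory`."""
    if not directory.is_dir():
        raise FileNotFoundError(f"{directory} does not exist")
    return sorted(
        (p.name, p.stat().st_size)
        for p in directory.iterdir()
        if p.is_file() and p.suffix.lower() in MODEL_SUFFIXES
    )
```

Inside the pod, `for name, size in list_checkpoints(): print(f"{size / 1e9:.2f} GB  {name}")` prints each checkpoint with its size in GB.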

US-IL-1 Network Lag

I'm working with a volume on US-IL-1 and the network is extremely slow: the delay between issuing a "start" or "stop" command and its execution is easily 30 seconds or more, and I/O is struggling similarly. The performance is far outside normal bounds, so I'm wondering whether it's network-wide, specific to volumes, or something I'm doing.
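To put a number on the I/O slowdown (useful when comparing with another region or attaching to a report), a rough sequential-write probe can time writing a file to the volume and fsync-ing it. This is a sketch under assumptions: the function name, default sizes, and target directory are hypothetical, and a single sequential write says nothing about small-file or metadata latency.

```python
import os
import tempfile
import time


def write_throughput_mib_s(directory: str, total_mib: int = 256,
                           chunk_mib: int = 4) -> float:
    """Write about `total_mib` MiB into `directory`, fsync it, return MiB/s."""
    chunk = os.urandom(chunk_mib * 1024 * 1024)  # incompressible payload
    chunks = max(1, total_mib // chunk_mib)
    fd, path = tempfile.mkstemp(dir=directory, prefix="io_probe_")
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(chunks):
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # wait until the data reaches storage, not just cache
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # leave nothing behind on the volume
    return chunks * chunk_mib / elapsed
```

Running `print(write_throughput_mib_s("/workspace"))` on the volume mount and on the container disk side by side shows whether the slowdown is specific to the volume.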

GPU not visible in the pod.

I have a very simple Docker image with FastAPI, which I pushed to my repo; I then use that image as a template to start an H100 PCIe pod. I used runpod/pytorch:2.2.0-py3.10-cuda12.1.1-devel-ubuntu22.04 as the base image, but for some reason the GPU is not available in the container. If I run nvidia-smi in the container, it complains about missing drivers. I tried terminating the pod and starting a new one several times.

My Dockerfile:

FROM runpod/pytorch:2.2.0-py3.10-cuda12.1.1-devel-ubuntu22.04...

ComfyUI pods not loading on US-KS-2 network storage

We have created several ComfyUI pods on our network storage in the US-KS-2 data center, and ComfyUI seems to load forever.

How to visualize TensorBoard from a RunPod instance

How can I view TensorBoard, started from the terminal, for a training run that is in progress?

tensorboard --logdir=./logs --host=0.0.0.0 --port=6006...
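With TensorBoard bound to 0.0.0.0, the remaining step is usually to expose port 6006 as an HTTP port on the pod and open it through RunPod's proxy. The URL pattern below is inferred from the https://t1vks417dcde8m-3000.proxy.runpod.net/ address in the CORS thread, i.e. `{pod_id}-{port}.proxy.runpod.net`; treat it as an assumption. `proxy_url` is a hypothetical helper, and reading the pod ID from the RUNPOD_POD_ID environment variable assumes the pod sets it (pass the ID explicitly otherwise).

```python
import os
from typing import Optional

# Assumed format, inferred from an address like https://t1vks417dcde8m-3000.proxy.runpod.net/
PROXY_TEMPLATE = "https://{pod_id}-{port}.proxy.runpod.net/"


def proxy_url(port: int, pod_id: Optional[str] = None) -> str:
    """Build the proxy URL for an HTTP port exposed on a pod.

    Falls back to the RUNPOD_POD_ID environment variable when pod_id is
    omitted (assumed to be set inside RunPod pods).
    """
    pod_id = pod_id or os.environ.get("RUNPOD_POD_ID")
    if not pod_id:
        raise ValueError("pass pod_id or set RUNPOD_POD_ID")
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return PROXY_TEMPLATE.format(pod_id=pod_id, port=port)
```

Calling `print(proxy_url(6006))` inside the pod prints the address to open in the browser, provided port 6006 is listed among the pod's exposed HTTP ports.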

SimpleTuner OOM on H100 SXM

Hello, I'm trying to fine-tune SD3.5 Large with SimpleTuner on an H100 SXM and I'm getting out-of-memory errors. I tried an RTX A6000 before, and it still doesn't work even with 80 GB of VRAM. I find this very strange, since 80 GB should normally be more than enough for SD3.5 training.
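Whether 80 GB is "more than enough" depends heavily on the training mode. A back-of-the-envelope sketch, assuming the SD3.5 Large transformer has roughly 8 billion parameters and that a full fine-tune uses AdamW in mixed precision (bf16 weights and gradients plus fp32 master weights and two fp32 Adam moments, about 16 bytes per parameter), and ignoring activations, text encoders, and the VAE entirely:

```python
from typing import Dict

# Bytes per parameter for each tensor kept in GPU memory (assumed setup).
FULL_FT_ADAMW_MIXED: Dict[str, int] = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}
# A frozen bf16 base model, as when training only small LoRA adapters.
FROZEN_BF16_BASE: Dict[str, int] = {"bf16 weights": 2}


def static_memory_gb(num_params: float, bytes_per_param: Dict[str, int]) -> float:
    """Weights + gradients + optimizer state in GB (1e9 bytes), no activations."""
    return num_params * sum(bytes_per_param.values()) / 1e9


PARAMS = 8e9  # assumed ~8B parameters for the SD3.5 Large transformer

full = static_memory_gb(PARAMS, FULL_FT_ADAMW_MIXED)  # 128.0 GB
frozen = static_memory_gb(PARAMS, FROZEN_BF16_BASE)   # 16.0 GB
print(f"full fine-tune: {full:.1f} GB, frozen base for LoRA: {frozen:.1f} GB")
```

Under these assumptions a full fine-tune exceeds 80 GB before a single activation is stored, while a LoRA-style run leaves substantial headroom, so the configured training mode is worth checking first.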

Thanks for your help :)

Here are the logs:...

Config.yaml - invalid dataset format?

I'm having trouble running axolotl train config.yaml to fine-tune Mistral v1 with my own data. I get a lot of confusing errors, and AI feedback focuses mostly on my dataset formatting being incorrect. Currently, I have it like this:

datasets:
  - path: vitalune/business-assistant-ai-tools
    type:...
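The dataset `type` in the post is cut off, so the exact schema it expects is unknown here. If the data can be exported as a local JSONL file, a small checker like the sketch below flags rows that are not valid JSON objects or lack the keys your chosen type needs. `find_bad_rows` is hypothetical, and the alpaca-style keys used as the default are an assumption for illustration only; replace them with the fields your configured type actually requires.

```python
import json
from typing import Iterable, List, Set, Tuple

# Hypothetical default: keys an alpaca-style record is commonly expected to carry.
ALPACA_KEYS: Set[str] = {"instruction", "input", "output"}


def find_bad_rows(lines: Iterable[str], required: Set[str] = ALPACA_KEYS
                  ) -> List[Tuple[int, str]]:
    """Return (line number, problem) for JSONL lines that don't fit `required`."""
    problems: List[Tuple[int, str]] = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((lineno, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            problems.append((lineno, "not a JSON object"))
            continue
        missing = required - set(record)
        if missing:
            problems.append((lineno, f"missing keys: {sorted(missing)}"))
    return problems
```

For a local file, `with open("train.jsonl") as f: print(find_bad_rows(f))` (the filename is a placeholder) lists every offending line at once instead of failing on the first one.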

Cache Docker image

Friends, I have a face-analysis Docker image of 4 GB+. Every time I request a pod via the API, it takes 4 to 7 minutes to deploy. Is there a way to cache this image in the RunPod registry?

Some of my Volume Network Uploads aren't persisting.

Hi, I bought some space on a network volume so I could keep the files I need to start a pod uploaded there. I uploaded two LoRAs, which sit in workspace/comfyui/models/loras/, and they persist across pods.

However, I also have some .py code files and a prompt file within the workspace directory, and they go missing when I delete the pod.

Why is this happening? Do I have to follow a particular folder structure?...
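One thing worth checking is whether the directory holding the .py and prompt files is actually on the network volume's mount rather than on the pod's own disk, which does not outlive the pod. The sketch below walks up from a path to its nearest mount point and compares device IDs; `mount_point` and `same_filesystem` are hypothetical helpers that rely only on `os.path.ismount` and `os.stat`.

```python
import os
from pathlib import Path


def mount_point(path: str) -> str:
    """Walk up from `path` to the nearest directory that is a mount point."""
    current = Path(path).resolve()
    while not os.path.ismount(current):
        current = current.parent  # terminates at "/", which is always a mount
    return str(current)


def same_filesystem(path_a: str, path_b: str) -> bool:
    """True if both existing paths live on the same device (same st_dev)."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev
```

Running `mount_point` on one of the LoRA files and on one of the missing .py files (both paths are yours to fill in) shows whether they sit under the same mount; if the .py file reports a different mount, or `same_filesystem` returns False for the pair, it was not on the network volume.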

L40s pod config says 40 GB disk space, but at launch I see only 20 GB

I'm seeing this issue during a fine-tuning task. The L40s config states 40 GB of total disk space, but when creating a pod I see that only 20 GB is allocated. Am I missing something here?

Error due to this - "OSError: [Errno 28] No space left on device"...

Solution:

Most likely, you have 20 GB of container storage and 20 GB of volume storage. You can edit the pod and add more volume storage.
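To see which of the two 20 GB areas is filling up before hitting Errno 28, `shutil.disk_usage` can report free space per path. The paths / (container disk) and /workspace (volume) are assumptions about a typical pod layout, and `disk_report` is a hypothetical helper; paths that do not exist are skipped.

```python
import shutil
from typing import Dict, Iterable

GB = 1e9


def disk_report(paths: Iterable[str]) -> Dict[str, Dict[str, float]]:
    """Total/used/free space in GB for each path that exists."""
    report: Dict[str, Dict[str, float]] = {}
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except FileNotFoundError:
            continue  # e.g. no volume mounted at this path
        report[path] = {
            "total_gb": usage.total / GB,
            "used_gb": usage.used / GB,
            "free_gb": usage.free / GB,
        }
    return report


for path, stats in disk_report(["/", "/workspace"]).items():
    print(f"{path:12} {stats['free_gb']:.1f} GB free of {stats['total_gb']:.1f} GB")
```

If / is the one running out, pointing caches and checkpoints at the volume path (or enlarging the volume as described above) is the usual remedy.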

What are the ROCm Supported Versions?

See title. AMD doesn't make it very obvious: which ROCm versions matter to AMD users aside from the absolute latest, 6.4.1?

Deploy invalid pod with critical fault

When there is a problem inside the Docker code, for example start.sh exits, how can I stop the pod from restarting automatically? I don't want to terminate the pod, because then I can't find the logs. I need it to stop without restarting, because I don't want to pay until somebody fixes the problem. All pods are started automatically via REST API requests.

Whether I return exit 0 or exit 1, the pod is always restarted automatically. Does this depend on the docker-compose.yaml?

This is what I have set...

not available

root@68c1e4141477:/workspace# python -c "
import torch
print(f'CUDA available: {torch.cuda.is_available()}')
print(f'CUDA device count: {torch.cuda.device_count()}')
if torch.cuda.is_available():...
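A self-contained version of this kind of check, written as a script rather than a python -c one-liner, is sketched below. It also reports the CUDA version PyTorch was built with (`torch.version.cuda`, which is None for CPU-only builds), which helps distinguish a driver or GPU-visibility problem from a CPU-only PyTorch install. `cuda_report` is a hypothetical name; the PyTorch attributes it uses are standard.

```python
from typing import Any, Dict


def cuda_report() -> Dict[str, Any]:
    """Collect basic facts about PyTorch's view of the GPU(s)."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    report: Dict[str, Any] = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # None means this PyTorch build has no CUDA support at all.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }
    if report["cuda_available"]:
        report["devices"] = [
            torch.cuda.get_device_name(i) for i in range(report["device_count"])
        ]
    return report


for key, value in cuda_report().items():
    print(f"{key}: {value}")
```

If `built_with_cuda` has a value but `cuda_available` is False, the problem is on the driver or container side rather than in the Python environment.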