How do I debug auth requests?

I'm having an issue where `/auth/v1/token` suddenly returns a 400. I've set the token expiration window to 20 seconds.
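With an access-token lifetime as short as 20 seconds, it can help to check what expiry the client actually holds when the refresh fires. Below is a minimal sketch, assuming the access token is a standard three-part JWT with a numeric `exp` claim (seconds since the Unix epoch). `secondsUntilExpiry` is a hypothetical helper, not part of supabase-js, and it does not verify the signature.

```typescript
// Hypothetical helper (not part of supabase-js): decodes a JWT payload WITHOUT
// verifying the signature and returns the seconds remaining until `exp`.
function secondsUntilExpiry(accessToken: string, nowMs: number = Date.now()): number {
  const parts = accessToken.split(".");
  if (parts.length !== 3) {
    throw new Error("Not a JWT: expected header.payload.signature");
  }
  // JWTs use base64url; convert to standard base64 and restore padding for atob.
  let b64 = parts[1].replace(/-/g, "+").replace(/_/g, "/");
  while (b64.length % 4 !== 0) {
    b64 += "=";
  }
  // atob returns a binary string, which is fine for ASCII claims such as `exp`.
  const payload = JSON.parse(atob(b64)) as { exp?: number };
  if (typeof payload.exp !== "number") {
    throw new Error("JWT payload has no numeric exp claim");
  }
  // `exp` is in seconds; a negative result means the token has already expired.
  return payload.exp - Math.floor(nowMs / 1000);
}
```

For example, calling `secondsUntilExpiry(session.access_token)` on the session returned by `supabase.auth.getSession()` shows whether the token is already expired (a negative number) by the time the refresh request goes out.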

I ran into this while investigating why the Supabase token disappears from `localStorage`.
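To see exactly when the session is removed from `localStorage`, one option is to log every auth event with a timestamp. As far as I know, in supabase-js v2 a refresh that fails with a non-retryable error is followed by a `SIGNED_OUT` event and the stored session is cleared, so the order of `TOKEN_REFRESHED` and `SIGNED_OUT` events should line up with the 400. This is a minimal sketch: `traceAuthEvents` is a hypothetical helper, and `AuthLike` is an assumed structural type that a real client's `supabase.auth` should satisfy, so the block compiles without importing supabase-js.

```typescript
// Assumed minimal shape of `supabase.auth`; only onAuthStateChange is used.
type AuthLike = {
  onAuthStateChange(
    callback: (event: string, session: { expires_at?: number } | null) => void,
  ): unknown;
};

// Hypothetical helper: logs each auth event with a timestamp and the session's
// expires_at (seconds since epoch), or "none" when the session was cleared.
function traceAuthEvents(auth: AuthLike, log: (line: string) => void = console.log): void {
  auth.onAuthStateChange((event, session) => {
    const expiresAt = session?.expires_at ?? "none";
    log(`${new Date().toISOString()} ${event} expires_at=${expiresAt}`);
  });
}
```

Usage would be `traceAuthEvents(supabase.auth)` immediately after creating the client, so that events fired during startup are captured as well.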

It's not clear why this refresh-token request returned a 400; as far as I can tell, the flow leading up to it should have produced a successful request.
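The status code alone says little, so reading the response body of the failing request is usually the next step. The sketch below rebuilds a refresh call by hand. It assumes the endpoint is `POST /auth/v1/token?grant_type=refresh_token` with a JSON body `{ "refresh_token": ... }` and an `apikey` header carrying the anon key; `buildRefreshRequest` and `replayRefresh` are hypothetical helpers. Supabase refresh tokens are generally single-use, so replaying a token the client is also using can itself cause a 400; test with a token you are prepared to discard.

```typescript
// Plain object shape so the sketch doesn't depend on DOM RequestInit typings.
type RefreshRequest = {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
};

// Hypothetical helper: builds the refresh request (endpoint and headers are assumptions).
function buildRefreshRequest(supabaseUrl: string, anonKey: string, refreshToken: string): RefreshRequest {
  const base = supabaseUrl.replace(/\/+$/, ""); // drop trailing slashes
  return {
    url: `${base}/auth/v1/token?grant_type=refresh_token`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json", apikey: anonKey },
      body: JSON.stringify({ refresh_token: refreshToken }),
    },
  };
}

// Hypothetical helper: sends the request and prints the status plus the raw body,
// which typically explains the 400 more precisely than the status code.
async function replayRefresh(supabaseUrl: string, anonKey: string, refreshToken: string): Promise<void> {
  const { url, init } = buildRefreshRequest(supabaseUrl, anonKey, refreshToken);
  const res = await fetch(url, init);
  console.log(res.status, await res.text());
}
```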

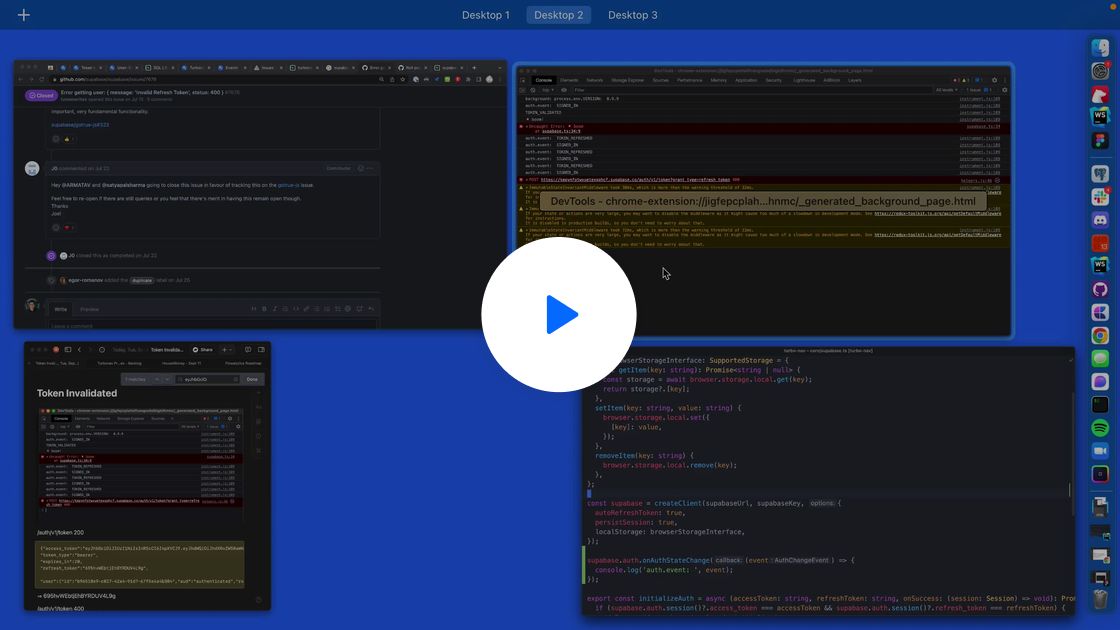

Here's a video walking through the situation:

https://share.cleanshot.com/mc6hDzOEoD7lzwk4ZSrk