Error when uploading to Storage (Swift)

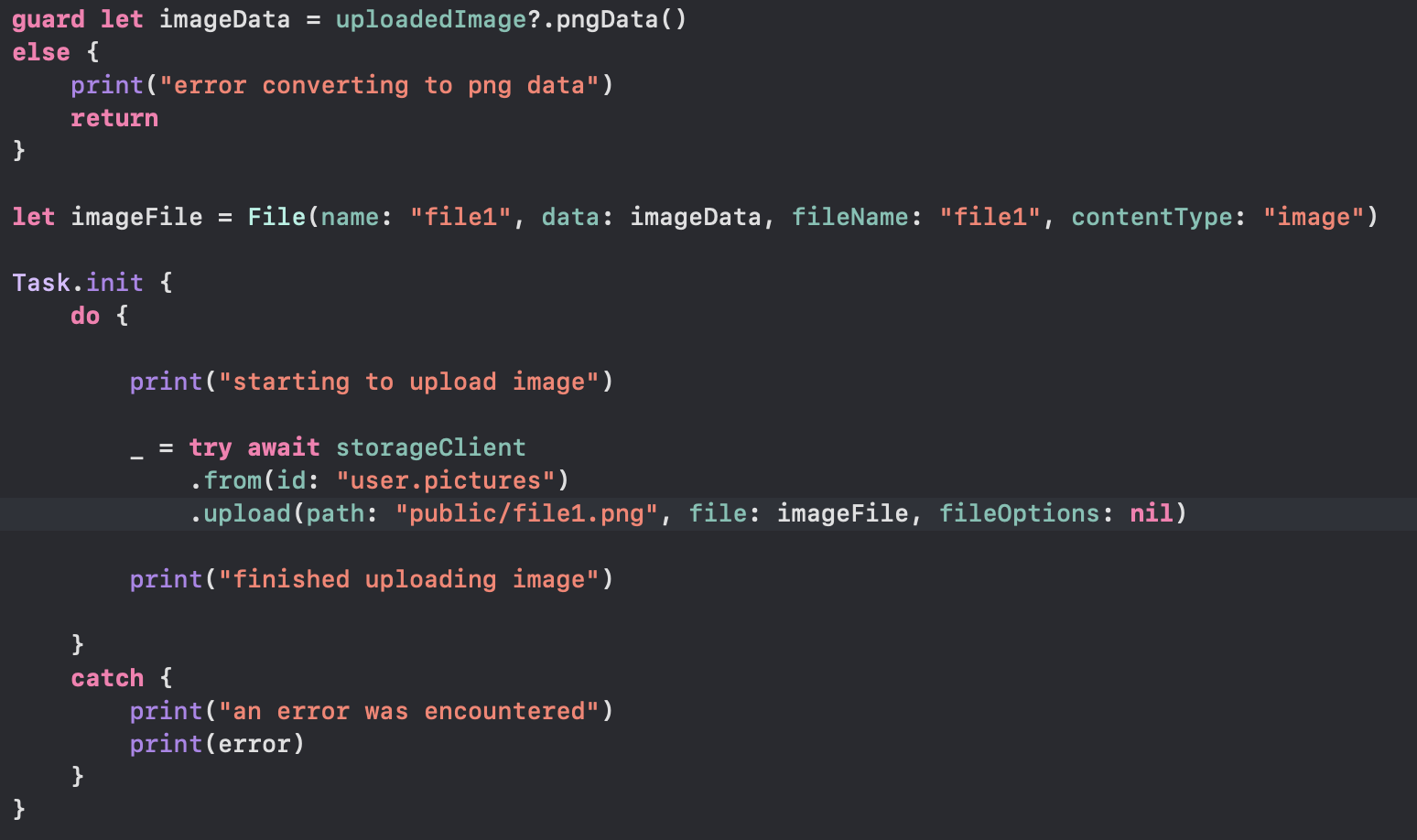

I keep getting this error when trying to upload an image file to my Supabase Storage bucket: "StorageError(statusCode: Optional(400), message: Optional("Error"))". I am trying to figure out whether the problem is in my code or in the way I have set up my Storage policies. I have attached an image of my code. Any help would be greatly appreciated!