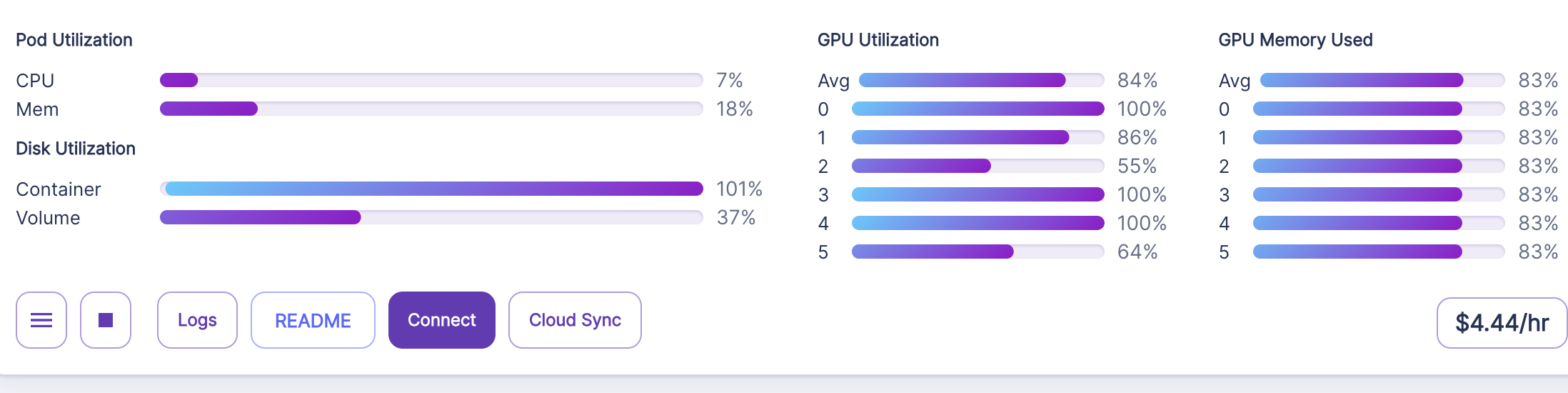

Hmm, could this be related? With 6 processes, a batch size of 2, and multi-GPU enabled, I get 96 epochs at 100 batches per epoch. But with 1 process, a batch size of 1, and multi-GPU disabled, I get 8 epochs at 1200 batches per epoch.
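For what it's worth, both setups come out to the same total number of batches (96 × 100 = 8 × 1200 = 9600). That would be consistent with a fixed step budget and a dataset sharded across processes. Here is a rough sketch of that arithmetic. The 1200-sample dataset size and the 9600-step budget are my assumptions, inferred from the numbers above, and the helper names are hypothetical, not taken from any library:

```python
import math

def batches_per_epoch(num_samples, num_processes, batch_size):
    # Assumes the dataset is split evenly across processes, so each step
    # consumes num_processes * batch_size samples in total.
    return math.ceil(num_samples / (num_processes * batch_size))

def epochs_for_step_budget(total_steps, steps_per_epoch):
    # Assumes training runs for a fixed number of steps, so the epoch
    # count is derived from the step budget rather than set directly.
    return math.ceil(total_steps / steps_per_epoch)

NUM_SAMPLES = 1200  # assumption: inferred from 1200 batches at batch size 1
TOTAL_STEPS = 9600  # assumption: 8 * 1200 == 96 * 100

for procs, bs in [(1, 1), (6, 2)]:
    bpe = batches_per_epoch(NUM_SAMPLES, procs, bs)
    epochs = epochs_for_step_budget(TOTAL_STEPS, bpe)
    print(f"{procs} process(es), batch size {bs}: "
          f"{epochs} epochs at {bpe} batches per epoch")
```

If that is what is happening, the differing epoch counts are just the same step budget divided by a different effective batch size (12 vs. 1), not a separate bug.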