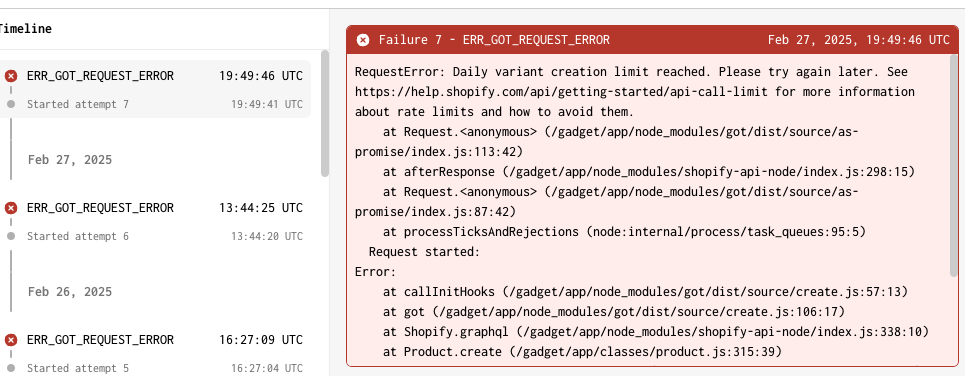

Reached variant limit errors retrying a lot

I'm trying to figure out why so much usage is being generated when these errors are being hit. I would have expected the retries to happen with exponential backoff and that much less usage would be occurring since it would be spending most of the time waiting for the next retry - but it was running pretty close to 24 hours/day.

I have since disabled the sync job and cleared the queue.

https://rapapp.gadget.app/edit/development/queues/job-aReCc797o-FefrRYXPCf8

27 Replies

@Chocci_Milk any word on this one?

Could you please share a traceId?

3371fe7d22ef9a3f1fe60a1cbd01e700

Ok, looking at this error from Shopify. You're attempting to create way too many variants in one day. You may need to look into their documentation to see how many you can create and split the work into smaller chunks. Might I ask why you're creating so many variants?

Exponential backoff wouldn't help you here

Yes I know what the error message means. I'm creating a lot of variants because this is a sync from another system where the intention is to create a lot of variants.

I see that it's doing backoff when I look at the attempts in a given job. But I think it's treating each product job separately.

What I'm wanting to happen is for the whole queue to pause when it's backing off. I think it's doing backoff on the individual product jobs but it isn't pausing the queue as a whole.

I'm enqueueing 50 of these every 5 minutes. When it starts hitting the errors, it doesn't pause the queue.

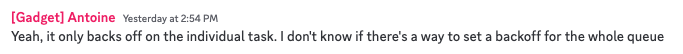

Yeah, it only backs off on the individual task. I don't know if there's a way to set a backoff for the whole queue

That seems weird. Let's say you have 1k jobs enqueued.

If you're hitting api limits (forget this particular one let's just say you're hitting the normal rate limit errors) - then is it going to run 1k of those in a row even though each one is being rate limited?

Shouldn't it pause the whole queue if it's being rate limited?
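
For illustration, here is a minimal sketch of the queue-wide pause being asked about. Everything in it is hypothetical: `QueueGate`, `countRejectedCalls`, and the simulated API are made-up names, not Gadget or Shopify features. The idea is that the first job to be rate limited records a shared pause, and every later job waits for that pause to end before calling the API.

```typescript
// Hypothetical sketch: none of these names are Gadget or Shopify APIs.
class QueueGate {
  private pausedUntil = 0; // time (ms) before which no job should call the API

  // Record a queue-wide pause, e.g. taken from a rate-limit response.
  pauseFor(now: number, ms: number): void {
    this.pausedUntil = Math.max(this.pausedUntil, now + ms);
  }

  // How long a job should wait before calling the API (0 means go ahead).
  msUntilOpen(now: number): number {
    return Math.max(0, this.pausedUntil - now);
  }
}

// Simulate jobs started every `spacingMs` against an API that rejects every
// call made before `limitedUntil`. Returns how many calls were rejected.
function countRejectedCalls(
  jobCount: number,
  spacingMs: number,
  limitedUntil: number,
  gate?: QueueGate,
  retryAfterMs: number = 0
): number {
  let rejected = 0;
  for (let i = 0; i < jobCount; i++) {
    let now = i * spacingMs;
    if (gate) {
      now += gate.msUntilOpen(now); // the job waits until the shared gate opens
    }
    if (now < limitedUntil) {
      rejected++;
      if (gate) {
        gate.pauseFor(now, retryAfterMs); // one failure pauses every later job
      }
    }
  }
  return rejected;
}

// 1,000 jobs, 10 ms apart, API limited for the first 5 seconds.
console.log(countRejectedCalls(1000, 10, 5000)); // 500 rejected calls
console.log(countRejectedCalls(1000, 10, 5000, new QueueGate(), 5000)); // 1 rejected call
```

With per-job backoff only, every job that starts during the limited window makes its own failing call. With the shared gate, only the first job fails and the rest wait.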

I'll talk to the team about adding that to the feature. For the time being, I think you should look at using bulkOperations. I don't know if you'll get around the variant creation limit, but it might help you lower the number of errors.

So if you enqueue 100k jobs, it’s going to do backoff individually, so you hit a rate error and it’s going to go ahead and process 100k of those jobs and just keep hitting 100k rate limit errors and bill you for that usage?

There's no real way for us to know that the errors are because of rate limits. Therefore, other tasks in the queue could technically be successful. It's on a case-by-case basis, but in your case a queue-level backoff would be helpful.

Well, the canonical use case for backoff would be API rate limits, which would also apply on a queue level?

We're talking internally. The solution I gave you above, to use bulkOperations (Shopify) and to add records to the db as a "what's next", is the best we can offer at the moment. Either way, I don't think that rate limits would fix this issue, as the message from Shopify is a dead stop.

What I'm describing: https://en.wikipedia.org/wiki/Dead_letter_queue

Dead letter queue

In message queueing a dead letter queue (DLQ) is a service implementation to store messages that the messaging system cannot or should not deliver. Although implementation-specific, messages can be routed to the DLQ for the following reasons:

The message is sent to a queue that does not exist.

The maximum queue length is exceeded.

The message e...

Thanks, I appreciate you raising it internally. For now I've reduced the run frequency to avoid hitting the 1k/day variant creation limit. But I'm more concerned generally about rate limit handling if that works the same way as all error handling.
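
As a rough sketch of the "split the work into smaller chunks" and "what's next" suggestions above: keep the pending products in a list (for example, rows in the db) and only enqueue as many as fit in the remaining daily budget. The names `PendingProduct` and `takeBatchWithinBudget` are made up for this example, and the 1,000/day figure is simply the limit mentioned in this thread, not a value checked against Shopify's documentation.

```typescript
// Hypothetical sketch: these types and functions are not part of Gadget or Shopify.
interface PendingProduct {
  id: string;
  variantCount: number; // variants this product would create
}

interface BatchPlan {
  batch: PendingProduct[];    // safe to enqueue today
  deferred: PendingProduct[]; // stays in the "what's next" list for a later run
}

// Limit as quoted in this thread (assumption, not verified).
const DAILY_VARIANT_LIMIT = 1000;

// Greedily pick products whose variants still fit in today's remaining budget.
function takeBatchWithinBudget(
  pending: PendingProduct[],
  createdToday: number
): BatchPlan {
  let remaining = Math.max(0, DAILY_VARIANT_LIMIT - createdToday);
  const batch: PendingProduct[] = [];
  const deferred: PendingProduct[] = [];
  for (const product of pending) {
    if (product.variantCount <= remaining) {
      batch.push(product);
      remaining -= product.variantCount;
    } else {
      deferred.push(product);
    }
  }
  return { batch, deferred };
}

const plan = takeBatchWithinBudget(
  [
    { id: "a", variantCount: 600 },
    { id: "b", variantCount: 500 },
    { id: "c", variantCount: 400 },
  ],
  0
);
console.log(plan.batch.map((p) => p.id));    // ["a", "c"]
console.log(plan.deferred.map((p) => p.id)); // ["b"]
```

Jobs that would exceed the limit are never enqueued, so they cannot fail and retry against it.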

@kalenjordan did you take a look at: https://docs.gadget.dev/guides/plugins/shopify/building-shopify-apps#managing-shopify-api-rate-limits

you might want to try setting

connections.shopify.maxRetries = 1 in the background action

shopify-api-node has an internal retry before it throws an error and if that exponential backoff kicks in then your job is processing the whole time while you wait for the eventual final failure

we set 2 as the default (although if you are on an older app it might be 6)
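
To make the point about in-process retries concrete, here is a small back-of-the-envelope sketch of how long a single job could sit waiting while the client retries internally. The delay schedule (a 1 second base that doubles each time) is purely an assumption for illustration; the actual delays used by shopify-api-node are not stated in this thread, and `totalBackoffMs` is a made-up helper.

```typescript
// Hypothetical sketch. Assumed schedule: wait baseMs, then 2*baseMs, then 4*baseMs, ...
// Returns the total time spent waiting across `maxRetries` retries.
function totalBackoffMs(maxRetries: number, baseMs: number): number {
  let total = 0;
  for (let attempt = 0; attempt < maxRetries; attempt++) {
    total += baseMs * Math.pow(2, attempt);
  }
  return total;
}

console.log(totalBackoffMs(1, 1000)); // 1000  (1 retry)
console.log(totalBackoffMs(2, 1000)); // 3000  (2 retries, the default mentioned above)
console.log(totalBackoffMs(6, 1000)); // 63000 (6 retries, the older-app value mentioned above)
```

Under these assumed delays, a job that is going to fail anyway stays running far longer with 6 retries than with 1, which is why lowering the retry count reduces the time each failing job spends processing.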

Thanks @Aurélien (quasar.work)! Am I correct in understanding that this is not true in the case of shopify rate limit failures? The whole queue does get paused right?

I must be articulating this horribly 😅

If you enqueue 100k jobs and the first one hits a Shopify rate limit with a Retry-After header, is the queue going to continue processing the remaining 99,999 jobs, or is the queue going to pause until the retry-after time?

I understand what you're saying about there not being programmatic access to pause the queue which is what I'd need to handle my variant creation limit case, but I'm also interested in better understanding regular rate limit retries which is why I'm asking.

aaah ok that's kinda surprising.

yeah

I recall Mo giving me a solution to dealing with a surge of webhooks I received on my flow extension app and based on this I don't think that solution would have helped. I didn't end up implementing it at the time. And I can't seem to find it in discord - I can't remember where he sent it to me - might have been the old support channel.

But the gist of it was that putting the webhook processing into a background job would more gracefully handle rate limits, but that doesn't seem to be the case.

😆

Right I guess at least queueing them up would have increased the time in between the initial api calls which would have helped.
