Endpoint 20 MB limitation

https://docs.runpod.io/serverless/endpoints/operations - What is the reason for the 10 MB and 20 MB payload limits on the "/run" and "/runsync" endpoints, respectively? Is there any way to increase them?

Endpoint operations | RunPod Documentation

Learn how to effectively manage RunPod Serverless jobs throughout their lifecycle, from submission to completion, using asynchronous and synchronous endpoints, status tracking, cancellation, and streaming capabilities.
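The thread doesn't explain why RunPod sets these limits, but a cheap client-side guard is to measure the encoded request body before submitting it. Below is a minimal sketch, assuming the limit applies to the JSON body `{"input": ...}` that is POSTed. It conservatively treats "MB" as 1,000,000 bytes; the docs may mean MiB. The names `LIMITS`, `payload_size`, and `fits` are hypothetical helpers, not part of the RunPod SDK.

```python
import json

# Limits as quoted from the RunPod "Endpoint operations" page.
# Assumption: measured on the JSON request body, and "MB" taken as
# 1,000,000 bytes (the stricter reading) to stay on the safe side.
LIMITS = {
    "/run": 10 * 1_000_000,
    "/runsync": 20 * 1_000_000,
}


def payload_size(job_input: dict) -> int:
    """Bytes of the compact JSON body {"input": ...} that would be sent."""
    body = json.dumps({"input": job_input}, separators=(",", ":"))
    return len(body.encode("utf-8"))


def fits(job_input: dict, route: str) -> bool:
    """True if the payload is within the (assumed) limit for `route`."""
    return payload_size(job_input) <= LIMITS[route]
```

Keep in mind that binary data sent as base64 grows by a factor of 4/3, so an 8 MB image becomes roughly 10.7 MB once encoded and no longer fits "/run". Anything that fails both checks has to go through object storage, as suggested in the replies below.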

9 Replies

One way around it is to use S3 for large data.

Can you send a link?

There is no link for that.

You can check the S3 SDK docs, though.

It should be something like this:

Give the client a presigned S3 upload URL. The client uploads to that URL, and then you fetch the object from S3 to process it.
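Here is a minimal sketch of the presigned-upload step. It uses only the standard library so the signing steps stay visible, following AWS Signature Version 4 query-string signing with `UNSIGNED-PAYLOAD`. The function name `presign_s3_url` and the bucket host, object key, and credentials in the tests are hypothetical. In a real worker, boto3's `generate_presigned_url` does the same job and is the safer choice.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def presign_s3_url(host, path, region, access_key, secret_key,
                   expires=3600, method="PUT", now=None):
    """Sketch of a SigV4 query-string presigned URL for an S3-style store.

    host and path are used as given, e.g. a virtual-hosted bucket host
    with "/key", or a custom endpoint with a path-style "/bucket/key".
    """
    if not 1 <= expires <= 604800:  # SigV4 presigned URLs last at most 7 days
        raise ValueError("expires must be between 1 and 604800 seconds")
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    scope = f"{date_stamp}/{region}/s3/aws4_request"

    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    # Canonical query string: sorted keys, keys and values URI-encoded ("/" too).
    canonical_query = "&".join(
        f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in sorted(params.items())
    )
    canonical_uri = quote(path, safe="/")
    canonical_request = "\n".join([
        method,
        canonical_uri,
        canonical_query,
        f"host:{host}\n",    # canonical headers block ends with its own newline
        "host",              # signed headers
        "UNSIGNED-PAYLOAD",  # body hash is unknown when presigning
    ])
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])
    # Derive the signing key: secret -> date -> region -> service -> terminator.
    key = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        key = _hmac(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"
```

The client sends the raw bytes with an HTTP PUT to the returned URL before it expires. The job input then carries only the object key, which is well under the "/run" limit. On the worker side, a URL generated the same way with `method="GET"` lets it download the object. Because `host` and `path` are parameters, the sketch can also point at other S3-compatible endpoints, assuming they accept SigV4 presigned URLs.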

Well, thank you, I will try it.

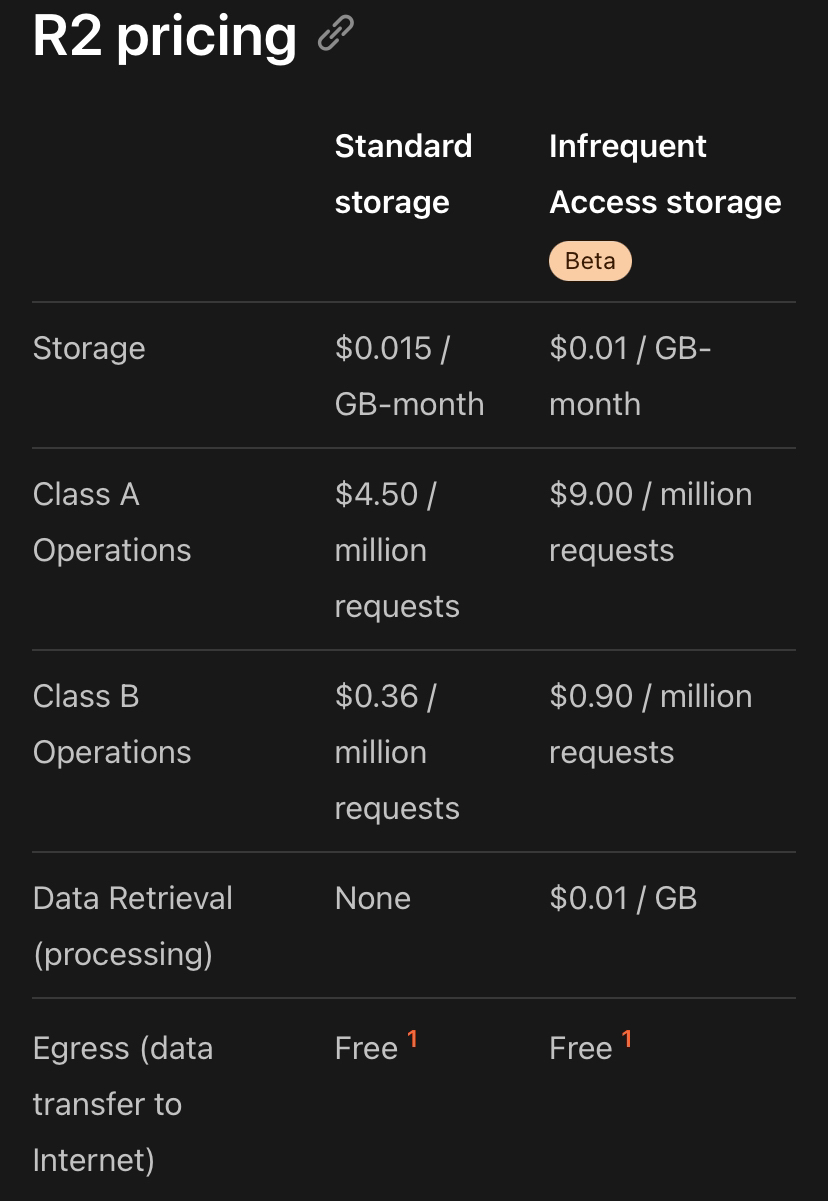

I'd recommend Cloudflare R2 because it has zero egress fees.

https://rclone.org/ is an amazing tool for managing most cloud storage solutions. It can even generate those presigned URLs if you want to test things out quickly.

Rclone

Rclone syncs your files to cloud storage: Google Drive, S3, Swift, Dropbox, Google Cloud Storage, Azure, Box and many more.

Meanwhile, AWS charges eye-watering egress fees.

It's free for up to 1 TB through CloudFront, though.