STIX modeling

Question: How to Model Controlled Language Lists, Vocab or Enum in TypeQL

In STIX and other cybersecurity languages, Controlled Vocabularies are used to limit the allowable choices for a particular field. There are two types:

- Enum: A closed list of words that cannot be added to, e.g. Opinion, which is a scale

- Vocab: An open list of words that can be added to, e.g. Hash Algorithms, which can obviously grow

At the moment we control the choices through the UI, which pulls the lists from GitHub, but is there a way to build this control into TypeQL? We already have all of the Enum and Vocab lists for each STIX dialect available; how could we utilise these in TypeDB?

The modelling scenario is that an entity or relation will own an attribute, with a fixed, standards-based name, e.g. hash-type, which must be of a class Vocab, with a subclass of hash-algorithm-ov, and this sub-class has a pointer to the right list of words. One must load all of the Vocab and Enum lists, and have functions to then constrain the choices of values for particular attributes.

At the user interface level, we hold both the word, and a description for each word (e.g. tooltip on mouseover), but this seems less useful in the database, so the lists could just be simple lists of words, without an accompanying description. The lists can be unordered since their only purpose is to validate values in the database.
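To make the Enum/Vocab distinction concrete, here is a minimal Python sketch of the validation the lists are meant to support. It is only an illustration of the requirement, not TypeQL: the `ControlledList` class and its `check` method are hypothetical names, and the hash list is abbreviated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlledList:
    """A named word list; `closed` is True for an Enum, False for a Vocab."""
    name: str
    values: frozenset
    closed: bool

    def check(self, value: str) -> str:
        # Known words always pass.
        if value in self.values:
            return "ok"
        # Enums reject unknown words; open Vocabs only flag them.
        return "reject" if self.closed else "warn"


# Hypothetical in-memory copies of two lists (the hash list is abbreviated).
OPINION_ENUM = ControlledList(
    "opinion-enum",
    frozenset({"strongly-disagree", "disagree", "neutral", "agree", "strongly-agree"}),
    closed=True,
)
HASH_ALGORITHM_OV = ControlledList(
    "hash-algorithm-ov",
    frozenset({"MD5", "SHA-1", "SHA-256", "SHA-512"}),
    closed=False,
)

print(OPINION_ENUM.check("agree"))        # ok
print(OPINION_ENUM.check("maybe"))        # reject
print(HASH_ALGORITHM_OV.check("BLAKE3"))  # warn
```

Since only membership is tested, the lists can stay unordered and description-free, as suggested above.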

How should this best be modelled? How does the constraint on the word lists get applied? I assume we can't restrict the lists from being updated, but we might be able to through IAM? Assume I have a free scenario, plus a cloud scenario. How can I accomplish this in TypeQL?

GitHub: os-threat/stix2-dialect-definitions, stix/enum/opinion-enum.json (main branch)


We can continue here

just starting to get back to STIX!

I'm just using the TypeDB "values" feature for both:

we don't have a way to query annotations right now but once we do you should be able to query for the annotations of the attribute type -> use that in your UI

Granular markings are the trickiest part of translating STIX, I think:

1) we need to be able to "annotate" other objects in the database. However, those objects are composite of entities/relations/attributes in TypeDB!

2) this is actually the only part of STIX i can see that requires ordering to be preserved, such as

labels.[0] requires you to record which label was the 0th one

3) this probably is most easily done with lists!
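To show why ordering matters here, a minimal Python sketch of resolving a granular-marking selector against a STIX object held as a plain dict. The helper name `resolve_selector` and the sample object are assumptions for illustration; the selector syntax (`labels.[0]`, `external_references.[0].source_name`) is the one used in this thread.

```python
from typing import Any


def resolve_selector(obj: dict, selector: str) -> Any:
    """Walk a selector such as 'external_references.[0].source_name'."""
    current: Any = obj
    for step in selector.split("."):
        if step.startswith("[") and step.endswith("]"):
            # '[0]' is a list index, so list order must have been preserved.
            current = current[int(step[1:-1])]
        else:
            # Anything else is a property name.
            current = current[step]
    return current


# Hypothetical, heavily trimmed STIX object.
attack_pattern = {
    "type": "attack-pattern",
    "labels": ["first-label", "second-label"],
    "external_references": [
        {"source_name": "capec", "external_id": "CAPEC-163"},
    ],
}

print(resolve_selector(attack_pattern, "labels.[0]"))
print(resolve_selector(attack_pattern, "external_references.[0].source_name"))
```

If the labels came back in a different order, `labels.[0]` would silently point at a different value, which is the ordering problem described in point 2.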

---

There are two/three ways to do orderings in TypeDB:

a) wait for ordered ownerships/relates in TypeDB

b) implement ordering via links or indexes, which might require using relations instead of 'owns' for properties that are used in lists in the spec

---

Once you've picked an ordering mechanism, then how do you do granular markings?

a) the "native" typedb implementation would make a granular_marking relation that points to the object, the marking, and the annotated property/embedded object and all the steps in between (we need to be able to reconstruct the full path external_references.[0].source_name from the granular marking!)

b) the easier and more correct approach: just annotate the object with the relation, and store the annotation selector as a string, without actually translating it to a full TypeDB relation connecting everything together ... this way you don't actually need your granular marking to relate/own every single possible thing either.

ok, that looks promising. Can I then use a sub-type, so

if I can do that, then it will look great

Hmm, I am not sure about how this granular marking is going to work. I have previously used unordered lists in Grakn and it worked fine. Yes, sometimes the markings came back in different orders, but as long as they still marked the correct bits, it was ok

I would be happy for a solution that enables me to do it, without having every single attribute explicitly named as being owned by the relation. How can this work?

yes can do

hmm yes we can actually do the unordered version the same as in 2.x, but yeah you'll have to write out all 200 something properties

that's probably what i'll do tbh

there has to be another way. If I wait till lists arrive, is there something else that will change this?? It's a maintenance hassle. Each time a new attribute is added, this relation must get reworked

is there some other plan for the future for this?

because i have more than 200 attributes unfortunately

lists will help with maintaining the ordering, not with the pointing at the attribute

the real solution is owns*

when is that happening?

it's not on the roadmap yet cc @christoph.dorn

shit

we will need a way to do this for sure

in 2.x did you have all attributes

sub a common attribute that could play something like owned-attribute:attribute, which all attributes inherited?

yes

what about the use case where iwant to record read/write/update/delete for a single attribute?

you should be able to do exactly the same as in 2.x because you can replace owning attributes explicitly with your own attribute ownership relation

so, the long and short is, in the meantime we have to use a really ugly workaround, with every attribute on a relation, but at some stage there is a more beautiful approach coming?

ah yeah haha interesting, because role type playing is inherited, that's basically the inverse of owns*

can't i use a super-attribute?? So if all stix string attributes are owned by a super type, can i just use that on the relation?

so if we had

owns* you could easily do:

then you can do all your change tracking over that relation too

yep, that's the one

this is pretty much identical to the role playing attribute in 2.x i believe

that would essentially provide the same capability as the old attribute playing a role

but we can't do that at the moment, true? I can't use a super-type on the relation???

no, we don't have owns*, you'd have to declare every attribute ownership there

ok, we need that on the github issue list then. Let me know, and i will give it some love

in your 2.x model did you directly own the attribute, and also have it play the role? or just the role?

no the supertype owned the role

it was abstract

sure but if you have an

entity attack-pattern <somehow connected to attribute> alias

was that <somehow connected to attribute> always a relation or a simple owns

it was always a relation, check here how the attribute super type was defined

https://github.com/os-threat/Stix-ORM/blob/main/stixorm/module/definitions/stix21/schema/cti-schema-v2.tql#L1644


all the sub types just inherit that role

and it was that simple in 2.x

right i see

granular marking defined here

https://github.com/os-threat/Stix-ORM/blob/main/stixorm/module/definitions/stix21/schema/cti-schema-v2.tql#L1357


and it was super elegant

so really what you want is:

yeah pretty much

absolutely !!!! This would be a game changer for those tricky things where you need to relate to an attribute, which does happen for sure

Is that a tricky feature to build?

easier than lists or structs!

but it's not prioritised atm, i can have a think about it

also it's a loose spec, we haven't thought too far about it

nice, let me know when you get a description up, and i will comment on it

Thanks @Joshua and @christoph.dorn , cheers!!!!

it's actually this one - https://github.com/typedb/typeql/issues/325

Introduce syntax for interface covariance · Issue #325

which you've seen 😄

I just gave the issue some love

By the way, @christoph.dorn and @Joshua check out my attribute list for my combined schema attached. Not quite 1,000, but still a respectable number for our current starting point.

In my view my granular marking relation definition will be a world-record for TypeQL, with the most attributes a relation has ever owned. Check that list out.

It will make a funny meme 😉

By the time I add in all of the Vocab and Enum definitions, and this new relationship whopper, our schema is going to swell from the current 4,500 lines to 6,000 lines plus, and that's not including Fetch statements, which could easily be another 2,000, and which also need to be stored in the code base.

So the total should be about 8,000 lines, although admittedly the Fetch statements have probably been underestimated. STIX loves lots of optional components on objects, and so the Fetch statement will generally be well over 100 lines.

But anyway.

Whew

We found our ORMv1 very onerous: adding new objects, or making any changes, took a lot of cross-checking effort

It's basically the learnings from our design of ORMv1, where hand-crafting the mapping data between Python classes and TypeQL, on a one-by-one basis, was hard work and took quite a few weeks to get right for each new dialect supported. Then, in our low-code platform, we were manually building forms in the UI to support it, and APIs to get the data. It's months of work to add new objects or make changes

Our plan is to transpile from a template, and a common core of ORMv1 content and template for each object, and then into each output format (TypeQL, Fetch, Pydantic, form etc.) for every object, accumulate them into lists and then write them to files. We are definitely working towards a point where you could build your own objects through a user interface, which would be sick

do you use the JSON schemas from https://github.com/oasis-open/cti-stix2-json-schemas/blob/master/schemas/ at all @modeller ?


No, JSON schema has insufficient info, but the Python class file contains plenty of detail. There are 21 types of properties in Stix, and so all objects are just made from those 21 possible components, with different names and orders. You then just compose a TypeQL structure for every type of property.

So we use the Python class as a root, which can be seen to contain all of the property data here. But this is useless outside of Python, so we parse it into a template format, which is what the middleware blocks are expecting.

This template splits each object into 5 sections, which includes all of the extensions and sub objects behaviour. This template is mostly sufficient to transpile any single object into TypeQL, but we miss the relation role names, so if you want non-default role names, they must be specified.

Finally, in the case of SRO's, one also needs to know which objects they can be matched against, for use in the UI, and so this data is shown here. Foreign keys require similar matching data, as to what valid objects may be matched. We anticipate capturing this data interactively from the user in the "make your own object" user interface.

Because we have existing handcrafted mapping data for both the back end transpile, and for the front-end behaviors (e.g. RMB Menu, Vocab drop downs lists etc.), by replaying these as "form imports" we can create a very robust object-by-object auto-generation process for the TypeQL schema and Fetch statements, based on a common core. It seems straightforward to also generate descriptions for Pydantic, Forms etc.

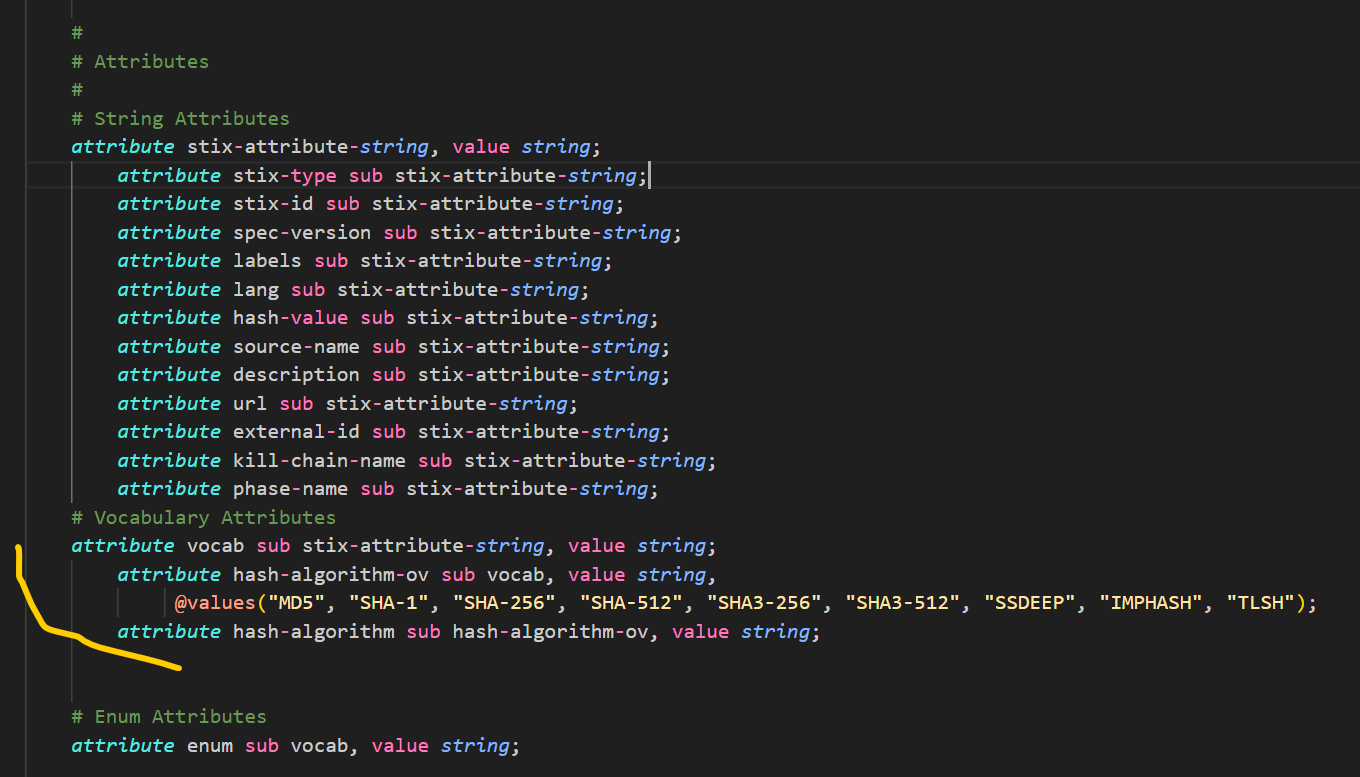

Woo Hoo. See Below the World's first Controlled Vocabulary in TypeDB

Quick explanation, the schema fragment below is from my core schema, that i use for generation. Basically, to reduce effort, it is convenient to start from a core of parent objects, with common attributes and relations.

I keep the names of all the parent objects, plus a registry of all names used (except for roles, since they are scoped by the relation name), and so these attribute definitions are fake. Attributes will all be generated automatically at the end of the schema writing process.

However, the core does include a single Vocab example that I can use as a template for my generator. So, explanation ended, let's check out how TypeQL models the controlled hashing-algorithm-ov vocabulary.

Note that the modelling requirements are:

1. Import a list of valid values and assign them to the official Vocab name

2. Create an attribute that is a sub-class of the Vocab attribute

TypeQL performs flawlessly, with an elegant, terse solution. Nice work!!

STIX Version 2.1

This document describes the STIX language for expressing cyber threat and observable information and defines its concepts and overall structure.

Wait till you see the Granular Markings TypeQL schema, and the Fetch statements that include the Granular Markings.

I plan a test-run for development of 4 complete objects, 1 * SCO, 2 * SDO and 1 * SRO, no Meta Objects, so it will be interesting to examine the results

Question: Can we convert/map any valid Stix Pattern to Relations? What might the solution look like?

Hey @Joshua, STIX Patterns are more easily understood by looking at the actual syntax, and one can learn a lot through the Quick Reference Chart, although the rest of the detail is contained in the Standard.

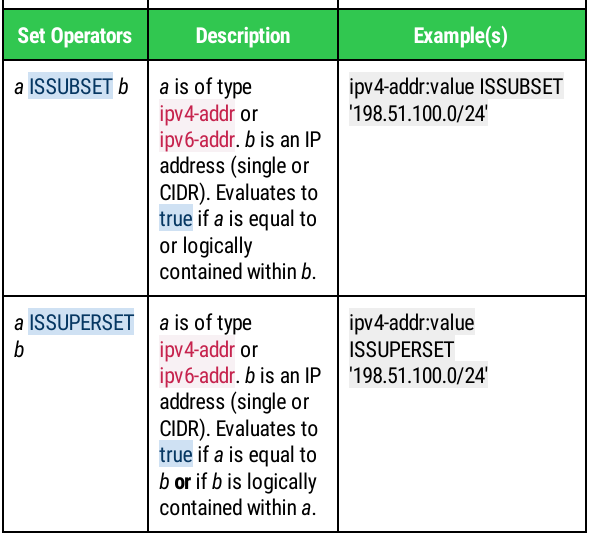

It would be a significant advantage if we could natively represent this logic; however, I am not sure TypeQL can handle all of the set variations that STIX Patterns require.

Ignoring the transpiling bit, and merely looking at the modelling of those linkages in the Quick Reference Guide.

Can TypeQL model any valid Stix Pattern?


or do we need to leave it as a string?

It could be huge if TypeQL can map Stix Patterns natively, especially if retrievals can be made from some TypeQL version of a Stix Pattern. There obviously has to be a transpile process, but that seems easy, what about the modelling?

Perhaps it's as simple as producing a Pattern <=> Match statement transpiler, and storing the STIX Pattern field on an Indicator SDO as a string?

However, if the data matched by the pattern was in TypeDB, and the Indicator was being created in TypeQL, is there a way to materialise the Stix Pattern, into the schema, or as a TypeQL function, or is the Pattern still best held as a string inside TypeDB and externally transpiled into TypeQL to use as a match pipeline??

Ideally, how should TypeQL interact with Stix Patterns?

and can TypeQL do everything that Stix Patterns does? If the answer is yes, then we are in a good place

It's still a cool question

im honestly good without trying to native-ize patterning 😄 but you can probably embed it natively now

Hmm, ok, as a minimum, I will need to transpile it to TypeQL, so a STIX Pattern can be used to return STIX records from a TypeDB database. I won't get into it until after the Generator.

I know you're super busy, but can you have a quick squiz at the Quick Reference Card, and let me know whether TypeQL can execute all of those queries?? Like the two SET ones on page 1, looking within IP addresses. I wonder if those could be done with functions???

The most important thing when doing the transpile is to know whether any operations cannot be transpiled, whether there are any search options TypeQL could not execute???

Can you advise whether i have the all-clear?

Does not have to be done quickly, as still working on the Generator atm, just some time this month if you can, thanks

I'm figuring that these SUBSET queries need structs, since looking inside IP addresses requires string ops, which can't be done in your functions

once we use structs then this can be done through integer comparison, which is quite doable in a function
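As an illustration of the "integer comparison" idea, here is a small Python sketch using the standard `ipaddress` module. It is not TypeQL and says nothing about how structs will work; the function names `cidr_to_range`, `is_subset` and `is_superset` are hypothetical. Each address or CIDR block is reduced to a pair of integers, after which the SUBSET/SUPERSET test is two comparisons.

```python
import ipaddress
from typing import Tuple


def cidr_to_range(cidr: str) -> Tuple[int, int]:
    """Return (first, last) address of an IP or CIDR block as integers."""
    net = ipaddress.ip_network(cidr, strict=False)
    return int(net.network_address), int(net.broadcast_address)


def is_subset(inner: str, outer: str) -> bool:
    """True when every address in `inner` also lies inside `outer`."""
    inner_lo, inner_hi = cidr_to_range(inner)
    outer_lo, outer_hi = cidr_to_range(outer)
    return outer_lo <= inner_lo and inner_hi <= outer_hi


def is_superset(outer: str, inner: str) -> bool:
    """True when `outer` fully contains `inner`."""
    return is_subset(inner, outer)


print(is_subset("198.51.100.12", "198.51.100.0/24"))       # True
print(is_subset("198.51.100.0/24", "198.51.100.0/27"))     # False
print(is_superset("198.51.100.0/24", "198.51.100.0/27"))   # True
```

If the two integers were stored alongside the address (for example as struct fields), the same test would need no string slicing at query time.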

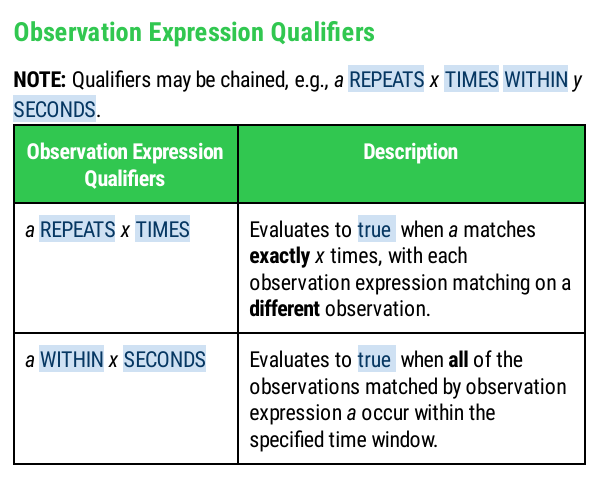

The other main issue in my mind is these qualifiers to matches

i feel like this is expressible with a function, with some complexity:

I gotta say this is kinda oof but also could be made to work haha cc @krishnan

Would this work?

do we have check?

i forgot about that one

nice

that also solves there not being any observations

yah i dont think we have string slicing + parsing into int and all that done atm

and structs to store IPs yet either

Hmm, that looks cool, I wasn't sure you could do the date maths

Yeah, I reckon that the STIX Patterns will work much better once structs are here, as we can do the:

- IP SUBSET/SUPERSET stuff real easily then

- duration maths real easy, although you do show it working above

I really like how flexible your language has become, though guys. Sure it has meant a lot of new rules and conventions for us to consider, but it has tightened up a lot, which is great work, well done.

I was looking at the *links* term the other day, and realised that *plays* was the last word in common between the schema and query languages, and that *links* seemed a deliberate addition to clean that aspect up.

I thought that was particularly nice.

I was also interested in the *@cascade* annotation, which holds the promise of making delete significantly simpler.

So even though my application is hampered until structs are released, I don't want to seem ungrateful, cos I think you lads are doing a tremendous job!!

Nice work. Keep going. Thanks for the assist with the cybersecurity modelling!!

Question: What resolution is this date maths? Can the duration work with milliseconds, or microseconds?

Assuming you had a server-side function to switch a duration=300 MSEC STIX Pattern duration to a query with the TypeQL duration standard of duration=0.3 seconds or duration=PT0.3S.
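The conversion itself is simple arithmetic. A minimal Python sketch, assuming the input is an integer number of milliseconds and the output is an ISO 8601 seconds-only duration string; `msec_to_iso8601` is a hypothetical helper name, and `Decimal` is used so that 300 ms comes out as exactly 0.3 rather than a binary float approximation.

```python
from decimal import Decimal


def msec_to_iso8601(msec: int) -> str:
    """Convert whole milliseconds to an ISO 8601 duration such as 'PT0.3S'."""
    seconds = Decimal(msec) / Decimal(1000)
    # normalize() drops trailing zeros; the 'f' format avoids exponent notation.
    return "PT" + format(seconds.normalize(), "f") + "S"


print(msec_to_iso8601(300))    # PT0.3S
print(msec_to_iso8601(1500))   # PT1.5S
print(msec_to_iso8601(10000))  # PT10S
```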

How fast is that likely to be?? Could you search through nanoseconds? What is the indexing on this search, is there any? Would it require searching all timestamps?

Structs and Lists will unleash the beast!!! Things no other database can do. Bring on G-Time, I can't wait frankly

I guess it's not implemented but it's pretty easy to do when we decide what we want 🙈

You may as well wait for structs, then you can make time and date maths a market-leading capability (i.e. super fast indexing and date maths)

At this level we want an integer at each power of three, so subseconds:

- milliseconds

- microseconds

- nanoseconds

this would mean you could index any nanosecond in a ten year range with a maximum number of indexes < 5,000, instead of 10^21 like all other databases

making TypeDB a time-series database, based on the G-time model

sick

What's the searching involved here?

Can you write a really simple typeql query to demonstrate?

lets say i had a hundred million timestamps, and i want to find which ones are within +/- 10 milliseconds of a time, How much effort does it take to answer that question?

In the naive datetime stamp, one must search 100 million date time stamps.

In the G-Time model, one makes time a composite, based on integers, so:

- day = 1-> 31

- month = 1-> 12

- year = 1-> 10

- hour = 1->12

- min = 1->60

- secs =1->60

- msecs = 1->1,000

- usecs = 1-> 1,000

- nsecs = 1-> 1,000

In total, 1,185 unique values

Since TypeDB is normalised, and all uses of an attribute's value are simply linked to the original. Searching the hundred million records amounts to searching through 1,185 values, and then relating back to the datetime instances.
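A minimal Python sketch of the decomposition described above, assuming a timestamp is split into the nine integer components listed. The `decompose` helper and the `COMPONENT_RANGES` table are hypothetical; Python's `datetime` only carries microseconds, so nanoseconds are passed separately. Note that summing the ranges exactly as listed gives 3,185 distinct component values rather than 1,185 (the three sub-second groups alone contribute 3,000), which is still under the 5,000 bound mentioned earlier.

```python
from datetime import datetime

# Size of each component's value range, copied from the list above.
COMPONENT_RANGES = {
    "day": 31, "month": 12, "year": 10, "hour": 12, "min": 60,
    "secs": 60, "msecs": 1000, "usecs": 1000, "nsecs": 1000,
}


def decompose(ts: datetime, nanos: int = 0) -> dict:
    """Split a timestamp into integer components (nanoseconds given separately)."""
    return {
        "year": ts.year, "month": ts.month, "day": ts.day,
        "hour": ts.hour, "min": ts.minute, "secs": ts.second,
        "msecs": ts.microsecond // 1000,   # leading three sub-second digits
        "usecs": ts.microsecond % 1000,    # next three digits
        "nsecs": nanos,                    # final three digits
    }


parts = decompose(datetime(2024, 5, 17, 13, 45, 30, 123456), nanos=789)
print(parts["msecs"], parts["usecs"], parts["nsecs"])  # 123 456 789

# Number of distinct component values that would need indexing.
total_index_values = sum(COMPONENT_RANGES.values())
print(total_index_values)  # 3185
```

The number of distinct *instants* is the product of the ranges, not the sum, which is the point raised later in the thread about joining the components back together.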

This is how time series databases actually work, they use index segmentation, although the model I have presented is updated, splitting the seconds into 3 separate groups of 1,000, will be very useful to military/scientific/commercial applications.

In the early days, Haikal told me that no graph database can be fast at searching for times. But once structs arrive and you guys spend some effort on the G-Time model, TypeDB can dominate other time databases.

Time indexed databases are a whole market segment that TypeQL can dominate

"In the naive datetime stamp, one must search 100 million date time stamps."

I don't understand why this is true if they're all stored sorted

True, but you still need to sort through many to find your starting record

the struct approach gives you ultra-fast, high-fidelity indexing across any time in 10 years

its the same as GIS, or units, they're better to store as structs because one can do more with it

Yes, but log_2(10^8) is still small.
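To put a number on that: with sorted timestamps, a range lookup is two binary searches, each taking about log_2(10^8) ≈ 27 steps. A small Python sketch using the standard `bisect` module; the `within` helper and the sample data are hypothetical, and timestamps are plain integer milliseconds for simplicity.

```python
import math
from bisect import bisect_left, bisect_right


def within(sorted_ts, center, tolerance):
    """Return all timestamps in [center - tolerance, center + tolerance]."""
    lo = bisect_left(sorted_ts, center - tolerance)    # first index >= lower bound
    hi = bisect_right(sorted_ts, center + tolerance)   # first index > upper bound
    return sorted_ts[lo:hi]


# Hypothetical sorted timestamps, in milliseconds.
timestamps_ms = [990, 1004, 1011, 1021, 1500]
print(within(timestamps_ms, 1010, 10))   # [1004, 1011]

# Comparisons needed per binary search over a hundred million entries.
print(math.ceil(math.log2(10**8)))       # 27
```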

So with the G-Time we'd first zoom into the relevant year, then the relevant month and so on? I'll have to read up on it. I'm afraid I don't appreciate it yet. Does it work like a trie? I intuitively expect combining the results for queries that span multiple values to involve some effort.

no, I could do a single match of all of the index values at the same time, so only find the records that use those same index values; this also makes it easy to do date maths with durations.

my original model was attribute owning attribute, but that was idempotent, so structs are better

Medium

Modelling time within a strongly typed database

Using Attributes as Relation Roles — Advanced Use Case for TypeDB

I sort of see why this is the case, but I don't know if it'll beat naive date-times without having a custom index.

With structs as they're (partially) implemented now, you'd actually collect every event with a certain year before filtering them down to the ones in the required month and so on. Even with some execution magic, that feels like some 10^6 values.

We can revisit this once we have structs, but I don't think it's a huge step forward over the 1185 9.

I don't see why it's 1185 either. Yes, there are 1185 attributes, but there can still be 31 * 12 * 10 * ... * 1000 UTC instances, can't there? The 9 is just the height of the trie for accessing one unique point in time.

UTC type you've described in the article. At that point, we could enumerate a few queries where naive date-times fall flat and a composite approach wins.

I'm curious whether you considered modelling it as a trie

The UTC type seems flat, so you'd be "joining" large intermediate results.

But if you modelled it as a trie, you can avoid the 10^6 values you have to check and get back down to

Yes, but what if I want to match any time and duration across many instances, from financial time, custom weeks, milliseconds, or nanoseconds? If I access it through integer components, then I can find all affected items more quickly than naive searching.

The cost of my search can only be the cost of searching ~1.2*10^3, rather than however many records I have. If I have many records, or I want custom durations (e.g. financial time, shift-length, nanoseconds etc.), then I have far more flexibility with components.

Hmm, I like the idea of the trie, that would be super useful for a GIS struct, where the trie represents bounding boxes. The advantage of integers in a datetime struct, is that the maths is then straightforward. For GIS, you need functions to do the maths, so there is less advantage for an integer-based struct
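For reference, the trie idea discussed above can be sketched in a few lines of Python with nested dicts. This is only an assumption about what "modelling it as a trie" might look like, not how TypeDB stores anything: each level is keyed by one time component, and the `insert`, `collect` and `prefix_query` helpers are hypothetical.

```python
IDS = "_ids"  # reserved key holding record ids at a node


def insert(trie: dict, key: tuple, record_id: str) -> None:
    """Store record_id under a key such as (year, month, day, hour)."""
    node = trie
    for part in key:
        node = node.setdefault(part, {})
    node.setdefault(IDS, []).append(record_id)


def collect(node: dict) -> list:
    """Gather every record id at or below this node."""
    ids = list(node.get(IDS, []))
    for part, child in node.items():
        if part != IDS:
            ids.extend(collect(child))
    return ids


def prefix_query(trie: dict, prefix: tuple) -> list:
    """Return ids of all records whose key starts with `prefix`."""
    node = trie
    for part in prefix:
        if part not in node:
            return []
        node = node[part]
    return collect(node)


trie: dict = {}
insert(trie, (2024, 5, 17, 13), "event-a")
insert(trie, (2024, 5, 17, 14), "event-b")
insert(trie, (2024, 6, 1, 9), "event-c")

print(sorted(prefix_query(trie, (2024, 5))))   # ['event-a', 'event-b']
print(prefix_query(trie, (2023,)))             # []
```

Narrowing by year, then month, then day only ever visits the matching branch, which is the contrast with a flat composite where each component match produces a large intermediate result.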

Are these durations that we're searching for or points in time?

Both, the general case is where you have a time with a duration both forwards, and backwards, so find all records within 2 weeks of time X.

An easier case is where you are given a time and want to search just forward or backward (e.g. find all records in a specific financial week)

More sophisticated event records have a start time and an end time, some have 4 or more timelines involved, so the comparisons then scale

Are the intervals arbitrarily large or are they rarely longer than, say, a few weeks?

It depends on the app. If I was building an accounting system, then I would want everything based on shifts or financial weeks/quarters/halves. If I was building a cybersecurity system, then I am going to be more interested in milliseconds

😄

hey @Joshua I have managed to get my TypeQL v3.0 schema generator working nicely based on those templates I mentioned last time. Recall that the templates were based on a transpile of the Python object classes, and we use them to define our forms and, as a structure to carry form data to the apis.

Anyway, if you look at the attached you can see that it's based on a core schema, on which a schema is built for each of the sub-objects and the main object, for each SDO, SCO and SRO. In this example we process the File, Incident, Impact, Sighting and Relationship templates.

Following the object definitions there is then a listing of all of the types of relationships in the objects, and then finally the definition of the attributes.

You can see that cardinality is annotated, and the vocab and enum controlled vocabulary is successfully generated and linked to the objects.

It has some small errors in it, and I could improve it, but it is sufficient as a demo for the moment. In short, large-scale generation of Python classes, TQL schema, Fetch models and UI Forms can all be done for any type of STIX object, which is pretty interesting

Anyway, that is a pretty cool milestone imho, and only possible because of Pydantic