Heavy load on mariadb

Hello,

I came across a similar topic on the forum and I'm currently experiencing the same issue. (https://discourse.crowdsec.net/t/heavy-load-on-mariadb-multi-servers-environment/1738)

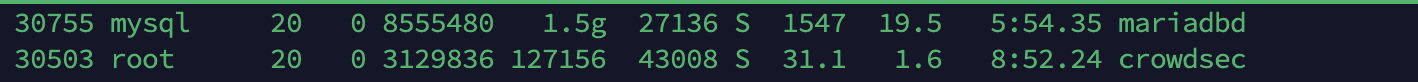

MariaDB is consuming all available CPU resources (I’ve tested with 8, then 12, and even 16 vCores), especially during SQL query execution. I also ran MySQLTuner, but the issue still persists.

Is there currently any known solution or recommended approach to optimize the configuration and reduce this high CPU usage?

Result of mysqltunner :

[!!] Slow queries: 9% (68K/736K)

[!!] Joins performed without indexes: 196741

Thanks in advance for your help!

CrowdSec

Heavy load on mariadb - multi servers environment

Hi all, I have 1x LAPI server with ~15x crowdsec servers. The mariadb server encounter a permanent heavy load due to mariadb process. With 4 vcpu and 12 GB RAM, the load is ~20 and the %cpu is 400%. Is it normal ? I suppose no of course 😔 I did not find tuning guide of mysql/mariadb from crowdsec (indexing some fields ?, etc …) Here is ...

57 Replies

Important Information

Thank you for getting in touch with your support request. To expedite a swift resolution, could you kindly provide the following information? Rest assured, we will respond promptly, and we greatly appreciate your patience. While you wait, please check the links below to see if this issue has been previously addressed. If you have managed to resolve it, please use run the command

/resolve or press the green resolve button below.Log Files

If you possess any log files that you believe could be beneficial, please include them at this time. By default, CrowdSec logs to /var/log/, where you will discover a corresponding log file for each component.

Guide Followed (CrowdSec Official)

If you have diligently followed one of our guides and hit a roadblock, please share the guide with us. This will help us assess if any adjustments are necessary to assist you further.

Screenshots

Please forward any screenshots depicting errors you encounter. Your visuals will provide us with a clear view of the issues you are facing.

© Created By WhyAydan for CrowdSec ❤️

How many bouncers / log processors do you have ?

Yes, I have several servers that go back to the main server

How many ?

Also, how many decisions/alerts do you have in the database ? (you can get the count with

cscli decisions list --all | wc -l and cscli alerts list --all | wc -l, or directly from the DB by running SELECT count(*) FROM alerts and SELECT count(*) from decisions, which will likely be a bit faster).ALERTS : 45866

DECISIONS : 62264

that seems reasonnable

Is the load constant or not ?

If it is constant, it could be because of the bouncers querying LAPI to get the decisions

If it spikes every ~2h or so, then it's when refreshing the blocklists content

If you could also try to log some of the slow queries so we know where to look, it would be useful

This seems to be very constant

what's the output of

cscli bouncers list ?Only my Python bouncer

what's the refresh interval of the bouncer ?

20 s from memory

hmmm

that's really weird then

you'll need to log some slow queries, a single bouncer querying every 20s should not put that much load on the DB

Exemple :

Interval between last API pulls :

2025-06-16T12:00:23Z

2025-06-16T12:00:57Z

That looks like either a call to

cscli alerts list --all or the periodic flush crowdsec performs in the database (which is 5k alerts/7 days by default).

Can you show me the content of /etc/crowdsec/config.yaml ?flush:

max_items: 251000

max_age: 7d 😅

Sometimes, I go over, so I increase.

even 250k alerts should be easily manageable with a single bouncer

how fast is the storage of the mysql server ?

that's the only thing that comes to mind

to confirm it's the flush, you can try to disable it by setting max_items and max_age to 0 and restart crowdsec, the load should go down

IDK

is it HDD or SSD ?

SSD

If I do it, won't it clear the database ?

-------- Performance Metrics -----------------------------------------------------------------------

[--] Up for: 1h 49m 31s (1M q [181.455 qps], 2K conn, TX: 3G, RX: 493M)

[--] Reads / Writes: 86% / 14%

[--] Binary logging is disabled

[--] Physical Memory : 7.8G

[--] Max MySQL memory : 3.8G

[--] Other process memory: 0B

[--] Total buffers: 801.0M global + 2.9M per thread (1000 max threads)

[--] Performance_schema Max memory usage: 149M

[--] Galera GCache Max memory usage: 0B

[OK] Maximum reached memory usage: 1.2G (15.83% of installed RAM)

[OK] Maximum possible memory usage: 3.8G (49.04% of installed RAM)

[OK] Overall possible memory usage with other process is compatible with memory available

[!!] Slow queries: 9% (111K/1M)

[OK] Highest usage of available connections: 10% (104/1000)

[OK] Aborted connections: 0.00% (0/2765)

[OK] Query cache is disabled by default due to mutex contention on multiprocessor machines.

[OK] Sorts requiring temporary tables: 0% (0 temp sorts / 10K sorts)

[!!] Joins performed without indexes: 322193

[OK] Temporary tables created on disk: 0% (862 on disk / 1M total)

[OK] Thread cache hit rate: 96% (107 created / 2K connections)

[OK] Table cache hit rate: 99% (3M hits / 3M requests)

[OK] table_definition_cache (400) is greater than number of tables (304)

[OK] Open file limit used: 0% (62/32K)

[OK] Table locks acquired immediately: 100% (1K immediate / 1K locks)

no, we use the 0 value to check if we should do the flush or not: https://github.com/crowdsecurity/crowdsec/blob/master/pkg/database/flush.go#L253

GitHub

crowdsec/pkg/database/flush.go at master · crowdsecurity/crowdsec

CrowdSec - the open-source and participative security solution offering crowdsourced protection against malicious IPs and access to the most advanced real-world CTI. - crowdsecurity/crowdsec

I have just setup at 0, the service no longer wants to launch.

Which error do you get ?

that's not related I think

Jun 16 14:23:31 xxx systemd[1]: Stopped crowdsec.service - Crowdsec agent.

Jun 16 14:23:31 xxx systemd[1]: Starting crowdsec.service - Crowdsec agent...

Jun 16 14:23:31 xxx crowdsec[30334]: FATAL api server init: unable to run local API: max_items can't be zero or negative

there's a small inconsistency: we do not allow max_items to be 0 when checking the config

Jun 16 14:25:31 xxx systemd[1]: Starting crowdsec.service - Crowdsec agent...

Jun 16 14:25:31 xxx crowdsec[30375]: FATAL api server init: unable to run local API: max_items can't be zero or negative

Jun 16 14:25:31 xxx mariadbd[1027]: 2025-06-16 14:25:31 3433 [Warning] Aborted connection 3433 to db: ‘xxx’ user: ‘xxx’ host: ‘xxx’ (Got an error reading communication packets)

Jun 16 14:25:31 xxx mariadbd[1027]: 2025-06-16 14:25:31 3432 [Warning] Aborted connection 3432 to db: ‘xxx’ user: ‘xx’ host: 'xxx' (Got an error reading communication packets)

Jun 16 14:25:31 xxxx mariadbd[1027]: 2025-06-16 14:25:31 3431 [Warning] Aborted connection 3431 to db: ‘xxx’ user: ‘xx’x host: ‘xxx’ (Got an error reading communication packets)

Jun 16 14:25:31 xxx systemd[1]: crowdsec.service: Control process exited, code=exited, status=1/FAILURE

Jun 16 14:25:31 xxxx systemd[1]: crowdsec.service: Failed with result 'exit-code'.

Jun 16 14:25:31 xxx systemd[1]: Failed to start crowdsec.service - Crowdsec agent.

Should I put 1?

no, it will then just keep a single alert

Okay

just comment the entire

flush section, it will also disable itOkay good !

crowdsec seems to have issue establishing the connection

how's the load on the mysql server ?

1449 % 😅

I'll restart it

After a restart still not

same slow queries ?

[!!] Slow queries: 6% (2K/38K)

Could it be from my

/v1/allowlists/check/X.X.X.X checks?

Because some machines I scan with honeypot so I check that ipv4 is not already bannedno those only use the

allowlists tables, and the queries are fairly simple (i haven't tested them with mysql, but I can't imagine them causing those kind of issues)Hmm

slow-query.log :

can you try to stop the bouncer ?

That cuts the bgp sessions...

that's not ideal 😄

then, just increase the refresh interval to something very high, like an hour

Hmmm

Do you think the bouncer could be the cause of the problem?

I'm not sure

A bouncer query should not use the alerts table, but because the flush is disabled, I don't see anything else that could make those kind of queries

which bouncer exactly are you using ?

And what's your crowdsec version ?

python-bouncer/0.0.5

version: v1.6.8-debian-pragmatic-amd64-f209766e

I only include decisions in my script

can you share your script ?

Yes, only as a private message

sure

It's sent !

i'm really at a loss

We know that mysql is not always happy with our queries and is likely the least performant database we support, but it still shouldn't behave like this

Hmm

Why doesn't CrowdSec perform indexing?

we do have indexes

but we are probably missing some

OK, so at the moment there's no solution other than waiting?

you could try switching to postgres or sqlite and see how it behaves

note that we do not provide a migration script between databases, so you'll probably need to copy some things by hand

For me, that would be getting round the problem, you'd have to understand why...

yes

but that's the best I can offer for now

going over all queries to make sure we have indexes everywhere need is going to take some time (and adding indexes without any planning can have a negative impact)

what's the max open connections to that db instance (as graph) and what do you have set in the config?

Hello @KaszpiR,

I currently allow 1,000 connections, and there are 102 threads connected

So I finally solved my problem.

If you're experiencing the same issues I did, here's the behavior that was causing it:

I had a script that checked whether an IPv4 address was banned using the command:

cscli decisions --ip IP -o json

I’ll admit, this command was running continuously in my process.

It was checking millions of addresses, so after a minute or so, it would eventually overwhelm the system.

The solution was simply to switch to an HTTP request, which is much less resource-intensive.

Thanks to @blotus for guiding me through this issue.Looking at the slow-query.log, the DB engine had to scan about 500K rows in the DB to return just 2 rows. There are definitely some indices missing. Run EXPLAIN on that query to find out. Also, subqueries, like "IN (SELECT ... ) ", are performance killers, they probably caused multiple full-table scans.