Can't deploy Qwen/Qwen2.5-14B-Instruct-1M on serverless

Steps to reproduce:

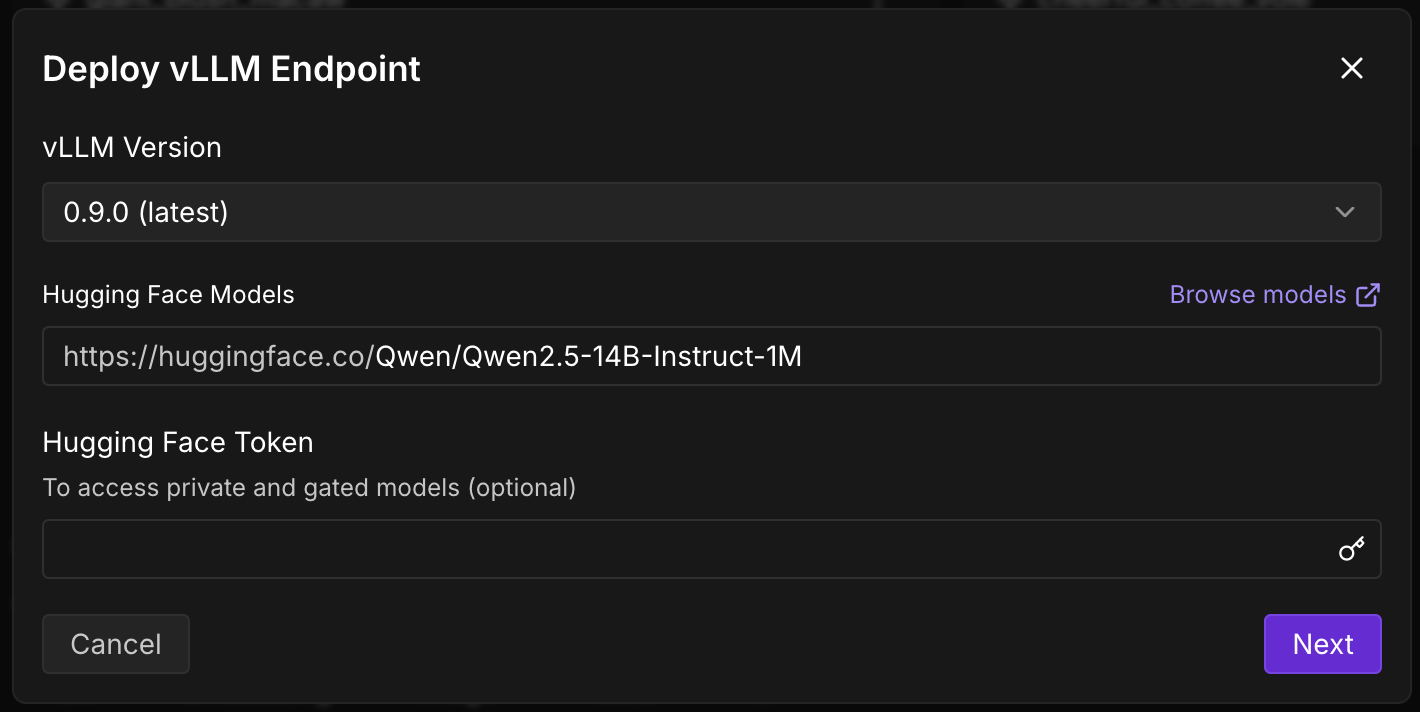

1. Use Serverless vLLM quick deploy for Qwen/Qwen2.5-14B-Instruct-1M (image attached)

2. Proceed with default config.

3. Try to send a request.

Error:

How do I fix this?

I've been trying to troubleshoot this all morning. Any help is appreciated.
