Prompt formatting is weird for my model

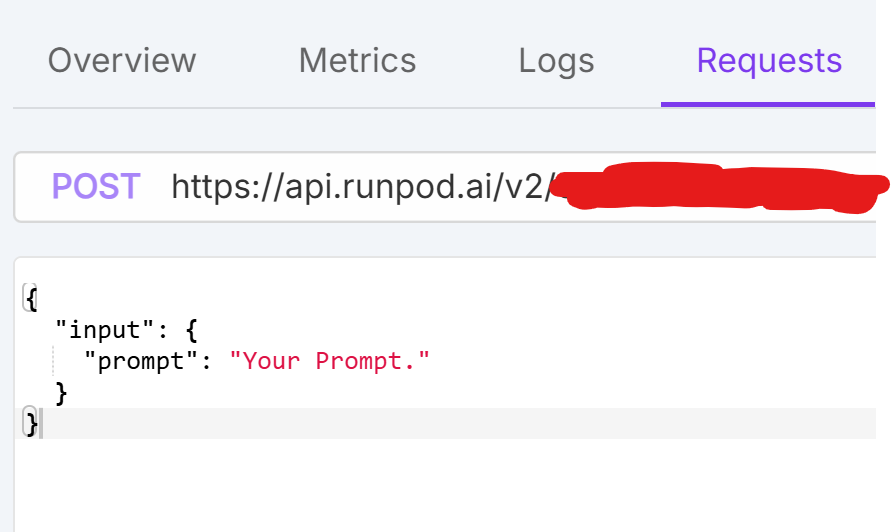

I tried making a serverless endpoint with my model from Hugging Face, but it's stuck with this prompt template. The correct one should be the Alpaca prompt template, which uses "instruction", "input", and "output". How do I change it to that? I only get errors when I change it in this window and try to send a request that way.

Solution

That field doesn't change based on the container or model you're using on the endpoint; it's just a placeholder. To test the endpoint through the RunPod UI like you're trying, which only allows the non-OpenAI paths (`/run` and `/runsync`), send the request body in the input format your worker expects.

See the worker repo
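Here is a minimal sketch. It assumes the endpoint runs a vLLM-based worker such as RunPod's worker-vllm, and that you apply the Alpaca template yourself by formatting the raw prompt string on the client side. The formatted prompt then goes in the request's `input` object. Several details are assumptions, not confirmed by the thread: the `prompt`/`sampling_params` field names, the `api.runpod.ai/v2/<endpoint_id>/runsync` URL, and the `RUNPOD_ENDPOINT_ID`/`RUNPOD_API_KEY` environment variable names. Check the worker repo for the schema your worker actually reads.

```python
import json
import os
import urllib.request

# Standard Alpaca prompt templates (with and without an "input" field).
ALPACA_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
ALPACA_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_alpaca_prompt(instruction: str, input_text: str = "") -> str:
    """Format an instruction (and optional input) as an Alpaca-style prompt.

    The model's "output" is the text it generates after "### Response:".
    """
    if input_text:
        return ALPACA_WITH_INPUT.format(instruction=instruction, input=input_text)
    return ALPACA_NO_INPUT.format(instruction=instruction)


def build_runsync_payload(prompt: str, max_tokens: int = 256) -> dict:
    # ASSUMPTION: the worker reads "prompt" and "sampling_params" from "input",
    # as worker-vllm does; verify the exact schema in your worker's repo.
    return {"input": {"prompt": prompt, "sampling_params": {"max_tokens": max_tokens}}}


def send_runsync(endpoint_id: str, api_key: str, payload: dict) -> dict:
    # ASSUMPTION: RunPod serverless URL for synchronous requests.
    url = f"https://api.runpod.ai/v2/{endpoint_id}/runsync"
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    prompt = build_alpaca_prompt("Translate the text to French.", "Good morning.")
    payload = build_runsync_payload(prompt)
    # This JSON is the request body you would paste into the UI's request box.
    print(json.dumps(payload, indent=2))

    # Only call the endpoint if credentials are set (hypothetical env var names).
    endpoint_id = os.environ.get("RUNPOD_ENDPOINT_ID")
    api_key = os.environ.get("RUNPOD_API_KEY")
    if endpoint_id and api_key:
        print(send_runsync(endpoint_id, api_key, payload))
```

Formatting the template on the client keeps the endpoint generic. The UI placeholder never needs to change, because the Alpaca structure travels inside the `prompt` string itself.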