So you guys REALLY like making things as difficult as possible, don't you?

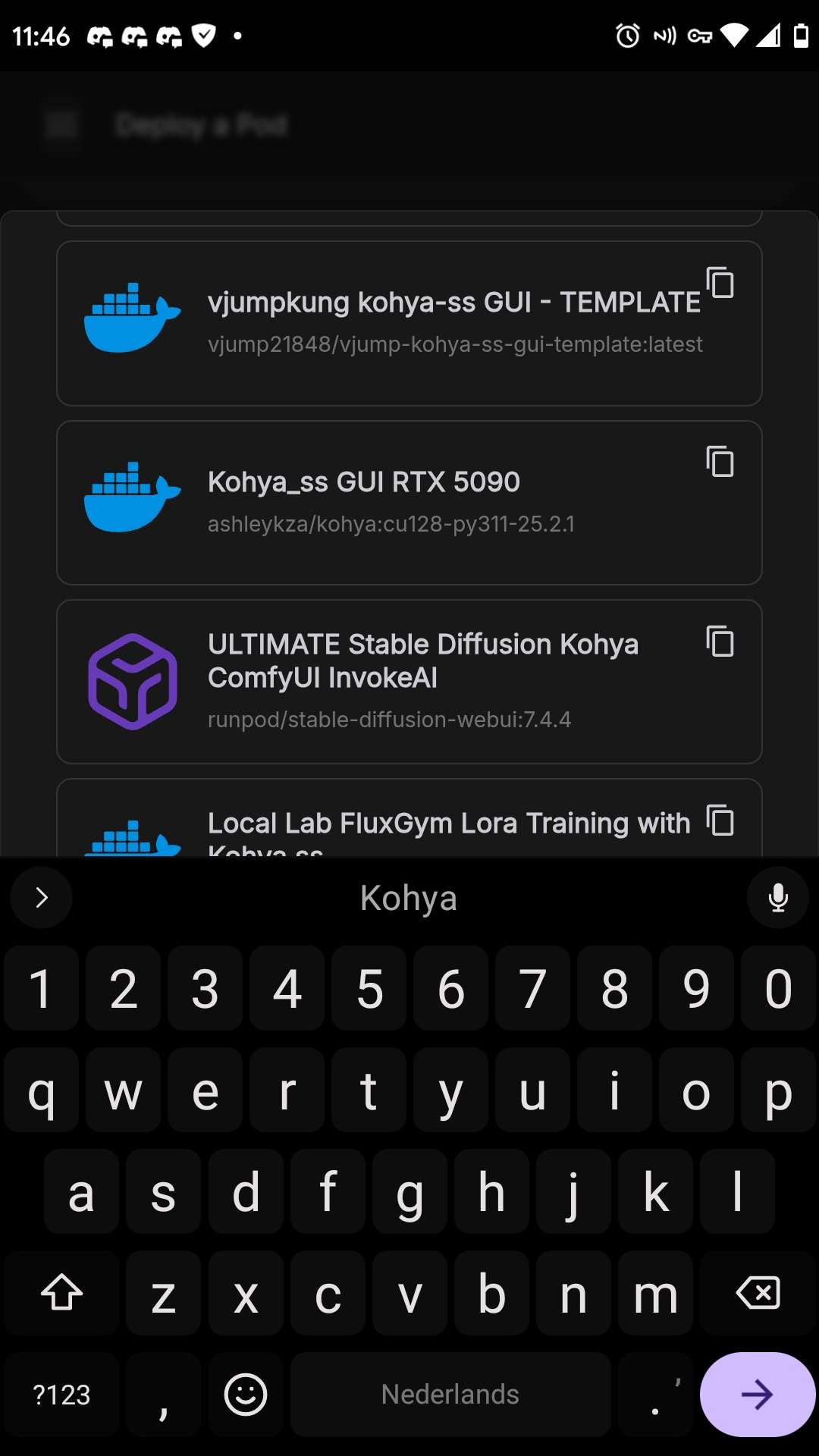

So I tried to build a training pod... Your prebaked Kohya image is about 50 versions behind and doesn't support Flux.

I need to install a later version of the CUDA library, but I can't: the old drivers are present but not actually installed, so the installer can't replace them. Not to mention you have 5090s in these machines and yet don't have the drivers to support them! And of course I can't install those either.

I wasted 6 hours trying to get a damn pod to run damned Kohya... a task I did on my own desktop in 20 minutes! You can send my refund to kris.paypal@luxelite.com.au

WTF do you insist on making EVERYTHING shit?


Runpod doesn't offer an official Kohya image. You used a community-made one, which is up to its creator to maintain. If they don't maintain the template, you get old stuff like that.

The CUDA driver is injected automatically, though, so I expect it was the CUDA toolkit and Torch in the image being too old.

Wow, thanks for being a decent human...

@Dj @Poddy But maybe it'd be a good idea to have an image that can be installed for one of the most-used pieces of compute software... one whose developers went to the trouble of writing an installer for your platform (runpod-setup.sh), which fails due to poor management (yes, on the latest pull)!

Yes, that one doesn't support Flux.

The one that says 5090 might.

Or is that too old?

idk ULTIMATE is

Didn't see the other one...

Ultimate is no longer listed as official, so I think they dropped it.

(Ultimate has trouble with 4090)

There are multiple community-made ones, though.

guess it might be worth a look

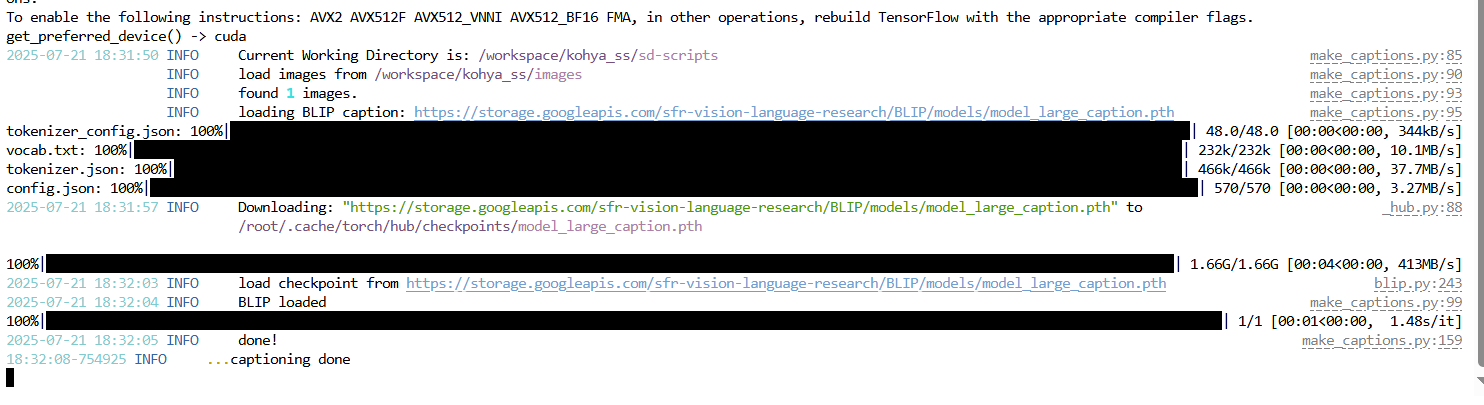

@Henky!! Well, at least you tried... the, umm, 5090 image... doesn't support 5090s...

instructions in performance-critical operations.

To enable the following instructions: AVX2 AVX512F AVX512_VNNI AVX512_BF16 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2025-07-21 11:10:18.051537: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

2025-07-21 11:10:18.950446: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

/workspace/kohya_ss/venv/lib/python3.11/site-packages/torch/cuda/init.py:235: UserWarning:

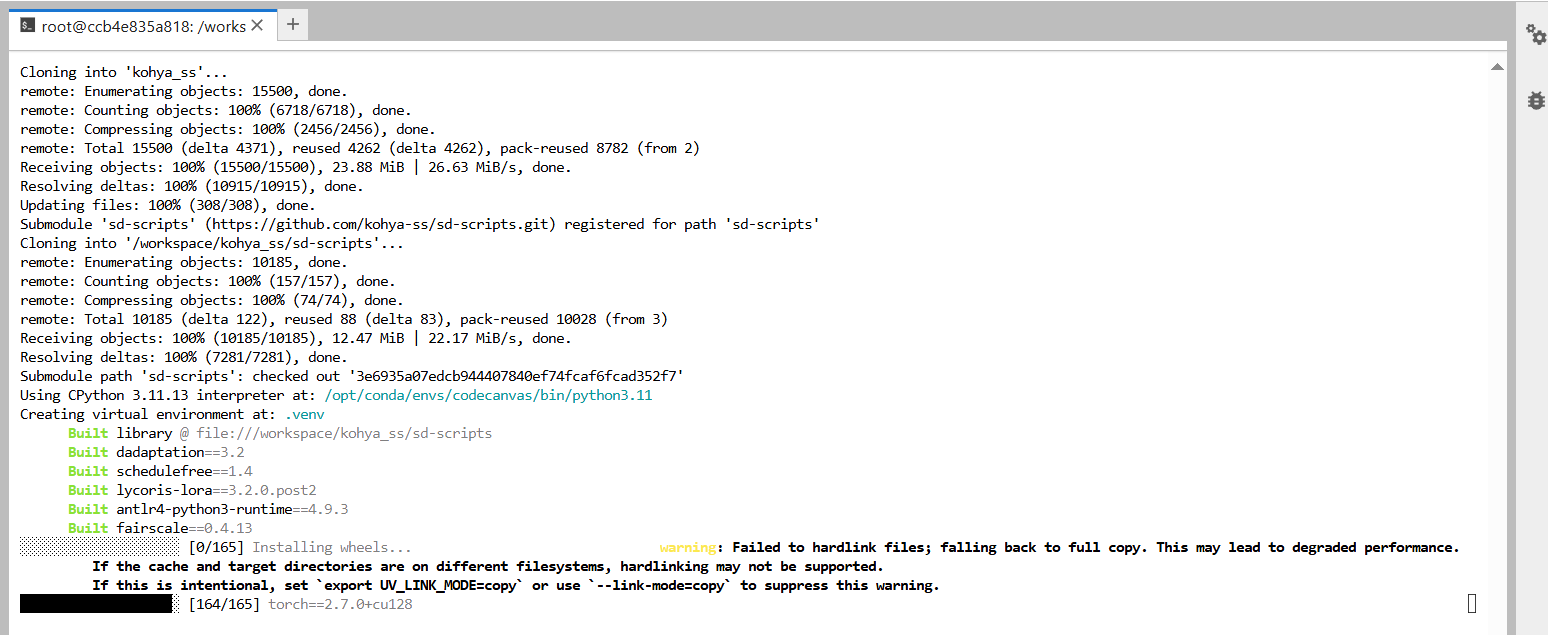

NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_50 sm_60 sm_70 sm_75 sm_80 sm_86 sm_90.

If you want to use the NVIDIA GeForce RTX 5090 GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/

warnings.warn(

/workspace/kohya_ss/venv/lib/python3.11/site-packages/torch/cuda/init.py:235: UserWarning:

NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_50 sm_60 sm_70 sm_75 sm_80 sm_86 sm_90.

If you want to use the NVIDIA GeForce RTX 5090 GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/

o.O

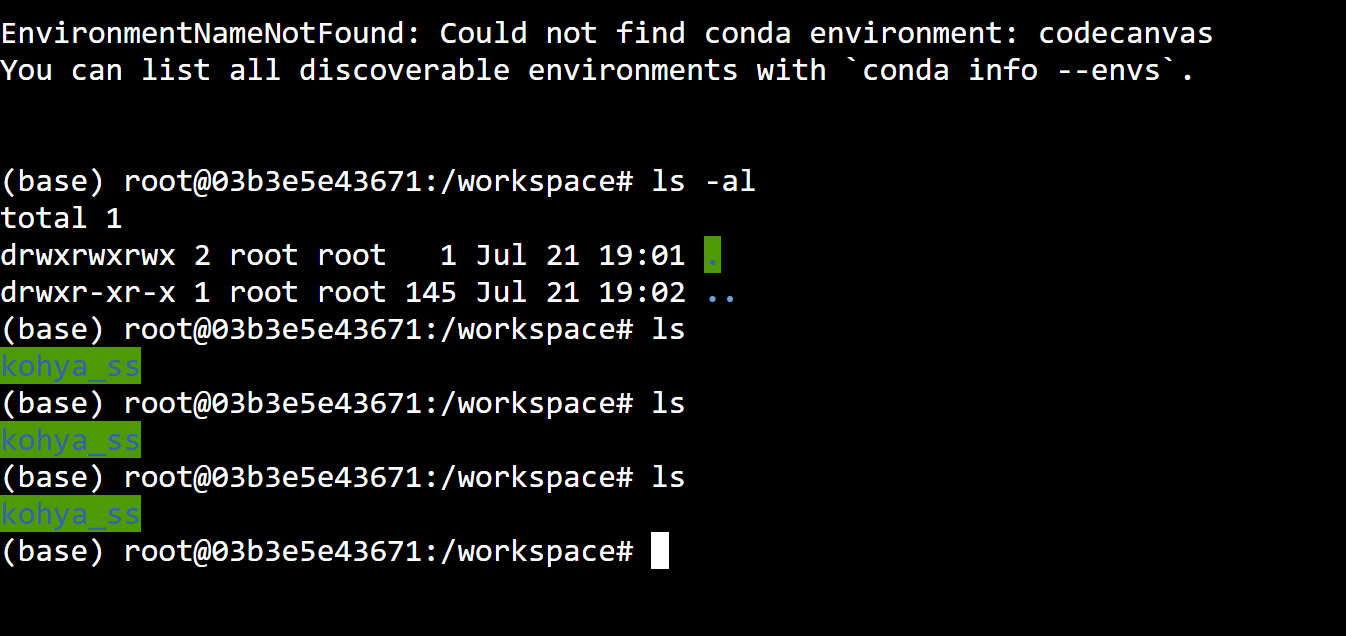

I'm actually trying to install one from scratch.

Same driver problem as Ultimate... something to do with how the drivers aren't really installed.

That's not a driver issue.

NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.

Well, a PyTorch issue, technically.

Yeah, I know, torch doesn't have sm_120 compiled in.

Driver is fine, torch isn't.

The PyTorch dev build does... but it's not compatible with Kohya.

PyTorch needs to be compiled against all targets if you ship it.

If they don't, it only supports whatever GPU they tested with.
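The sm_120 warning comes from PyTorch comparing the GPU's compute capability with the architectures its binary was compiled for. The sketch below reproduces that comparison in plain Python under a simplifying assumption: kernels compiled for one `sm_XY` target are treated as usable on any GPU with the same major version, and PTX-only `compute_XY` entries are ignored. PyTorch's internal check follows a similar idea, but this is an approximation, not its actual code; `parse_sm` and `has_kernels_for` are hypothetical helper names.

```python
import re
from typing import List, Optional, Tuple


def parse_sm(arch: str) -> Optional[int]:
    """Turn an arch-list entry like "sm_86" into 86; PTX entries like "compute_90" give None."""
    match = re.match(r"sm_(\d+)", arch)
    return int(match.group(1)) if match else None


def has_kernels_for(capability: Tuple[int, int], arch_list: List[str]) -> bool:
    """True if arch_list has a compiled sm_ target whose major version matches the GPU's.

    Simplified assumption: binaries built for sm_XY run on any GPU with major version X.
    """
    major, _minor = capability
    compiled = [sm for sm in (parse_sm(a) for a in arch_list) if sm is not None]
    return any(sm // 10 == major for sm in compiled)


# The arch list from the warning in this thread; an RTX 5090 reports compute capability 12.0.
old_build = ["sm_50", "sm_60", "sm_70", "sm_75", "sm_80", "sm_86", "sm_90"]
print(has_kernels_for((12, 0), old_build))  # False: nothing built for major version 12
print(has_kernels_for((8, 9), old_build))   # True: sm_80/sm_86 share major version 8
```

On a live pod, the inputs would come from `torch.cuda.get_device_capability(0)` and `torch.cuda.get_arch_list()`, both real PyTorch functions. A `False` result points at the torch build, not the driver.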

Anyway... I tried backing off to 4090s... still a web of incompatibilities in SS.

So far it seems easy to set up, but I don't know how compatibility will turn out.

So I deleted the pod and yelled at the sky... oh, it thinks it sets up fine (I used pip3).

The template didn't come with it?

When you run it, it complains about not finding drivers, not finding GPUs, not finding PyTorch...

https://f2dd16607b97ae3130.gradio.live/ Can you test this with 1x 5090?


Just so we know if it works

Well ... not really because my Datasets / models etc are on my volume ...

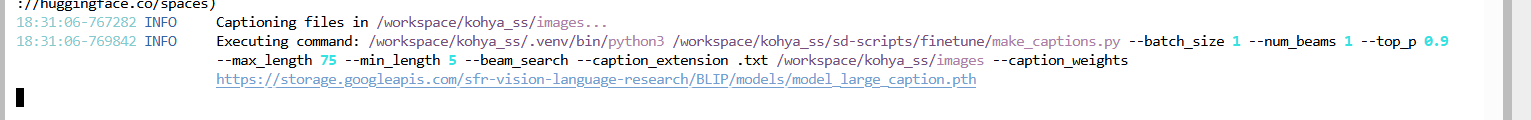

But I honestly doubt it's any diff ... if you want to test an Easy one is Blip Captioning ... if cuda isn;t working it'll fail

Cuda Lib/Pytorch/xformers

I think I'm just gonna go pay replicate ... getting damn sick of this dodgy AF runpod platform

I have such a talent for finding software bugs xD. Broke the folder selector.

Oh, that doesn't work at all... nfi why...

Now we wait.

Probably something old installed, like JS 1.1, knowing Runpod.

No, I installed everything myself

Wasn't so hard

Half your battle was probably it not being from scratch

Well, idk how it worked... you got the same TensorFlow build error I did,

but mine crashes after that comes up:

```
2025-07-21 11:10:15.516973: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
To enable the following instructions: AVX2 AVX512F AVX512_VNNI AVX512_BF16 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
2025-07-21 11:10:18.051537: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT
2025-07-21 11:10:18.950446: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT
/workspace/kohya_ss/venv/lib/python3.11/site-packages/torch/cuda/__init__.py:235: UserWarning:
NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation.
The current PyTorch install supports CUDA capabilities sm_50 sm_60 sm_70 sm_75 sm_80 sm_86 sm_90.
If you want to use the NVIDIA GeForce RTX 5090 GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/
```

You're using a 5090?

Yup

Just Ubuntu: install Python 3.11 and all the Jupyter stuff, install CUDA toolkit 12.8, install Kohya.
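CUDA 12.8 is the first CUDA release with Blackwell (sm_120) support, which is why the from-scratch recipe pins that toolkit version. A quick sanity check is to confirm that the installed PyTorch build also targets CUDA 12.8 or newer. This is a sketch, assuming the version string comes from `torch.version.cuda` (for example `"12.8"`, or `None` on CPU-only builds); `cuda_at_least` is a hypothetical helper.

```python
from typing import Optional, Tuple


def cuda_at_least(version: Optional[str], minimum: Tuple[int, int] = (12, 8)) -> bool:
    """Compare a CUDA version string such as "12.8" or "12.4.1" with a (major, minor) minimum.

    None (what torch.version.cuda reports on CPU-only builds) never passes.
    """
    if not version:
        return False
    parts = version.split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    # Tuple comparison orders by major first, then minor.
    return (major, minor) >= minimum


print(cuda_at_least("12.8"))  # True
print(cuda_at_least("12.4"))  # False: built before sm_120 support
print(cuda_at_least(None))    # False
```

In the pod's venv, this would be called as `cuda_at_least(torch.version.cuda)` after `import torch`.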

Or try the one a "KoboldAI using script kid" made

https://console.runpod.io/deploy?template=wjw5mvfnwj&ref=svxge8hv

Well yeah, idk. Once I get PyTorch working, Kohya uninstalls it whenever it runs... and then it says it can't even find TensorRT when I watched it get installed.
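If a launcher quietly reinstalls torch on startup, one way to catch it is to record the installed wheel's CUDA tag before and after launching. PyTorch wheels from download.pytorch.org carry local version tags such as `+cu128`. This sketch assumes that tag format; `torch_cuda_tag` and `installed_torch_tag` are hypothetical helpers. `importlib.metadata.version` is the standard-library way to read an installed package's version without importing it.

```python
from importlib import metadata
from typing import Optional


def torch_cuda_tag(version: str) -> Optional[str]:
    """Extract the CUDA tag from a version like "2.7.0+cu128" -> "cu128".

    Returns None when there is no "+cu..." suffix (plain PyPI builds, "+cpu", "+rocm...").
    """
    _, sep, local = version.partition("+")
    if sep and local.startswith("cu"):
        return local
    return None


def installed_torch_tag() -> Optional[str]:
    """Read the installed torch version from package metadata, without importing torch."""
    try:
        return torch_cuda_tag(metadata.version("torch"))
    except metadata.PackageNotFoundError:
        return None


print(torch_cuda_tag("2.7.0+cu128"))  # cu128
print(torch_cuda_tag("2.7.0+cpu"))    # None
print(installed_torch_tag())          # depends on the active environment
```

If the tag changes (say from `cu128` to an older one) after the GUI starts, something in the launch path reinstalled torch.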

Do keep in mind that if Kohya's already on your volume, mine will most likely also break.

Dude... that is still a serious diss... use oobabooga if you really want a pre-made UI.

I develop for Kobold

oh ...

For GGUF we are ahead

You're probably thinking of the old one that predates ooba.

I'm thinking of the crap that runs on Windows that can barely hold a conversation together...

Back when we still used PyTorch, I ran into these issues all the time; it's an easy fix for me.

That's up to the model.

I ran Kimi K2 with it on Runpod a few days ago on the AMD GPU.

Although that's too heavy to be practical.

idk, too long ago... I think I found the tokenizer to be an awful hybrid that didn't like ChatML.

but anyway ...

Unlike ooba, the tokenizer isn't a hybrid.

Does the link work?

I'll give you the benefit of the doubt... so you're saying I need to delete Kohya from my volume?

I'd rename instead of delete

It makes a venv inside that folder, so if the .venv gets migrated, you'll keep your PyTorch issues.

And I don't know what the other templates did; git-cloning into a folder that may not be a git repo is an issue.
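Renaming instead of deleting keeps the old install (and its venv) recoverable while freeing the original path for a fresh clone. A minimal sketch, assuming the old install lives at `/workspace/kohya_ss`, the path that appears in the logs in this thread. `rename_aside` is a hypothetical helper, not part of Kohya or any template.

```python
import time
from pathlib import Path


def rename_aside(folder: Path) -> Path:
    """Move an existing install out of the way instead of deleting it.

    The folder (including any venv inside it) is kept under a timestamped name,
    so a fresh clone at the original path won't inherit the old environment.
    """
    if not folder.exists():
        raise FileNotFoundError(folder)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    target = folder.with_name(f"{folder.name}.old-{stamp}")
    counter = 1
    # Avoid clobbering an earlier backup made within the same second.
    while target.exists():
        target = folder.with_name(f"{folder.name}.old-{stamp}-{counter}")
        counter += 1
    folder.rename(target)
    return target


# Hypothetical usage on a pod with the volume mounted at /workspace:
# rename_aside(Path("/workspace/kohya_ss"))
```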

Yeah, I know... I tried manually activating it and installing stuff; that didn't work either.

When I built mine from scratch, it was a fresh clone...

Fresh CUDA lib and torch as well.

Mine is an empty ubuntu with some special sauce when it boots

Mine said the GPU couldn't be detected... yet nvidia-smi showed them running.

The special sauce sets everything up

Because the driver worked fine, but you had those torch issues.

nvidia-smi fails if it's actually a driver issue.

It does happen, but it's rare.

Sometimes the GPU hangs and then nvidia-smi freaks out
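The rule of thumb above (if nvidia-smi works, the driver is fine, and failures past that point usually come from the PyTorch build) can be written as a small triage function. A sketch only: `nvidia_smi_ok` and `diagnose` are hypothetical names, and the 30-second timeout is an arbitrary assumption. The two torch inputs would come from `torch.cuda.is_available()` and an architecture check against `torch.cuda.get_arch_list()`.

```python
import shutil
import subprocess


def nvidia_smi_ok(timeout_s: float = 30.0) -> bool:
    """Return True if nvidia-smi exists and exits with status 0 (the driver answers)."""
    if shutil.which("nvidia-smi") is None:
        return False
    try:
        result = subprocess.run(["nvidia-smi"], capture_output=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        # A hung GPU can make nvidia-smi stall rather than fail outright.
        return False
    return result.returncode == 0


def diagnose(smi_ok: bool, torch_sees_gpu: bool, kernels_for_gpu: bool) -> str:
    """Rough triage order: driver first, then the torch build, then its compiled archs."""
    if not smi_ok:
        return "driver (or hung GPU): nvidia-smi itself fails"
    if not torch_sees_gpu:
        return "torch: driver answers, but this torch build cannot use the GPU"
    if not kernels_for_gpu:
        return "torch: GPU visible, but no kernels compiled for its architecture"
    return "ok"


# The 5090 case in this thread: nvidia-smi works, torch sees the card, but no sm_120 kernels.
print(diagnose(True, True, False))
```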

Well, pulled your image... where's Kohya? Am I just cloning a fresh copy?

Is Jupyter online?


```
For detailed documentation and guides, please visit:
https://docs.runpod.io/ and https://blog.runpod.io/

root@843a978fab42:/# ps ax
PID TTY STAT TIME COMMAND
1 ? Ss 0:00 /sbin/docker-init -- /opt/nvidia/nvidia_entrypoint.sh /start.sh
20 ? S 0:00 /bin/bash /start.sh
51 ? Ss 0:00 nginx: master process /usr/sbin/nginx
52 ? S 0:00 nginx: worker process
73 ? Ss 0:00 sshd: /usr/sbin/sshd [listener] 0 of 10-100 startups
81 ? S 0:00 sleep infinity
123 ? Ssl 0:00 gotty -w --credential f8zs7r1xnzzm1hr9inni:2m1s2mz8u7nqndqeazek --ws-origin .* -p 19123 env TERM=xterm bash
196 pts/0 Ss 0:00 bash
213 pts/0 R+ 0:00 ps ax
root@843a978fab42:/# cd workspace/
root@843a978fab42:/workspace# ls
axolotl-artifacts cuda_12.8.0_570.86.10_linux.run datasets filezilla_sftp_config.xml hub huggingface-cache logs models output webp_jpg_to_png.py
root@843a978fab42:/workspace# ls
axolotl-artifacts cuda_12.8.0_570.86.10_linux.run datasets filezilla_sftp_config.xml hub huggingface-cache logs models output webp_jpg_to_png.py
root@843a978fab42:/workspace#
```


Nope

That doesn't look like mine (might be because it's a shared volume), but what's the log saying atm?

Double nup.

Clicked the link you gave me...

Sure but what does the log of it say?

The runpod log button

(Template should be called Code Canvas - Kohya)

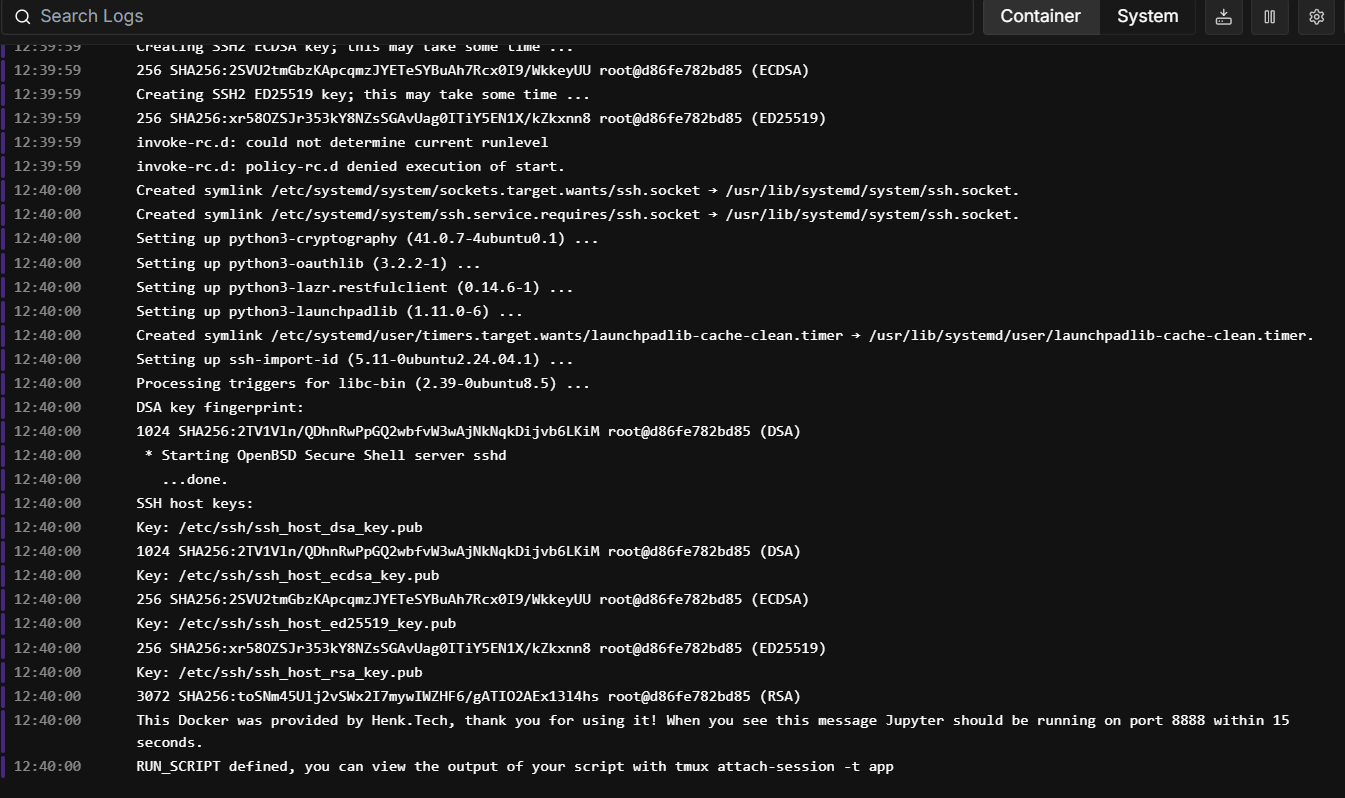

Mine looks like this once it's done installing Jupyter:

```
==========
== CUDA ==
==========

CUDA Version 12.8.1

Container image Copyright (c) 2016-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.
By pulling and using the container, you accept the terms and conditions of this license:
https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

A copy of this license is made available in this container at /NGC-DL-CONTAINER-LICENSE for your convenience.

Starting Nginx service...
 * Starting nginx nginx
   ...done.
Pod Started
Setting up SSH...
RSA key fingerprint:
3072 SHA256:YmTY5Yl7FvSaSRKP1h3Yhm7zC0FG2vubmO8vGWUPMus root@843a978fab42 (RSA)
DSA key fingerprint:
1024 SHA256:RdIbcA8+yQcTujPp5QcnUT6OkDkq27IhRTX/0uG9Ikc root@843a978fab42 (DSA)
ECDSA key fingerprint:
256 SHA256:v8KzDMWe6276xxdw4HQqNUE+vDuiswTbwUXw8QjSyGs root@843a978fab42 (ECDSA)
ED25519 key fingerprint:
256 SHA256:nJzUmTJ62w8xTmxoO/uj3OzqD7foFYlmBvq+3xKyHLU root@843a978fab42 (ED25519)
 * Starting OpenBSD Secure Shell server sshd
   ...done.
SSH host keys:
```

Just clicked your link... wouldn't be surprised if RunPod did something unexpected.

Oh now it's a different image

Maybe ..

That's not mine.

Something caused it not to swap over.

Make sure it's Code Canvas - Kohya.

The link should have done that but it got reversed

Yeah, cool... now everything is deleted from my /workspace... fuck, I love Runpod.

Thankfully everything is backed up on Backblaze (as I don't trust Runpod storage, and I was right not to).

Good call

I don't know why that would have triggered or which template did it.

I doubt you had a file named reset_workspace casually lying around in it.

No, can't say that I did...

Yeah... I use BB for the site's storage as well. Writing images via S3 to Backblaze is faster and easier than dealing with Runpod storage. BB have a unique feature... allowing you to list the contents of your bucket... and wait for it! Upload via a proper, non-dodgy little app that doesn't break half the time.

You began using that SSH session before it was done installing, btw.

That won't have wiped it, but it may be unreliable.

I recommend reconnecting once jupyter is running so you get the right files


Yes, it wasn't compatible with the xformers version.


Yeah, we'll see. Henky here built an image that might kinda work... but it stopped, and I didn't have the TensorBoard port open, so idk why it failed... But it did start. Tomorrow is another day... still, omg, so much easier on Replicate...


TensorBoard on 6006? I'll add it to the defaults.


My boss heard that they were better, so paid them $1000.

Yeah, that's the one... either that, or stdout from uv_gui needs to be piped into /workspace/logs/ -> /var/logs/
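Sending the GUI's stdout and stderr to a file under /workspace/logs keeps the output on the volume even after the web UI stops, so a failed run can be inspected afterwards. A minimal sketch, assuming the GUI is started as a child process; `start_logged` is a hypothetical helper, and the real launch command depends on the template (it isn't shown in this thread). `subprocess.Popen` is used rather than `subprocess.run` because a GUI server keeps running.

```python
import subprocess
import sys
from pathlib import Path
from typing import Sequence


def start_logged(cmd: Sequence[str], log_path: Path) -> subprocess.Popen:
    """Start cmd in the background with stdout and stderr appended to log_path."""
    log_path.parent.mkdir(parents=True, exist_ok=True)
    log = log_path.open("ab")
    proc = subprocess.Popen(list(cmd), stdout=log, stderr=subprocess.STDOUT)
    # The child holds its own copy of the file descriptor, so closing ours is safe.
    log.close()
    return proc


# Hypothetical usage; substitute the template's actual GUI launch command:
# start_logged(["<gui launch command>"], Path("/workspace/logs/kohya_gui.log"))
if __name__ == "__main__":
    demo = start_logged([sys.executable, "-c", "print('gui started')"], Path("logs/demo.log"))
    demo.wait()
```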

On Replicate, you spin up a VM, and you can use LoRAs you make via the UI as a reference in the payload.

Select model (base or custom), dataset, click go... a few hours later a LoRA shows up.

(Works for LLM and Diffusion LoRA)

I added it to the defaults. It won't fix an existing pod, but that's easy enough.

Cool, yeah, I added 6006 myself. But because of the bug in the file loader, to see what happened I'd have to fill everything out again. And I just didn't have the heart.

(The bug causes the config loader not to load despite putting the path in.)

Yeah, that Gradio UI doesn't seem the most stable; I broke it with that folder icon thing.

Gradio fails because it's not modular; people have been using monkey-patching hacks for ages... but even that isn't designed for real use.

I do hate to say it... but Python really sucks for UI... I was a hard sell; I wrote my first chatbot in Python... then I discovered Svelte and reactive components.