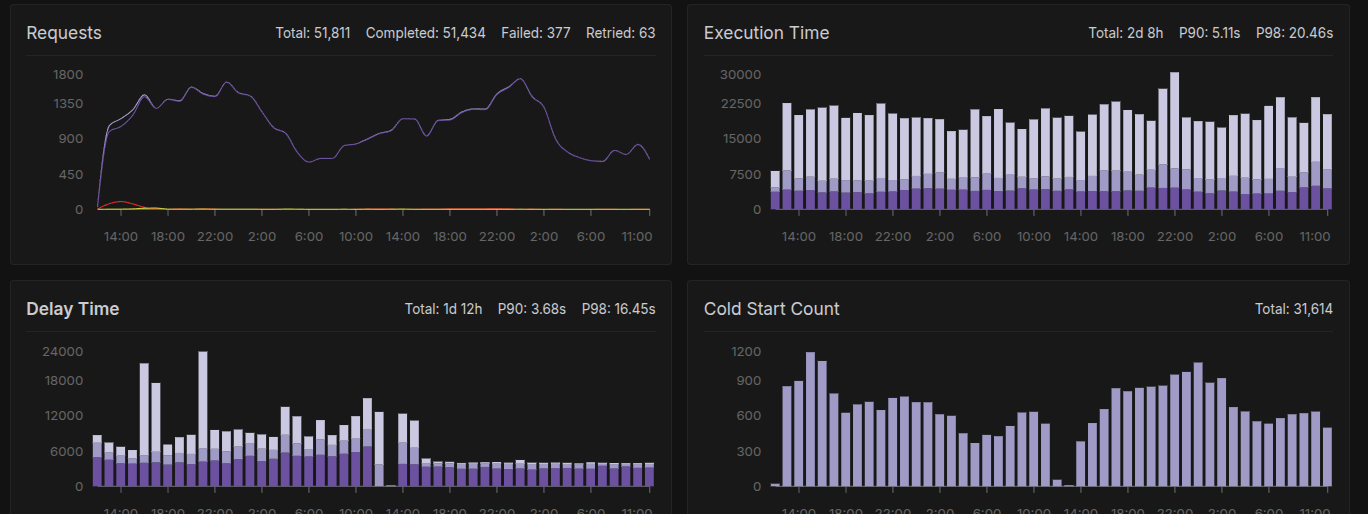

I have over 100 serverless instances running on different endpoints, and we are facing this timeout issue.

Most of the time our controller/handler throws this error on various workers. I don't know if it's choking; it happens all of a sudden on all servers/workers and then resumes after some time.

About 3 requests per minute.

@Five

Escalated To Zendesk

The thread has been escalated to Zendesk!

Ticket ID: #20701

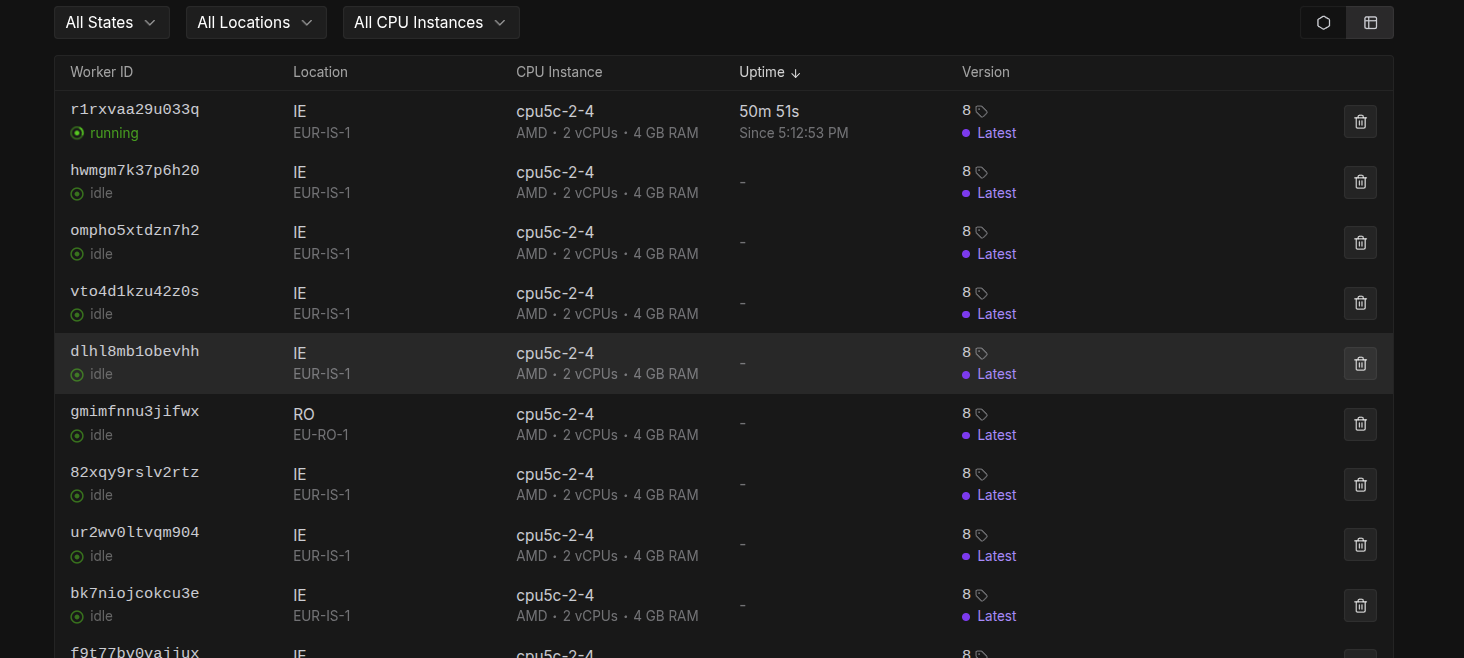

I also face this issue on CPU serverless.

Why don't the other workers get requests, while only one has been running for 50 minutes?

See image 2.

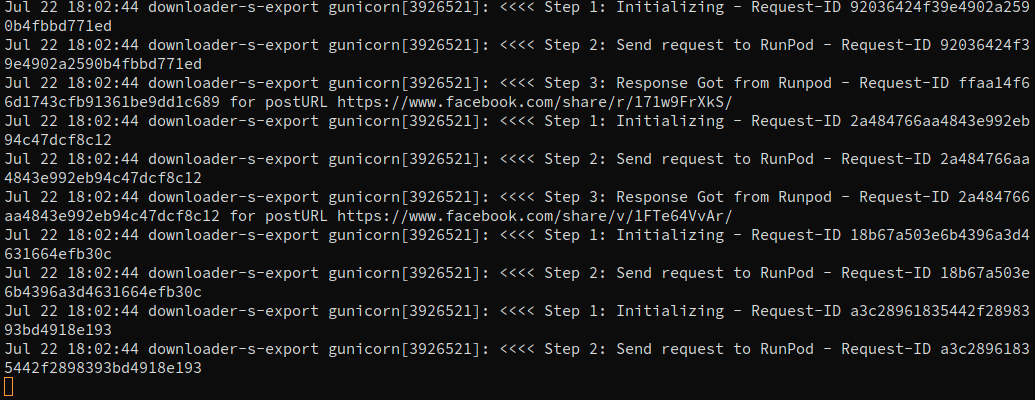

I want to perform an operation with the help of my workers. My client sends a request from Android to my Django controller, and the controller sends that query to the RunPod worker.
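For reference, the controller-to-worker hop described here can be sketched roughly as below: a call with an explicit timeout and a few retries with exponential backoff, so a brief stall on the worker side does not immediately surface as an error to the Android client. This is only a sketch under assumptions. The `runsync` URL shape, the bearer-token header, and the `{"input": ...}` body are my understanding of the RunPod serverless API and should be checked against the docs; `forward_to_worker`, `http_post`, and all default values are hypothetical names and numbers, not anything from this thread.

```python
import json
import time
import urllib.request
from typing import Callable

# Assumed URL shape for a synchronous serverless call; verify for your endpoint.
RUNSYNC_URL = "https://api.runpod.ai/v2/{endpoint_id}/runsync"


def http_post(url: str, payload: dict, api_key: str, timeout: float) -> dict:
    """POST a JSON payload and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def forward_to_worker(
    endpoint_id: str,
    api_key: str,
    job_input: dict,
    timeout: float = 30.0,   # hypothetical default, tune to your workload
    retries: int = 3,        # hypothetical default
    backoff: float = 1.0,    # seconds before the first retry
    post: Callable[[str, dict, str, float], dict] = http_post,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Send one job to the worker, retrying on timeouts and network errors."""
    url = RUNSYNC_URL.format(endpoint_id=endpoint_id)
    last_error = None
    for attempt in range(retries):
        try:
            return post(url, {"input": job_input}, api_key, timeout)
        except OSError as exc:  # covers TimeoutError and urllib's URLError
            last_error = exc
            if attempt < retries - 1:
                sleep(backoff * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise RuntimeError(f"worker call failed after {retries} attempts") from last_error
```

Note that `OSError` also covers HTTP error responses raised by `urllib`, so in a real controller you may want to retry only on timeouts and 5xx responses.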

I don't have concrete numbers, but I think my gunicorn server is not fully utilizing the RunPod workers.

I am using a queue delay of 1 second.

The request itself takes about 5-6 seconds to complete, but through RunPod it takes longer than that.

I want to improve this.
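To get concrete numbers for the gap described above, one option is to time each call in the controller and split the total into queue wait, execution, and everything else (network plus gunicorn overhead). The sketch below assumes the worker response carries `delayTime` and `executionTime` fields in milliseconds, which is my understanding of RunPod job responses and should be verified; `split_latency` and `time_request` are hypothetical helper names.

```python
import time
from typing import Callable


def split_latency(total_ms: float, response: dict) -> dict:
    """Split wall-clock time into queue, execution, and remaining overhead."""
    # Assumed response fields (milliseconds); missing fields count as 0.
    queue_ms = float(response.get("delayTime", 0))
    execution_ms = float(response.get("executionTime", 0))
    return {
        "total_ms": total_ms,
        "queue_ms": queue_ms,
        "execution_ms": execution_ms,
        "overhead_ms": total_ms - queue_ms - execution_ms,
    }


def time_request(call: Callable[[], dict]) -> dict:
    """Run one worker call and report where the time went."""
    start = time.perf_counter()
    response = call()
    total_ms = (time.perf_counter() - start) * 1000.0
    return split_latency(total_ms, response)
```

If `queue_ms` dominates, the time is going to waiting for a free worker; if `overhead_ms` dominates, the controller or network side is the more likely place to look.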

I am already using CPU5

That wasted 4 hours of my computation. I explicitly specified that I need CUDA >= 12.6, but the 4090 GPU allotted to me was misconfigured:

'''start container for xxxxx/flux-quantized:v5: begin error starting container: Error response from daemon: failed to create task for container: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: error during container init: error running hook #0: error running hook: exit status 1, stdout: , stderr: Auto-detected mode as 'legacy' nvidia-container-cli: requirement error: unsatisfied condition: cuda>=12.6, please update your driver to a newer version, or use an earlier cuda container: unknown'''
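The error above means the host driver supports a lower CUDA version than the image requires. A small helper like the one below could pull the required version out of such a log line and compare it with a host's reported CUDA version, for example to flag bad hosts in monitoring. It is a sketch only: the regex is based solely on the message quoted above, and `parse_cuda_requirement` and `host_satisfies` are hypothetical names.

```python
import re
from typing import Optional, Tuple


def parse_cuda_requirement(log: str) -> Optional[Tuple[int, int]]:
    """Return (major, minor) from 'unsatisfied condition: cuda>=X.Y', if present."""
    match = re.search(r"unsatisfied condition: cuda>=(\d+)\.(\d+)", log)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def host_satisfies(host_cuda: str, required: Tuple[int, int]) -> bool:
    """Check whether a host CUDA version string like '12.4' meets the requirement."""
    major, minor = (int(part) for part in host_cuda.split(".")[:2])
    return (major, minor) >= required


log_line = (
    "nvidia-container-cli: requirement error: unsatisfied condition: "
    "cuda>=12.6, please update your driver to a newer version"
)
required = parse_cuda_requirement(log_line)
print(required, host_satisfies("12.4", required))
```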

No, I hadn't. I just did it now. But it's weird that this came without any prior notice. Maybe I overlooked it in the docs, but I didn't get an email either.