inaccurate time inside programs

i'm having a hard time categorizing this issue, so it's unspecified for now, sorry

why is the time kind of inaccurate in some programming languages?

i never use battery saving mode and termux has no battery restrictions, but when my program is idle, the time starts to mess up and somehow it doesn't rely on the system's clock. however, the issue disappears when the battery is full or it's just charging

When the phone says it's been, let's say, 5 minutes, the program might say it's been only 3 minutes since it started.

isn't there something more reliable? maybe a way to get the running system's time

if this issue is specific to certain languages, i'm even willing to switch (currently i use golang)

i'm super desperate so any solution will be good


could you give an example of exactly what "inside the program" and "it's been only three minutes" mean? ideally just a whole simple example.

asking for the wall time should be pretty much correct, but if you're doing something that assumes it will get 60 seconds of CPU time per minute, that's not how android works :)

(this doesn't explain what you're describing at all, but just in case you need it, https://github.com/golang/go/issues/20455)

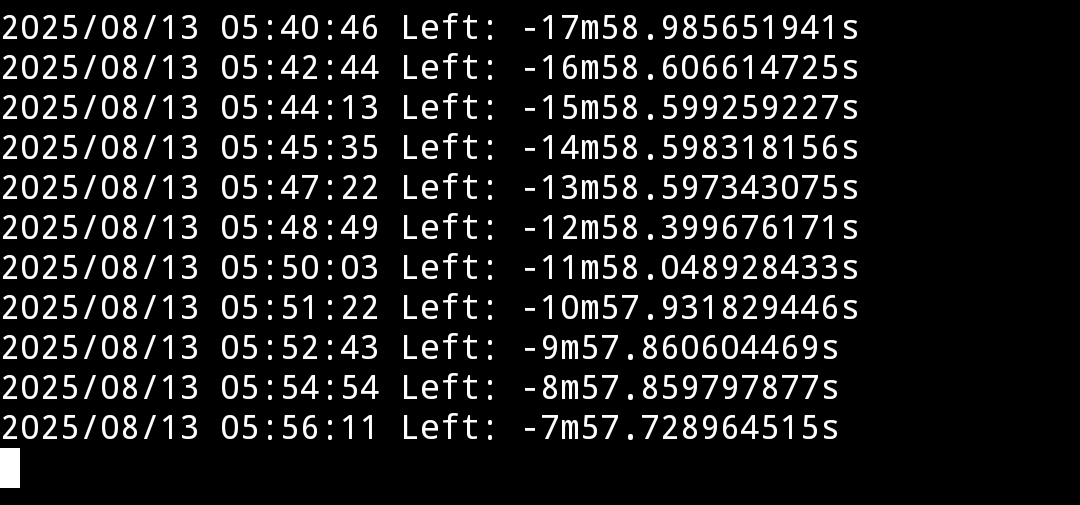

here's a simple example

i don't think this is a timezone issue

well then, is there a way to request the current system's time

oh yeah, there's the date command
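For comparison, a minimal Go sketch that reads the current time both through time.Now() and through the date command. It assumes a date that supports the +%s (epoch seconds) format, as GNU coreutils, toybox, and busybox do; parseEpoch is a hypothetical helper name. Since date only prints whole seconds, the two readings should agree to within about a second.

```go
package main

import (
	"fmt"
	"os/exec"
	"strconv"
	"strings"
	"time"
)

// parseEpoch converts output like "1700000000\n" (seconds since the Unix
// epoch, as printed by `date +%s`) into a time.Time.
func parseEpoch(out string) (time.Time, error) {
	secs, err := strconv.ParseInt(strings.TrimSpace(out), 10, 64)
	if err != nil {
		return time.Time{}, err
	}
	return time.Unix(secs, 0), nil
}

func main() {
	goNow := time.Now()

	// Ask the system's date command for the current time in epoch seconds.
	out, err := exec.Command("date", "+%s").Output()
	if err != nil {
		fmt.Println("could not run date:", err)
		return
	}
	sysNow, err := parseEpoch(string(out))
	if err != nil {
		fmt.Println("unexpected date output:", err)
		return
	}

	// date only reports whole seconds, so compare at that resolution.
	fmt.Println("time.Now():", goNow.UTC().Format(time.RFC3339))
	fmt.Println("date:      ", sysNow.UTC().Format(time.RFC3339))
	fmt.Println("difference:", sysNow.Sub(goNow.Truncate(time.Second)))
}
```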

so my last question then

does this issue persist on other languages?

I don't either. just a heads-up. :)

so you're taking `now` before a sleep and then doing math on it after

I don't think the assumption that it'll be almost exactly a minute before you get CPU time again is accurate, especially on android

oh oops

that's from when i was replacing time.Now() with the now var

this is the only solution i thought of

solution to what, I guess

is the problem the mismatch between the times things are emitted and the timekeeping in your program, or the fact that it's significantly off from 60s when your minute sleep returns?

it's not just 1 minute

it's sometimes been up to 10 minutes

when my phone was low on battery

so the inability to sleep for precise amounts of time is the problem

yeah

you just can't have expectations like this on android

the basic app model is you get CPU time only when the system decides you should, and it is free to decide you shouldn't when you're in the background, when the screen is off, etc

and if this is the only way to get the phone's current global time, then this is solved

i tested it, it works either way

seems right to me

with the go-specific asterisk about apparently getting UTC

there's a short workaround in the comments of that issue
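For reference, a commonly cited approach sketched in Go (not necessarily the exact workaround from that issue's comments): ask Android's property service for persist.sys.timezone and install the result as time.Local. The /system/bin/getprop path and the property name are assumptions about the device; fixLocalZone and zoneFromProp are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
	"os/exec"
	"strings"
	"time"
)

// zoneFromProp turns raw getprop output such as "Europe/Berlin\n" into a
// *time.Location. An empty value is rejected, because time.LoadLocation("")
// would silently return UTC.
func zoneFromProp(raw string) (*time.Location, error) {
	name := strings.TrimSpace(raw)
	if name == "" {
		return nil, errors.New("empty timezone property")
	}
	return time.LoadLocation(name)
}

// fixLocalZone (hypothetical helper) asks Android's property service for the
// configured zone and installs it as time.Local. On any failure it leaves
// time.Local unchanged and returns the error.
func fixLocalZone() error {
	// Assumption: getprop lives at this path and the property name is
	// persist.sys.timezone, as on typical Android builds.
	out, err := exec.Command("/system/bin/getprop", "persist.sys.timezone").Output()
	if err != nil {
		return err
	}
	loc, err := zoneFromProp(string(out))
	if err != nil {
		return err
	}
	time.Local = loc
	return nil
}

func main() {
	if err := fixLocalZone(); err != nil {
		fmt.Println("keeping the default zone:", err)
	}
	fmt.Println(time.Now().Format("2006-01-02 15:04:05 MST"))
}
```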

so weird it's not solved by golang tho

the one with timezone?

what's not solved?

go cannot solve this

android literally does not let your process run if it doesn't want to. android apps are written under this assumption; software that thinks it's on linux via termux can't be

the program has to be running in order to know the real time?

so I'm trying to separate the two issues you're talking about

"what time is it" works normally on android, with what you have, with the small issue of the tz always being UTC due to go weirdness

"wake me up in 60 seconds, and not significantly more than 60 seconds" is not a thing you can really expect to work on android, in general

yeah this

it's not an issue if it wakes up late by any amount; the issue is that sometimes it doesn't even know what the REAL time is

I didn't see that in the output you sent

I saw a program that records the time, sleeps for 60s, may sleep for a lot more than 60s, and then does math on the time from (60 + x)s ago and expects to see a delta of 60s

there's nothing suggesting the timestamps on these logs are wrong

(off for the day, will look after work)

the issue was resolved after taking this into account

thanks and sorry for wasting time

nw, glad you figured it out

@sephrain i saw that you figured it out, but basically, in your example you use time.Sleep() and count time based on that. you should know that Sleep() is not a reliable function to use for counting time (in any language I know), and your problem would also be reproducible on a desktop PC. To avoid this kind of problem in the future, you should preferably use the most popular existing datetime libraries and functions, either from the language's standard library or, at the very least, from a shell command that returns the result of the date command.

the most useful practical purpose of sleep() is for things that only need to pause (the entire thread) for roughly the number of seconds specified, when precision isn't needed in that particular case; it's not for precise timing.

note that the Go documentation does seem to reflect this -

https://pkg.go.dev/time#Sleep

"Sleep pauses the current goroutine for at least the duration d". this explicitly defines Go's time.Sleep() in a way that means it could take the exact amount of time, or it could also take any amount of time longer than that.

oh i should've checked the documentation earlier

i really thought this was android's issue, and i feel bad now for making this thread and making people waste time on this

still thank you for clearing this up though

it's probably slightly more noticeable on average on Android, but historically, for example, the comparison most often brought up is that if you use a laptop and then put it to sleep and wake it up again, the same or a similar problem will happen to the code as if you turned off the screen of Android while running the code. however, even without the PC going to sleep, sleep() timers on desktop and laptop PCs can drift a very small amount

I don't think I agree with that

for a normal linux machine, on mains power, not comically overloaded or otherwise suffering in pain, "at least d" is technically correct, but you can reasonably expect a small delta between the actual sleep period and what you asked for, if what you asked for is on the order of a second or more

I don't think it's accurate to attribute the difference here to the details of Sleep() rather than to android having much more intrusive control of scheduling

I don't think the laptop comparison is helpful, either. obviously, suspending the system will prevent a simple sleep() from waking you up when you expect. I don't think suspend is a good comparison for what android's doing, either; I think android's behavior comes from a much more complex and opinionated scheduling approach and doesn't resemble the computer being effectively off

tldr I think you had the correct take when you were blaming android :P

i disagree. the standard way sleep() is implemented is not a reliable timer on any (non-RT) operating system.

I am going to provide these articles as my evidence for my argument, because I agree with the author, and when I skimmed them, I believe they covered everything I'm aware of on the subject plus more.

Making an accurate Sleep() function (Programming and game development blog)

The perfect Sleep() function (Programming and game development blog)

ok, i skimmed both articles; they cover all the other stuff about OS schedulers, but not sleep mode, which is what we were talking about.

it doesn't matter that an Android phone's sleep mode works differently from a laptop's sleep mode. they both skew timers implemented with sleep() in the same direction, that is, slow.

and that is just one of the many factors that influence timers implemented with sleep().

you are arguing against a different argument than the one I made:

"you can reasonably expect a small delta between the actual sleep period and what you asked for, if what you asked for is on the order of a second or more"

those articles talk about inaccuracy on the scale of a millisecond or less. what we saw here is as much as 71s of additional delay on a sleep(60s)

I think you would have a very hard time reproducing an inaccuracy of that scale on a normal linux machine, on mains power, not comically overloaded or otherwise suffering in pain

my understanding of why that's possible on android is a scheduler difference: on a normal linux system, schedulers are essentially eager. if there's a task ready to run and somewhere to run it, it'll run ASAP. android, I think, tries very hard to bunch up work that's ready, under the basic principle that the way you protect a battery is by racing to idle.

that's a very different mechanism from being able to produce a 71s (or arbitrarily long) delay by suspending/hibernating the computer. obviously you can delay a sleep indefinitely by literally stopping time for the OS; that's not the same thing, and it's also not what happens to android when you turn your screen off

does that make more sense?

if i was strawmanning you, you might have also been strawmanning me.

what specific thing did I say that you said you disagreed with here?

they observed discrepancies on the order of a minute in a sleep(minute). this message says that the primary reason they should take away for this is the fact that sleep isn't precise (later clarified to be "not sub-millisecond-accurate" in those blog posts), that this problem is reproducible on a desktop, and that they should change libraries/use shell commands to address it.

you are correct that sleep isn't sub-millisecond accurate, but I don't think that's the reason they saw the minute-scale delays, I don't think it's reproducible on a desktop, and I don't think changing their time/thread or date libs would fix this on android

(they had another problem of recording the time incorrectly - I'm measuring off the timestamped logs, the first field in that screenshot)

this message specifically is them reaching the wrong conclusion, which is why I am disagreeing

we have different philosophical interpretations of the facts, and that leads us to explain the problem in different ways and reject each others' specific ways of phrasing the problem, even though the reality we are observing might be visually identical.

There is a pretty helpful analogy here:

Evolutionist terminology for a perceived phenomenon: "evolution"

Creationist terminology for perceived subcategories of the phenomenon: "microevolution and macroevolution"

my terminology for the perceived phenomenon: "clock drift"

your terminology for perceived subcategories of the phenomenon: "sub-millisecond imprecision and minute-scale delays"

The most generalized solution to clock drift is NTP time synchronization. the date command is a system source of data that the operating system is assumed to keep regularly synchronized via NTP. That's why I told them that for consistent and accurate timers, they need to synchronize their code with the operating system's date and time.

The comparison to religion is deliberate. I normally avoid bringing it up, but I think it is important for the clarity of the explanation because it emphasizes this: I do not believe you will agree with my position because your beliefs on the subject are different from mine, but I still wanted to make it clear what my beliefs are so you can understand them.

I don't think I agree with a single word of that :( clock drift is yet another separate topic; the articles you linked attribute the inaccuracy I'm agreeing exists to the frequency the scheduler runs at

I don't agree that clock drift is a separate topic. You can see how the differences between our philosophical and phrasing preferences on this subject make it difficult to form common ground.

no, not at all

that religion comparison is deeply unhelpful, actually

it's coming across as saying that any disagreement of fact here is actually a disagreement of perspective, and I don't think we can constructively continue after that

@sephrain I stand by what I wrote here - I do think you should interpret what you observed as specific to android

this difference is kind of key to android, and a lot of the recurring issues we help people with here - programs built for linux assume they get to run, assume they are unlikely to be killed by the OS unless the machine's in trouble, etc. but android's entire app model is "unless you're on-screen, you'll get CPU time when the OS says so, you can be killed whenever with little notice" - https://developer.android.com/develop/background-work/background-tasks is my favorite starting point for understanding the completely different way you have to write software for android due to that difference in model

termux is a bunch of programs written for linux, running inside an android app, with predictable conflicts. yours is in that category.

I could record a video of the problem being reproduced on a desktop (with no Android present),

but I am firmly convinced that even if I showed you such a video, you would post-facto invent a reason why it's not sufficient to meet your criteria for a reproduction, because you have already stated that your criteria for reproduction necessarily include Android,

so even if a non-Android device appears to visually exhibit the same behavior from the same Go program, you have already said many things in response to what I said like "i don't agree with a single word of that", so it's clear there is no common ground to even use as a point of reference for a video.

again: not going to be able to continue after that.

i'm just going to mention that i encountered the same issue with tickers on windows, and i found a lot of github issues related to this

to me, relying on sleeping like that is just bad design, and i overlooked how to write this kind of program, but either way i like both of ur points, and from them i found answers to everything. thank you all again

do you have equivalent output from windows showing delays of that scale / could you show me any github issues that show something like that?

I'm speaking mostly from a linux perspective; I know a lot less about windows, but 1) that level of inaccuracy seems unlikely from first principles and 2) the blog posts up there discuss sleep() being insufficiently accurate for a 60fps loop, which is true everywhere

really emphasizing that "sleep(1s/60) returned after 17.5 ms" and "sleep(60s) returned after 131s" are not the same problem and have completely different causes as far as I understand either

GitHub: time: Timer ticks at incorrect time. · Issue #19810 · golang/go (reported with go1.8 on linux/amd64)

so this comment is the bottom line, right? https://github.com/golang/go/issues/19810#issuecomment-291157031

1) the scale of the inaccuracy is a consistent 2%. your inaccuracy was highly variable and >100% in some loops.

2) the conclusion there was that a specific VM's clock was running 2% off, which is huge. for context, good ntp daemons track the inaccuracy of the hardware clock. for a few data points, chronyc tracking reports that my laptop's, server's, and desktop's hardware clocks are 1, 8, and 14 parts per million slow, respectively. the last one is actually pretty high and means my desktop would fall 1.2 seconds behind per day without ntp. that azure VM would run almost half an hour ahead per day. that is a (virtualized) hardware problem, not a property of using sleep() generally, or of anything specific to go

@sephrain does that make sense?

I'm still typing because I care about you coming away from this with a model of what happened that'll accurately predict what happens in the future - I don't think this is the right model. if you don't care about millisecond-scale inaccuracy, sleep()ing like you did is absolutely the right thing to do, and I don't think a single word has been sent here that explains you seeing minute-scale inaccuracy on android except the android-specific scheduler stuff I said.

if you need to do something as close as possible to exactly on the hour (zero minutes and zero seconds past), every hour, it's a bad move. if you are building a loop that needs to run at a precise 60Hz to drive a game at 60Hz, definitely a bad move. but that's not what you were doing.