Self-Hosted Supabase constant 100% CPU usage

Hello,

I’ve been testing Supabase as a replacement for another self-hosted BaaS. I set it up on my VPS (8 vCPU cores, 24GB RAM) using Docker, following the official documentation.

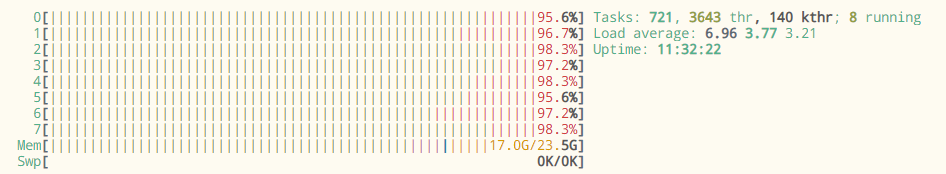

Everything runs without errors, but I noticed that all CPU cores stay at 100% usage constantly. It’s been several hours since starting Supabase, and the CPU load hasn’t gone down.

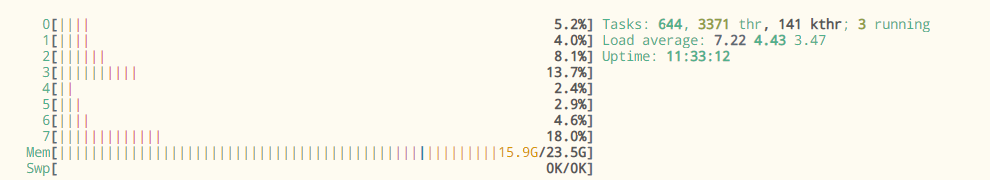
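To narrow down which Supabase container is actually using the CPU, Docker can report per-container usage with `docker stats --no-stream --format '{{.Name}} {{.CPUPerc}}'`. Below is a small sketch that filters that output. The `busy_containers` helper and its 50% default threshold are hypothetical names of my own, not part of Supabase or Docker. On a multi-core host, `CPUPerc` can go above 100%.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print containers whose CPU usage is above a threshold.
# Expects "NAME CPU%" lines on stdin, e.g. from:
#   docker stats --no-stream --format '{{.Name}} {{.CPUPerc}}'
# The threshold is a percentage (default 50) and may exceed 100 on multi-core hosts.
busy_containers() {
  local threshold="${1:-50}"
  awk -v t="$threshold" '{
    cpu = $2
    sub(/%$/, "", cpu)               # strip the trailing "%" sign
    if (cpu + 0 > t + 0) print $1, $2
  }'
}

# Usage (requires a running Docker daemon):
#   docker stats --no-stream --format '{{.Name}} {{.CPUPerc}}' | busy_containers 50
```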

When I stop the containers with `docker compose down`, the CPU usage drops back to normal.

This also happens on a completely clean install, with no data or configuration changes. As soon as I run `docker compose up`, all cores go back to 100%.

Does anyone know what might be causing this or how to fix it?

I'm attaching images of htop before and after shutting down the Supabase containers.