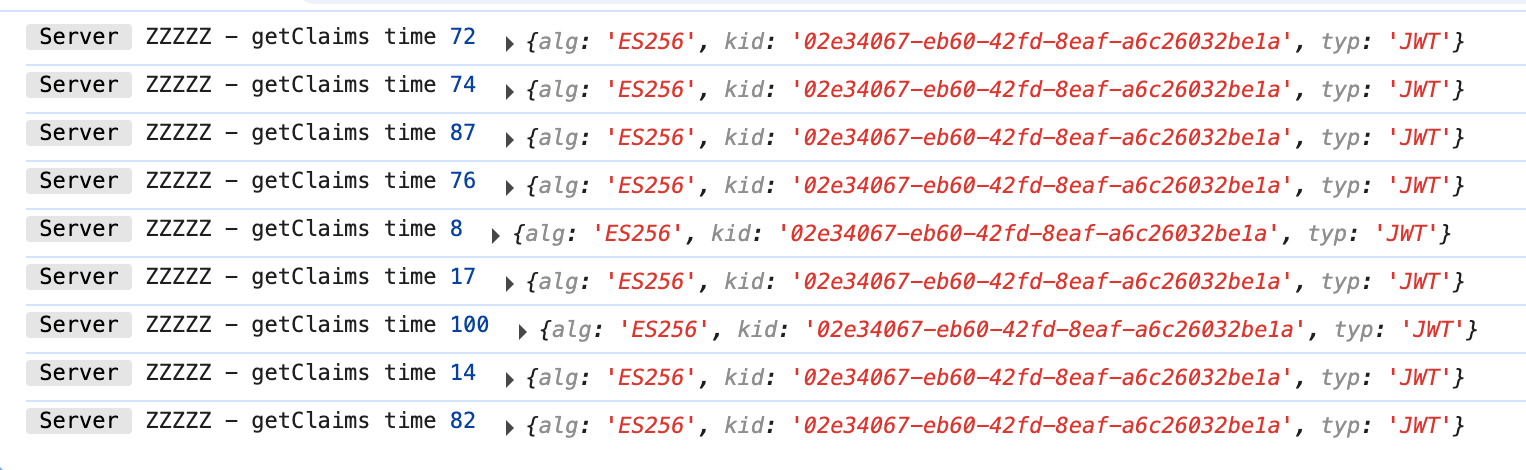

getClaims seems quite slow

I recently ran through the asymmetric JWT migration, following along with the video posted in the blog post. I'm pretty sure I have everything set up correctly; however, I'm seeing a major difference in performance relative to the video, which showed sub-10 ms times for getClaims.

For reference, literally the code I have for fetching claims looks like this:

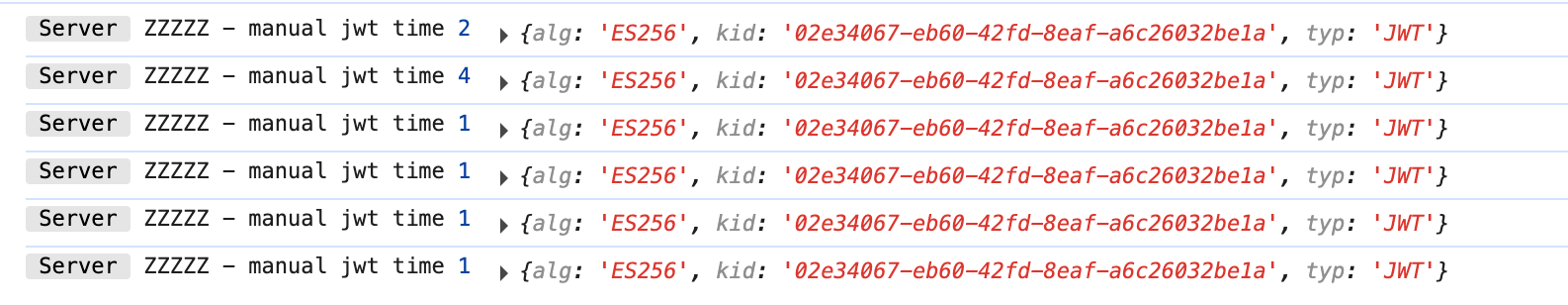

However, if I do it all manually (unclear if this is the right way to do it), I see great performance improvements:

This decoding method was mostly cribbed from the announcement blog post, so it's unclear whether I'm shortcutting some work here. It also has me concerned that I'm missing a step somewhere and that getClaims isn't working properly.
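
For context, here is a rough sketch of what fully local verification involves. It is not the exact code from the blog post or from my project. It assumes the project signs with ES256 and that the public JWK has already been fetched, and the names `importEs256Key` and `verifyJwtLocally` are made up for illustration. It uses only Node's built-in Web Crypto:

```typescript
import { webcrypto } from "node:crypto";

// Hypothetical claim shape for illustration; real tokens carry more fields.
type Claims = { sub?: string; exp?: number; [key: string]: unknown };

// Import a public ES256 JWK once so the resulting key can be reused across requests.
async function importEs256Key(jwk: webcrypto.JsonWebKey): Promise<webcrypto.CryptoKey> {
  return webcrypto.subtle.importKey(
    "jwk",
    jwk,
    { name: "ECDSA", namedCurve: "P-256" },
    false,
    ["verify"],
  );
}

// Verify signature and expiry locally, with no network call, and return the claims.
async function verifyJwtLocally(
  token: string,
  key: webcrypto.CryptoKey,
  nowSeconds: number = Math.floor(Date.now() / 1000),
): Promise<Claims> {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("malformed JWT");
  const [headerB64, payloadB64, signatureB64] = parts;

  // Assumption: only ES256 is accepted; anything else is rejected outright.
  const header = JSON.parse(Buffer.from(headerB64, "base64url").toString("utf8"));
  if (header.alg !== "ES256") throw new Error("unexpected alg");

  // A JWS ES256 signature is the raw r||s pair, which is what Web Crypto expects.
  const valid = await webcrypto.subtle.verify(
    { name: "ECDSA", hash: "SHA-256" },
    key,
    new Uint8Array(Buffer.from(signatureB64, "base64url")),
    new TextEncoder().encode(`${headerB64}.${payloadB64}`),
  );
  if (!valid) throw new Error("invalid signature");

  const claims = JSON.parse(Buffer.from(payloadB64, "base64url").toString("utf8")) as Claims;
  if (typeof claims.exp === "number" && claims.exp <= nowSeconds) {
    throw new Error("token expired");
  }
  return claims;
}
```

If the key is imported once and reused, each verification involves no network round trip, which would be consistent with the single-digit-millisecond timings. Re-fetching the key or calling the auth server on every request would add a round trip each time, so that may be where the difference comes from.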
