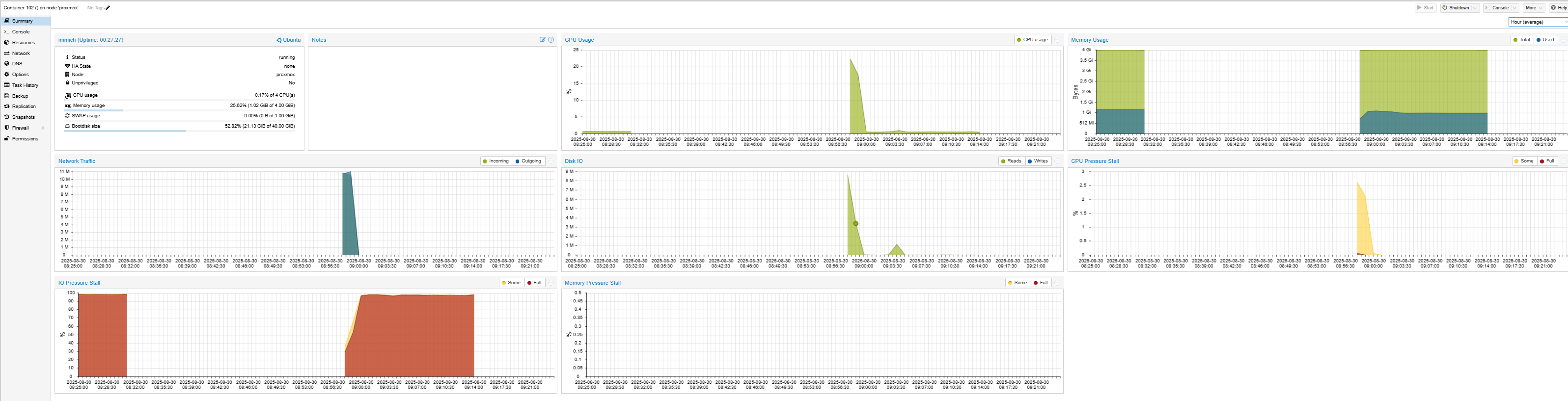

LXC Immich randomly experiencing IO Pressure Stall

For at least a few days, my LXC container running Immich has randomly been hitting IO pressure stalls. As soon as this happens, all other containers and VMs show as unknown on my Proxmox host. After that, the Immich UI eventually opens but does not render any photos. The container cannot be killed by any of the usual methods (the immich_server Docker container cannot be shut down at that point either), and the only way to recover is to hard reset the entire Proxmox node.

When I checked the logs, the only thing that happened before I restarted the node today was a DB backup:

```
[Nest] 8 - 08/30/2025, 12:00:00 AM LOG [Microservices:LibraryService] Initiating scan of all external libraries...
[Nest] 8 - 08/30/2025, 12:00:00 AM LOG [Microservices:LibraryService] Checking for any libraries pending deletion...
[Nest] 8 - 08/30/2025, 12:00:00 AM LOG [Microservices:SessionService] Deleted 0 expired session tokens
[Nest] 8 - 08/30/2025, 1:04:32 AM LOG [Microservices:VersionService] Found v1.140.0, released at 8/29/2025, 5:55:39 PM
[Nest] 8 - 08/30/2025, 2:00:00 AM LOG [Microservices:BackupService] Database Backup Starting. Database Version: 14
[Nest] 8 - 08/30/2025, 3:04:32 AM LOG [Microservices:VersionService] Found v1.140.0, released at 8/29/2025, 5:55:39 PM
[Nest] 8 - 08/30/2025, 5:04:32 AM LOG [Microservices:VersionService] Found v1.140.0, released at 8/29/2025, 5:55:39 PM
[Nest] 19 - 08/30/2025, 6:55:27 AM LOG [Api:EventRepository] Websocket Connect: LECAWsLuGJ2S1JpRAAAf
Initializing Immich v1.139.4
Detected CPU Cores: 16
Starting api worker
Starting microservices worker
```

Any ideas what else I could check?
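Regarding the container that cannot be killed: a process that ignores even SIGKILL is most likely stuck in uninterruptible sleep (state `D`), typically waiting on storage or network I/O, for example a hung NFS mount. A minimal sketch for listing such processes on the Proxmox host while the stall is ongoing; `filter_dstate` is a hypothetical helper name, and the snippet only reports, it does not recover anything:

```shell
#!/bin/sh
# Keep the header row plus any process whose STAT column starts with "D"
# (uninterruptible sleep). Reads `ps` output on stdin.
filter_dstate() {
  awk 'NR == 1 || $2 ~ /^D/'
}

# Run on the Proxmox host while the stall is happening; the wchan column
# shows the kernel function each process is blocked in.
#   ps -eo pid,stat,wchan:32,comm | filter_dstate
```

If the stuck processes show NFS- or ZFS-related wait channels, that narrows down which storage layer is blocking.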


:wave: Hey @arcz1,

Thanks for reaching out to us. Please carefully read this message and follow the recommended actions. This will help us be more effective in our support effort and leave more time for building Immich :immich:.

References

- Container Logs: `docker compose logs`

- Container Status: `docker ps -a`

- Reverse Proxy: https://immich.app/docs/administration/reverse-proxy

- Code Formatting: https://support.discord.com/hc/en-us/articles/210298617-Markdown-Text-101-Chat-Formatting-Bold-Italic-Underline#h_01GY0DAKGXDEHE263BCAYEGFJA
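To capture the container logs and status as plain-text files (easier to paste with proper code formatting than screenshots), something like the following could be run from the directory containing `docker-compose.yml`. The function name and output folder are hypothetical; `--no-color` and `--timestamps` are standard `docker compose logs` options:

```shell
#!/bin/sh
# Hypothetical helper: run from the directory that holds docker-compose.yml.
# Writes container status and logs to text files for pasting in a code block.
collect_immich_logs() {
  dir="${1:-immich-support}"
  mkdir -p "$dir"
  docker ps -a > "$dir/status.txt" 2>&1
  docker compose logs --no-color --timestamps > "$dir/logs.txt" 2>&1
  echo "Wrote $dir/status.txt and $dir/logs.txt"
}

# Usage: collect_immich_logs /tmp/immich-support
```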

Checklist

I have...

1. :blue_square: verified I'm on the latest release (note that mobile app releases may take some time).

2. :blue_square: read applicable release notes.

3. :blue_square: reviewed the FAQs for known issues.

4. :blue_square: reviewed Github for known issues.

5. :blue_square: tried accessing Immich via local IP (without a custom reverse proxy).

6. :blue_square: uploaded the relevant information (see below).

7. :blue_square: tried an incognito window, disabled extensions, cleared mobile app cache, logged out and back in, different browsers, etc. as applicable

(an item can be marked as "complete" by reacting with the appropriate number)

Information

In order to be able to effectively help you, we need you to provide clear information to show what the problem is. The exact details needed vary per case, but here is a list of things to consider:

- Your docker-compose.yml and .env files.

- Logs from all the containers and their status (see above).

- All the troubleshooting steps you've tried so far.

- Any recent changes you've made to Immich or your system.

- Details about your system (both software/OS and hardware).

- Details about your storage (filesystems, type of disks, output of commands like `fdisk -l` and `df -h`).

- The version of the Immich server, mobile app, and other relevant pieces.

- Any other information that you think might be relevant.
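For the storage details, a rough sketch that gathers the suggested commands into one report that can be pasted as a single code block. `lsblk` is not in the list above but is a common companion; `fdisk -l` generally needs root, so a failing command is noted rather than aborting the report. `collect_storage_info` is a hypothetical name:

```shell
#!/bin/sh
# Gather storage details into one report; failures are noted, not fatal.
collect_storage_info() {
  for cmd in "df -h" "fdisk -l" "lsblk -o NAME,SIZE,TYPE,FSTYPE,MOUNTPOINT"; do
    echo "### $cmd"
    # $cmd is left unquoted on purpose so it splits into command + arguments.
    $cmd 2>&1 || echo "(failed: $cmd)"
    echo
  done
}

# Usage: collect_storage_info > storage.txt
```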

Please paste files and logs with proper code formatting, and especially avoid blurry screenshots.

Without the right information we can't work out what the problem is. Help us help you ;)

If this ticket can be closed, you can use the /close command, and re-open it later if needed.

Usually it happens some time after a restart, but today it happened again almost immediately (see the other screenshot attached).

```
[Nest] 18 - 08/30/2025, 6:57:50 AM LOG [Api:Bootstrap] Immich Server is listening on http://[::1]:2283 [v1.139.4] [production]
[Nest] 18 - 08/30/2025, 6:58:02 AM LOG [Api:EventRepository] Websocket Connect: hxb3WwICgyhCca-oAAAB
[Nest] 7 - 08/30/2025, 6:58:16 AM LOG [Microservices:BackupService] Database Backup Starting. Database Version: 14
[Nest] 18 - 08/30/2025, 6:59:07 AM LOG [Api:EventRepository] Websocket Connect: MSYf7CZb9InHAoxnAAAD
[Nest] 18 - 08/30/2025, 6:59:37 AM LOG [Api:SystemConfigService~sj6yzegi] LogLevel=log (set via system config)
[Nest] 18 - 08/30/2025, 7:08:29 AM LOG [Api:EventRepository] Websocket Disconnect: hxb3WwICgyhCca-oAAAB
[Nest] 18 - 08/30/2025, 7:08:29 AM LOG [Api:EventRepository] Websocket Disconnect: MSYf7CZb9InHAoxnAAAD
[Nest] 18 - 08/30/2025, 7:22:18 AM LOG [Api:EventRepository] Websocket Connect: 0ZHtLmF6whw_EyPkAAAF
[Nest] 18 - 08/30/2025, 7:22:23 AM LOG [Api:EventRepository] Websocket Disconnect: 0ZHtLmF6whw_EyPkAAAF
[Nest] 18 - 08/30/2025, 7:24:06 AM LOG [Api:EventRepository] Websocket Connect: RWJyAjC4hIgIBVhOAAAH
[Nest] 18 - 08/30/2025, 7:24:08 AM LOG [Api:EventRepository] Websocket Connect: c4izKLugtbctvXPQAAAJ
```

I suspect it could be caused by the DB backup, but interestingly, I disabled the automatic backup job before restarting the Proxmox host today. In the meantime, I have enabled DEBUG logs to see whether anything extra shows up when the issue happens.
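The "IO Pressure Stall" graph in Proxmox VE 9 is presumably based on the kernel's pressure stall information (PSI), exposed in `/proc/pressure/io`: `some` is the share of time at least one task was stalled on I/O, `full` the share of time all non-idle tasks were. A minimal sketch, assuming a kernel with PSI enabled, that logs the 10-second averages so they can be lined up with the DEBUG logs by timestamp; the function names and log path are hypothetical:

```shell
#!/bin/sh
# Print the avg10 value from the "some" or "full" line of PSI data on stdin.
# avg10 = percentage of the last 10 s in which tasks were stalled on I/O.
psi_avg10() {
  awk -v kind="$1" '$1 == kind {
    for (i = 2; i <= NF; i++)
      if ($i ~ /^avg10=/) { sub(/^avg10=/, "", $i); print $i }
  }'
}

# Print one timestamped sample every 10 s until killed.
# Optional argument: path to a PSI file (defaults to the system-wide one).
watch_io_pressure() {
  psi="${1:-/proc/pressure/io}"
  while :; do
    printf '%s some=%s full=%s\n' "$(date '+%F %T')" \
      "$(psi_avg10 some < "$psi")" "$(psi_avg10 full < "$psi")"
    sleep 10
  done
}

# Usage on the Proxmox host (log path is only an example):
#   watch_io_pressure >> /root/io-pressure.log &
```

cgroup v2 exposes the same format per cgroup in an `io.pressure` file; passing the Immich container's file (on Proxmox, assumed to be under `/sys/fs/cgroup/lxc/<vmid>/`) would show whether that container is the one stalling.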

Is this new in Proxmox 9? I can't see that panel 👀

Yes, it is 9.0.6

What hardware are you hosting on?

AMD Ryzen 7 5700U, 16 GB of RAM, and two ZFS RAID 1 pools: a 500 GB NVMe pool for VMs and containers, and a 4 TB HDD pool for data. For Immich, there is a mount point on an OpenMediaVault NFS share that holds my photos and videos. That part lives on the HDDs, while the LXC itself is on the NVMe drives.

I would suggest putting the backup folder on the NVMe. I assume DB_DATA_LOCATION is also on the NVMe?
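A quick way to check where each path configured in `.env` actually lives, assuming the plain `KEY=value` `.env` from Immich's example compose setup and that it is run from the compose directory so relative paths such as `./postgres` resolve. As far as I know, the automatic DB dumps are written to a `backups` folder under `UPLOAD_LOCATION`, so if that resolves to the NFS mount, the dumps go over the network to the HDD pool. `show_immich_mounts` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: report the filesystem behind each Immich path in .env.
show_immich_mounts() {
  env_file="${1:-.env}"
  for key in DB_DATA_LOCATION UPLOAD_LOCATION; do
    # Last assignment wins; strip optional surrounding quotes.
    path=$(sed -n "s/^${key}=//p" "$env_file" | tail -n 1 | tr -d "\"'")
    if [ -z "$path" ]; then
      echo "$key: not set in $env_file"
    elif [ -e "$path" ]; then
      # df -P prints one stable line per path: device ... mount point ($6).
      df -P "$path" | awk -v k="$key" -v p="$path" \
        'NR == 2 { print k ": " p " -> " $1 " on " $6 }'
    else
      echo "$key: $path does not exist"
    fi
  done
}

# Usage (inside the LXC, from the compose directory): show_immich_mounts
```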

You only have 4 GB of RAM for the LXC. That is far too small.

I assume that is causing issues as well.

I need to check it. Thanks for the hint.

Well, looking at the usage, it does not exceed 2 GB, but I can try assigning more.
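A usage graph may average out short spikes, for example during a DB dump. On cgroup v2, the kernel records the highest usage since the cgroup was created in `memory.peak` (kernels 5.19 and newer), and cgroup accounting includes page cache, so these numbers can be higher than what tools inside the container report. A sketch to run on the Proxmox host; the `/sys/fs/cgroup/lxc/<vmid>` layout is an assumption about how Proxmox places container cgroups, `101` is a placeholder VMID, and the function names are hypothetical:

```shell
#!/bin/sh
# Convert a cgroup v2 memory value (bytes, or the literal "max") to MiB.
to_mib() {
  case "$1" in
    max) echo "unlimited" ;;
    *) echo "$(( $1 / 1048576 )) MiB" ;;
  esac
}

# Report current, peak and limit for one container. CGROUP_ROOT defaults to
# /sys/fs/cgroup/lxc (assumed Proxmox layout); files that are missing are skipped.
lxc_memory_report() {
  cg="${CGROUP_ROOT:-/sys/fs/cgroup/lxc}/$1"
  for f in memory.current memory.peak memory.max; do
    if [ -r "$cg/$f" ]; then
      echo "$f: $(to_mib "$(cat "$cg/$f")")"
    fi
  done
}

# Usage on the Proxmox host:
#   lxc_memory_report 101
# To raise the container's RAM to 8 GiB (pct takes the value in MB):
#   pct set 101 --memory 8192
```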