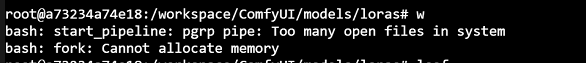

Too many open files on GPU pod A6000

On an A6000 pod, I frequently get "Too many open files" and "cannot allocate memory" errors, even though ComfyUI is not using all of the available VRAM or RAM.

It usually happens after the sampler has finished generating, during the interpolation step.

OS: Ubuntu 22.04 LTS

Software: ComfyUI in a venv

Model: Wan 2.2

Volume: Network volume
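
Since "Too many open files" usually points at the per-process file descriptor limit rather than at VRAM or RAM, here is a minimal sketch for checking that limit on Linux. It is only an assumption that this limit is the cause here. The helper names `fd_usage` and `raise_soft_limit` are hypothetical, and the code reports on the process it runs in, so it would have to be run from inside the ComfyUI Python process to say anything about ComfyUI itself.

```python
import os
import resource


def fd_usage():
    """Return (open_count, soft_limit, hard_limit) for the current process.

    Linux only: counts the entries in /proc/self/fd. The count includes the
    descriptor that os.listdir() opens temporarily to read the directory.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    open_count = len(os.listdir("/proc/self/fd"))
    return open_count, soft, hard


def raise_soft_limit():
    """Try to raise the soft RLIMIT_NOFILE up to the hard limit.

    Returns the soft limit in effect afterwards. If the hard limit is
    unlimited or the kernel refuses the change, the limit is left as it was.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY and (soft == resource.RLIM_INFINITY or soft < hard):
        try:
            resource.setrlimit(resource.RLIMIT_NOFILE, (hard, hard))
        except (ValueError, OSError):
            pass  # not permitted in this environment; keep the old limit
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


if __name__ == "__main__":
    count, soft, hard = fd_usage()
    print(f"open descriptors: {count}, soft limit: {soft}, hard limit: {hard}")
    print(f"soft limit after raise attempt: {raise_soft_limit()}")
```

If the open count sits close to the soft limit during interpolation, that would support the descriptor-limit theory; if it stays far below, the cause is probably elsewhere.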

Any clue is welcome.