Workers stuck at "Running" indefinitely until removed by hand

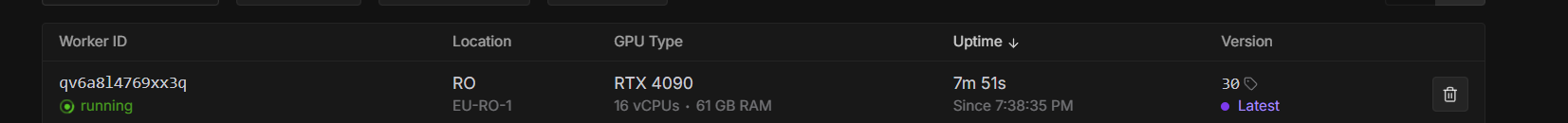

It was all fine for a month or so, but over the last 3-4 days many of my workers have randomly started getting stuck at "Running" with errors like the one above (this is worker qv6a8l4769xx3q). They stay in this state indefinitely, racking up uptime, and they seem to affect the queue: I've noticed many jobs waiting for minutes despite having 5 idle workers ready.


the container image itself is nothing special, and it worked without problems before. No changes were made to the image (I even increased the required disk size just in case)

after a while I got this in the worker's log, but it was still shown as idle:

it's happening again: one request in the queue and one running worker that's doing nothing

this time there's nothing in the log at all

It keeps happening to new workers for no apparent reason

I'm also getting many of these:

absolutely no changes were made on my side before it all started happening

am I being charged for this as well?

Can you email help@Runpod.io? We will get all of this sorted and I'll make sure they refund you for everything you've spent on this.

just sent an email with the same subject as this topic. Please look into it, as it's severely affecting my production service

(ticket # 23140)

hi @Dj, I'm getting the same thing with my serverless endpoint:

- It started happening more often after I updated my base image to `pytorch/pytorch:2.8.0-cuda12.9-cudnn9-runtime` (to get compatibility with 5090s)

- it happens from time to time; most of the time it works without issues

- I do have a filter set to only allow CUDA 12.9 in my endpoint settings

probably the worst part for me is that the worker gets stuck in "Running": the worker process doesn't notice that ComfyUI died and just keeps waiting. I guess that's something I can look into myself

but why does that even happen in the first place? is the 12.9 tag inaccurate on some machines? is there more nuance to this?
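One way to keep the worker process from waiting forever on a dead ComfyUI is to bound the startup wait and check whether the child process has exited on every poll. This is a minimal stdlib sketch, assuming ComfyUI is launched with `subprocess.Popen` and answers HTTP on some local URL; the function name `wait_for_server`, the URL, and the timeout values are illustrative assumptions, not part of Runpod's or ComfyUI's API.

```python
import time
import urllib.request


def wait_for_server(proc, url, timeout_s=120.0, interval_s=1.0):
    """Return True once `url` answers, False if `proc` dies or the timeout passes.

    `proc` is assumed to be the subprocess.Popen handle of the server
    (e.g. ComfyUI); `url` is a hypothetical health URL such as
    "http://127.0.0.1:8188/".
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        # poll() returns the exit code once the child has exited, else None,
        # so a crashed server is detected instead of waited on forever.
        if proc.poll() is not None:
            return False
        try:
            with urllib.request.urlopen(url, timeout=interval_s):
                return True
        except OSError:
            # URLError, HTTPError, refused connections and socket timeouts
            # are all OSError subclasses: treat them as "not ready yet".
            time.sleep(interval_s)
    return False
```

When this returns False, the handler can report a failure for the job rather than hanging in "Running".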

I think I may have fixed this in my custom image by returning an error (`return {"error": "ComfyUI server unavailable - failed to start or connect after maximum retries"}`) from my runpod handler instead of calling `os.exit(1)`
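A rough sketch of that pattern: retry a readiness check a bounded number of times, and if the server never comes up, return an `"error"` payload so the job ends as failed and the worker process stays alive. The probe (`server_is_up`), the factory `make_handler`, and the retry counts are hypothetical placeholders. The commented-out lines show the standard `runpod.serverless.start({"handler": ...})` entry point, kept as comments so the sketch runs without the `runpod` package.

```python
import time
from typing import Any, Callable, Dict


def make_handler(server_is_up: Callable[[], bool], max_retries: int = 5, delay_s: float = 2.0):
    """Build a Runpod-style handler(job) that reports failure instead of exiting.

    `server_is_up` is a hypothetical probe, e.g. an HTTP check against ComfyUI.
    """
    def handler(job: Dict[str, Any]) -> Dict[str, Any]:
        for attempt in range(max_retries):
            if server_is_up():
                break
            if attempt < max_retries - 1:
                time.sleep(delay_s)
        else:
            # Loop finished without a break: the server never came up.
            # Return an error payload instead of terminating the process.
            return {"error": "ComfyUI server unavailable - failed to start or connect after maximum retries"}
        # Placeholder for the real work (submitting the workflow, collecting outputs).
        return {"received": job.get("input")}

    return handler


# Hypothetical wiring in a real worker (needs the runpod package and a real probe):
# import runpod
# runpod.serverless.start({"handler": make_handler(my_comfy_probe)})
```

The design choice is that a failed job is cheap and visible, while a process exit (or a hang) can leave the worker in a state the scheduler misreads as busy.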

it's probably still happening, but I'm not feeling the impact nearly as much