experimental_output support for other models

I would love to see this supported for models other than OpenAI.

I am not sure, but I suspect that under the hood it depends on the structured output option of OpenAI models, so naturally it doesn't work with other models like Gemini.

It would be cool if the option could detect that the provided model is not OpenAI and switch to a slightly different strategy that doesn't use structured output.

My use case is basically

1. Gemini + structured output + tool calls

and I would love to keep using the structured output option for this.

Right now, since it only works for OpenAI models, I have to fall back to manually parsing the response into a zod schema and describing the output schema in the prompt, so I can mix OpenAI and Gemini models.
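For readers following along, the manual fallback described above could look roughly like the sketch below. It is only an illustration under assumptions: the `LessonPlan` shape, `buildPrompt`, `extractJson` and `parseLessonPlan` are hypothetical names, and the hand-written type guard stands in for a zod schema's `safeParse` so the snippet runs without dependencies.

```typescript
// Hypothetical output shape for illustration; the thread uses a zod schema instead.
interface LessonPlan {
  title: string;
  steps: string[];
}

// Describe the expected output in the prompt so any model (OpenAI, Gemini, ...) sees it.
function buildPrompt(task: string): string {
  return [
    task,
    "Reply with a single JSON object and nothing else:",
    '{ "title": string, "steps": string[] }',
  ].join("\n");
}

// Pull the outermost {...} span out of the reply, tolerating markdown fences or chatter around it.
function extractJson(reply: string): unknown {
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end <= start) {
    throw new Error("No JSON object found in model reply");
  }
  return JSON.parse(reply.slice(start, end + 1));
}

// Hand-written type guard standing in for `schema.safeParse(value)` from zod.
function isLessonPlan(value: unknown): value is LessonPlan {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.title === "string" &&
    Array.isArray(v.steps) &&
    v.steps.every((s) => typeof s === "string")
  );
}

// Parse and validate the model reply, throwing when it does not match the expected shape.
function parseLessonPlan(reply: string): LessonPlan {
  const value = extractJson(reply);
  if (!isLessonPlan(value)) {
    throw new Error("Model reply does not match the expected schema");
  }
  return value;
}
```

The same two steps (schema hint in the prompt, validation of the reply) work with any provider, which is why this kind of duct tape can mix OpenAI and Gemini models, at the cost of maintaining it by hand.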

From what I saw, it seems the experimental_output option adds a second system prompt containing the given schema to the messages.

I would like this to be supported out of the box, since it always feels like a core feature is missing here, like parsing the response into zod. I don't like that I still have to duct-tape that myself 😄

34 Replies

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/7560

Hi, @Steffen! You should have a look at the structuredOutput provided by Mastra. It allows for providing any model you want. There's an example in the docs that uses openai, but you can use any ai-sdk compatible model provider (e.g. @ai-sdk/google). See this link for the example: https://mastra.ai/en/docs/agents/output-processors#structured-output-processor

@Romain I already saw that, but I assumed it doesn't support tool calls when using Gemini + structured output + tool calls?

@Romain or am I wrong there?

Hi, @Steffen ! Let me double check that

I just tested this out using gemini-2.5-flash, structuredOutput and tool calls, and it does work. I simply used the usual weather agent, which has a tool, and plugged in gemini and structuredOutput like below, and it worked fine 😉

@Romain thanks. Will try it soon 🙂

Is this only supported in generateVNext? I just tried it and it seemed not to respect it

@Romain

It gave me my schema in the wrong language 😄 The prompt itself is German (coming from Portkey), while the output schema is in English

@Steffen yes, it's only in generateVNext/streamVNext, but those will become the new stream/generate the week after next

structuredOutput also has an instructions key; you can use that to give the JSON output LLM custom instructions

Nice, will try that next week. Thanks

ok, I tried generateVNext

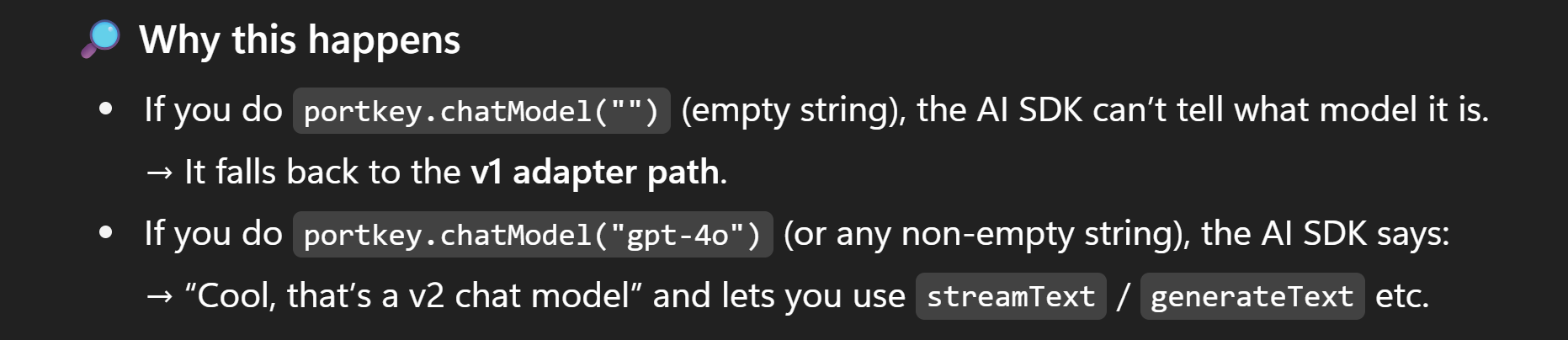

Error executing step generate-lesson-plan: Error: V1 models are not supported for streamVNext. Please use stream instead.

at Agent.streamVNext (file:///Users/sunger/Desktop/toteach/toteach-agents/node_modules/@mastra/core/dist/chunk-HHRWDBFK.js:554:1648)

I am using @portkey-ai/vercel-provider with gemini-2.5-flash / gpt-4.1

I don't really understand what the root of the problem is here.

Why does it say streamVNext even though I use generate? I guess it always uses stream behind the scenes or something

"@mastra/core": "^0.15.2",

"@mastra/libsql": "^0.13.7",

"@mastra/loggers": "^0.10.9",

"@mastra/memory": "^0.14.2",

I will update tomorrow to 0.16, when generateVNext replaces generate, but there is still this problem with the V1 models. I hope you can tell me what this is about and how to fix it 😄 I would really love this structured output to work across all the models (Gemini / OpenAI + tool calls), so I don't have to implement some custom parsing...

Also, it's a bit confusing that structuredOutput is available in .generate even though it only works in generateVNext, as you said

@Tyler @Romain

Yeah, that is confusing @Steffen, thanks for pointing it out, we'll remove it! As for the V1 model error, that message is also confusing. We're going to improve that error this week, but it means your model provider package needs to be updated to work with AI SDK v5 (which is what streamVNext is compatible with)

I see, so the Portkey package is the culprit. Thanks
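One quick way to see which side of that line a provider falls on is to look at the model object's `specificationVersion`: AI SDK v4 providers produce models marked `"v1"`, AI SDK v5 providers produce `"v2"`. The sketch below is a small diagnostic helper, not Mastra's actual check; `ModelLike`, `isV5Model` and `describeModel` are hypothetical names, and the assumption that VNext accepts exactly the `"v2"` models is inferred from the error message in this thread.

```typescript
// Minimal structural view of an AI SDK language model object (v4 and v5 both expose these fields).
interface ModelLike {
  specificationVersion?: string;
  provider?: string;
  modelId?: string;
}

// AI SDK v4 providers emit "v1" models; AI SDK v5 providers emit "v2" models.
function isV5Model(model: ModelLike): boolean {
  return model.specificationVersion === "v2";
}

// Build a one-line summary that is handy to log when debugging the "V1 models are not supported" error.
function describeModel(model: ModelLike): string {
  const spec = model.specificationVersion ?? "unknown";
  const name = `${model.provider ?? "unknown-provider"}/${model.modelId ?? "unknown-model"}`;
  const verdict = isV5Model(model)
    ? "AI SDK v5 model, expected to work with generateVNext/streamVNext (assumption)"
    : "not an AI SDK v5 model, the provider package likely needs an update";
  return `${name} (spec ${spec}): ${verdict}`;
}
```

Logging `describeModel(model)` for the model passed to the agent shows whether the provider package, rather than the agent code, is what triggers the error.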

Yeah, I was just taking a look and I'm not sure if they have an ai v5 compatible version 🤔

their last publish was 7 months ago (latest version: 2.1.0) https://www.npmjs.com/package/@portkey-ai/vercel-provider?activeTab=versions

Yeah, I saw that. So I guess I am out of luck there

you might be able to use the openai compatible provider

not sure about the image model part but the other parts seem to be openai compatible (from the types in their code) https://github.com/Portkey-AI/vercel-provider/blob/main/src/portkey-provider.ts#L26-L44

and there's an AI SDK v5 version of the openai compatible provider

yeah perhaps the image model part is the only one that wouldn't be compatible https://github.com/Portkey-AI/vercel-provider/blob/main/src/portkey-provider.ts#L103

Will give it a try tomorrow. When tomorrow will you drop the Mastra update that switches .generate() to VNext?

we're delaying til next week to make sure it's rock solid

we want the docs quality to be higher and we have a list of bugs to fix as well

Ohh okay. Thanks for the heads up 😄

tbd but we may delay it again after that, hopefully not though! We won't move it over until we're totally confident in replacing the main one

np, I've got an alternative in the meantime. I'll keep an eye on this

sounds good! lmk if I can help

sure thanks !

ok, passing the model doesn't work either...

This seems to be the only solution right now 😄

https://github.com/Portkey-AI/vercel-provider/issues/11

Didn't work after all... I tried using generateVNext with the custom OpenAI provider mentioned in the issue, and it still complains about the V1 model. Guess I will just wait and use my manual duct tape for now 🙂

do you mean you ran into issues using the openai-compat provider?

Yes, I used the openai-compat provider (Portkey + generateVNext) and still got the Model V1 error

that's odd, I used it the other day with streamVNext 🤔 I'll see if I can reproduce it on my end

any chance you remember which package version of openai-compatible you used?

I think I just tried the latest; I did a fresh install

ok cool, thanks! I'll try it out

I think it was something like this. In the headers I added the API key. Maybe I made a mistake somewhere

@Steffen I can't reproduce the openAI compatible issue; it works as intended for me. Can you paste the versions you're using and log out the provider object?

ok, I have to bake it in again. Give me a sec @Daniel Lew
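As a rough illustration of this kind of setup (not the snippet originally posted), the sketch below builds the settings one might hand to `createOpenAICompatible` from `@ai-sdk/openai-compatible`. The gateway URL `https://api.portkey.ai/v1`, the `x-portkey-api-key` and `x-portkey-virtual-key` header names, and the helper name `portkeySettings` are assumptions to check against Portkey's own docs.

```typescript
// Subset of the settings accepted by createOpenAICompatible (name, baseURL, headers).
interface CompatSettings {
  name: string;
  baseURL: string;
  headers: Record<string, string>;
}

// Build provider settings that route OpenAI-compatible requests through Portkey.
// Assumption: Portkey authenticates via the "x-portkey-api-key" header on its /v1 gateway.
function portkeySettings(portkeyApiKey: string, virtualKey?: string): CompatSettings {
  if (!portkeyApiKey) {
    throw new Error("Missing Portkey API key");
  }
  const headers: Record<string, string> = { "x-portkey-api-key": portkeyApiKey };
  if (virtualKey) {
    // Optional: selects the upstream provider credentials stored in Portkey (assumed header name).
    headers["x-portkey-virtual-key"] = virtualKey;
  }
  return { name: "portkey", baseURL: "https://api.portkey.ai/v1", headers };
}

// Usage (not executed here; requires @ai-sdk/openai-compatible ^1.x, the AI SDK v5 line):
//   import { createOpenAICompatible } from "@ai-sdk/openai-compatible";
//   const portkey = createOpenAICompatible(portkeySettings(process.env.PORTKEY_API_KEY ?? ""));
//   const model = portkey("gemini-2.5-flash"); // model id naming depends on the Portkey setup
```

Keeping the settings in a plain function makes it easy to log them (minus the key) when comparing a working and a non-working setup.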

"@ai-sdk/openai-compatible": "^1.0.18",

Not sure why, but you are right. I implemented what I posted above again... maybe I had the wrong version before or something. It works now 😄

@Daniel Lew and @Tyler thx. Feels good now

hell yea! glad to hear it's working!