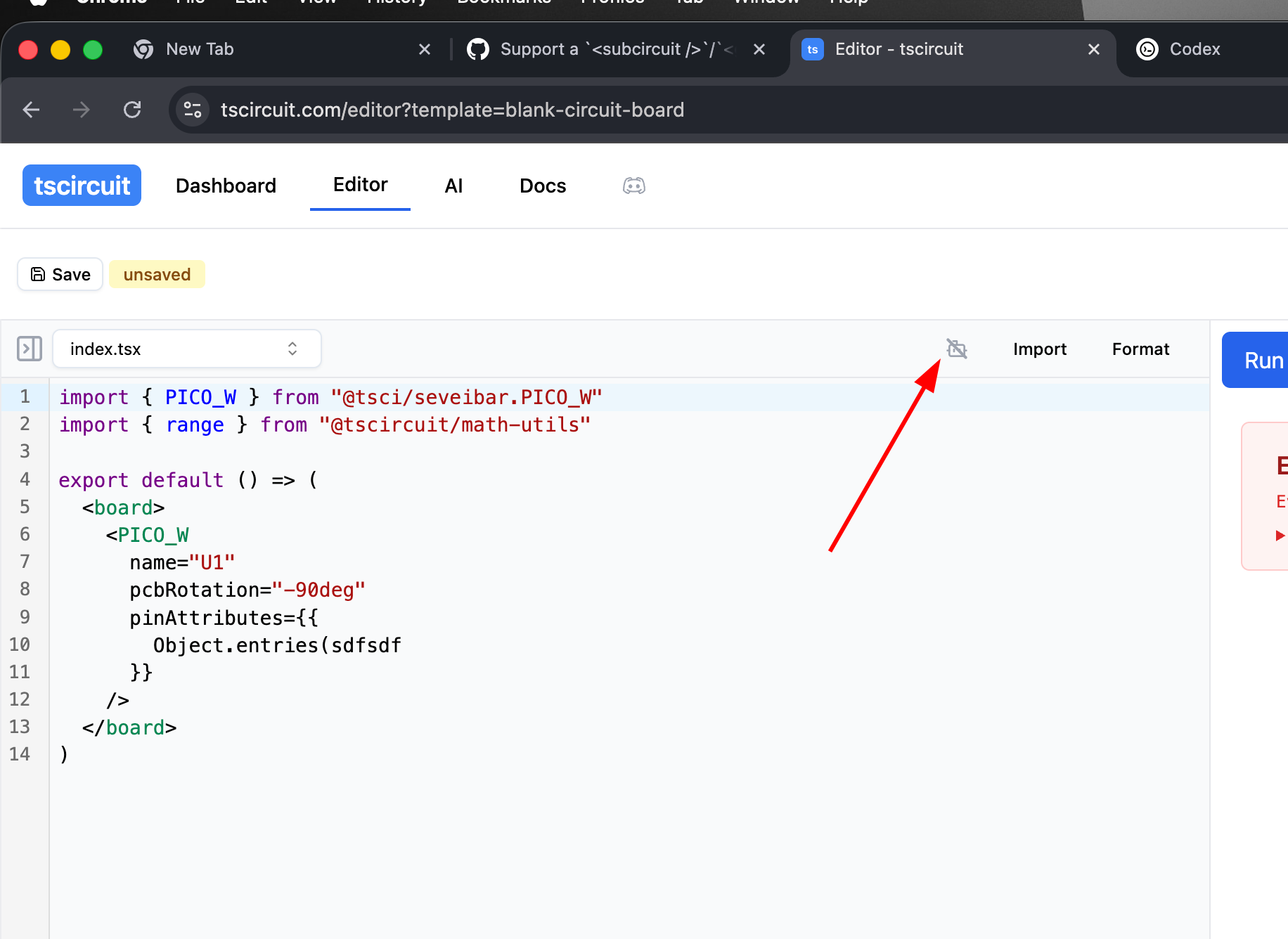

Seve - @Abse this isn't working on prod, it's b...

@Abse this isn't working on prod, it's been there a long time, I don't think we should ship broken features. We need to decide if we're going to remove this or fix it

39 Replies

so I've tested it for a while on local and the hallucination rate is very high. I'm not sure we can fix it, I tried lots of messages but nothing fixed the issue, wdyt?

can you figure out how to get it fixed on prod

btw, we should never ship a broken feature to prod this long

like you just want it to work ?

yea i want to see the feature

yeah

and i don't want useless buttons on prod

just annoyed because probably lots of users are pressing this going "wtf"

i want to see this feature

I mean if you add an API key for openrouter I think it should work and we can check if we want to keep it or remove it

where does it need to be added

does an endpoint need to be implemented?

no one sec

VITE_OPENROUTER_API_KEY

this is the .env

fake-snippets-api\routes\api\autocomplete\create_autocomplete.ts

the endpoint does exist

on fake, not sure if you guys implemented it on the backend tho?

+ we need to guard against spamming right?

VITE_OPENROUTER_API_KEY doesn't make sense

that would be insecure

it should just be OPENROUTER_API_KEY and should not be passed to the frontend

yeah it's not passed

it can never be passed

that should be removed

or renamed OPENROUTER_API_KEY

I mean the API key is in .env and the endpoint is in the backend

yeah I can rename it

yes

VITE_* always means that it's being passed to the frontend

oh
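For reference, Vite exposes any variable prefixed with `VITE_` to client code through `import.meta.env`, which is why a key under that name counts as leaked. A minimal sketch of a startup guard that flags such names follows. The function name `findExposedSecrets` and the `KEY`/`SECRET`/`TOKEN` heuristic are hypothetical, not something from the repo.

```typescript
// Hypothetical guard: the helper name and the secret-name heuristic are
// illustrative assumptions, not part of the actual codebase.
type Env = Record<string, string | undefined>

const SECRET_HINTS = ["KEY", "SECRET", "TOKEN"]

// Returns the names of variables that Vite would expose to client code
// (prefix "VITE_") and whose names suggest they hold a secret.
function findExposedSecrets(env: Env): string[] {
  return Object.keys(env).filter(
    (name) =>
      name.startsWith("VITE_") &&
      SECRET_HINTS.some((hint) => name.includes(hint)),
  )
}
```

Calling it with the server's environment object at boot and refusing to start when the list is non-empty would catch a `VITE_OPENROUTER_API_KEY` before it ships.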

there's no implementation on the backend for create_autocomplete rn

i also noticed that when i hit the bot my editor started to lag a lot

but idk maybe unrelated

could be a rendering issue

it does work after renaming

working locally doesn't matter at all to me

like the only way it helps users is on prod

you don't have backend access so it's not really your fault, but it shouldn't have shipped without working

ofc, so how do we move it from fake to prod? I've never worked on the backend. I remember you talking to @DOPΣ about it IIRC

yeah for sure

yea i'm frustrated but it's clear that it's more or less a project management issue

that was a mistake

but it's very frustrating still haha

true it is

we needed a checklist issue and delegation for the different parts

ty

no problem

btw this prompt should work much much better if adapted https://docs.tscircuit.com/intro/quickstart-ChatGPT

Quickstart ChatGPT | tscircuit docs

Preview and iterate on tscircuit designs directly inside ChatGPT using a small CDN bundle and a simple repo convention.

i noticed you're loading the props readme, that's super slow and way way too verbose

we've experimentally tested the chatgpt prompt in benchmarking

ok let me do this

so we don't need the props anymore, just this prompt and more testing?

for prompts we have a repo that runs benchmarks https://github.com/tscircuit/tscircuit-prompts

GitHub - tscircuit/tscircuit-prompts: Development of prompts that enable AI to write tscircuit via context

so we'll always use stuff from that repo and benchmark it

does it accept openrouter api key ?

let me try to use it

uhh good question

we might also want specific benchmarks for autocomplete, but it is good

note that gpt5 is much better than 4

we would need to add support for handling either OPENAI_API_KEY or OPENROUTER_API_KEY
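A minimal sketch of what accepting either key could look like, assuming the benchmark code talks to an OpenAI-compatible chat API. The helper name `resolveProvider`, the `LlmProvider` shape, and the choice to prefer `OPENAI_API_KEY` when both are set are all assumptions for illustration, not how tscircuit-prompts actually works.

```typescript
// Hypothetical helper: names and precedence are assumptions, not repo code.
type Env = Record<string, string | undefined>

interface LlmProvider {
  name: "openai" | "openrouter"
  apiKey: string
  baseUrl: string
}

// Picks a provider from whichever key is present in the environment.
// OPENAI_API_KEY wins if both are set (an arbitrary choice for this sketch).
function resolveProvider(env: Env): LlmProvider {
  if (env.OPENAI_API_KEY) {
    return {
      name: "openai",
      apiKey: env.OPENAI_API_KEY,
      baseUrl: "https://api.openai.com/v1",
    }
  }
  if (env.OPENROUTER_API_KEY) {
    return {
      name: "openrouter",
      apiKey: env.OPENROUTER_API_KEY,
      baseUrl: "https://openrouter.ai/api/v1",
    }
  }
  throw new Error("Set OPENAI_API_KEY or OPENROUTER_API_KEY")
}
```

Passing the environment in as an argument, rather than reading it globally, keeps the helper easy to test.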

I thought that endpoint is totally spammable, so I thought we should wait and learn something like LSP first

You know?

Or should we go with basic endpoint? With rate limiter and account credits integration

we don't need anything fancy, we have redis so we should be able to rate limit
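A rough sketch of the kind of basic limiter this implies: a fixed-window counter built on the Redis `INCR` and `EXPIRE` commands. The `CounterStore` interface, the key format, and the default limits are assumptions for illustration, and `MemoryStore` is only a stand-in so the sketch runs without a Redis server.

```typescript
// Minimal subset of a Redis client, modeled on the INCR and EXPIRE commands.
// The interface name and shape are assumptions for this sketch.
interface CounterStore {
  incr(key: string): Promise<number>
  expire(key: string, seconds: number): Promise<unknown>
}

// Fixed-window limiter: allow at most `limit` calls per window per user.
async function allowRequest(
  store: CounterStore,
  userId: string,
  limit = 20,
  windowSeconds = 60,
  nowMs: number = Date.now(),
): Promise<boolean> {
  // Requests in the same window share one counter key.
  const window = Math.floor(nowMs / (windowSeconds * 1000))
  const key = `autocomplete:rl:${userId}:${window}`
  const count = await store.incr(key)
  if (count === 1) {
    // First hit in this window: let the counter clean itself up.
    await store.expire(key, windowSeconds)
  }
  return count <= limit
}

// In-memory stand-in so the sketch runs without a Redis server.
class MemoryStore implements CounterStore {
  private counts = new Map<string, number>()

  async incr(key: string): Promise<number> {
    const next = (this.counts.get(key) ?? 0) + 1
    this.counts.set(key, next)
    return next
  }

  async expire(_key: string, _seconds: number): Promise<void> {
    // Real Redis would delete the key after the TTL; not needed here.
  }
}
```

A fixed window allows short bursts at window boundaries, which is probably acceptable for an autocomplete button. Account credits could be layered on later.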

Hmm, if nobody's working on it I can give it a try on api prod, after Wednesday

i think i have an implementation soon via codex

but let's review weds

Yeah more options better, public codex ig if