Newly started Pod takes a long time to come up and uses almost no RAM with vLLM GPT-OSS-120B

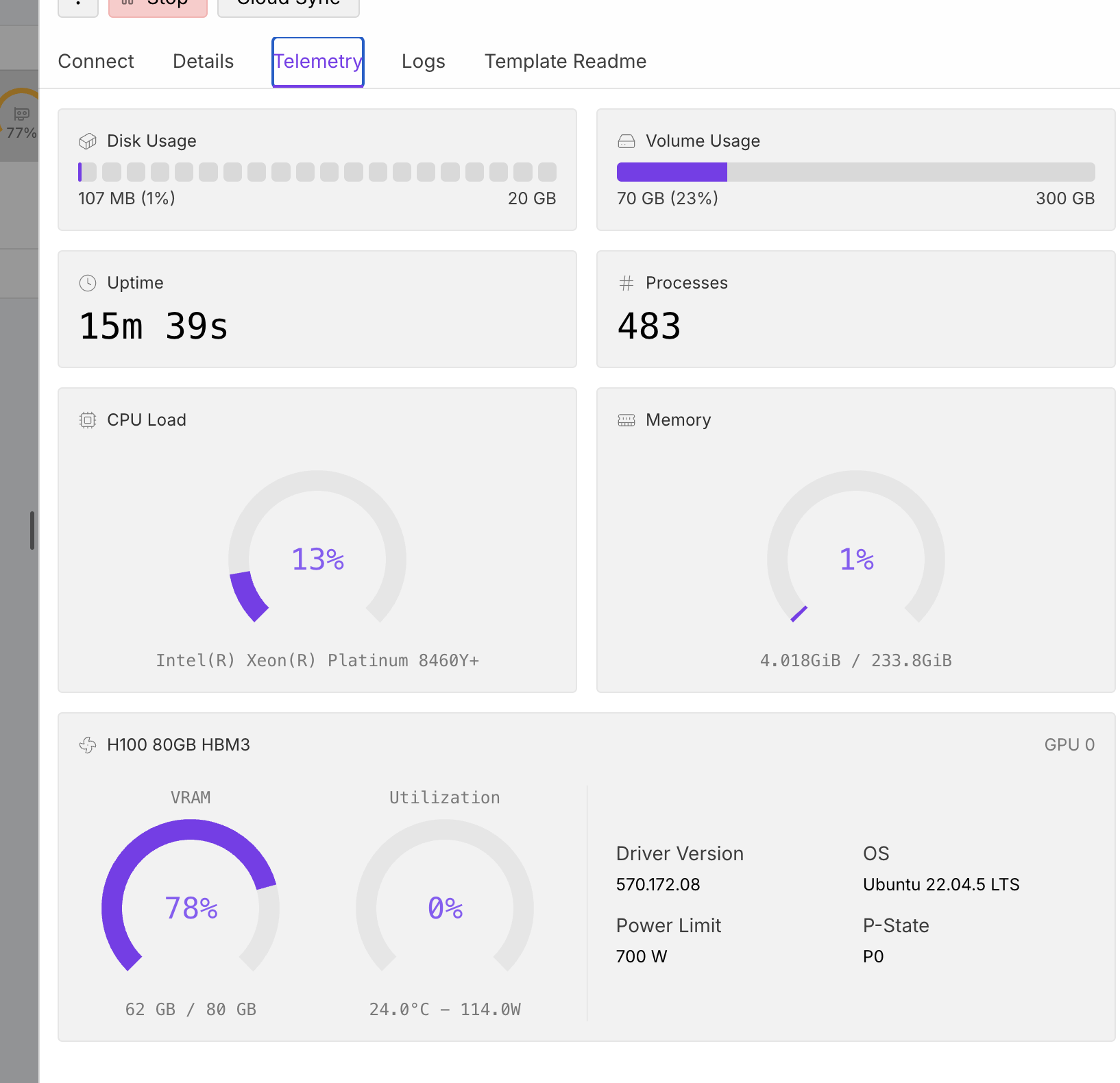

I am so confused. Yesterday I did the same thing and the Pod was up and running quite quickly. Today I tried restarting it, but the GPUs had already been taken, which is fine. So I spun up a new Pod, and it just does not work: I get a 502 error, and it seems like it isn't doing anything at all.

Solution

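For anyone else wondering: it took 30 minutes to start and load the model, and then it worked. That long startup is what caused the 502 error, since requests arrived before the server was ready. The low RAM utilization persisted throughout, even once the model was serving.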
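If you'd rather not keep refreshing the page, a small polling script can wait for the server instead. This is only a rough sketch: it assumes the Pod runs vLLM's OpenAI-compatible server and that its `/health` route is reachable through your Pod's URL. The function names `http_status` and `wait_until_ready` are made up for this example, and the one-hour limit and 30-second interval are arbitrary choices.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout_s=10.0):
    """Return the HTTP status code for a GET on url, or None if the request fails."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 502 while the model is still loading
    except (urllib.error.URLError, OSError):
        return None  # connection refused, DNS failure, timeout, ...


def wait_until_ready(url, max_wait_s=3600.0, interval_s=30.0,
                     probe=http_status, sleep=time.sleep, clock=time.monotonic):
    """Poll url until it answers 200. Return True if ready, False on timeout."""
    deadline = clock() + max_wait_s
    while True:
        status = probe(url)
        if status == 200:
            return True
        if clock() >= deadline:
            return False
        print(f"not ready yet (status={status}), retrying in {interval_s:.0f}s")
        sleep(interval_s)
```

Calling `wait_until_ready("<your pod URL>/health")` with your own Pod address should print a line every 30 seconds while the 502s continue and return `True` once the server answers with 200.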