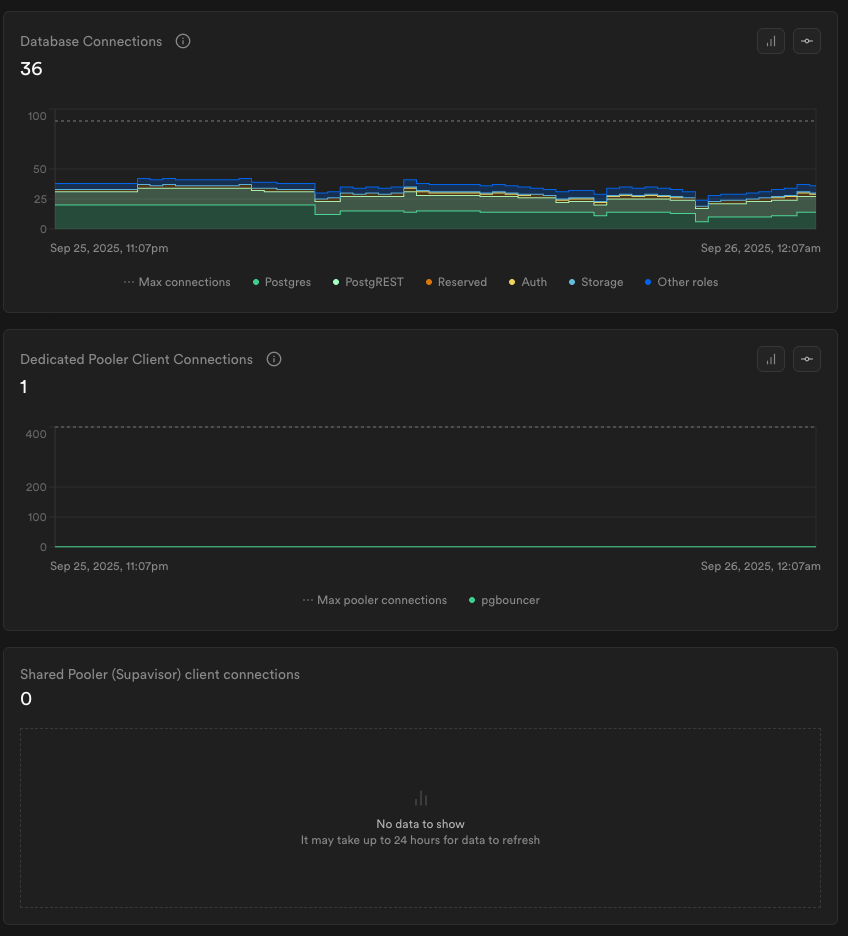

Shared Pooler (Supavisor) connections graph is empty (always showing 0)

Hi,

We are serving a SvelteKit app on Netlify Functions that recently started using Prisma ORM and connects to Supabase via the Shared Pooler (Supavisor), as described here: https://www.prisma.io/docs/orm/overview/databases/supabase
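While debugging, it may be worth confirming which endpoint the deployed function actually connects to. Below is a minimal TypeScript sketch that classifies a Postgres connection string. `classifySupabaseUrl` is a hypothetical helper, not part of Prisma or Supabase. The host and port conventions it checks are assumptions based on Supabase's usual connection strings: `*.pooler.supabase.com` for the shared pooler (port 6543 for transaction mode, 5432 for session mode) and `db.<project-ref>.supabase.co` for direct connections.

```typescript
// Hypothetical helper: guess which Supabase endpoint a Postgres URL targets.
// ASSUMPTIONS (not from the original post): shared-pooler hosts end in
// ".pooler.supabase.com", port 6543 = transaction mode, 5432 = session mode,
// and direct connections use "db.<project-ref>.supabase.co".

type ConnectionKind =
  | "shared-pooler-transaction"
  | "shared-pooler-session"
  | "direct"
  | "unknown";

interface ConnectionInfo {
  kind: ConnectionKind;
  host: string;
  port: number;
  pgbouncerFlag: boolean; // Prisma expects pgbouncer=true in transaction mode
}

function classifySupabaseUrl(raw: string): ConnectionInfo {
  const url = new URL(raw);
  const host = url.hostname;
  // Fall back to the default Postgres port when the URL omits one
  const port = url.port === "" ? 5432 : Number(url.port);
  const pgbouncerFlag = url.searchParams.get("pgbouncer") === "true";

  let kind: ConnectionKind = "unknown";
  if (host.endsWith(".pooler.supabase.com")) {
    if (port === 6543) kind = "shared-pooler-transaction";
    else if (port === 5432) kind = "shared-pooler-session";
  } else if (host.startsWith("db.") && host.endsWith(".supabase.co")) {
    kind = "direct";
  }
  return { kind, host, port, pgbouncerFlag };
}

// Example with a made-up project ref and password
const example = classifySupabaseUrl(
  "postgres://postgres.ref:secret@aws-0-us-east-1.pooler.supabase.com:6543/postgres?pgbouncer=true",
);
console.log(example.kind, example.pgbouncerFlag); // prints "shared-pooler-transaction true"
```

Inside the Netlify function you could pass `process.env.DATABASE_URL` to it and log only `kind`, `port`, and `pgbouncerFlag`, so the password never reaches the logs.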

Our app is live in production, but the graphs on the Database Reports page look like this (see the attached screenshot).

We've had a couple of errors in production that we are currently trying to debug, and those empty graphs are puzzling. Why would the Shared Pooler (Supavisor) chart show nothing?