Crons just randomly failing to run

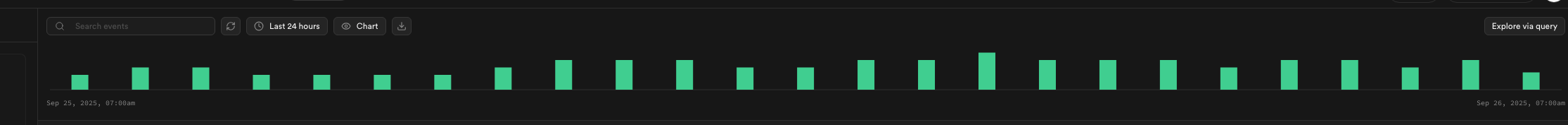

Over the last few weeks, by the looks of it, cron jobs have been randomly failing to run. I have 3 crons set to run every 15 minutes: on the hour, :15, :30 and :45. Some of those runs just don't show up in any logs. These crons fire some edge functions, and I see the same gaps in the edge function logs, which leads me to believe the cron itself is simply not being run. It used to be rock solid: the logs showed a "flat pattern" of 12 runs an hour (3 jobs × 4 runs). Now the hourly count randomly goes up and down.
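To pin down exactly which slots were skipped instead of eyeballing the hourly chart, something like the sketch below could compare each job's observed run times against the :00/:15/:30/:45 schedule. It assumes you can export each job's run timestamps from the logs as a list of datetimes; `expected_slots`, `missing_runs` and the 2-minute tolerance are hypothetical choices for illustration, not part of any cron or edge function API.

```python
from datetime import datetime, timedelta

# Schedule from the post: every 15 minutes, on the hour, :15, :30 and :45.
SLOT_STEP = timedelta(minutes=15)


def expected_slots(start: datetime, end: datetime) -> list[datetime]:
    """Return every scheduled fire time in the window [start, end)."""
    # Floor to the top of the hour so slots line up with :00/:15/:30/:45.
    t = start.replace(minute=0, second=0, microsecond=0)
    slots = []
    while t < end:
        if t >= start:
            slots.append(t)
        t += SLOT_STEP
    return slots


def missing_runs(
    runs: list[datetime],
    start: datetime,
    end: datetime,
    tolerance: timedelta = timedelta(minutes=2),  # assumed acceptable start delay
) -> list[datetime]:
    """Return scheduled slots with no logged run within `tolerance` after them."""
    missing = []
    for slot in expected_slots(start, end):
        if not any(slot <= r < slot + tolerance for r in runs):
            missing.append(slot)
    return missing


if __name__ == "__main__":
    # Hypothetical log extract for one job: the 10:30 run never appears.
    window_start = datetime(2024, 1, 1, 10, 0)
    window_end = datetime(2024, 1, 1, 11, 0)
    logged = [
        datetime(2024, 1, 1, 10, 0, 5),
        datetime(2024, 1, 1, 10, 15, 3),
        datetime(2024, 1, 1, 10, 45, 2),
    ]
    print(missing_runs(logged, window_start, window_end))
```

With three jobs, a healthy hour gives 4 slots per job, 12 in total; any slot the function returns is a run that never made it into the logs. If the same slots are missing for all three jobs at once, that points at the scheduler rather than at an individual edge function.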