"Internal agent did not generate structured output"

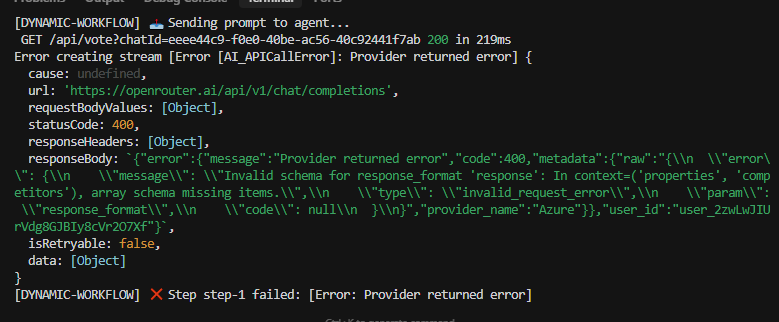

I am getting:

Shouldn't this kind of error be impossible, since OpenAI is supposed to guarantee structured output? What could I be doing wrong?

44 Replies

I updated from an earlier version to the latest and this started to happen. I'm a bit puzzled, as per the OpenAI documentation this should not happen: https://platform.openai.com/docs/guides/structured-outputs

Hi @Ville ! Are you able to share a small repro example?

Something like this:

const CTAAnalysisSchema = z.object({
  ctas: z.array(
    z.object({
      text: z.string(),
      intent: z.string().describe('Open-ended intent classification'),
      intentConfidence: z.enum(['high', 'medium', 'low']),
      urgency: z.enum(['high', 'medium', 'low']),
      urgencyEvidence: z.string(),
      priority: z.enum(['primary', 'secondary', 'tertiary']),
      effectivenessScore: z
        .number()
        .min(MIN_EFFECTIVENESS_SCORE)
        .max(MAX_EFFECTIVENESS_SCORE),
      effectivenessBreakdown: z.object({
        clarity: z
          .number()
          .min(MIN_EFFECTIVENESS_SCORE)
          .max(MAX_EFFECTIVENESS_SCORE),
        valueProposition: z
          .number()
          .min(MIN_EFFECTIVENESS_SCORE)
          .max(MAX_EFFECTIVENESS_SCORE),
        urgency: z
          .number()
          .min(MIN_EFFECTIVENESS_SCORE)
          .max(MAX_EFFECTIVENESS_SCORE),
        friction: z
          .number()
          .min(MIN_EFFECTIVENESS_SCORE)
          .max(MAX_EFFECTIVENESS_SCORE),
      }),
      improvements: z.array(z.string()).max(MAX_IMPROVEMENTS),
    }),
  ),
})

const response = await agent.generate([{ role: 'user', content: prompt }], {
  format: 'aisdk',
  structuredOutput: {
    schema: CTAAnalysisSchema,
  },
})

But I am wondering why it happens in the first place. I never had any issues when using OpenAI structured outputs directly.

Based on the error the LLM is generating invalid enum values like "medium-high" instead of strictly using ['high', 'medium', 'low']

If I (and claude code) understood the error correctly

This happens with, and without " format: 'aisdk',"

wait... Mastra has something called "StructuredOutputProcessor". Does it not use the llm native structured output?

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/8480

GitHub

[DISCORD:1423776090631049226] "Internal agent did not generate stru...

This issue was created from Discord post: https://discord.com/channels/1309558646228779139/1423776090631049226 I am getting: [StructuredOutputProcessor] Structuring failed: Internal agent did not g...

I think I found the issue. For whatever reason, Mastra does not use OpenAI's native structured output (a critical flaw that totally breaks the workflows), and the problems are most pronounced with small but fast models like gpt-5-nano: https://discord.com/channels/1309558646228779139/1417910880275795989/1424698537106477167

Tested some more and cannot get this working with a small model at all. It always fails. And bumping the model only for structured output step defeats the purpose since it would become equally expensive and slow.

I think I had a similar problem with the gpt-4o-mini model. I didn't believe it at first, but after some research I found there were cases like that.

We're shipping major improvements to the structuredOutput API this week, so hopefully these issues will go away!

Hi all, we just shipped some big improvements to structuredOutputs in our latest release @mastra/core@0.21.0 including:

- Using native response format by default (if your model does not support that you can opt into jsonPromptInjection: true which will inject the schema and other information into the prompt to coerce the model to try to return structuredOutput)

- Validating the output properly using Zod's safeParse and returning a list of detailed Zod errors. This allows you to use all of Zod's methods, such as transform. When something goes wrong you now get detailed error messages, so you can have the model retry in cases where the LLM trips up

- Only use the structuring sub agent if a model is passed in, otherwise do everything in the main agentic loop

- Better type safety with the response object

- Passing context that was missing before to the structuring agent so it can provide better results

- Merge the subagent stream into the parent stream to get object-result chunks in the main stream.
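The validate-then-retry flow described in the list above can be sketched roughly as follows. This is a dependency-free illustration and not Mastra's implementation: `validateCta` is a hand-written stand-in for Zod's safeParse, and `generateWithRetry`, the `Model` type, and the feedback wording are hypothetical names invented for this example.

```typescript
// Hand-rolled stand-in for a schema check; real code would call Zod's safeParse.
type Level = 'high' | 'medium' | 'low';
interface Cta { text: string; urgency: Level }
type Result =
  | { success: true; data: Cta }
  | { success: false; issues: string[] };

const LEVELS: readonly string[] = ['high', 'medium', 'low'];

function validateCta(raw: unknown): Result {
  const issues: string[] = [];
  const obj = (typeof raw === 'object' && raw !== null ? raw : {}) as Record<string, unknown>;
  if (typeof obj.text !== 'string') issues.push('text: expected string');
  if (typeof obj.urgency !== 'string' || !LEVELS.includes(obj.urgency)) {
    issues.push(`urgency: expected one of ${LEVELS.join(' | ')}, got ${JSON.stringify(obj.urgency)}`);
  }
  return issues.length === 0
    ? { success: true, data: obj as unknown as Cta }
    : { success: false, issues };
}

// Hypothetical model call: takes a prompt and resolves to raw JSON text.
type Model = (prompt: string) => Promise<string>;

async function generateWithRetry(model: Model, prompt: string, maxAttempts = 2): Promise<Cta> {
  let currentPrompt = prompt;
  let lastIssues: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    let parsed: unknown;
    try {
      parsed = JSON.parse(await model(currentPrompt));
    } catch {
      parsed = undefined; // unparseable text is treated like an invalid object
    }
    const result = validateCta(parsed);
    if (result.success) return result.data;
    lastIssues = result.issues;
    // Feed the validation issues back so the next attempt can correct them.
    currentPrompt = `${prompt}\n\nYour previous answer was invalid:\n- ${result.issues.join('\n- ')}`;
  }
  throw new Error(`Structured output validation failed: ${lastIssues.join('; ')}`);
}
```

With a model that first answers `"urgency": "medium-high"` and then `"urgency": "medium"`, the second attempt passes validation; if every attempt fails, the collected issues end up in the thrown error.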

Try it out and let us know what you think! Let us know if it fixes your issues or if there is still some more work to be done!

Can't believe I was thinking about this just now, and you responded in the same minute I was hitting what I guess is the issue caused by that.

Is it covered in a bit more detail in the documentation, so I can feed it to Cursor/CC for better understanding?

@! .kinderjaje I don't believe we've shipped the documentation updates yet, we'll get that done today!

awesome man! In case you remember, update me here please. No obligation

Hey, just some feedback: I'm not getting the error anymore after following your suggestion here.

I guess it's working!

That's awesome!

@Ville can you check and give confirmation as well

I'll try when on my computer! A question: is the zod parsing happening regardless? Or only with models that do not natively support structured output?

Zod parsing will happen regardless!

@! .kinderjaje here's the docs PR https://github.com/mastra-ai/mastra/pull/8881/files, those docs pages will be updated once it's merged


tnx mate! Appreciate a lot!

Why regardless? afaik we should be able to expect 100% correct structure from openai structured output.

@Daniel Lew I updated and now I am getting "Error: Structured output validation failed" even for steps that previously worked fine?

Afaik that shouldn't be possible at all anymore with open-ai models?

There are missing fields & invalid enum values. How should I pass the zod schema in order to use native structured output?

From the docs https://platform.openai.com/docs/guides/structured-outputs:

Structured Outputs is a feature that ensures the model will always generate responses that adhere to your supplied JSON Schema, so you don't need to worry about the model omitting a required key, or hallucinating an invalid enum value....

Reliable type-safety: No need to validate or retry incorrectly formatted responses

Also looks like I am not the only one having issues: https://github.com/mastra-ai/mastra/issues/8480#issuecomment-3410522004

@Ville can you give me more information around what code you're running and the error you're seeing? You should get detailed zod validation errors in the error chunk from the stream

this is how I call it:

And it is a regular agent without tools using gpt-5-2025-08-07 (model: openai('gpt-5-2025-08-07'),). If the output schema is passed to the model like here: https://platform.openai.com/docs/guides/structured-outputs it should always produce a valid JSON object as a result. In all honesty, the Mastra abstractions are currently causing way more issues than the benefits I am getting :/

And yeah I did get detailed zod errors, but the point is that if the structured output works, I should not be getting them: openai is guaranteed to return valid output.

Can you provide the schema and the output and error message that is coming back? That will help a lot!

I already went ahead and begged in the prompt for the correct format, and that helped. But the Mastra bug is still there: the schema is not being passed to OpenAI (if it were, I wouldn't have seen those errors in the first place)

There were some wrong enum values, some missing values and so on

This sounds like one of two things might be happening:

1. when we transform zod to jsonSchema to pass to openai there might be some inconsistencies there

2. openai sometimes returns wrong or incomplete data, we have a PR up to better handle retries and give information back to the LLM so it knows where it went wrong https://github.com/mastra-ai/mastra/pull/8888.

But having access to the schema and the prompt that led to the error would help out quite a lot to fix this

I guess right now the situation is that the custom formatter step is no longer used, but also the schema is not passed to the open-ai either

"openai sometimes returns wrong or incomplete data, we have a PR up to better handle retries and give information back to the LLM so it knows where it went wrong https://github.com/mastra-ai/mastra/pull/8888."

In what kind of cases does it happen? I have never seen it when using their SDK directly with structured output (though that was with a different schema), and their docs say the format and structure should be guaranteed. I have a feeling that too much complexity has been introduced and it's now biting back. It should be fairly straightforward to follow where the ball (the schema) gets dropped and the settings go haywire.

It's likely due to transformation issues, the schema isn't getting dropped, going to investigate this now, I'll get back to you

and sorry that you're running into these issues! I know it can be a frustrating experience that you're not able to resolve it yourself. But we'll make sure to get to the bottom of this!

I do think it is dropped: otherwise OpenAI would not have returned malformed JSON (as far as I know). In this case we had enum definitions in the schema, but had to explicitly define them in the prompt too in order to not fail the zod validation.

The OpenAI docs say "Simpler prompting: No need for strongly worded prompts to achieve consistent formatting", yet strongly worded prompts are exactly what I had to resort to

Okay so I was able to reproduce an error in openai, I passed it a required field and got it to not return that field, and I can see the schema passed to it in the request. So in this case the schema is correctly passed to openAI with all the right required fields but openAI returns invalid data

There is a chance that the schema was not dropped, if OpenAI is after all not returning valid JSON for the schema in some cases (e.g. with too vague prompting), but their docs state quite strongly that it should always follow the schema

I don't think they validate missing data

so it might help to get your specific example of prompt/schema so I can look into why it would be returning wrong data

"Okay so I was able to reproduce an error in openai, I passed it a required field and got it to not return that field, and I can see the schema passed to it in the request. So in this case the schema is correctly passed to openAI with all the right required fields but openAI returns invalid data"

Oh! That's news to me! And contradicts their docs quite hard. Are you using the strict mode? https://platform.openai.com/docs/guides/structured-outputs#structured-outputs-vs-json-mode If it's indeed an issue on the open-ai end, I can fix it with prompting. But it's also surprising! There were some clear contradictions in the prompt and expected schema (for which some of the errors were useful btw!), but I was under the impression that the output is guaranteed

hmm let me look into that, it's possible that that is not being set one level higher in the model provider itself

open-ai expects "strict": true to be set, as well as the schema to be given
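For reference, a minimal sketch of what a request body with strict mode enabled looks like when calling the Chat Completions API directly, following the structured outputs guide linked earlier in the thread. The schema is a trimmed-down version of the CTA schema, and `buildChatCompletionBody` and the `cta_analysis` name are hypothetical; the helper only builds the object and sends nothing.

```typescript
// JSON Schema for a trimmed-down version of the CTA schema from this thread.
// Strict mode expects every object to list all of its properties in `required`
// and to set `additionalProperties: false`.
const ctaJsonSchema = {
  type: 'object',
  properties: {
    ctas: {
      type: 'array',
      items: {
        type: 'object',
        properties: {
          text: { type: 'string' },
          urgency: { type: 'string', enum: ['high', 'medium', 'low'] },
        },
        required: ['text', 'urgency'],
        additionalProperties: false,
      },
    },
  },
  required: ['ctas'],
  additionalProperties: false,
} as const;

// Hypothetical helper: builds the request body only, without any network call.
function buildChatCompletionBody(model: string, prompt: string) {
  return {
    model,
    messages: [{ role: 'user', content: prompt }],
    response_format: {
      type: 'json_schema',
      json_schema: {
        name: 'cta_analysis', // illustrative name
        strict: true,         // without this the schema is not enforced
        schema: ctaJsonSchema,
      },
    },
  };
}

const body = buildChatCompletionBody('gpt-5-nano', 'Analyze the CTAs on this page.');
console.log(JSON.stringify(body.response_format, null, 2));
```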

With that I have not had any issues before, but also in all fairness, I've had different schemas & prompts too so not 100% comparable.

ahh, yes, I just looked

@ai-sdk/openai does not set strict: true

and it doesn't look like there's a way to set that

ohh...

That's also very surprising for such a popular library 😅

There's strictJsonSchema though: https://ai-sdk.dev/docs/ai-sdk-core/generating-structured-data#accessing-reasoning

Not sure if it is exclusively for reasoning or not though

ooh nice find, do you have that set?

Nope I don't, didn't know it was up to me 😅

https://ai-sdk.dev/providers/ai-sdk-providers/openai#responses-models

oooo that prevented the openai error we were seeing before. I think that might be the solution

I think we might ship a PR to make sure it's always included, schema should always be validated

Thanks for finding that for us though and being patient!

You're welcome! 🙂 Are you going to flip that flag to true by default when the user provides the schema?

(imo it would make sense)

yeah exactly, if a schema is provided and they're using open ai responses it'll be flipped automatically
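The defaulting behavior discussed here could look roughly like the sketch below. It assumes the flag lives under `providerOptions.openai.strictJsonSchema`, as in the AI SDK OpenAI provider docs linked above; the `GenerateOptions` shape and the `withStrictJsonSchema` helper are hypothetical and are not Mastra's actual types or code.

```typescript
// Hypothetical option shapes for illustration; not Mastra's actual types.
interface GenerateOptions {
  structuredOutput?: { schema: unknown };
  providerOptions?: {
    openai?: { strictJsonSchema?: boolean; [key: string]: unknown };
  };
}

// Turns on strictJsonSchema when a schema is provided for an OpenAI model,
// unless the caller has already chosen a value explicitly.
function withStrictJsonSchema(options: GenerateOptions, provider: string): GenerateOptions {
  const hasSchema = options.structuredOutput?.schema !== undefined;
  const explicit = options.providerOptions?.openai?.strictJsonSchema;
  if (!hasSchema || !provider.startsWith('openai') || explicit !== undefined) {
    return options; // nothing to default
  }
  return {
    ...options,
    providerOptions: {
      ...options.providerOptions,
      openai: { ...options.providerOptions?.openai, strictJsonSchema: true },
    },
  };
}

const defaulted = withStrictJsonSchema({ structuredOutput: { schema: {} } }, 'openai.responses');
console.log(defaulted.providerOptions?.openai?.strictJsonSchema);
```

Respecting an explicit `false` keeps an escape hatch for schemas that strict mode rejects.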

makes total sense! From the user perspective, I think it would be expected.

And personally, I don't grasp why someone would not want to set strict: true when expecting JSON output. A bit of an odd design choice from OpenAI, but it might also be some backward compatibility thing.

haha yes, "here's my schema, feel free to ignore it"

yeah 😄

Now when I set that to true I am actually getting some very valid, openai originated errors:

These I believe are totally on me and some schema compatibility mismatch with openAI

These are kinda the errors I was expecting from the get go 😅

haha nice

'required' is required to be supplied and to be an array including every key in properties

FYI @Ville it looks like that error will happen whenever your zod schema has a field marked as optional with .optional(). When the zod schema is transformed into a JSON schema and passed in to the response format, the optional fields are left out of the required array that openai is complaining about. Quick fix is to just replace optionals with nullables instead. Will consider whether this should be handled for users, or maybe just a better error message to help out here.

Yep figured it out! 🙂
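To make the optional-versus-nullable point concrete, here is a small dependency-free sketch that reports which properties are missing from `required`, which is what the OpenAI error above complains about. The two schemas are hand-written approximations of what a Zod-to-JSON-Schema conversion might produce; the exact converter output may differ, and `findNonRequired` is a hypothetical helper.

```typescript
type JsonSchema = {
  type?: string | string[];
  properties?: Record<string, JsonSchema>;
  required?: string[];
  items?: JsonSchema;
};

// Returns the paths of properties that are not listed in their parent's `required` array.
function findNonRequired(schema: JsonSchema, path = '$'): string[] {
  const missing: string[] = [];
  const required = new Set(schema.required ?? []);
  for (const [key, child] of Object.entries(schema.properties ?? {})) {
    if (!required.has(key)) missing.push(`${path}.${key}`);
    missing.push(...findNonRequired(child, `${path}.${key}`)); // check nested objects
  }
  if (schema.items) missing.push(...findNonRequired(schema.items, `${path}[]`));
  return missing;
}

// Roughly what z.object({ text: z.string(), note: z.string().optional() }) becomes:
const withOptional: JsonSchema = {
  type: 'object',
  properties: { text: { type: 'string' }, note: { type: 'string' } },
  required: ['text'], // `note` is left out, which strict mode rejects
};

// Roughly the same schema with note: z.string().nullable():
const withNullable: JsonSchema = {
  type: 'object',
  properties: { text: { type: 'string' }, note: { type: ['string', 'null'] } },
  required: ['text', 'note'], // every key is present; null stands in for "no value"
};

console.log(findNonRequired(withOptional)); // [ '$.note' ]
console.log(findNonRequired(withNullable)); // []
```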

Tbh, I think just showing the openai error is enough. Mangling the schema would probably lead to further hard to debug issues.

Imo better to avoid masking any errors. The closer to the source the better. I think it's fixed now, but at some point I spent quite some time chasing masked errors that turned out to be a too-large-context issue

Yeah good points. I wouldn't want to mask any errors like that. If anything it should show the error from the source and if people need it then just add additional logging below.