Serverless load balancer scaling config (Request Count)

From the documentation:

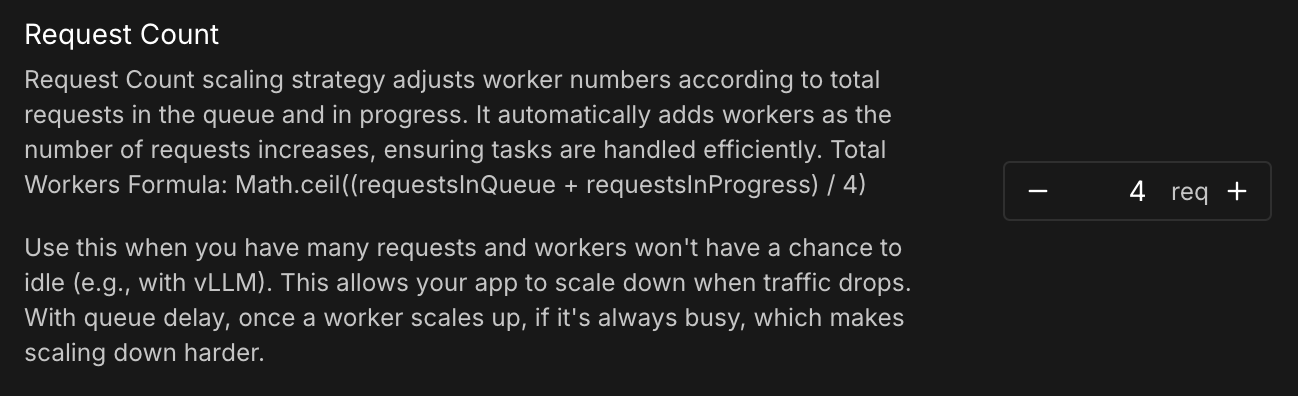

"Request Count scaling strategy adjusts worker numbers according to total requests in the queue and in progress. It automatically adds workers as the number of requests increases, ensuring tasks are handled efficiently. Total Workers Formula: Math.ceil((requestsInQueue + requestsInProgress) / 4)

Use this when you have many requests and workers won't have a chance to idle (e.g., with vLLM). This allows your app to scale down when traffic drops. With queue delay, once a worker scales up, if it's always busy, which makes scaling down harder."

It's not clear at what interval the Math.ceil formula is evaluated. If my server can handle 10 requests per second, what's a good value for the Request Count? Also, since my FastAPI app handles its own internal queue, requestsInQueue will always be 0.
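To make the quoted formula concrete, here is a minimal Python sketch. It assumes the 4 in the formula is the configurable Request Count value, which the excerpt does not state explicitly. The function names are hypothetical and not part of any platform API. The second helper uses Little's law (average in-flight requests = arrival rate × average time per request) as one possible way to translate a requests-per-second figure into an in-flight count. The 0.5 s latency is an invented example value.

```python
import math


def total_workers(requests_in_queue: int, requests_in_progress: int,
                  request_count: int = 4) -> int:
    """Mirror of the quoted formula:
    Math.ceil((requestsInQueue + requestsInProgress) / 4).
    Assumption: the divisor is the Request Count setting.
    """
    if request_count <= 0:
        raise ValueError("request_count must be positive")
    return math.ceil((requests_in_queue + requests_in_progress) / request_count)


def in_flight_estimate(requests_per_second: float,
                       seconds_per_request: float) -> float:
    """Little's law: average in-flight requests = rate * time per request."""
    return requests_per_second * seconds_per_request


# With an internal queue in the app, requestsInQueue stays at 0,
# so only in-progress requests drive the result.
print(total_workers(0, 8))   # 2
print(total_workers(0, 9))   # 3

# Hypothetical: 10 requests/s at 0.5 s each keeps about 5 requests in flight.
print(in_flight_estimate(10, 0.5))  # 5.0
```

Note that the formula depends only on a count of requests at the moment it is evaluated, not on a rate, so the evaluation interval asked about above does not appear anywhere in it.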

It would be more intuitive to first set an interval per Worker, say one of [1s, 10s, 30s, 60s], and then a Requests Per Interval value. For example, if I select [10s] and set the value to [100], then a Worker that reaches 100 requests every 10s triggers a scale-up. And if the count reaches, say, [300] in that interval, it automatically adds two workers.
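The interval-based proposal could look roughly like the following sketch. This illustrates the suggestion only, not how the platform behaves. The class name `WindowedRequestScaler` and its methods are hypothetical. The sketch also assumes that the worker count is the ceiling of the requests seen in the window divided by the per-interval threshold, with a floor of one worker, which is one reading of "300 adds two workers".

```python
import math
from collections import deque


class WindowedRequestScaler:
    """Hypothetical rate-based scaler: counts requests in a sliding window."""

    def __init__(self, interval_s: float, requests_per_interval: int):
        if interval_s <= 0 or requests_per_interval <= 0:
            raise ValueError("interval and threshold must be positive")
        self.interval_s = interval_s
        self.requests_per_interval = requests_per_interval
        self._timestamps = deque()  # arrival times, oldest first

    def record(self, now: float) -> None:
        """Register one incoming request at time `now` (seconds)."""
        self._timestamps.append(now)

    def desired_workers(self, now: float) -> int:
        """Drop arrivals older than the window, then size the worker pool."""
        cutoff = now - self.interval_s
        while self._timestamps and self._timestamps[0] < cutoff:
            self._timestamps.popleft()
        needed = math.ceil(len(self._timestamps) / self.requests_per_interval)
        return max(1, needed)  # assumption: never scale below one worker


# Interval [10s], threshold [100]: 300 requests arrive within 10 seconds.
scaler = WindowedRequestScaler(interval_s=10, requests_per_interval=100)
for i in range(300):
    scaler.record(i / 30.0)

print(scaler.desired_workers(now=10.0))  # 3, i.e. two more than a single worker
print(scaler.desired_workers(now=25.0))  # 1, the window has emptied
```

Timestamps are passed in explicitly rather than read from a clock so the behavior is easy to check by hand.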