Why does LMStudio have documentation and Ollama does not?

I like the idea behind the project and I’ve started implementing it in my NextJS project, but I can’t find any documentation explaining how to properly integrate Ollama.

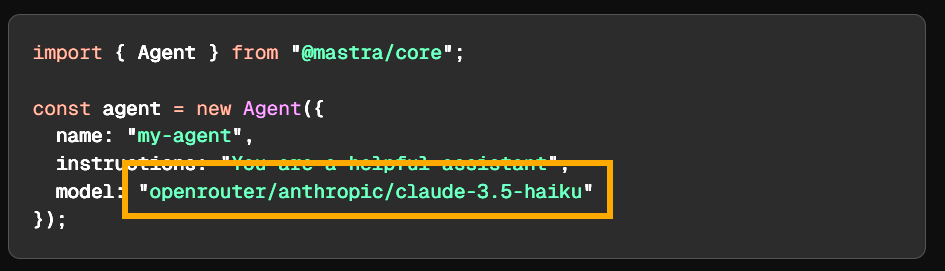

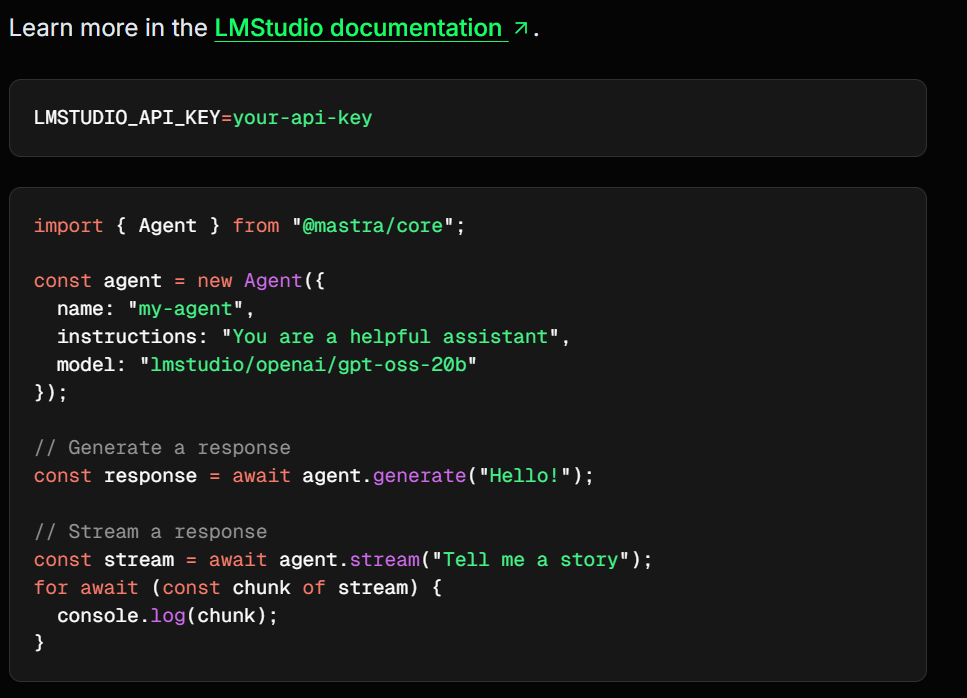

There’s even an example showing how LMStudio is invoked in the Mastra agent (“lmstudio/openai/gpt-oss-20b”).

I don’t understand why Ollama doesn’t get the same treatment.

24 Replies

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/8913


Hi @vk3r ! We pull the list of models from https://models.dev, unfortunately they don't have anything for ollama, and very few models for lmstudio. This is mainly because you can't really maintain a list since users can load any model they want when using these tools.

You should be able to use ollama this way though:

hey @Romain – I've been trying to use ollama in my Mastra instance as well and the snippet you provided doesn't seem to work for me:

This is coming from a fresh project I just generated using

npm create mastra@latest

I previously had luck using local models via https://github.com/nordwestt/ollama-ai-provider-v2 but I was hoping to get this done without any additional dependencies

never mind, using id instead of modelId seems to work

thanks!

yes, sorry, my snippet was wrong 🙂

We'll definitely look into adding the example to the docs though 😉

@Romain Why is this structure tied in this way? What happens if there is an Ollama model that does not have this structure?

Shouldn't they just add “ollama/MODEL_NAME”?

I still don't understand why they don't just add support for Ollama directly. Even Ollama is compatible with the OpenAI SDK...

I got it to work by referring to my local models as

openai/my-ollama-model-name

had to provide a placeholder OpenAI API key as well, but it works

And why don’t they add “ollama/”?

This isn’t about whether it works or not — we’re talking about the biggest open-source model tool out there right now.

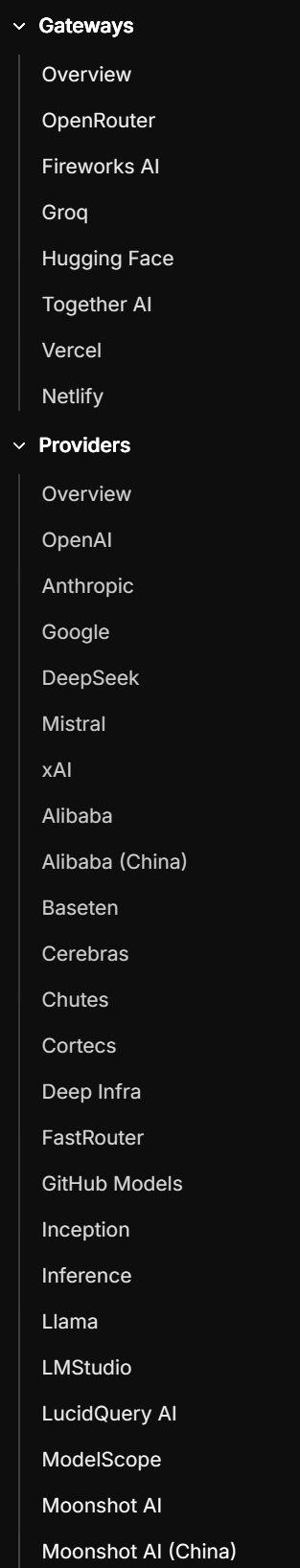

There’s support for tons of APIs (even Chinese ones), and even for LMStudio — which isn’t open-source — yet they still don’t provide direct support for Ollama.

I just don’t get it.

not disagreeing with you – I just needed to get my app working so I am glad to have a solution lol

definitely something I'd appreciate seeing support for
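For readers following along, the workaround described above (an `openai/` prefix, a placeholder API key, and Ollama's OpenAI-compatible endpoint) might look roughly like the sketch below. The `LocalModelConfig` type and the `ollamaViaOpenAI` helper are hypothetical names used only for illustration, and the `apiKey` field name is an assumption; only `id` and `url` are named in this thread. The base URL assumes a default local Ollama install.

```typescript
// Hypothetical shape for illustration only; this is NOT Mastra's actual type.
interface LocalModelConfig {
  id: string;     // "<providerId>/<modelId>" string, as discussed in the thread
  url: string;    // base URL of the OpenAI-compatible server
  apiKey: string; // assumed field name; the value is a placeholder for local use
}

// Ollama's OpenAI-compatible API on its default local port.
const OLLAMA_BASE_URL = "http://localhost:11434/v1";

// Hypothetical helper: builds the workaround config for a local model name.
function ollamaViaOpenAI(modelName: string): LocalModelConfig {
  return {
    id: `openai/${modelName}`, // "openai" used as the provider prefix
    url: OLLAMA_BASE_URL,
    apiKey: "placeholder",     // any non-empty string; no real key is involved
  };
}

const config = ollamaViaOpenAI("my-ollama-model-name");
console.log(config.id); // "openai/my-ollama-model-name"
```

An object like this would then be passed wherever the agent expects its model setting; the exact field names should be checked against the current Mastra release.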

you can always use ai-sdk package for ollama

Why?

LMStudio does not use it.

why not? it provides a standardized interface to any provider possible

Sure. Practically all the other providers/gateways don’t require any additional configuration, except for Ollama.

I guess there’s nothing weird/strange about that, right?

As I said, we pull the list from models.dev, you can always open a PR on their repo to add more default models 😉 https://github.com/sst/models.dev


Why?

The OpenAI SDK doesn’t require the specific model name — just a string, which allows any model name to be used.

Why is it necessary to maintain a specific list of accepted models?

If any provider releases a new model or updates the name of an existing one and models.dev isn’t updated immediately, how is that handled?
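The point that an OpenAI-style API takes the model as a plain string can be illustrated with a request aimed at Ollama's OpenAI-compatible endpoint. This is a minimal sketch, not anything Mastra does internally: `buildChatRequest` is a hypothetical helper, the base URL assumes a default local Ollama install, and the bearer token is a placeholder.

```typescript
interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Hypothetical helper: the model is passed through as-is, with no lookup
// against any list of known model names.
function buildChatRequest(
  model: string,
  prompt: string,
  baseUrl = "http://localhost:11434/v1", // assumed default local Ollama address
): ChatRequest {
  return {
    url: `${baseUrl}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: "Bearer placeholder", // placeholder token for local use
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

const req = buildChatRequest("my-ollama-model-name", "Hello");
```

With a local server running, the request could be sent with `await fetch(req.url, req.init)`.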

I think that models list is mainly used for the IDE completion and provider routing. It should still be able to accept any model name even if it's not in that list, and the types should also allow that.

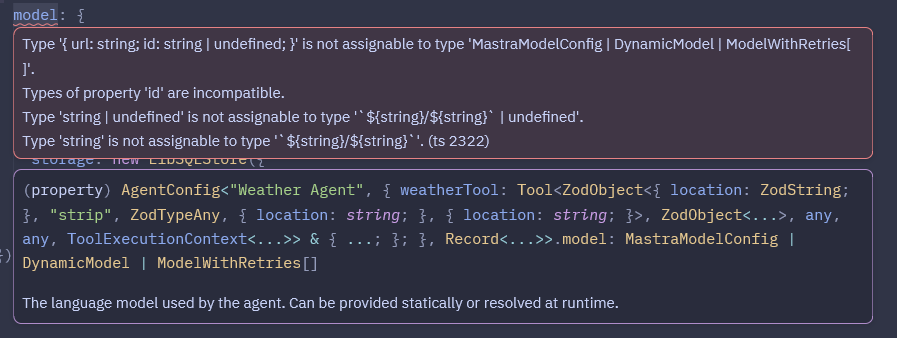

Well, it wouldn’t make sense to perform the validation Romain mentioned above. As long as it’s just a string, it should work — but it doesn’t (see the image I uploaded earlier).

Even so, this still doesn’t justify the fact that Ollama isn’t part of the direct integration, nor the lack of official documentation on how to integrate it.

Ahh I see what you mean in that screenshot. Yeah that could be a little confusing.

id uses ${providerId}/${modelId} format for convenience but you can still also use providerId and modelId if you don't want to use that string type.

or:

Thanks for calling this out though, it revealed an incorrect provider validation that shouldn't be happening if url is provided.

When url is provided you should be able to use anything you want as the providerId. We'll get a fix out for that soon. For now you'd have to use "openai" as the providerId.

I see.

Does this mean that “ollama” will be added as the providerId / id?

Even in this structure: ${providerId}/${modelId}?

Will documentation for Ollama be added as well?

Yeah this means in the next release you can use "ollama" in both this structure

${providerId}/${modelId} and also just by using providerId and modelId separately. They are treated the same.
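To make the equivalence between the combined string and the separate fields concrete, here is a small sketch of how such an id could be split and rebuilt. It illustrates the format only and is not Mastra's implementation; `parseModelId` and `formatModelId` are hypothetical names. It assumes the provider is everything before the first slash, which matches the “lmstudio/openai/gpt-oss-20b” example from earlier in the thread, where the model part itself contains a slash.

```typescript
interface ModelRef {
  providerId: string;
  modelId: string;
}

// Split "<providerId>/<modelId>" at the FIRST slash only, so model names
// that contain slashes stay intact.
function parseModelId(id: string): ModelRef {
  const slash = id.indexOf("/");
  if (slash <= 0 || slash === id.length - 1) {
    throw new Error(`Expected "providerId/modelId", got "${id}"`);
  }
  return {
    providerId: id.slice(0, slash),
    modelId: id.slice(slash + 1),
  };
}

// Inverse operation: rebuild the combined string from its parts.
function formatModelId(ref: ModelRef): string {
  return `${ref.providerId}/${ref.modelId}`;
}

const parsed = parseModelId("lmstudio/openai/gpt-oss-20b");
// parsed.providerId is "lmstudio"; parsed.modelId is "openai/gpt-oss-20b"
```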

And we're always working on adding and improving docs. Ollama would be a great example to add in the docs.

I understand.

I really appreciate the effort.

I've seen many people asking for help with the same kinds of problems with Ollama, and it's great to provide options and alternatives — especially for the open-source community.

Yeah, we've supported Ollama since day one through the ai-sdk providers, but our model router is still fairly new and still needs these minor improvements! Not sure when you'll see ollama show up in the auto-completion though, because this is a list that's pretty much impossible to maintain (even the lmstudio one shows only 3 models). Documenting how to use Ollama with Mastra is probably a better route.

No offense intended, but I insist.

I don’t think autocompleting the model name is a truly necessary feature.

I’m not sure how you’re actually implementing it, but OpenRouter has thousands of models, and I doubt that models.dev is always up to date with the ones uploaded to its platform.

Even so, the world’s largest API provider (OpenAI) doesn’t include this kind of validation in its own SDK.

I don’t think it’s something you should have to implement or maintain.

I suppose the thousands of models on OpenRouter are auto-completed.