Agent Network Streaming Issue (AI SDK v5)

Hi guys, I have an old production app (50K+ users) that I am trying to turn into an AI assistant chatbot. I built a working version with the Vercel AI SDK, but decided to migrate to Mastra (greedy). I have been stuck for 3 weeks on `agent.network()` streaming, and streaming is still broken after the 0.21 upgrade. I need guidance urgently: my fallback option is to revert to the AI SDK, but that would waste 3 weeks of migration work. I really want to stay with Mastra.

Setup

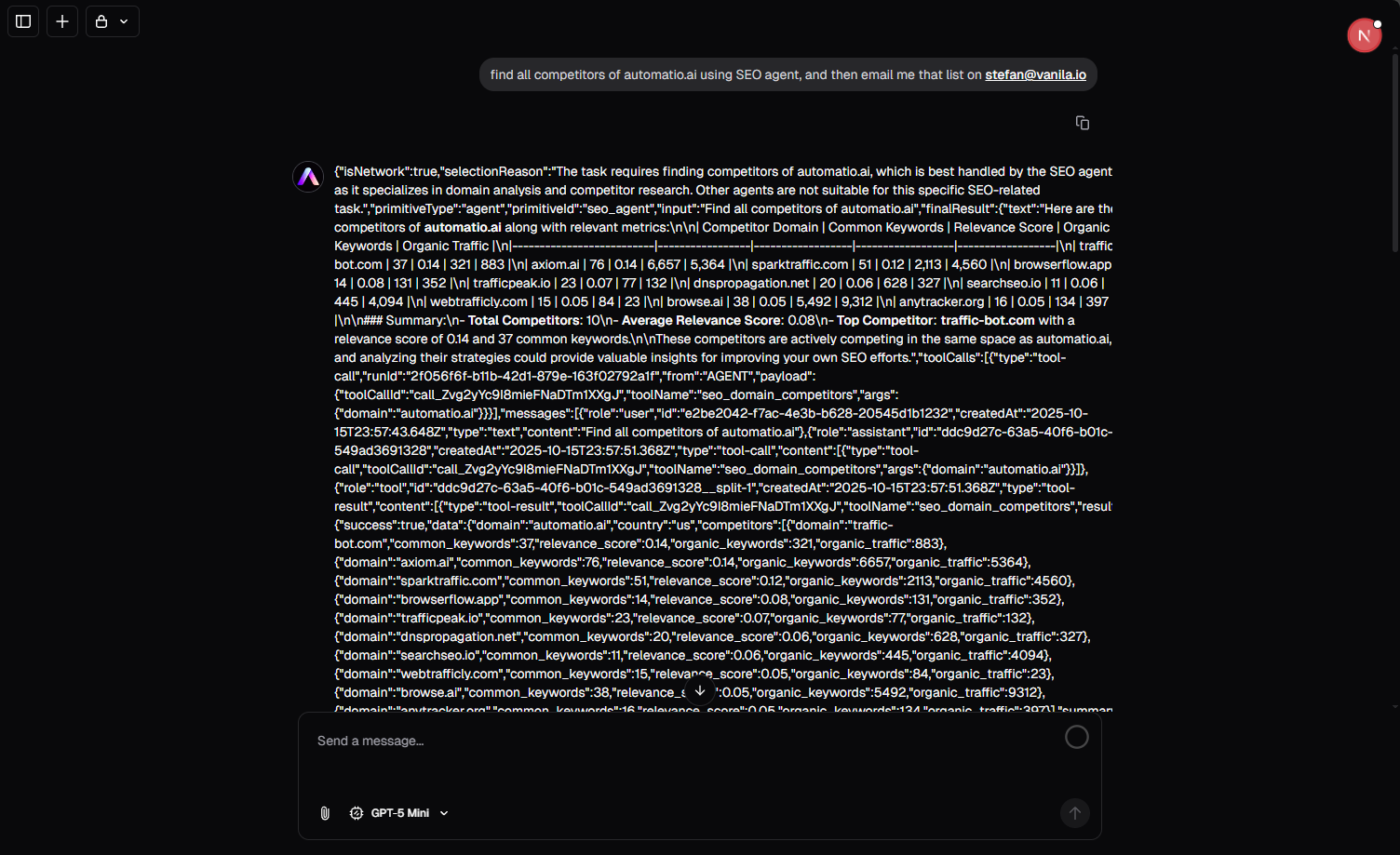

- Mastra 0.21.0 + AI SDK v5, an orchestrator agent with 8 sub-agents (Gmail, SEO, Sheets, Calendar, Ghost, Web, Voting, General), and gpt-4o-mini via OpenRouter

What We Observe

What Works

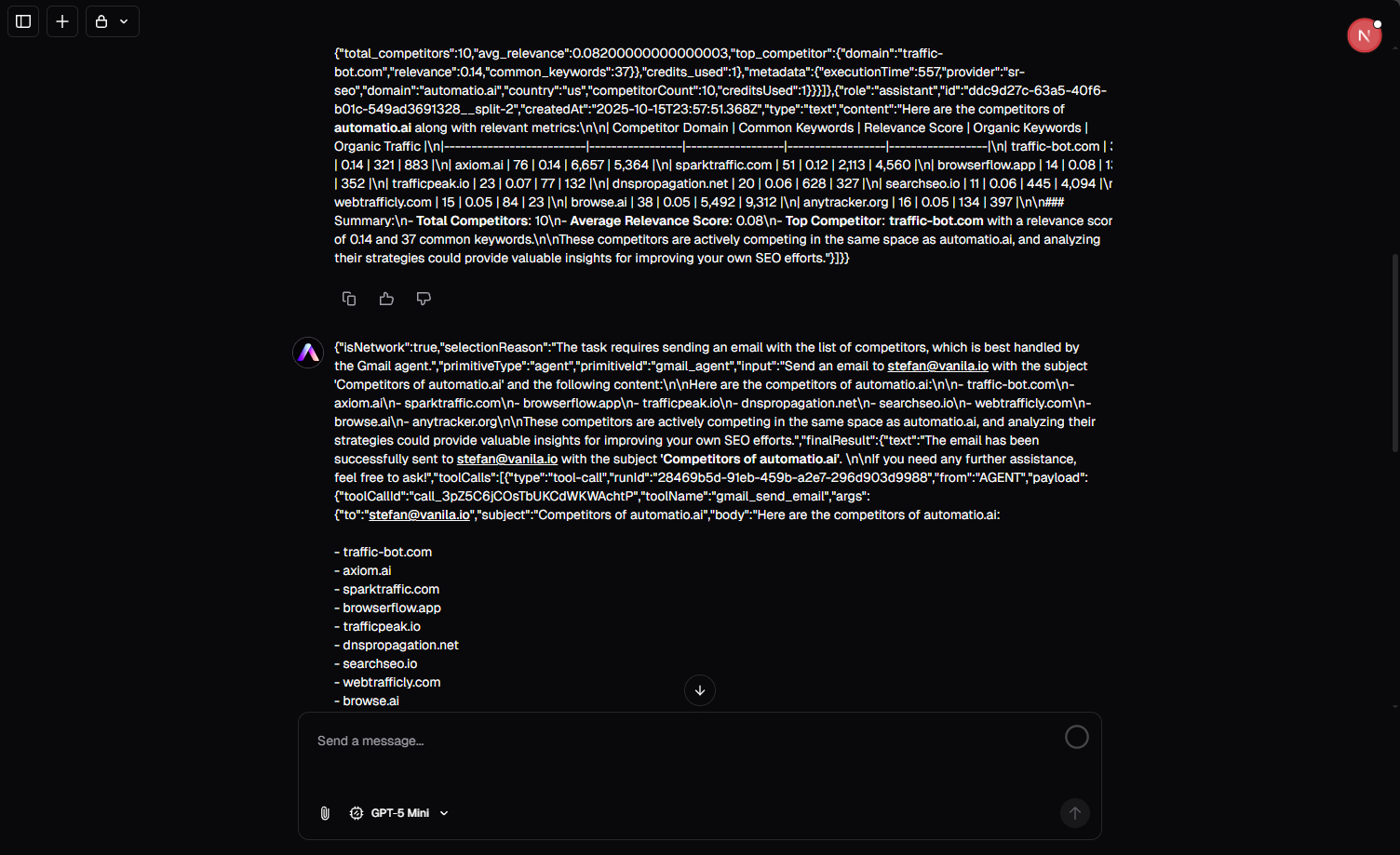

- Agent routing is flawless: the orchestrator correctly identifies the appropriate sub-agents and delegates to them

- Sub-agents execute successfully: tools are called and operations complete

- Terminal logs show proper execution flow: orchestrator → sub-agent → tool execution → completion

Streaming Issues

- **No `text-delta` chunks during execution**

- Stream only emits `data-network` metadata chunks

- Zero `text-delta` chunks throughout the entire execution

- No real-time text updates to the UI

- **Text only available after full completion**

- Text appears in the `networkResult.result.result` path

- Arrives as a single blob after all agents finish

- Not streamed incrementally
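To check the chunk sequence independently of the UI, one option is to tally chunk types as they arrive. The sketch below is a minimal TypeScript example that assumes only that each chunk is an object with a string `type` field (as the `text-delta` and `data-network` names suggest). `Chunk`, `recordChunk`, `tallyChunkTypes` and `mockNetworkStream` are hypothetical names, and the mock stream stands in for whatever the real network stream returns; this is not Mastra's own type.

```typescript
// Assumed minimal chunk shape: only a string `type` field is relied on.
// Hypothetical interface, not Mastra's own type definition.
interface Chunk {
  type: string;
  [key: string]: unknown;
}

interface TypeStats {
  count: number;
  firstAtMs: number; // ms after the tally started when this type first appeared
}

// Record one chunk into the running tally (pure, synchronous helper).
function recordChunk(
  tally: Map<string, TypeStats>,
  chunk: Chunk,
  elapsedMs: number,
): void {
  const stats = tally.get(chunk.type);
  if (stats) {
    stats.count += 1;
  } else {
    tally.set(chunk.type, { count: 1, firstAtMs: elapsedMs });
  }
}

// Consume a stream and report how many chunks of each type it emitted,
// and how long it took for each type to first appear.
async function tallyChunkTypes(
  stream: AsyncIterable<Chunk>,
): Promise<Map<string, TypeStats>> {
  const start = Date.now();
  const tally = new Map<string, TypeStats>();
  for await (const chunk of stream) {
    recordChunk(tally, chunk, Date.now() - start);
  }
  return tally;
}

// Hypothetical mock that mimics the reported behaviour: metadata chunks only.
async function* mockNetworkStream(): AsyncGenerator<Chunk> {
  yield { type: "data-network", payload: { step: "routing" } };
  yield { type: "data-network", payload: { step: "sub-agent" } };
  yield { type: "data-network", payload: { step: "finished" } };
}

tallyChunkTypes(mockNetworkStream()).then((tally) => {
  tally.forEach((stats, type) => {
    console.log(`${type}: ${stats.count} chunk(s), first after ${stats.firstAtMs} ms`);
  });
  if (!tally.has("text-delta")) {
    console.log("No text-delta chunks were emitted.");
  }
});
```

Pointed at the real stream, a tally containing only `data-network` entries, or a `text-delta` entry whose first arrival time is close to the end of the run, would be consistent with the behaviour described above.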

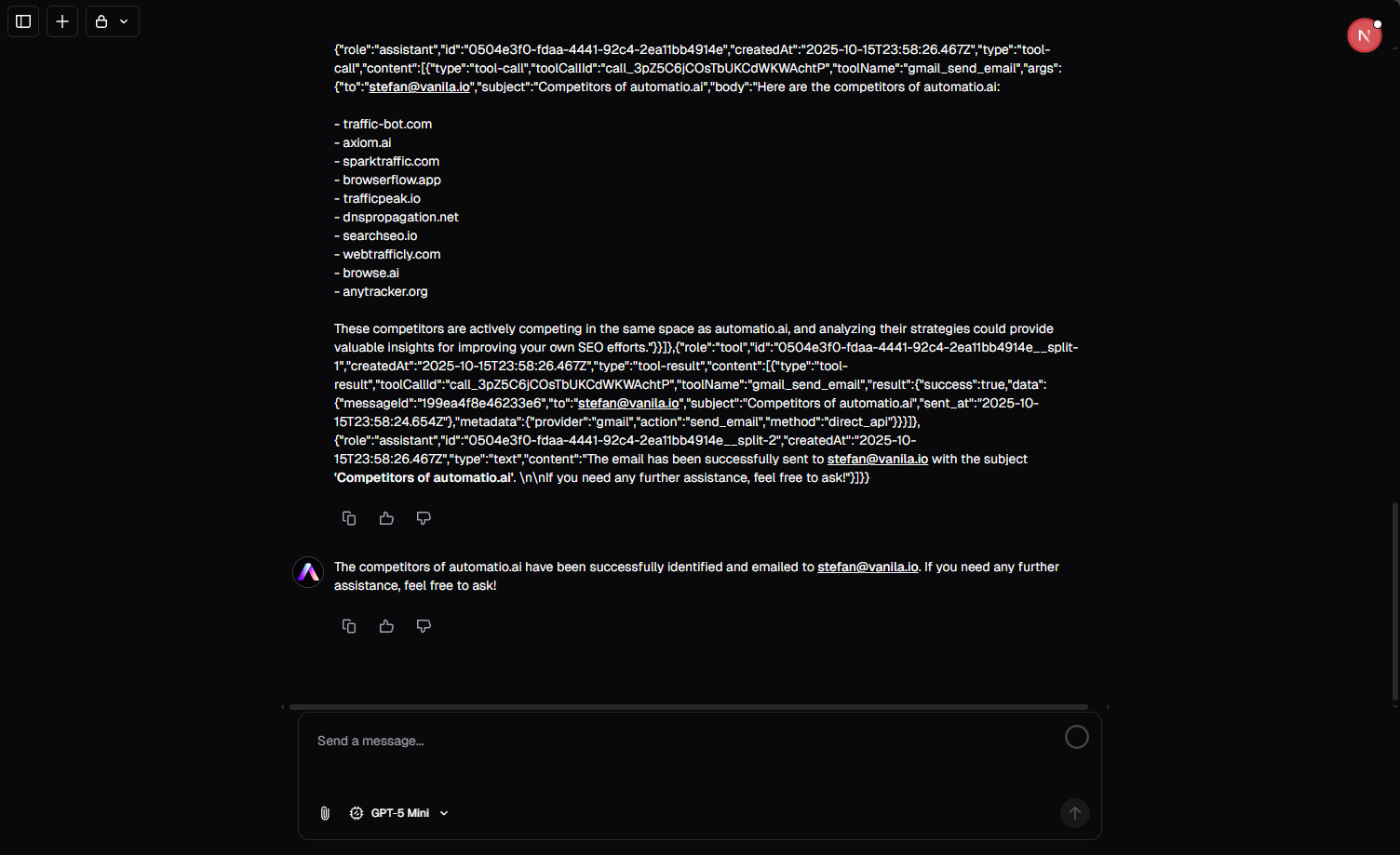

- **Frontend receives raw metadata**

- Without filtering, the UI displays raw `data-network` JSON

- After a page refresh, database-saved chunks appear as `[object Object]` in the UI
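`[object Object]` is the string JavaScript produces when a plain object is implicitly converted to a string (for example via `String(obj)` or string concatenation), which suggests the saved chunks are being coerced to strings somewhere on the save path. Below is a minimal sketch of filtering plus explicit serialization. It assumes the same hypothetical `{ type, ... }` chunk shape and assumes `text-delta` chunks carry their text in a `delta` field (that field name is an assumption, not taken from Mastra's types). `visibleText`, `serializeForStorage` and `restoreFromStorage` are hypothetical helpers.

```typescript
// Hypothetical chunk shape; the `delta` field name is an assumption.
interface Chunk {
  type: string;
  [key: string]: unknown;
}

// Keep only user-facing text: concatenate `text-delta` payloads and
// drop everything else (including `data-network` metadata).
function visibleText(chunks: Chunk[]): string {
  return chunks
    .filter((c) => c.type === "text-delta" && typeof c.delta === "string")
    .map((c) => c.delta as string)
    .join("");
}

// Serialize chunks explicitly before saving, so objects round-trip as JSON
// instead of being coerced to the string "[object Object]".
function serializeForStorage(chunks: Chunk[]): string {
  return JSON.stringify(chunks);
}

// Parse the saved JSON back into chunk objects after a page refresh.
function restoreFromStorage(saved: string): Chunk[] {
  return JSON.parse(saved) as Chunk[];
}

const chunks: Chunk[] = [
  { type: "data-network", payload: { step: "routing" } },
  { type: "text-delta", delta: "Hello" },
  { type: "text-delta", delta: ", world" },
];

console.log(String(chunks[0])); // implicit coercion, prints [object Object]
console.log(visibleText(chunks)); // prints Hello, world
console.log(visibleText(restoreFromStorage(serializeForStorage(chunks)))); // prints Hello, world
```

Whether `data-network` chunks should be stored at all is one of the open questions below; the sketch only keeps them out of the rendered text and, if they are kept, stores them as real JSON.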

This works, but it defeats the purpose of streaming: the user sees nothing until the full execution completes.

Questions

- **Is `agent.network()` designed to stream `text-delta` chunks in real time?**

- Or is it purely for internal routing with final result extraction?

- Should we expect incremental text updates during sub-agent execution?

- **What should `data-network` chunks contain and how should they be handled?**

- Should these be filtered from UI display?

- Are they being saved to the database incorrectly?

- Should they only be used internally by Mastra?

- **Are we using the right API for UI streaming?**

- Should multi-agent streaming use `agent.stream()` with manual routing logic instead?

- Is `network()` intended for backend orchestration only?

- **What's the expected data flow?**

- Should the orchestrator stream its own "thinking" text while routing?

- Should sub-agent responses be streamed back through the network stream?

- Or is the pattern: route silently → extract final result → display?
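For comparison with the alternative raised in the questions (`agent.stream()` with manual routing logic), that data flow can be sketched without any framework: pick a sub-agent first, then forward its text fragments to the client as they arrive instead of waiting for a final result. Everything here is hypothetical: `StreamingAgent`, `mockAgent`, the keyword-based `route()` and `routeAndStream()` stand in for the real orchestrator and sub-agents. This is not Mastra's API, only an illustration of "route, then stream the sub-agent's response".

```typescript
// Hypothetical interface: an agent that yields text fragments as they are generated.
// This is NOT Mastra's Agent type; it only models the data flow.
interface StreamingAgent {
  name: string;
  stream(prompt: string): AsyncIterable<string>;
}

// Hypothetical mock sub-agent that emits its reply one word at a time.
function mockAgent(name: string, reply: string): StreamingAgent {
  return {
    name,
    async *stream(_prompt: string) {
      const words = reply.split(" ");
      for (let i = 0; i < words.length; i++) {
        // Re-insert the separating space so the fragments join back into `reply`.
        yield i === 0 ? words[i] : " " + words[i];
      }
    },
  };
}

// Hypothetical router: a keyword match standing in for the orchestrator's decision.
// Falls back to the last agent in the list (a "General" agent here).
function route(prompt: string, agents: StreamingAgent[]): StreamingAgent {
  const lower = prompt.toLowerCase();
  const match = agents.find((a) => lower.includes(a.name.toLowerCase()));
  return match ?? agents[agents.length - 1];
}

// Route first, then forward each fragment to the client as soon as it arrives,
// while also accumulating the full text (e.g. for saving to the database).
async function routeAndStream(
  prompt: string,
  agents: StreamingAgent[],
  onText: (text: string) => void,
): Promise<string> {
  const agent = route(prompt, agents);
  let full = "";
  for await (const text of agent.stream(prompt)) {
    onText(text);
    full += text;
  }
  return full;
}

const agents: StreamingAgent[] = [
  mockAgent("Gmail", "You have 3 unread emails."),
  mockAgent("Calendar", "Your next meeting is at 10:00."),
  mockAgent("General", "How can I help?"),
];

routeAndStream("Check my Gmail inbox", agents, (text) => console.log(text)).then(
  (full) => console.log(`final: ${full}`),
);
```

In this shape, routing happens before any text is produced, so the only delay the user sees is the routing decision itself; after that, each fragment reaches `onText` as soon as the sub-agent yields it.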

I'm looking for confirmation on the intended streaming behavior of `agent.network()` for real-time UI updates. The routing works perfectly; I'm just trying to understand whether we're using the right API pattern for streaming multi-agent responses to users.

@_roamin_ @Ward I would really appreciate your assistance on this.