Human in the Loop Workflows

Issues & Learnings from Building Human-in-the-Loop Workflows in Mastra

What We’re Trying to Do

We’re building human-in-the-loop workflows that can:

Pause for manual review and resume from the same state

Be observed and monitored mid-execution

Stream step updates to a frontend as they complete

This is for an iterative content-brief generation workflow where users approve or edit AI output mid-flow.

Key Issues Encountered

1. Suspend / Resume Instability

Coordinating workflows that use dowhile, parallel, and suspend/resume has been unreliable.

Even when logic is correct, behavior is inconsistent:

Loops sometimes exit prematurely or hang indefinitely on suspend

Resumed steps often lose their inputData, even though it’s present in the Mastra DB snapshot

We’ve had to manually fetch snapshots and inject inputData into resumeData, casting to unknown due to incomplete typings

Overall, the suspend/resume API feels brittle — it works after trial-and-error but isn’t predictable enough for production.
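
The workaround described above (copying a step's inputData out of the stored snapshot into the resume payload) can be isolated in one typed helper so the cast is not scattered across call sites. This is a minimal sketch, not Mastra's API: the `SnapshotLike` shape (a `context` map keyed by step id with a `payload` field) and the name `buildResumeData` are assumptions for illustration, so check the actual snapshot shape in your storage before relying on it.

```typescript
// Hypothetical subset of what a stored workflow snapshot might contain.
interface StepRecord {
  status: string;
  payload?: Record<string, unknown>; // assumed field holding the step's original inputData
}

interface SnapshotLike {
  status: string;
  context?: Record<string, StepRecord>;
}

// Merge the suspended step's stored input with the reviewer's decision.
// Reviewer fields win when both sides define the same key.
function buildResumeData<T extends Record<string, unknown>>(
  snapshot: SnapshotLike,
  stepId: string,
  review: T,
): Record<string, unknown> & T {
  const record = snapshot.context?.[stepId];
  if (!record) {
    throw new Error(`Step "${stepId}" not found in snapshot`);
  }
  const stored: Record<string, unknown> = record.payload ?? {};
  return { ...stored, ...review };
}

// Usage with a hand-written snapshot: the stored brief is carried over
// alongside the reviewer's approval.
const resumeData = buildResumeData(
  {
    status: "suspended",
    context: { review: { status: "suspended", payload: { brief: "draft v1" } } },
  },
  "review",
  { approved: true },
);
```

The result would then be passed as the resume payload for the suspended step; failing loudly when the step is missing makes a lost-input bug visible at the call site instead of inside the resumed step.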

2. Lack of Workflow Observability

Currently, there’s no way to observe a running workflow, only a suspended one.

Because Next.js request/response cycles are short-lived, workflows die when a user refreshes the page.

We need a background task runner or persistent worker (e.g., queue + runner) so workflows can continue independently and later be resumed or observed from the frontend.
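
One way to decouple a run from the request that started it, in a long-lived Node process rather than a serverless function, is to start the work without awaiting it and track its status in a registry that later requests can query. The following is a minimal in-memory sketch under those assumptions; `RunRegistry`, `start`, and `get` are hypothetical names, and a production setup would likely use a durable queue and storage instead of a `Map`.

```typescript
type RunStatus = "running" | "success" | "failed";

interface RunRecord {
  status: RunStatus;
  result?: unknown;
  error?: string;
}

class RunRegistry {
  private runs = new Map<string, RunRecord>();

  // Start the task without awaiting it, so the HTTP handler can return immediately.
  start(runId: string, task: () => Promise<unknown>): void {
    this.runs.set(runId, { status: "running" });
    task().then(
      (result) => this.runs.set(runId, { status: "success", result }),
      (err) => this.runs.set(runId, { status: "failed", error: String(err) }),
    );
  }

  // Later requests (for example after a page refresh) look the run up by id.
  get(runId: string): RunRecord | undefined {
    return this.runs.get(runId);
  }
}
```

Because the registry lives in process memory, it only survives as long as the process does, which is why this pattern needs a persistent worker and not a per-request function.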

3. Unclear Snapshot Persistence

The docs say snapshots exist only for suspended workflows, but we’ve noticed snapshots persisting even without a suspend.

There’s no documented API to manually create or manage snapshots, making it difficult to externalize or version workflow states ourselves.

What We’d Love Guidance On

Correct orchestration pattern for human-in-the-loop suspend/resume

Recommended way to observe active workflows

Whether there’s a supported API or hook for custom snapshot management

4 Replies

Hey @wiz2202! I think you're running into these issues because workflows are meant to run on long-lived runners, like the one the Mastra server offers, or something like Inngest.

I haven't heard about instability in workflows before, so there might be something off in your project. You may need to share some repro code with us so we can help debug 😉

I am basically trying to reconnect to a running workflow. I use watch and pass it the runId, but when I reconnect I don't know the current state of the workflow, so my UI cannot reflect it until the next stream event comes through. As a workaround, I tried fetching the snapshot to find out where the run is so I can show that state initially, but from what I saw, the snapshots do not handle parallel steps well.

I ran this test to find the problem:

Here is the test workflow:
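
The reconnect gap described here, where a new watcher sees nothing until the next event arrives, is commonly closed by keeping the latest status of every step per run on the server and replaying it to each new subscriber before forwarding live events. The sketch below is independent of Mastra: `RunStateHub`, `publish`, and `subscribe` are hypothetical names, and the event shape is an assumption. Something on the server would need to feed it from the workflow's event stream.

```typescript
type StepStatus = "running" | "success" | "failed" | "suspended";

interface StepEvent {
  stepId: string;
  status: StepStatus;
}

type Listener = (steps: Record<string, StepStatus>) => void;

class RunStateHub {
  private state = new Map<string, Record<string, StepStatus>>();
  private listeners = new Map<string, Set<Listener>>();

  // Record the latest status per step, then notify current subscribers.
  publish(runId: string, event: StepEvent): void {
    const prev: Record<string, StepStatus> = this.state.get(runId) ?? {};
    const next: Record<string, StepStatus> = { ...prev, [event.stepId]: event.status };
    this.state.set(runId, next);
    this.listeners.get(runId)?.forEach((listener) => listener(next));
  }

  // Replay the current state immediately, then deliver live updates.
  // Returns an unsubscribe function.
  subscribe(runId: string, listener: Listener): () => void {
    const current = this.state.get(runId);
    if (current) listener(current);
    const set = this.listeners.get(runId) ?? new Set<Listener>();
    set.add(listener);
    this.listeners.set(runId, set);
    return () => {
      set.delete(listener);
    };
  }
}
```

Because the hub stores one status per step id, parallel branches that are running at the same time all appear in the replayed state, so the UI does not depend on what a snapshot happens to contain.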

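    import { createWorkflow } from "@mastra/core/workflows";

    // TestInputSchema, TestOutputSchema, and the *Test workflows/steps
    // are defined elsewhere in the project.
    export const testSimpleWorkflow = createWorkflow({
      id: "testSimpleWorkflow",
      description: "Test workflow mirroring refreshUrlWorkflow structure",
      inputSchema: TestInputSchema,
      outputSchema: TestOutputSchema,
    })
      .then(getUrlOutlineWorkflowTest)
      // These three nested workflows run in parallel.
      .parallel([
        keywordAnalysisWorkflowTest,
        serpResearchWorkflowTest,
        refreshURLRedditResearchWorkflowTest,
      ])
      .then(comprehensiveSynthesisStepTest)
      .commit();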

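    // `mastra` is the project's configured Mastra instance (import not shown).
    // Fetches the stored snapshot for a run of testSimpleWorkflow.
    async function fetchSnapshot(runId: string) {
      const store = mastra.getStorage();
      if (!store) {
        throw new Error("Storage not configured");
      }

      const workflowRun = await store.getWorkflowRunById({
        runId,
        workflowName: "testSimpleWorkflow",
      });
      if (!workflowRun || !workflowRun.snapshot) {
        throw new Error("Workflow run or snapshot not found");
      }

      // Narrow the snapshot to the fields inspected in this test.
      return workflowRun.snapshot as {
        status: string;
        context?: Record<string, any>;
      };
    }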

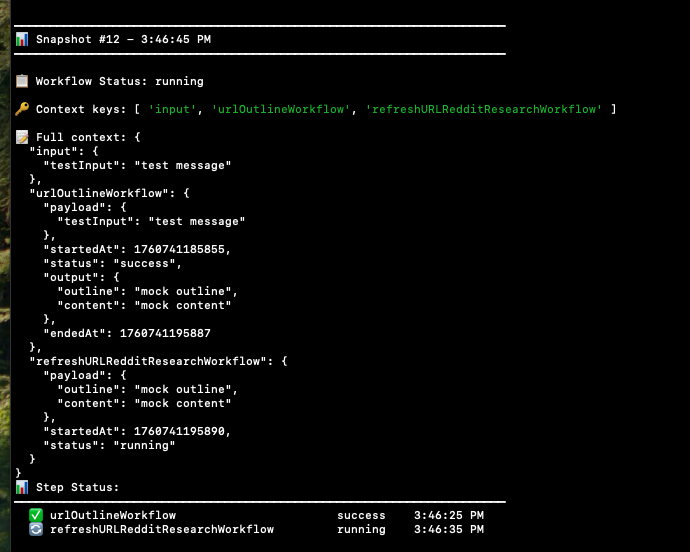

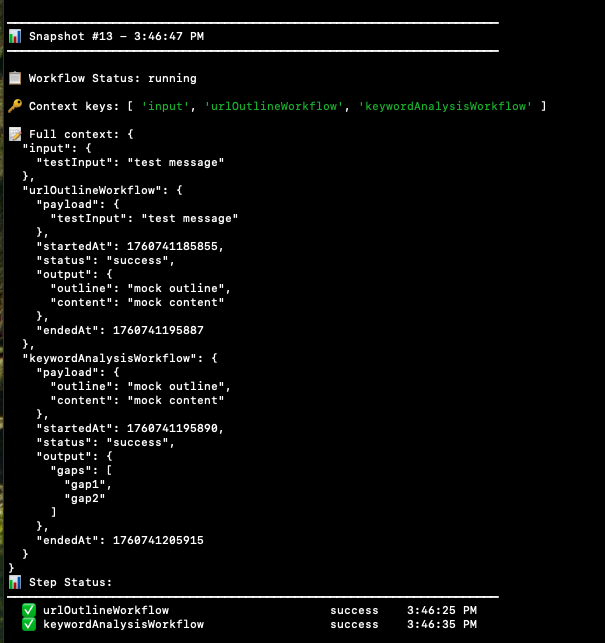

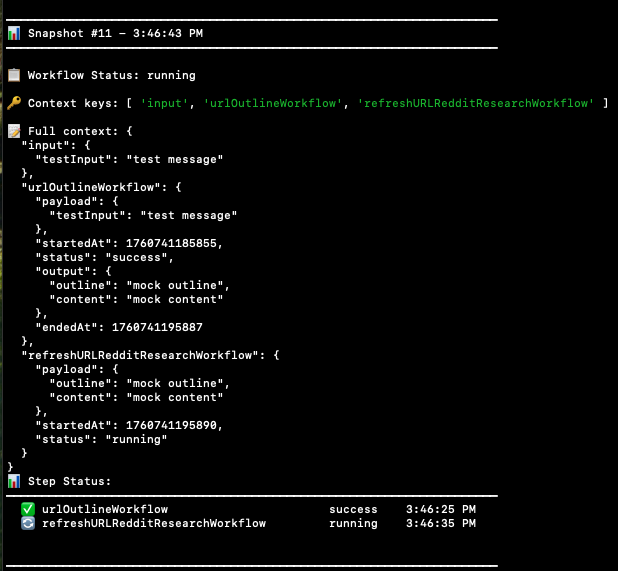

The screenshots show the snapshots returned by the method above at different points in the workflow. The overarching issue is that I assumed each snapshot would contain all the currently running workflows. Instead, each one returns only one of the parallel branches, not all of them. I did some more digging and confirmed they are running in parallel, but the workflow snapshots do not show all 3 steps as active.

Let me know if this is helpful or if more context would be.
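
To make the mismatch concrete when reporting it, a small check can compare the branch ids expected to be active against the ones a snapshot actually lists. This is only a sketch: it assumes the snapshot's `context` is keyed by step id, as the return type of `fetchSnapshot` suggests, and `RunSnapshot` and `missingBranches` are hypothetical names.

```typescript
// Assumed minimal snapshot shape, matching the cast used in fetchSnapshot.
interface RunSnapshot {
  status: string;
  context?: Record<string, unknown>;
}

// Return the expected branch ids that do not appear in the snapshot's context.
function missingBranches(snapshot: RunSnapshot, expected: string[]): string[] {
  const present = new Set(Object.keys(snapshot.context ?? {}));
  return expected.filter((id) => !present.has(id));
}

// Example with a hand-written snapshot that lists only one of three branches.
const missing = missingBranches(
  { status: "running", context: { keywordAnalysisWorkflowTest: { status: "running" } } },
  [
    "keywordAnalysisWorkflowTest",
    "serpResearchWorkflowTest",
    "refreshURLRedditResearchWorkflowTest",
  ],
);
```

Logging the result next to a timestamp at each poll would show whether the other branches never appear or only appear once they finish.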