Duplicate Assistant Messages with agent.network() + Memory

Summary

I'm experiencing duplicate assistant messages being saved to the database when using agent.network() with memory enabled. For each network interaction, TWO assistant messages are saved:

1. ✅ The correct user-friendly response

2. ❌ Raw JSON containing internal network routing metadata

The second message pollutes memory with orchestration data that should be internal only.

---

Impact

- Doubles token usage for memory retrieval (semantic recall fetches 2x messages)

- Pollutes agent context with internal metadata that confuses future conversations

- Wastes credits by embedding and storing useless routing information

- No workaround available - memory is required for network() to function

---

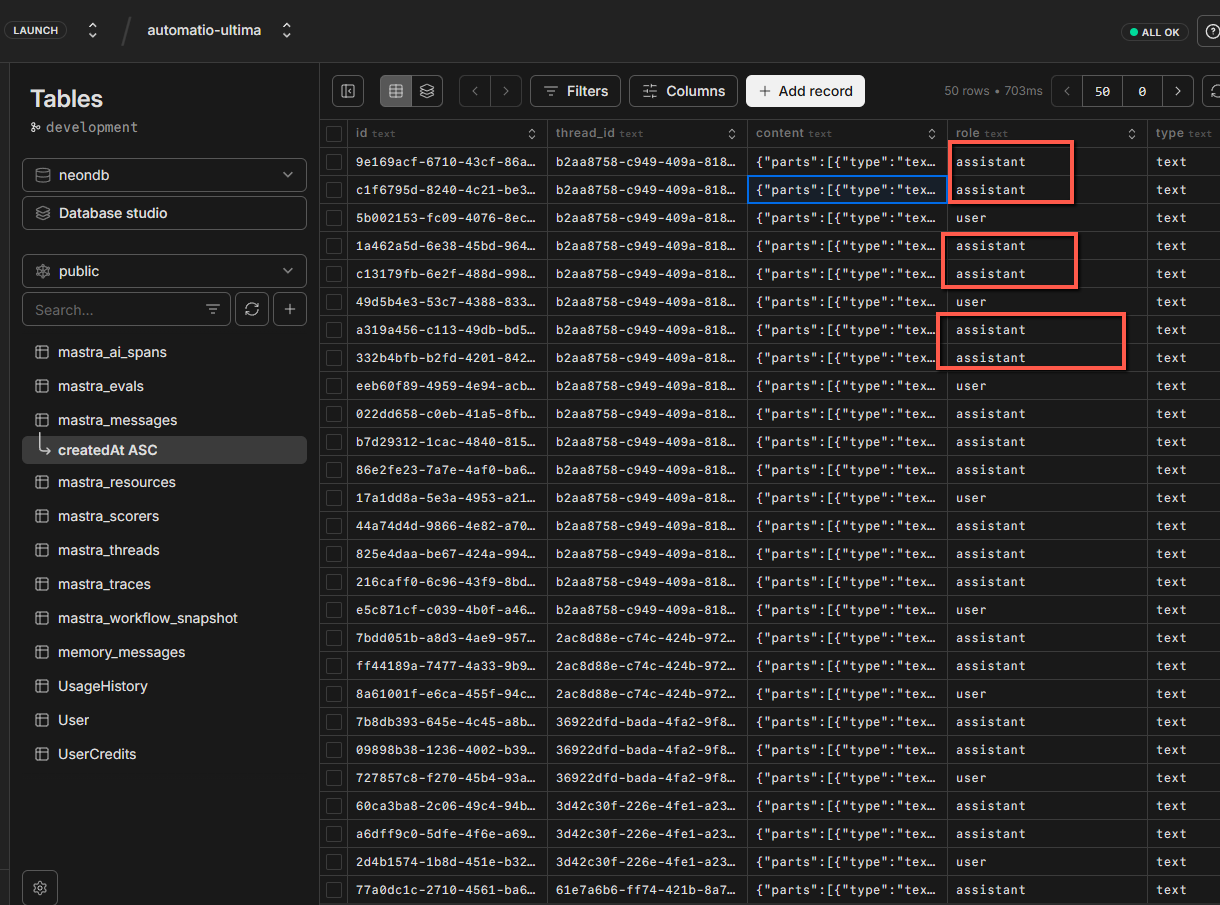

Database Evidence

Here's an example of the duplicate pattern from my mastra_messages table:

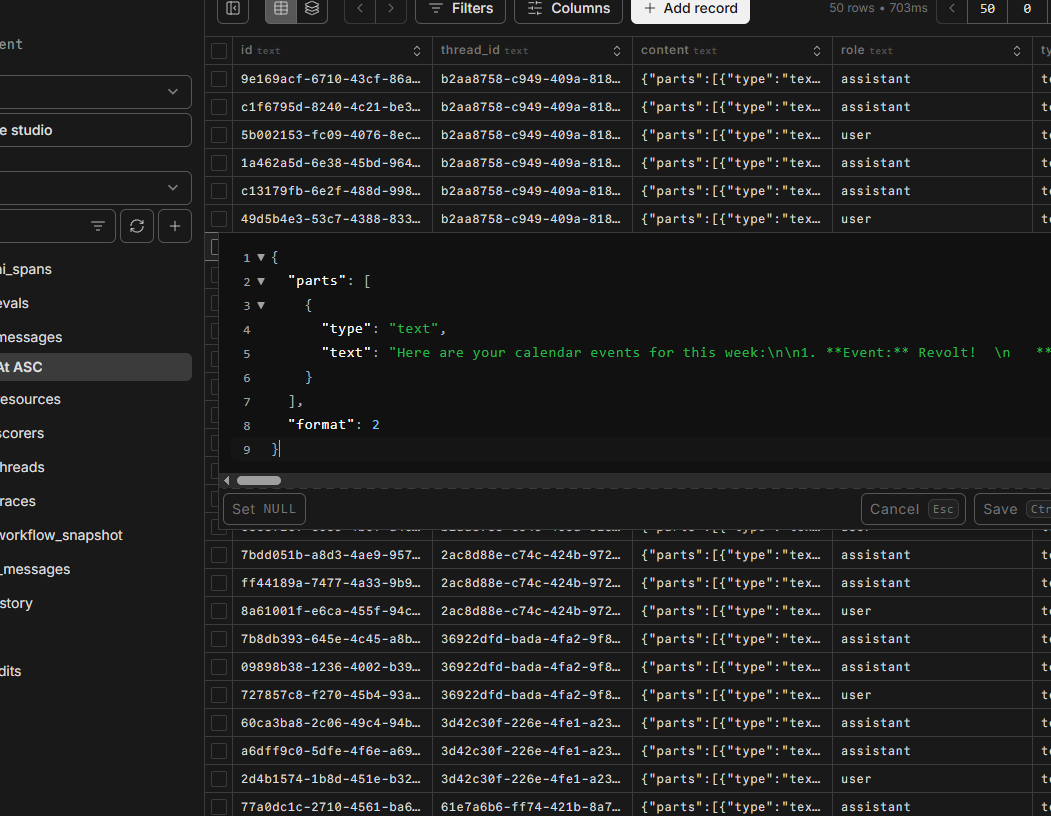

Message 1 (Correct):

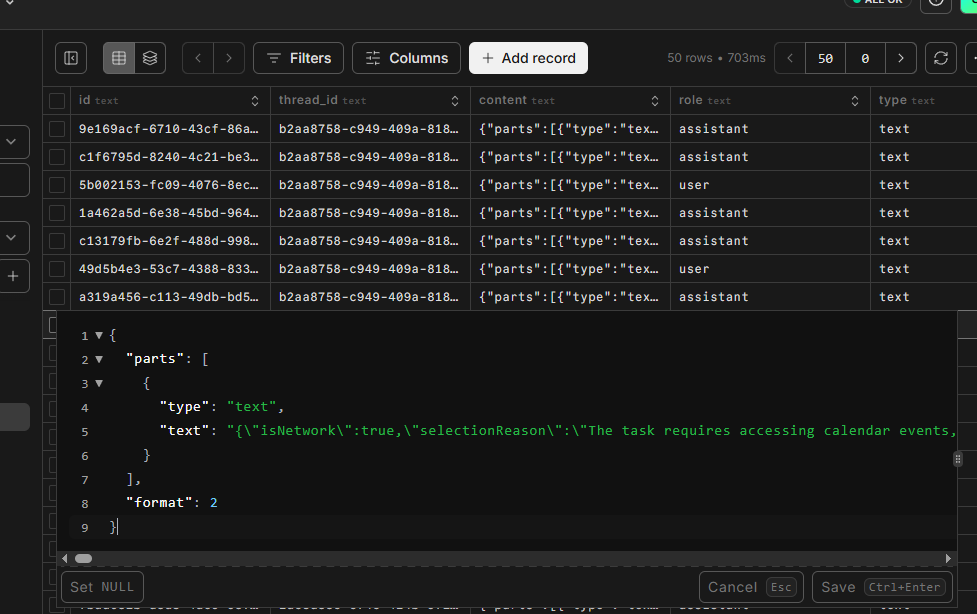

Message 2 (Bug - Internal Metadata):

The second message contains fields like:

- isNetwork

- selectionReason

- primitiveType

- primitiveId

- finalResult

These are clearly internal orchestration metadata, not user-facing content.
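For reference, the metadata message can be described with a TypeScript type plus a runtime guard, based only on the field names listed above. This is a sketch: the name `NetworkRoutingPayload` and the field types (optional strings, an `unknown` finalResult) are assumptions, not Mastra's published types.

```typescript
// Hypothetical shape of the routing-metadata message, inferred from the
// field names observed in mastra_messages. Field types are assumptions.
interface NetworkRoutingPayload {
  isNetwork: true;
  selectionReason?: string;
  primitiveType?: string;
  primitiveId?: string;
  finalResult?: unknown;
}

// Runtime guard: true only for plain objects whose top-level isNetwork is true.
function isNetworkRoutingPayload(value: unknown): value is NetworkRoutingPayload {
  return (
    typeof value === "object" &&
    value !== null &&
    !Array.isArray(value) &&
    (value as { isNetwork?: unknown }).isNetwork === true
  );
}

// Illustrative values only, not real rows from the table above.
const sample: unknown = {
  isNetwork: true,
  selectionReason: "Calendar question",
  primitiveType: "agent",
  primitiveId: "calendarAgent",
};
console.log(isNetworkRoutingPayload(sample)); // true
console.log(isNetworkRoutingPayload({ text: "Hi!" })); // false
```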

---

Reproduction Steps

Setup

Orchestrator Agent:

Chat API Route:

Steps to Reproduce

1. Create an orchestrator agent with agent.network() capability

2. Add sub-agents to the agents property

3. Enable memory with storage + vector configuration

4. Call orchestrator.network() with memory context (thread + resource)

5. Use toAISdkFormat() to transform the stream for UI

6. Check the database after the interaction

Expected: ONE assistant message with the user-friendly response

Actual: TWO assistant messages - one correct, one with internal routing JSON

---

Investigation Results

I've done comprehensive research to rule out implementation errors on my side:

✅ Verified Our Implementation

- No custom handling of isNetwork, selectionReason, primitiveType, or primitiveId in our codebase

- Our code follows the exact pattern from Mastra documentation

- We use the standard toAISdkFormat(networkStream, { from: "network" }) pattern

✅ Checked Documentation

- Reviewed agent.network() reference docs

- Reviewed memory configuration docs

- Checked streaming events documentation

- Found NO mechanism to filter network metadata from being saved

✅ Searched for Similar Issues

- Found GitHub Issue #5782: "Assistant toolcall messages can lead to duplicate responses"

- This describes similar duplicate message storage with memory + tool calls

✅ Confirmed Memory Behavior

From the official docs:

"Memory is required (not optional) when using network(), as it is needed to store the task history."

This confirms that when memory is enabled with network(), everything gets saved, but there should be a distinction between user-facing content and internal orchestration metadata.

---

Expected Behavior

When using agent.network() with memory:

- User messages should be saved ✅

- User-facing assistant responses should be saved ✅

- Internal network routing metadata should NOT be saved ❌

The internal metadata (isNetwork, selectionReason, primitiveType, etc.) should remain in the execution context but should not be persisted to the user's memory.

---

Environment

- Mastra Version: Latest (using @mastra/core, @mastra/memory, @mastra/pg)

- Database: PostgreSQL (via Neon)

- Storage: @mastra/pg with PgVector

- Pattern: agent.network() + toAISdkFormat() + AI SDK v5 streaming

---

Suggested Fix

I believe the issue is in how network() streams data when memory is enabled. The network routing metadata should be:

1. Available to the orchestration system internally

2. NOT included in the messages saved to mastra_messages table

Possible solutions:

- Add a message type filter in memory auto-save to exclude network metadata

- Separate network orchestration data from user-facing content in the stream

- Add a saveMetadata: false option to network() configuration

@Romain @Ward guys, can you confirm whether this is happening on your side as well, or whether it's a bad implementation on my part? I spent some time trying to fix this cleanly, but Claude Code suggests this is a Mastra bug.

My agents are currently not using memory (chat history) because it would probably consume the raw JSON output and burn tons of tokens.

If you can confirm this is actually a bug, I would consider it an important one.

📝 Created GitHub issue: https://github.com/mastra-ai/mastra/issues/9024

This issue was created from Discord post: https://discord.com/channels/1309558646228779139/1429451505647353968

Probably a bug

Hopefully. I tried many different things to make it work; in the end, Claude Code/Cursor and I came to those conclusions.

Those two or three issues are really blocking me from moving forward. Thanks for the support!

🫡 we will get this sorted, thanks for the bug reports, we really appreciate it

This is actually very critical to the functioning of the agent network. The routing agent relies on memory to make routing decisions, and it won't be able to run complex tasks unless it knows which primitives were run before and why.

I don't think this is a bug per se.

Also, a lot of users actually want to see these messages because they are useful in knowing what the network is doing/did.

@Ward I think one should be able to use an output processor or something to exclude these?

Not sure what the right solution is here, but it's definitely on the "fetching side", not a change we should make to whether this gets persisted.

Maybe we need some different API parameters for reading messages in a network context to exclude these
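One shape such read-side parameters could take is sketched below. `ReadHistoryOptions`, `includeNetworkMessages`, and `readHistory` are hypothetical names, not part of Mastra's API. Defaulting the option to `true` keeps the routing agent's view of history unchanged, while a chat UI can opt out; because it only touches the read path, nothing about persistence changes.

```typescript
// Hypothetical option name; Mastra does not document it.
interface ReadHistoryOptions {
  // Defaults to true so existing callers (e.g. routing) still see everything.
  includeNetworkMessages?: boolean;
}

interface StoredMessage {
  id: string;
  role: "user" | "assistant";
  content: string;
}

// True when the content is a JSON object with a top-level isNetwork: true.
function isNetworkContent(content: string): boolean {
  try {
    const parsed: unknown = JSON.parse(content);
    return (
      typeof parsed === "object" &&
      parsed !== null &&
      (parsed as { isNetwork?: unknown }).isNetwork === true
    );
  } catch {
    return false; // not JSON, so an ordinary message
  }
}

// Read path only: returns a copy, never mutates or deletes stored messages.
function readHistory(
  messages: readonly StoredMessage[],
  options: ReadHistoryOptions = {},
): StoredMessage[] {
  const { includeNetworkMessages = true } = options;
  if (includeNetworkMessages) return [...messages];
  return messages.filter((m) => !(m.role === "assistant" && isNetworkContent(m.content)));
}

const stored: StoredMessage[] = [
  { id: "u1", role: "user", content: "Find a slot for lunch" },
  { id: "a1", role: "assistant", content: "Thursday at 12:30 is free." },
  { id: "n1", role: "assistant", content: '{"isNetwork":true,"primitiveType":"agent"}' },
];

console.log(readHistory(stored).length); // 3 (routing view)
console.log(readHistory(stored, { includeNetworkMessages: false }).length); // 2 (chat UI view)
```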

tbh, I find that first output useful as well, but I'm just wondering how to filter it out properly. Maybe it's an issue on my end, but I really tried to make it work.

It's definitely fair feedback 🙂 Just trying to brainstorm the right solution here. We'll need to keep those messages in memory/in the database, but maybe there's something we could do in making it easier to filter these out when you're reading back the conversation history

What all of those messages have in common is that they are JSON structured and they have the isNetwork: true property at the top level
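Since the distinguishing mark is a top-level `isNetwork: true` in JSON content, a check should parse the text rather than search it. The sketch assumes message content is available as a string; `looksLikeNetworkMessage` is a hypothetical helper name. Parsing avoids two pitfalls of a plain substring test for `"isNetwork":true`: it tolerates whitespace such as `"isNetwork": true`, and it ignores the key when it only appears nested or inside ordinary prose.

```typescript
// Hypothetical helper: decides whether a stored message body is network
// routing metadata, using the top-level `isNetwork: true` marker.
function looksLikeNetworkMessage(content: string): boolean {
  const trimmed = content.trim();
  // Cheap pre-check: only a JSON object can carry a top-level isNetwork key.
  if (!trimmed.startsWith("{")) return false;
  try {
    const parsed: unknown = JSON.parse(trimmed);
    return (
      typeof parsed === "object" &&
      parsed !== null &&
      (parsed as { isNetwork?: unknown }).isNetwork === true
    );
  } catch {
    // Starts with "{" but is not valid JSON: treat as an ordinary message.
    return false;
  }
}

console.log(looksLikeNetworkMessage('{"isNetwork": true, "primitiveId": "x"}')); // true
console.log(looksLikeNetworkMessage('{"result": {"isNetwork": true}}')); // false (nested)
console.log(looksLikeNetworkMessage('Set "isNetwork":true in the config')); // false (prose)
```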

How are you reading these back, @! .kinderjaje?

Let's start there, I'm sure we can come up with something 🙂

Let me check. I was about to go to sleep, but I can't turn you guys down; your time is valuable.

I'm sure someone else can help out in your time zone

Go get some sleep and I'll make sure someone follows up with you when the US west coast folks are awake

It's okay, my sleep schedule is already broken.

Here's what my CTO (CC) says:

For reading back conversation history, I'm using memory.query() in my chat page component. Here's the exact code:

This returns all messages and I display them directly in the chat UI.

Since all those network messages have isNetwork: true at the top level, would the right approach be to filter them out after calling memory.query() but before converting to UI messages? Something like filtering the uiMessages array to exclude messages where the content contains "isNetwork":true?

Or is there a parameter I can pass to memory.query() to exclude these automatically?

That could be a quick way around that, yeah

We can think about adding some options perhaps so these can be optionally excluded/included and what that API signature should be, but I think you could move forward with something that just filters these out from the uiMessages there in your sample code
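A minimal sketch of that UI-side filter follows. It assumes the messages have already been converted to an AI SDK v5-style shape (`role` plus a `parts` array with `{ type: "text", text }` entries) and that the routing JSON ends up in a text part; both are assumptions about the conversion, and `ChatMessage` is a local stand-in rather than an import from the AI SDK. Only assistant messages are checked, so a user who pastes JSON is never hidden.

```typescript
// Local stand-in for an AI SDK v5-style UI message (assumed shape).
interface ChatMessage {
  id: string;
  role: "system" | "user" | "assistant";
  parts: Array<{ type: string; text?: string }>;
}

// True when the text is a JSON object with a top-level isNetwork: true.
function isNetworkJson(text: string): boolean {
  try {
    const parsed: unknown = JSON.parse(text);
    return (
      typeof parsed === "object" &&
      parsed !== null &&
      (parsed as { isNetwork?: unknown }).isNetwork === true
    );
  } catch {
    return false;
  }
}

// Returns a new array without assistant messages that carry routing JSON.
// The input array (and whatever is stored in the database) is untouched.
function hideNetworkMessages(messages: ChatMessage[]): ChatMessage[] {
  return messages.filter(
    (m) =>
      !(
        m.role === "assistant" &&
        m.parts.some((p) => p.type === "text" && typeof p.text === "string" && isNetworkJson(p.text))
      ),
  );
}

const uiMessages: ChatMessage[] = [
  { id: "1", role: "user", parts: [{ type: "text", text: "What's on my calendar?" }] },
  { id: "2", role: "assistant", parts: [{ type: "text", text: "You have two meetings today." }] },
  { id: "3", role: "assistant", parts: [{ type: "text", text: '{"isNetwork":true,"primitiveType":"agent"}' }] },
];
console.log(hideNetworkMessages(uiMessages).map((m) => m.id)); // [ '1', '2' ]
```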

Alright, let me see how it goes with filtering this myself.

Hey Tony, before I implement the filtering solution, I want to make sure I understand this correctly because I have some concerns:

Question 1: When Does Network Routing Actually Happen?

The docs say:

"Memory is the core primitive used for any decisions on which primitives to run"

But I'm confused about the timing:

- When a user sends their FIRST message in a new thread, there's NO network routing history in the database yet

- So how can the routing agent rely on memory to make decisions if there's nothing there yet?

- Does the routing decision happen during the network execution (in-memory/on-the-fly), and THEN get saved to the database?

My understanding: The network routing JSON is created AFTER the execution completes, not before. Is this correct?

Question 2: Token Impact - These JSON Responses Are HUGE

Looking at my database, some of these network routing JSON messages are massive, especially when they include full markdown responses.

Example size: Some are 5000+ characters of JSON with nested finalResult objects.

Questions:

1. Are these huge JSON blobs included in the context when the orchestrator reads memory for the NEXT routing decision?

2. If yes, isn't this extremely token-expensive? We'd be sending thousands of tokens of metadata on every routing decision.

3. Does Mastra automatically parse/extract just the relevant parts (like primitiveId, selectionReason) or is the ENTIRE JSON blob sent to the LLM?

Question 3: Double Messages in Database - Is This Expected?

Currently I'm seeing two assistant messages saved for every network interaction:

Message 1:

Message 2:

Questions:

1. Is this intentional behavior by Mastra?

2. Should there really be TWO separate assistant messages in mastra_messages table?

3. Or should the network routing metadata be stored in a different table/field (like metadata column) instead of as a separate message?

Question 4: Filtering Solution - Will This Break Network Routing?

If I filter out the network JSON messages only when displaying in the UI (using memory.query() results):

Questions:

1. When the orchestrator calls network() again for the NEXT user message, does it use memory.query() or does it read from the database directly?

2. If it uses memory.query(), will my UI filtering affect the orchestrator's ability to see routing history?

3. Or does the orchestrator have its own internal way to access ALL messages (including the filtered ones)?

Question 5: What's the Recommended Approach?

Given all the above concerns, what's the best solution?

Option A: Filter on UI side only (what you suggested)

- Pro: Easy to implement

- Con: Concerned about token waste if these huge JSONs are included in every routing decision

Option B: Add a message type/metadata flag

- Store network routing in a separate field or table

- Don't save as assistant messages

Option C: Something else?

Sorry for all the questions, but these network routing JSON messages are really large and I want to make sure we're handling this correctly before implementing. Appreciate your help!

Q1:

The messages are created after every step of the agent network, and the agent network runs for many iterations (in the typical case). Are you running it with just one step?

Q2:

The finalResult will only be stored at the very end of the interaction. This message would be stored by any agent using memory.

Q3:

They are not double messages; there are two assistant messages because one is a step response and the other is just associated metadata.

The finalResult isn't necessarily the same as the response of the last step.

Q4:

It won't break anything, because you are filtering memory that you are using somewhere else, right? Or what exactly is the use case here?

Q5:

Filter whatever comes back, be that on the backend or the UI side, depending on how things are implemented. Calling .filter() on a JavaScript array cannot in any way affect what is stored in the database.

Using network mode on an agent has trade-offs. It uses more tokens, but gains the ability to solve much more complex problems by using multiple primitives and being able to break down problems and execute plans better.

However, if you don't need multiple iterations to get to your desired outcomes, you should probably just be using generate/stream instead of network.

A regular agent can have workflows, agents and tools as well, and those can be used via .stream()/.generate()

If you are using multiple iterations to get to your desired end goal, then most of the questions above aren't concerns at all, more of a necessity.

For simpler cases where you don't need to iterate over multiple steps, the concerns are valid, but .network() isn't necessary.

I need clarification on something critical. Sorry for the iterative follow-up questions, and if this is a dumb question, but I'm a bit in doubt here.

The Problem

I just discovered that the network routing JSON messages are MASSIVE - some are 11KB+ in size.

Example from my database:

This single message contains:

- The entire finalResult with full calendar events

- Complete toolCalls metadata

- The entire messages array including full tool results (all calendar JSON data)

- Everything nested in routing metadata JSON

Critical Question

Are these huge JSON blobs included in the chat history context when the orchestrator reads memory?

My orchestrator has:

If lastMessages: 20 includes these network routing messages, I'm potentially sending:

- 20 messages × 10KB each = ~200KB of routing JSON

- On EVERY user message

- That's thousands of tokens of metadata being sent to the LLM
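The worst case above can be put into rough numbers. This sketch uses the common rule of thumb of about 4 characters per token for English text; that ratio is an assumption, and JSON with many quotes, braces, and IDs often tokenizes less efficiently, so the real count may be higher. It also assumes every one of the 20 messages is a ~10KB routing blob, which overstates a real thread where user messages and short replies are interleaved.

```typescript
// Rough token estimate from a character count (heuristic, not a tokenizer).
function estimateTokens(characters: number, charsPerToken = 4): number {
  return Math.round(characters / charsPerToken);
}

const messagesInContext = 20; // lastMessages: 20
const charsPerMessage = 10_000; // ~10KB per routing message (ASCII: 1 byte ≈ 1 char)
const totalChars = messagesInContext * charsPerMessage;

console.log(totalChars); // 200000
console.log(estimateTokens(totalChars)); // 50000
```

Under these assumptions the worst case is on the order of 50,000 input tokens per request, before the user's message and system prompt are added.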

What I Need to Know

1. Does lastMessages: 20 include these network routing messages, or are they automatically filtered out when building context?

2. If they ARE included, should I drastically reduce lastMessages (e.g., to 5) to avoid massive token waste?

3. Or is there a way to configure memory to exclude these routing messages from context while keeping them in the database for routing decisions?
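Whether Mastra's lastMessages window counts these routing messages is exactly the open question here, and the sketch below does not answer it. It only shows, for anyone building context by hand, why the order of operations matters: filtering before taking the last N yields N conversational messages, while slicing first keeps the routing blobs in the window. `buildContext` and `StoredMessage` are hypothetical names, not Mastra internals.

```typescript
interface StoredMessage {
  id: string;
  role: "user" | "assistant";
  content: string;
}

// True when the content is a JSON object with a top-level isNetwork: true.
function isNetworkContent(content: string): boolean {
  try {
    const parsed: unknown = JSON.parse(content);
    return typeof parsed === "object" && parsed !== null &&
      (parsed as { isNetwork?: unknown }).isNetwork === true;
  } catch {
    return false;
  }
}

// Filter first, then keep the most recent `lastMessages` entries.
function buildContext(history: readonly StoredMessage[], lastMessages: number, excludeNetwork: boolean): StoredMessage[] {
  const candidates = excludeNetwork ? history.filter((m) => !isNetworkContent(m.content)) : history;
  // Guard n <= 0: slice(-0) is slice(0), which would return everything.
  return lastMessages > 0 ? candidates.slice(-lastMessages) : [];
}

function totalChars(messages: readonly StoredMessage[]): number {
  return messages.reduce((sum, m) => sum + m.content.length, 0);
}

// Three turns, each: user question, short answer, ~10KB routing JSON (illustrative).
const history: StoredMessage[] = [];
for (let turn = 1; turn <= 3; turn++) {
  history.push({ id: `u${turn}`, role: "user", content: `question ${turn}` });
  history.push({ id: `a${turn}`, role: "assistant", content: `answer ${turn}` });
  history.push({
    id: `n${turn}`,
    role: "assistant",
    content: JSON.stringify({ isNetwork: true, finalResult: "x".repeat(10_000) }),
  });
}

const withRouting = buildContext(history, 4, false);
const withoutRouting = buildContext(history, 4, true);
console.log(withRouting.map((m) => m.id)); // [ 'n2', 'u3', 'a3', 'n3' ]
console.log(withoutRouting.map((m) => m.id)); // [ 'u2', 'a2', 'u3', 'a3' ]
console.log(totalChars(withRouting) > 20_000, totalChars(withoutRouting)); // true 36
```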

Additional Context

From your earlier message, you said:

"Using network mode on an agent has trade-offs. It uses more tokens"

But I want to make sure I'm not accidentally 10x'ing that token cost by including huge routing metadata in every context window.

Quick Follow-up Question

We also want to mix deterministic and non-deterministic approaches:

- Use agent.network() for complex multi-step tasks that need AI routing

- Use simple agent.generate() or predefined workflows for straightforward tasks (e.g., "send an email")

What's the best architecture for this? Should we:

- Have a higher-level orchestrator that decides: network vs. direct agent?

- Use different API endpoints for complex vs. simple tasks?

- Something else?
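One possible shape for the first option is a thin deterministic router in front of the agents: known, simple intents go straight to a direct handler (e.g. agent.generate() or a predefined workflow, which the maintainer notes can also use tools, agents, and workflows), and anything unmatched falls back to agent.network(). This is a sketch under assumptions: `chooseRoute`, `handleRequest`, the `Handlers` interface, and the regex rules are hypothetical, and the stub handlers stand in for real Mastra calls. A regex table is the simplest stand-in; an explicit UI action or a cheap classifier could replace it.

```typescript
type Route = "direct" | "network";

// Stand-ins for real calls: direct ≈ agent.generate()/workflow run, network ≈ agent.network().
interface Handlers {
  direct: (task: string, intent: string) => Promise<string>;
  network: (task: string) => Promise<string>;
}

// Deterministic rules checked first (illustrative patterns only).
const DIRECT_INTENTS: Array<{ intent: string; pattern: RegExp }> = [
  { intent: "send-email", pattern: /\bsend (an )?email\b/i },
  { intent: "list-events", pattern: /\b(what's|what is) on my calendar\b/i },
];

function chooseRoute(task: string): { route: Route; intent?: string } {
  const match = DIRECT_INTENTS.find((rule) => rule.pattern.test(task));
  return match ? { route: "direct", intent: match.intent } : { route: "network" };
}

async function handleRequest(task: string, handlers: Handlers): Promise<string> {
  const { route, intent } = chooseRoute(task);
  return route === "direct" && intent ? handlers.direct(task, intent) : handlers.network(task);
}

const stubs: Handlers = {
  direct: async (_task, intent) => `direct:${intent}`,
  network: async () => "network",
};

handleRequest("Please send an email to Ana", stubs).then(console.log); // direct:send-email
handleRequest("Plan my week around the offsite", stubs).then(console.log); // network
```

Keeping one endpoint with a router like this lets the same chat thread mix both paths; separate endpoints are equally valid when the UI already knows which kind of task the user started.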

Thanks for your help!