Serverless spins up needless workers during cold boot

I'm running Llama 3.3 70B on vLLM on Serverless. When I have no workers and a new job comes in, Serverless starts a worker. However, even though I'm using cached models and FlashBoot, the worker shows as "running" but doesn't actually start processing any jobs for about 3-4 minutes. During that time, Serverless starts a 2nd and a 3rd worker because of Queue Delay.

However, starting a 2nd and 3rd worker does nothing to help the situation, because they will also take about 3 minutes before they can start accepting jobs. The only thing it does is charge me money for useless workers every single time we go from 0 to 1 (or in this case 2-3) workers.

It seems to me that the Queue Delay should only start counting once a worker is actually processing jobs, not blindly from the moment the job enters the queue. Or, like any other autoscaling solution I've used, we should be able to set an upscale time limit that prevents additional upscales from happening within X minutes of the previous upscale.
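For illustration, the two rules suggested above could look roughly like this. This is a minimal sketch, not how Serverless actually decides to scale: `ScaleState`, `should_upscale`, and the parameters `queue_delay_s` and `cooldown_s` are hypothetical names, and the default values are placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScaleState:
    workers: int = 0                         # workers started (ready or still booting)
    ready_workers: int = 0                   # workers that can actually accept jobs
    last_upscale_at: Optional[float] = None  # time of the most recent upscale, in seconds


def should_upscale(state: ScaleState, oldest_job_wait_s: Optional[float], now: float,
                   queue_delay_s: float = 4.0, cooldown_s: float = 240.0) -> bool:
    """Return True if one more worker should be started (hypothetical policy)."""
    if oldest_job_wait_s is None:
        return False  # nothing in the queue
    if state.workers == 0:
        return True   # cold start: the first worker is always needed
    if state.ready_workers < state.workers:
        return False  # a worker is still booting; another one would not be ready any sooner
    if state.last_upscale_at is not None and now - state.last_upscale_at < cooldown_s:
        return False  # still inside the upscale cooldown window
    return oldest_job_wait_s > queue_delay_s


# Simulate one job waiting through a 3-minute cold boot, polled every 10 seconds.
state = ScaleState()
started = 0
for tick in range(0, 181, 10):
    now = float(tick)
    if should_upscale(state, oldest_job_wait_s=now, now=now):
        state.workers += 1
        state.last_upscale_at = now
        started += 1
print(started)  # prints 1: only the first worker is started during the cold boot
```

The "still booting" check is what keeps 0 to 1 from turning into 0 to 3, while a short `queue_delay_s` can still drive a quick 1 to 2 upscale once the first worker is ready. The cooldown is a separate, blunter limit and could be set low or to zero if the booting check is enough.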

Are there any workarounds? Obviously I don't want to set my queue delay to 4 minutes, because if I'm going from 1 to 2 workers, I would want to scale up sooner. Is this a known issue? Are there any planned features to address it?

4 Replies

Currently I have 0 jobs waiting in queue, but 3 running workers. And these workers are not cheap, so this is costing us significant money for no reason!

If workers could really start and begin processing jobs within 1-2 seconds, this wouldn't be an issue either. But that is not the case. It also means I have to use a longer idle timeout, because once a worker shuts down, new jobs coming in face a long delay while I wait for a worker to start accepting jobs.

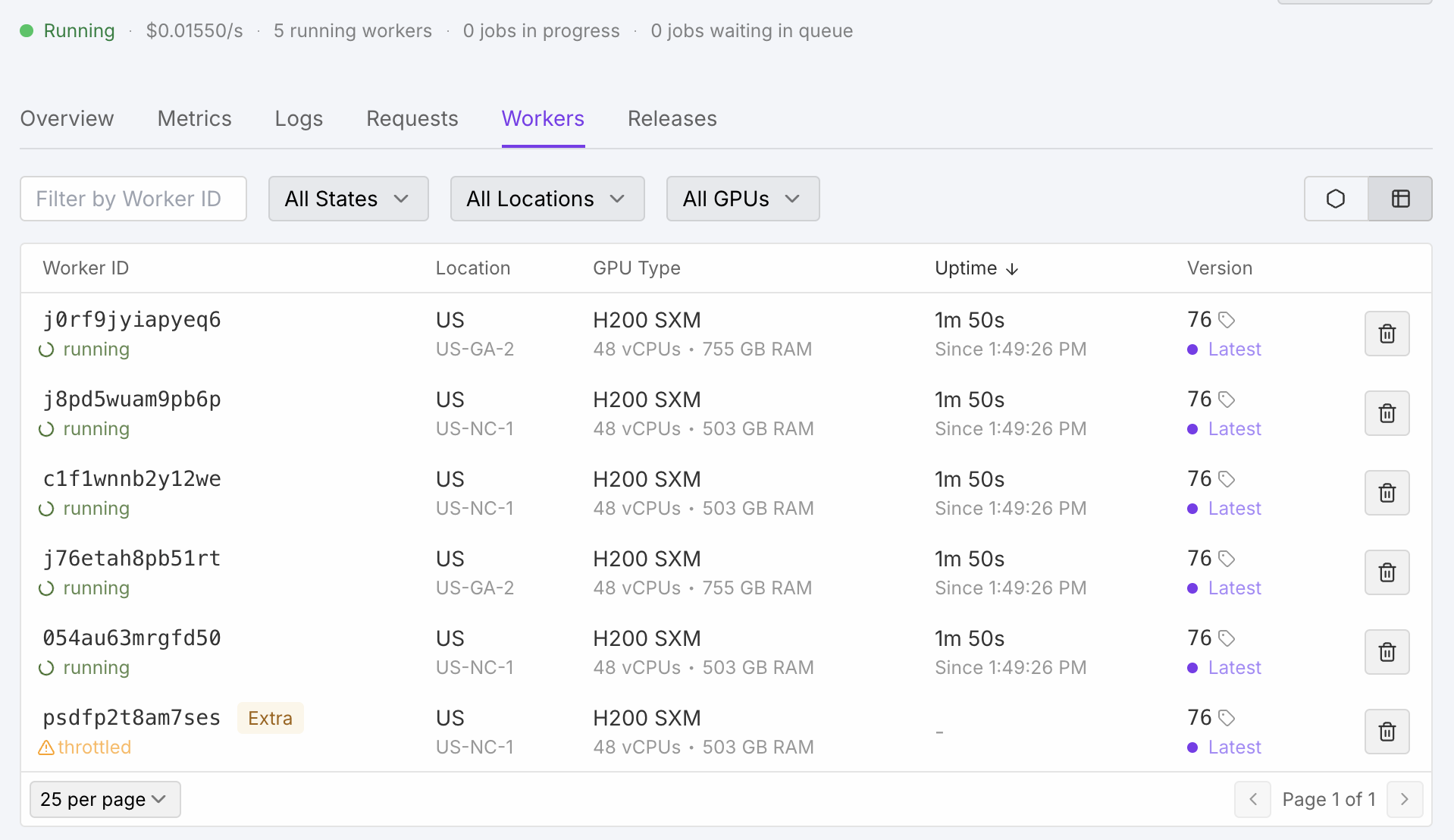

What the heck? It just started 5 workers and I have 0 jobs in queue...

It looks like you were getting a little bit of traffic around the time of your reports? All but one worker on this endpoint is idle.

I terminated them all. It happens whenever a job comes in. I explained it all above.

I mean, if you need me to leave them all up next time it happens, I can do that. But it's pretty easy to understand and reproduce. And if I leave the workers running, I'm getting charged for them. So whenever I see it happening, I usually terminate them manually to stop being charged for workers I don't need.

I took the screenshot to show the issue before terminating them.

"Little bit of traffic" is the key phrase here: definitely not enough that we needed more than 1 worker to service it.