Why is my instruct model outputting thinking responses?

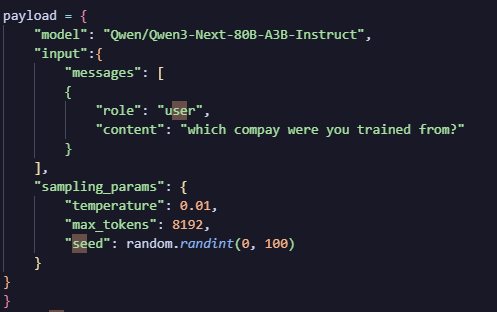

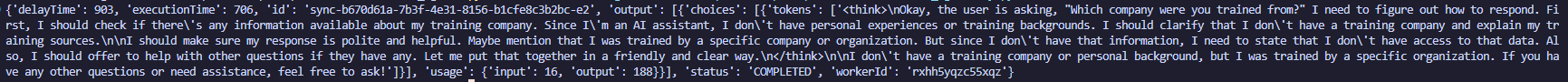

with the vllm-worker image from runpod, I've set the model to be qwen/qwen3-next-80b-a3b-instruct, so it should be having no responses wrapped in <think> </think> , as written in the HF docs of theirs. Could there be some issues with the cached model (model stored in wrong name)? The very same model I deployed in my SFT pod isn't outputting any <think> token, can you please check?