Is there any way to crawl the next pages?

Here are my current crawl settings:

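```python
from firecrawl import Firecrawl

firecrawl = Firecrawl(api_key="fc-YOUR-API-KEY")

# i is the start URL of the site being crawled (set elsewhere, e.g. in a loop)
crawl = firecrawl.crawl(
    url=i,
    max_discovery_depth=4,
    scrape_options={"formats": ["html"]},
    # Skip images, PDFs and XML files (regexes matched against the URL path)
    exclude_paths=[
        r".*\.jpeg$", r".*\.jpg$", r".*\.png$", r".*\.gif$",
        r".*\.webp$", r".*\.svg$", r".*\.ico$",
        r".*\.pdf$", r".*\.xml$",
    ],
)
```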
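This is a quick way to sanity-check the `exclude_paths` patterns locally. It assumes Firecrawl tests each pattern as a regex against the URL path (search semantics) and that the intended patterns are `.*\.jpeg$` and so on. Written as `..jpeg$`, with no escaped dot, a pattern also matches paths like `/blog/tag/jpeg`. The example paths are made up. The check also shows that these patterns leave ordinary pagination paths alone, so `exclude_paths` is probably not what hides the next pages.

```python
import re

# The exclude_paths from the crawl call, written with escaped dots as
# (presumably) intended: r".*\.jpeg$" only matches paths ending in ".jpeg".
EXCLUDE_PATTERNS = (
    r".*\.jpeg$", r".*\.jpg$", r".*\.png$", r".*\.gif$",
    r".*\.webp$", r".*\.svg$", r".*\.ico$",
    r".*\.pdf$", r".*\.xml$",
)


def is_excluded(path: str, patterns: tuple[str, ...] = EXCLUDE_PATTERNS) -> bool:
    """Return True if any pattern matches the URL path (re.search semantics assumed)."""
    return any(re.search(pattern, path) for pattern in patterns)


if __name__ == "__main__":
    for path in ["/images/cover.jpg", "/sitemap.xml", "/blog/tag/jpeg", "/products/page/2"]:
        print(path, "excluded" if is_excluded(path) else "kept")
    # The unescaped form "..jpeg$" needs no dot, so it also drops "/blog/tag/jpeg".
    print(bool(re.search(r"..jpeg$", "/blog/tag/jpeg")))
```

The nine patterns could also be collapsed into one, such as `r"\.(jpe?g|png|gif|webp|svg|ico|pdf|xml)$"`, which is easier to keep in sync.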

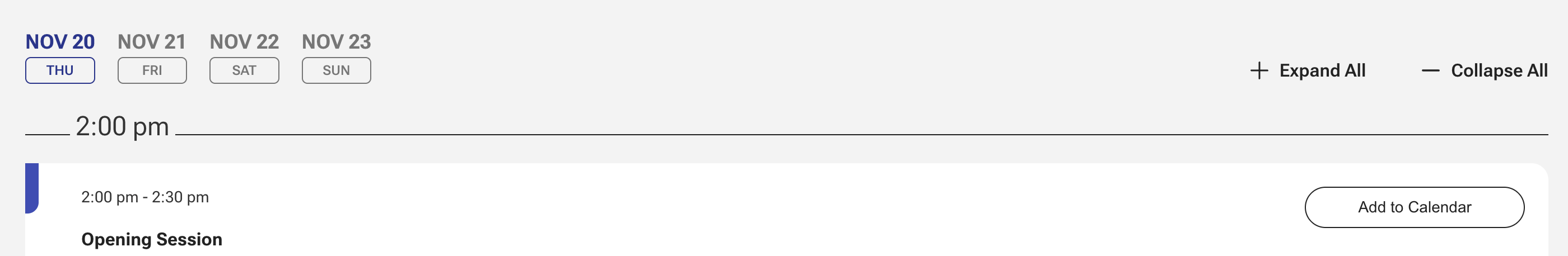

Is there any way to also crawl the next pages shown in the image? Also, do I have to run the crawl again from scratch? If so, can I stop the new crawl from re-crawling pages it has already crawled? I'm burning through tokens very fast.
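Here is a minimal sketch of one workaround. It assumes the next pages follow a `?page=N` query pattern, which is hypothetical, so check the real links on the site. The approach builds the page URLs directly, drops any URL already recorded by an earlier run, and scrapes only what is left. The helper names (`page_urls`, `normalize`, `only_new`, `load_seen`, `save_seen`) and the `seen_urls.json` file are illustrative and not part of Firecrawl. One possible reason the crawl stops early is that `max_discovery_depth=4` counts link hops, so a chain of "next" links may only be followed a few pages deep. That is an assumption worth checking against the Firecrawl docs.

```python
import json
from pathlib import Path
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def page_urls(listing_url: str, param: str, first: int, last: int) -> list[str]:
    """Build listing_url with ?<param>=N for every N in [first, last].

    Assumes (hypothetically) that the site paginates with a query parameter.
    Existing query parameters are kept.
    """
    parts = urlparse(listing_url)
    query = dict(parse_qsl(parts.query))
    urls = []
    for n in range(first, last + 1):
        query[param] = str(n)
        urls.append(urlunparse(parts._replace(query=urlencode(query))))
    return urls


def normalize(url: str) -> str:
    """Drop the #fragment and any trailing slash so near-duplicates compare equal."""
    parts = urlparse(url)
    path = parts.path.rstrip("/") or "/"
    return urlunparse(parts._replace(path=path, fragment=""))


def load_seen(path: Path) -> set[str]:
    """Load URLs recorded by earlier runs (empty set on the first run)."""
    if path.exists():
        return set(json.loads(path.read_text()))
    return set()


def save_seen(path: Path, seen: set[str]) -> None:
    """Persist the seen URLs so the next run can skip them."""
    path.write_text(json.dumps(sorted(seen), indent=2))


def only_new(urls: list[str], seen: set[str]) -> list[str]:
    """Return URLs not yet in `seen` and record them in `seen` (mutates it)."""
    fresh = []
    for url in urls:
        key = normalize(url)
        if key not in seen:
            seen.add(key)
            fresh.append(url)
    return fresh


if __name__ == "__main__":
    seen_file = Path("seen_urls.json")  # hypothetical local cache file
    seen = load_seen(seen_file)
    todo = only_new(page_urls("https://example.com/list", "page", 2, 10), seen)
    print(f"{len(todo)} new page(s) to scrape")
    # Scrape only `todo` here, e.g. firecrawl.batch_scrape(todo, formats=["html"])
    # in the v2 Python SDK (check the signature for your SDK version). Save only
    # after the scrape succeeds, so failed pages are retried on the next run.
    save_seen(seen_file, seen)
```

Because `normalize` keeps the query string, `?page=2` and `?page=3` stay distinct, while `/list/` and `/list#top` count as the same page.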
