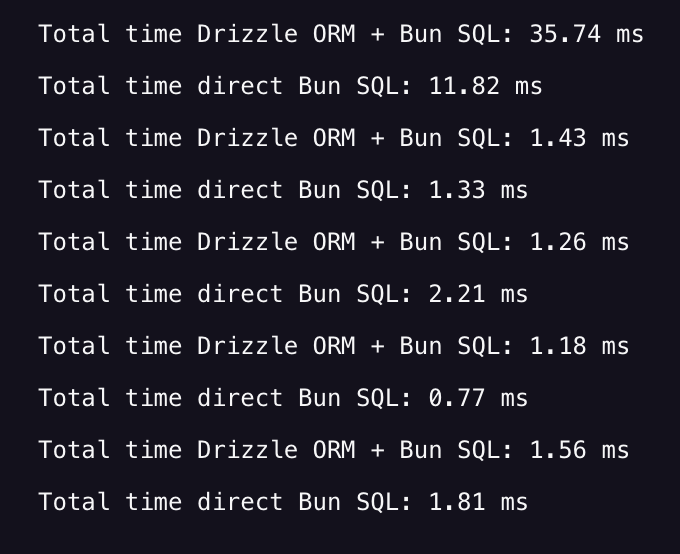

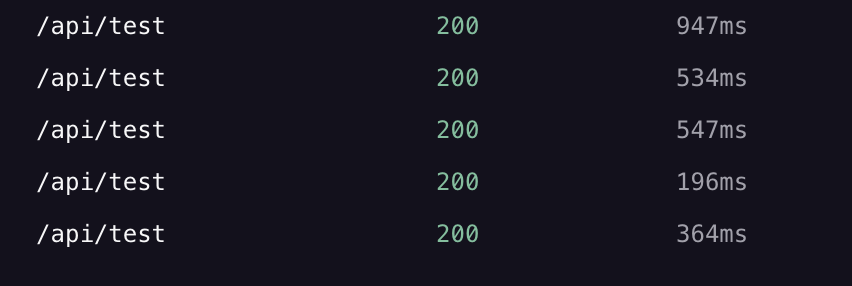

Server Routes are quite slow on Railway with the Bun preset.

I have a simple test API route that makes two serial DB calls. Together they take about 2 ms, but the HTTP logs show a total duration of over 200 ms. Is anyone else facing this, or has anyone run into something similar?
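One way to check whether the extra ~200 ms is paid on every request or only on the first one (for example, while a connection is being set up) is to time several sequential requests from a client and compare the first sample with the median of the rest. This is a minimal sketch, assuming a runtime with global `fetch` and `performance` (Bun or Node 18+); `measureLatency` and `median` are hypothetical helpers, not part of any library.

```ts
// Hypothetical helper: sends `count` sequential GET requests to `url` and
// returns each client-observed round-trip time in milliseconds.
async function measureLatency(url: string, count: number): Promise<number[]> {
  const samples: number[] = [];
  for (let i = 0; i < count; i++) {
    const start = performance.now();
    const res = await fetch(url);
    // Read the whole body so the sample covers the complete response.
    await res.arrayBuffer();
    samples.push(performance.now() - start);
  }
  return samples;
}

// Median of a non-empty list; less sensitive to one slow outlier than the mean.
function median(values: number[]): number {
  if (values.length === 0) throw new Error("median of an empty list");
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2
    : sorted[mid];
}
```

For example, `measureLatency("https://your-app.example/api/test", 20)` (the URL is a placeholder) followed by `median(samples.slice(1))` on the returned samples. Client-side times also include the network path from wherever the script runs, so they will be somewhat higher than the server-side log duration. A consistently high median would suggest per-request overhead outside the two queries, while a slow first sample alone would suggest a warm-up cost; either reading is a hypothesis to confirm against the HTTP logs.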

This is my API route

```ts
import { env } from "@/env/server";
import { db } from "@/lib/db";
import { createFileRoute } from "@tanstack/react-router";
import { json } from "@tanstack/react-start";
import { SQL } from "bun";
import { sql } from "drizzle-orm";

// Second client that talks to the same database through Bun's built-in SQL
// driver directly, bypassing Drizzle, for comparison.
const directClient = new SQL(env.DATABASE_URL);

export const Route = createFileRoute("/api/test")({
  server: {
    handlers: {
      GET: async () => {
        // Query 1: through Drizzle ORM (on top of Bun SQL)
        const start = performance.now();
        const result = await db.execute(sql`SELECT 1 as id`);
        console.log(
          "Total time Drizzle ORM + Bun SQL:",
          (performance.now() - start).toFixed(2),
          "ms",
        );

        // Query 2: directly through Bun SQL
        const start2 = performance.now();
        const directResult = await directClient`SELECT 1 as id`;
        console.log(
          "Total time direct Bun SQL:",
          (performance.now() - start2).toFixed(2),
          "ms",
        );

        return json({ result, directResult });
      },
    },
  },
});
```
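To see how much of the logged duration is actually spent inside the handler, each step can be timed and reported back in a `Server-Timing` response header, which browser developer tools show in the request's timing details. This is a minimal sketch; `timed`, `serverTimingHeader`, and the metric names are hypothetical helpers, not part of TanStack Start, Drizzle, or Bun.

```ts
// One named step and how long it took, in milliseconds.
type Timing = { name: string; ms: number };

// Hypothetical helper: awaits `fn`, records its duration under `name`
// (even if it throws), and passes the result through unchanged.
async function timed<T>(
  timings: Timing[],
  name: string,
  fn: () => PromiseLike<T>,
): Promise<T> {
  const start = performance.now();
  try {
    return await fn();
  } finally {
    timings.push({ name, ms: performance.now() - start });
  }
}

// Formats the recorded steps as a Server-Timing header value in the form
// "name;dur=milliseconds", with multiple entries separated by ", ".
function serverTimingHeader(timings: Timing[]): string {
  return timings.map((t) => `${t.name};dur=${t.ms.toFixed(2)}`).join(", ");
}
```

Inside `GET`, each awaited query can be passed to `timed` as a callback, the handler's own start-to-finish time can be pushed as one more entry, and the response returned with `Response.json(body, { headers: { "Server-Timing": serverTimingHeader(timings) } })`. If the handler total stays around 2 ms while the logs still report 200 ms or more, the remaining time is being spent outside the handler (routing, proxying, or the network path), which narrows down where to look.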