502 Error - Serverless Endpoint (id: z2v5nclomp5ubo) 1.1.4 Infinity Embeddings

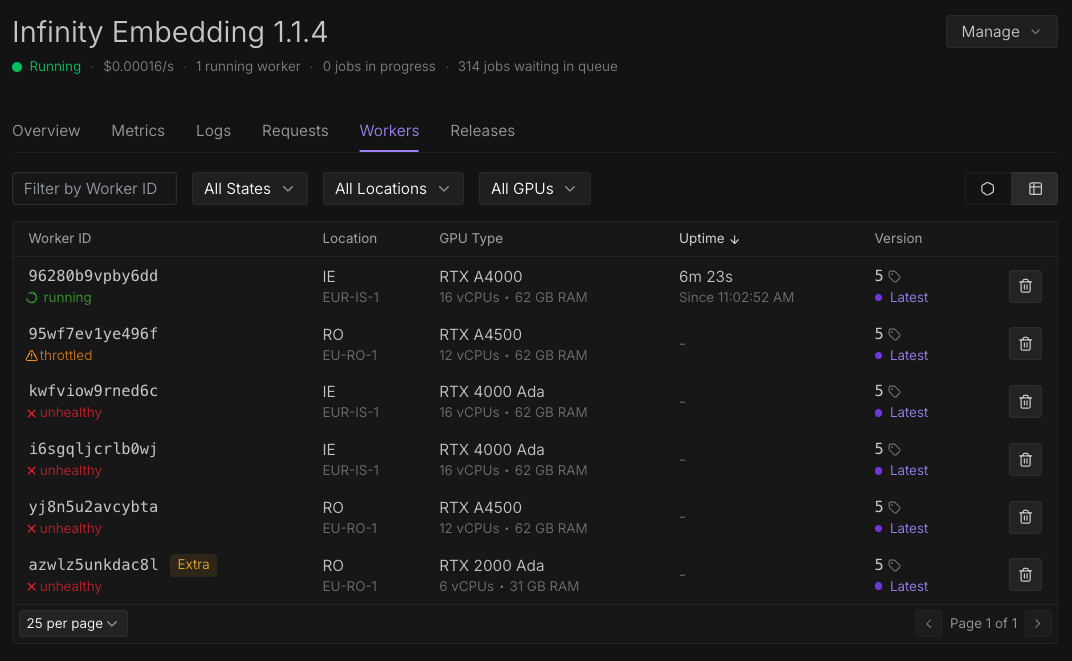

It seems my pipelines that leverage RunPod Serverless Infinity Embeddings are unable to requisition a pod, likely due to volume? All of the workers show a status of Initializing or Throttled permanently in the UI. However, when I check the GPU configuration, I have selected 4 valid GPUs, all of which are either "Medium Supply" or "High Supply".

I end up getting a 502 error in my pipeline in response to my request. Based on my research, this appears to be the RunPod load balancer failing to find a backend pod that it can send jobs to. Combined with the Throttled / Initializing messages in the UI, that makes me think the RunPod service is overwhelmed. Is there a plan to improve the reliability of the service? I could also easily be missing something in my configuration, though it is essentially the Infinity Embeddings 1.1.4 quick start offered through the RunPod UI, running BAAI/BGE-m3 with minimal additional configuration.

Is there a recommendation for how I can use a fallback service, an additional endpoint, anything from RunPod so that it can have time to self heal?
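To make the fallback idea concrete, here is a minimal sketch of what I have in mind on the client side, not anything RunPod provides. Everything in it is hypothetical: `embed_with_fallback`, `EmbeddingUnavailable`, and the backend callables are illustrative names, and each backend is assumed to be a function that raises `EmbeddingUnavailable` when its endpoint returns something like a 502.

```python
import time
from typing import Callable, Sequence

# Hypothetical signature: a backend takes texts and returns one vector per text.
Embedder = Callable[[Sequence[str]], list[list[float]]]


class EmbeddingUnavailable(Exception):
    """Raised by a backend when it cannot serve the request (e.g. HTTP 502)."""


def embed_with_fallback(
    texts: Sequence[str],
    backends: Sequence[tuple[str, Embedder]],
    retries_per_backend: int = 2,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[str, list[list[float]]]:
    """Try each backend in order and return (backend_name, embeddings)
    from the first one that succeeds."""
    errors: list[str] = []
    for name, embed in backends:
        for attempt in range(retries_per_backend):
            try:
                return name, embed(texts)
            except EmbeddingUnavailable as exc:
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                # Exponential backoff before retrying the same backend.
                if attempt + 1 < retries_per_backend:
                    sleep(base_delay_s * 2 ** attempt)
    raise EmbeddingUnavailable("all backends failed: " + "; ".join(errors))
```

The primary entry in `backends` would wrap the existing endpoint and the second entry would wrap a spare endpoint or another provider, so the pipeline keeps working while the primary recovers.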

19 Replies

@Dj here you go. Thank you for your help!

Thank you! I just need the endpoint id. I wanted to check your supply settings to make sure we're reporting the high/medium status right

It's in the title of my post: z2v5nclomp5ubo

Oh haha sorry I didn't look up that high :fbslightsmile:

One second!

No problem 😉

I have a suspicion this is related to the model caching feature but it's not super obvious to me. Someone from Support will probably follow up if I can't figure it out.

If you have a model selected in the UI for preloading I would try starting the worker without it.

@Dj removing MODEL_NAMES from the env variables appears to have worked, but this will increase our cold start times, correct?

Yes, but it's good to have the issue confirmed. Let me grab a few people's attention.

Thank you @Dj this is a good solve process, no problems 🙏

My containers require the MODEL_NAMES env variable

I am going to create a new endpoint and change the id. I won't delete this one so you guys can investigate on it

Here are the overall combined logs for the endpoint. I do need to reduce the max allotted workers on this endpoint to set up the one for our work, so in case that deletes containers this might help you avoid having to repro the issue or dig deeper than necessary

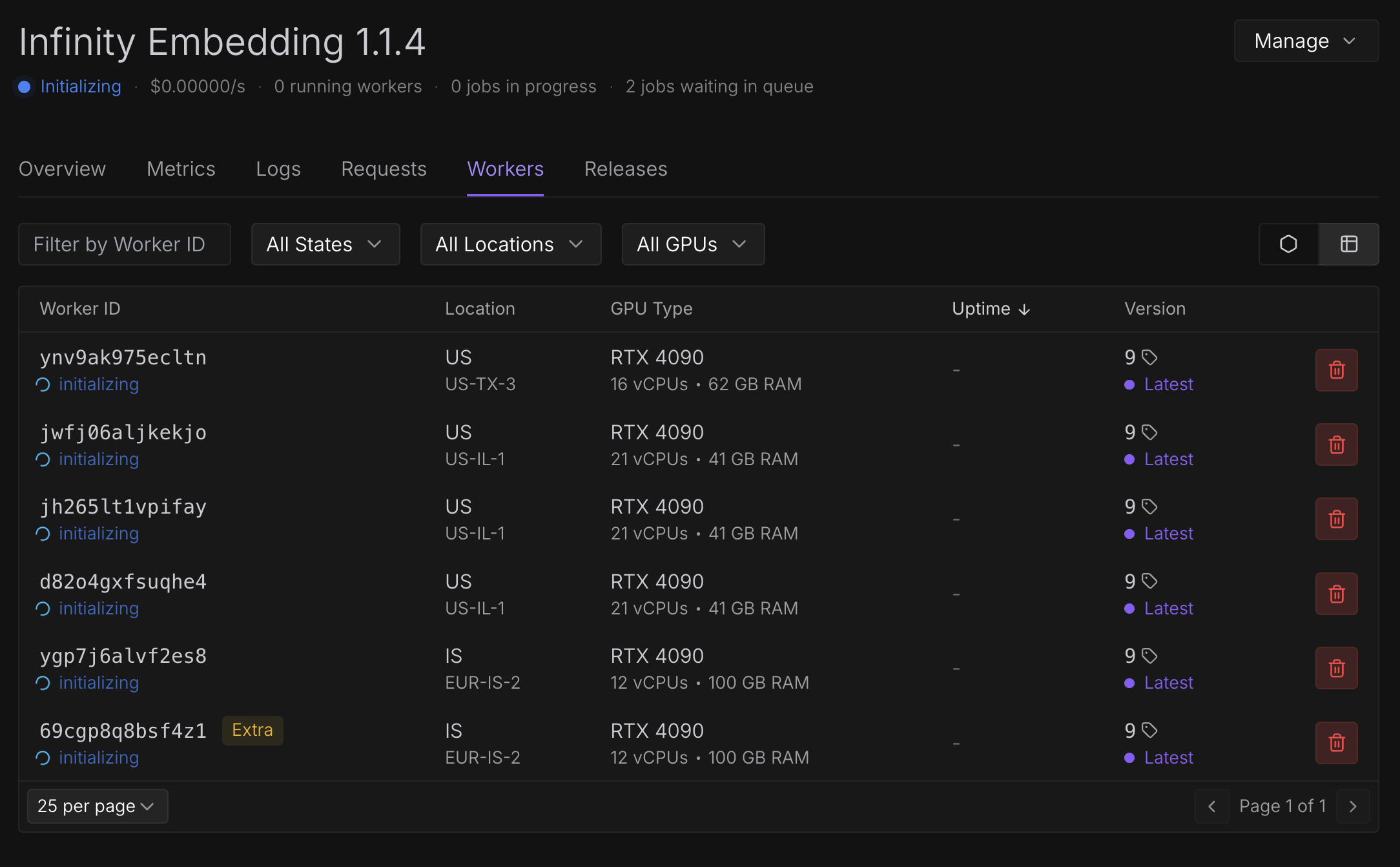

@Dj I made a new endpoint with id: v1xlb0aeduu217. This one is not able to get past Initializing either when using the out-of-the-box Infinity 1.1.4 image. The default registry is the RunPod registry. I am now trying to launch the Infinity embeddings container directly from Docker Hub: michaelf34/infinity:0.0.77-trt-onnx

None of the solutions I tried worked. We are going to need to support another neocloud using an adapter pattern so that we can go live, but I am wondering if it's clear what the issue here is? It's totally fine if you guys need more time, but we need to evaluate our vendors for stability, and I want to make sure I know what's going on and whether it's my mistake.

I sent you a DM explaining the issue and a solution.

Repeating what I said there, there are two problems here: our automatic parsing of MODEL_NAMES not being compatible with your format, and a bug in what we call machine prioritization. We've released a fix on our side, and you should be fine (pending the format change).

The problem doesn't seem to be resolved. One worker did start running, which freed me from the task for a while, but that one also crashed. Now, after cycling all of the workers, they are all stuck on initialization again.

The logs are also deceptive. Within the worker it says "worker is ready", but the workers never take jobs from the queue or leave the Initializing state.