Mastra

The TypeScript Agent Framework

From the team that brought you Gatsby: prototype and productionize AI features with a modern JavaScript stack.

How to access sub-agent tool results using AI SDK.

[Resolved] - Documentation: Inconsistency in website and code?

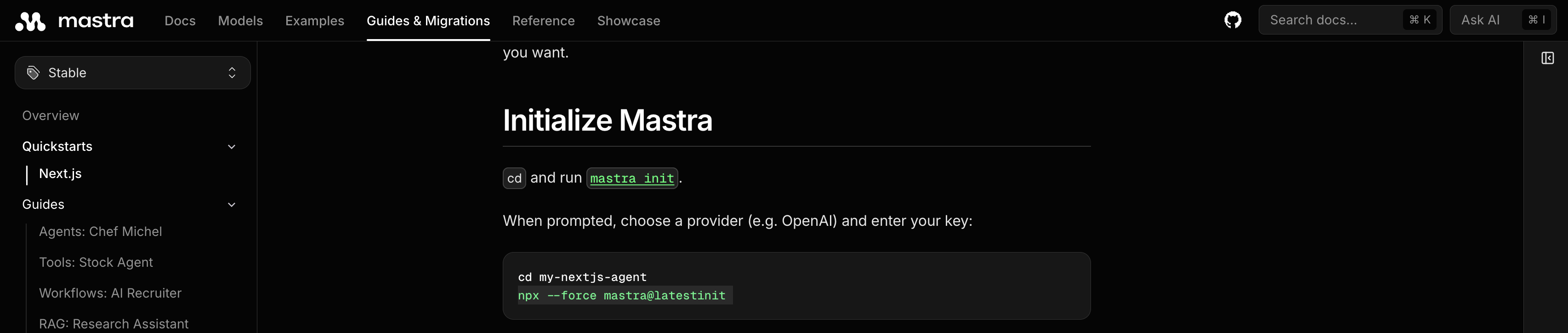

`npx --force mastra@latest init` on the website wasted a ton of my time...

Entry/Wrapper needs memory so that other agents downstream can have memory, why?

entryWorkflow with 2 agents being called. AgentA has memory and AgentB does not.

Can we have a conversational chat with this setup? From what I have found, a workflow cannot carry previous context, whether or not the agents in it have memory. Is that right?

To resolve that, I had to introduce a wrapperAgent with memory, which now calls my entryWorkflow. But the wrapperAgent does not just call the workflow and return its raw output. After a few chats, it starts interpreting the output and sometimes appends previous answers to the new result, which breaks stability. If I remove memory from the wrapperAgent to stabilize it, so that it returns the execution output without any previous context, then the downstream agentA for some reason does have the context of the previous conversation...

I want to assign agent_id to the span of `chat {model}` as well.

Streaming from a workflow step when using Inngest

workflowRoute with patched streaming support as described in this post.

This works fine when using Mastra standalone and consuming it with AI-SDK on the frontend.

...

Access AI Tracing of a particular trace ID programmatically without Mastra Studio

The resourceId and threadId for chatRoute and copilotkit integration should be able to contain authe

Mastra wasn't able to build your project. Please add ... to your externals

Dynamically Reload Prompt/Agents

chatRoute + useChat stream unstable

Langfuse integration via mastra/langfuse - tags and cached token count

chatRoute + useChat not working for tool suspension in @mastra/ai-sdk@beta

chatRoute with @ai-sdk useChat hook, tool suspension events (`tool-call-suspended`) are not emitted, causing the frontend to never receive them.

This makes HITL (Human-in-the-Loop) flows impossible with the...

Tracing prompts with Langfuse

GeminiLiveVoice: Tool calls work but args always empty - Expected behavior?

Cannot send base64 images to Gemini in Mastra v1.0 Beta [Solved]

pnpm create mastra@beta.

```
"dependencies": {
  "@mastra/core": "1.0.0-beta.2",
  "@mastra/evals": "1.0.0-beta.0",
  ...
```

Nested Branching

Parallel Steps `writer.custom` throwing writer is locked

PineconeVector not assignable to MastraVector<VectorFilter> in Mastra configuration

PineconeVector instance into the main Mastra instance under vectors throws this TypeScript error:

Type 'PineconeVector' is not assignable to type 'MastraVector<VectorFilter>'.

Type 'PineconeVector' is not assignable to type 'MastraVector<VectorFilter>'.

Firebase Auth - Dev Bypass