Mastra

The TypeScript Agent Framework

From the team that brought you Gatsby: prototype and productionize AI features with a modern JavaScript stack.

Dynamically Reload Prompt/Agents

chatRoute + useChat stream unstable

Langfuse integration via mastra/langfuse - tags and cached token count

chatRoute + useChat not working for tool suspension in @mastra/ai-sdk@beta

With chatRoute and the @ai-sdk useChat hook, tool suspension events (tool-call-suspended) are not emitted, so the frontend never receives them. This makes HITL (Human-in-the-Loop) flows impossible with the...

Tracing prompts with Langfuse

GeminiLiveVoice: Tool calls work but args always empty - Expected behavior?

Cannot send base64 images to Gemini in Mastra v1.0 Beta [Solved]

pnpm create mastra@beta.

```
"dependencies": {
  "@mastra/core": "1.0.0-beta.2",
  "@mastra/evals": "1.0.0-beta.0",...
```

Nested Branching

Parallel Steps `writer.custom` throwing writer is locked

PineconeVector not assignable to MastraVector<VectorFilter> in Mastra configuration

PineconeVector instance into the main Mastra instance under vectors throws this TypeScript error:

Type 'PineconeVector' is not assignable to type 'MastraVector<VectorFilter>'.

Firebase Auth - Dev Bypass

Struggling to deploy to Mastra Cloud

Build Error When Upgrading Beyond Mastra v0.12.3

"mastra": "^0.12.3", but any version after that results in the following error:

Error:...

How to debug runtime server issues

Azure Voice setup + outdated doc

Error: HTTP error! status: 500 - {"error":"Speech recognition failed: NoMatch - ","stack":"Error: Speech recognition failed: NoMatch - \n

here is my code: ...

How to stop agent midway during stream while preserving the output streamed till that point

Streaming Workflow Using Copilot Kit

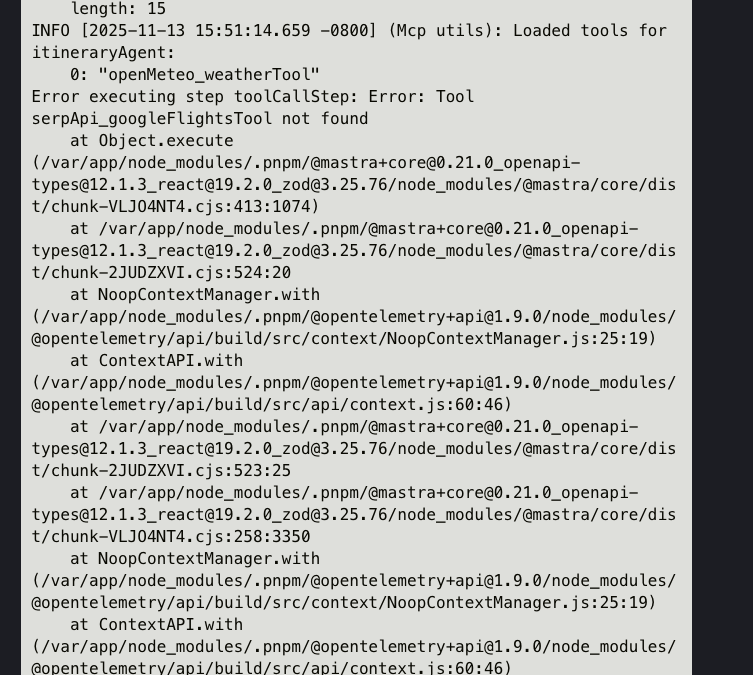

Why does the step Call execution tell me the tool is not found?

Circular dependency issue

mastra build command.