Am I billed for any of this?

Unauthorized while pulling image for Faster Whisper Template from Hub

ComfyUI Serverless Worker CUDA Errors

CUDA error in ComfyUI

Workflow execution error: Node Type: CLIPTextEncode, Node ID: 10, Message: CUDA error: no kernel image is available for execution on the device
CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
For debugging consider passing CUDA_LAUNCH_BLOCKING=1
Compile with TORCH_USE_CUDA_DSA to enable device-side assertions.
...

Is there any way to force stop a worker?

Long delay time

Can't Create 5090 Endpoint via REST APIs

{"error":"create endpoint: create endpoint: graphql: gpuId(s) is required for a gpu endpoint","status":500}

Workers download and extract Docker images again and again instead of loading them from cache

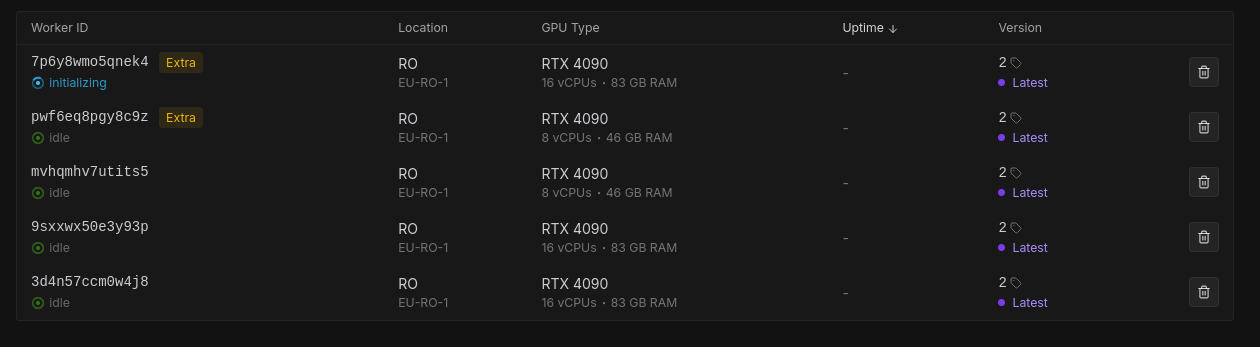

Getting worker IDs via the API?

Runpod Serverless with Wan 2.2

Why is Serverless 2x more expensive than a normal pod?

Bad performance on Runpod

Serverless with max 2 workers and a 5s idle timeout: queue delay, and 4-6 idle workers are created and never terminated

Worker went idle before it finished downloading my Docker image

Add more worker restriction options?

Delay Time spikes via the public API; the same worker's next job is ~2s

Delay time of 120,000 ms?

Terraform provider or alternative deployment strategy?