Unable to create MI300x pod

I was able to create one about a week ago, when I was using the pods to write some ROCm code. Now when I try, I get a transient UI error message.

Determining a fit for my needs.

Hello, I currently have an Ubuntu VM with GPU support, a static IP and storage with Vultr.

The project is still in development, so it’s not really using any resources (no clients).

The client iPhone and Android apps will offload GPU processing to the backend GPU-enabled server....

Running out of memory

Hi, the OG kohya template from RunPod was taken down and not replaced, so now I'm using the InvokeAI template. I can't complete any training because it keeps crashing when it runs out of memory. I've never had this happen before.

...

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 512.00 MiB. GPU 0 has a total capacty of 23.54 GiB of which 303.12 MiB is free. Process 2505369 has 384.00 MiB memory in use. Process 2505421 has 7.50 GiB memory in use. Process 2521763 has 15.35 GiB memory in use. Of the allocated memory 14.30 GiB is allocated by PyTorch, and 581.12 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
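The per-process figures in the message above suggest that most of the card is held by other processes, not only by the one that failed. A minimal Python sketch, assuming the message format shown above (the helper name `summarize_oom` is hypothetical, not part of PyTorch), that totals those figures:

```python
import re

def summarize_oom(message: str) -> dict:
    """Map each PID named in a PyTorch OOM message to its memory use in GiB."""
    to_gib = {"MiB": 1 / 1024, "GiB": 1.0}
    usage = {}
    pattern = r"Process (\d+) has ([\d.]+) (MiB|GiB) memory in use"
    for pid, amount, unit in re.findall(pattern, message):
        usage[int(pid)] = float(amount) * to_gib[unit]
    return usage

# Excerpt of the error text quoted in the post above.
message = (
    "GPU 0 has a total capacty of 23.54 GiB of which 303.12 MiB is free. "
    "Process 2505369 has 384.00 MiB memory in use. "
    "Process 2505421 has 7.50 GiB memory in use. "
    "Process 2521763 has 15.35 GiB memory in use."
)

usage = summarize_oom(message)
# List the largest consumers first.
for pid, gib in sorted(usage.items(), key=lambda kv: -kv[1]):
    print(f"PID {pid}: {gib:.3f} GiB")
print(f"Held by listed processes: {sum(usage.values()):.3f} GiB")
```

If the listed PIDs turn out to be leftover training or UI processes, stopping them before starting a new run may free more memory than tuning `max_split_size_mb`, since the message reports only 581.12 MiB as reserved but unallocated.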

ollama template not working

Trying to start a pod running Ollama, using either the base template or better-ollama-webui:

create container madiator2011/better-ollama-webui:cuda12.4

cuda12.4 Pulling from madiator2011/better-ollama-webui

Digest: sha256:2926109085a545a2d5e83545fa0b7e2d10c3fa773c7cb2cf95331da37ff72413...

How do I get rid of "Running your iPod without GPUs"?

I've already set up Fooocus on this pod and spent a lot of time on it, but now I can't use it because of this error. The only option I see is to create a new pod, transfer all the settings there, and initialize Fooocus again, which means doing the work twice. Waiting is clearly not an option, because the message never disappears. Does anyone have other options?

Issue with Changing Pod Template on RunPod (PyTorch 2.4.0)

Hello everyone,

I’m experiencing an issue on RunPod when trying to change the template of my pod. Currently, the template is set to PyTorch 2.1, but I want to switch it to PyTorch 2.4.0 to use ComfyUI.

The problem is that even after selecting the PyTorch 2.4.0 template from the list, the interface automatically switches back to PyTorch 2.1 without applying the change. I’ve tried several solutions:

Reloading the page...

Hello Runpod team,

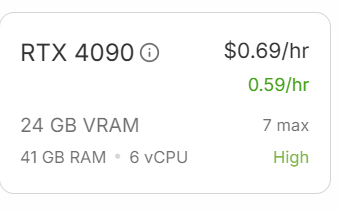

I registered on the RunPod platform and was very excited to speed up my project with better hardware, but the experience has been very disappointing. I deployed a ComfyUI pod, but the machine itself (RTX 4090) had a problem, and after waiting for 2 days I had to terminate the pod. This was after I had finished the configuration.

I have since configured another pod (RTX 4090), and now I don't know when GPUs will be available, even though it is shown as a High Availability instance. I feel stuck here. Can someone guide me on how to get GPUs allocated, instead of not knowing when they will be available? Also, do let me know if there are any best practices for a short-term project....

Proxied SSH not working

SSH'ing via ssh.runpod.io hasn't worked for the last 2 weeks. Is there any plan to fix this?

Persistent Issues with SDXL + IP-Adapter Stability Across RunPod AI Templates (ComfyUI & A1111)

Hey @RunPod Support / Community,

I'm running into persistent critical issues with the hearmeman/comfyui-wanvideo:v9 template when trying to use SDXL + IP-Adapter (specifically with h94 IP-Adapter weights and the LAION CLIP-ViT-H-14 encoder).

Despite correct model placement and extensive troubleshooting, enabling IP-Adapter consistently leads to highly unstable/disfigured outputs or backend crashes, even with simple prompts and base SDXL 1.0 (which works fine without ControlNet/IP-Adapter). The "Resampler size mismatch" error has been resolved by using the correct ~2.5GB ViT-H encoder.

Is anyone else facing issues with stable SDXL + IP-Adapter character consistency? I'm trying to get basic Text-to-Image, Image-to-Image, and eventually Image-to-Video workflows functional.

I'm currently unable to get a usable character output. Any verified workflow examples (JSONs), specific ComfyUI node configurations for this template, or insights into known incompatibilities would be hugely appreciated. I've been trying to resolve this since last Friday....

SSH key generation issue with `runpodctl config`

I'm receiving a new error as of today when I run `runpodctl config --apiKey $RUNPOD_API_KEY`:

Error: failed to update SSH key in the cloud: failed to get SSH keys from the cloud: API error: Unauthorized

I wasn't getting this error before today. I tried generating a new API key (with full perms) just in case there was an issue with it (even though I could use it successfully with a GraphQL request). Still fails. I added a print statement before this to confirm that $RUNPOD_API_KEY has the correct value....

Something wrong with A4000

My Stable Diffusion generations get mangled and very blurry after 15 minutes of use with the same checkpoint on an A4000. I use Forge UI. I have to change models from time to time to get decent generations.

404 Proxy / Terminal error

I've consistently been unable to load a template that mostly worked before. It always shows a 404 error, or the web terminal cannot be spawned. This is the first time it has happened. Does anyone have a similar issue?

The template is atinoda/text-generation-webui:latest. Does anyone have a different template they recommend?...

Solution:

Yep, I think that's it, the image is broken. I'll be using the attached template, I guess.

US-TX-3 Pod Availability

There doesn't seem to be any pod availability in the US-TX-3 region. Is there an estimate for when availability will be back? Thanks!

global networking through REST API

Is it possible to create a pod using the REST API with “Global networking” enabled?

I can't find any information about that on the "Global networking" docs page (https://docs.runpod.io/pods/networking) or in the REST API docs (https://rest.runpod.io/v1/docs#tag/docs/GET/openapi.json).

Thanks...

torch cuda shows no devices available (B200)

On an 8x B200 system (pod lz1ew4cgoiot8f):

```
Python 3.11.11 (main, Dec 4 2024, 08:55:07) [GCC 11.4.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
...
```

very slow 5090 pod

Hello, this pod a02462e46395 seems to be terribly slow. I'm trying to install flash_attn and it has been building for more than 30 minutes. Can someone please check?

error starting container: Error response from daemon: failed to create task for container

Received this email at 2AM: There seems to have been a possible issue with the server that one or more of your pods is hosted on.

The following pods were impacted.

80pvzctxtwaruc

...