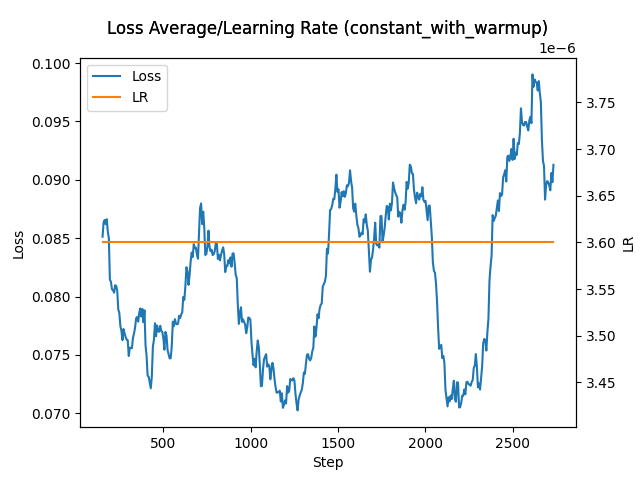

Num batches each epoch = 32

Num Epochs = 100

Batch Size Per Device = 2

Gradient Accumulation steps = 2

Total train batch size (w. parallel, distributed & accumulation) = 4

Text Encoder Epochs: 75

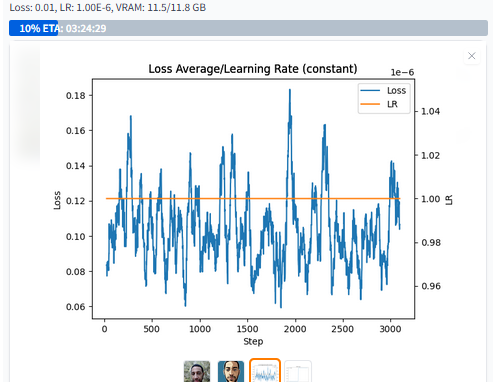

Total optimization steps = 3200

Total training steps = 6400
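
The step counts logged above are consistent with the simple arithmetic sketched below. This is only one reading that reproduces the numbers, not a confirmed account of how the trainer computes them. The variable names are hypothetical, and `num_devices = 1` is an assumption, since the log does not state the device count.

```python
# Values copied from the log above.
batches_per_epoch = 32
num_epochs = 100
batch_size_per_device = 2
grad_accum_steps = 2
text_encoder_epochs = 75

# Assumption: a single device (the log does not state the device count).
num_devices = 1

# "Total train batch size" matches per-device batch size x accumulation x devices.
total_batch_size = batch_size_per_device * grad_accum_steps * num_devices

# "Total optimization steps" matches batches per epoch x epochs.
optimization_steps = batches_per_epoch * num_epochs

# "Total training steps" matches optimization steps x per-device batch size.
training_steps = optimization_steps * batch_size_per_device

# Share of the epochs during which the text encoder is also trained.
text_encoder_fraction = text_encoder_epochs / num_epochs

print(total_batch_size)       # 4
print(optimization_steps)     # 3200
print(training_steps)         # 6400
print(text_encoder_fraction)  # 0.75
```

Under this reading, the logged optimization step count is not divided by the gradient accumulation setting, so it counts batches rather than accumulated optimizer updates.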

Resuming from checkpoint: False

First resume epoch: 0

First resume step: 0

Lora: False, Adam: True, Prec: bf16

Gradient Checkpointing: False

EMA: True

UNET: True

Freeze CLIP Normalization Layers: False

LR: 8e-06