Slow cold start times on Vercel with my T3 App API

I'm trying to debug some really bad cold start times in my web app's API. The UI loads quickly, and I haven't had a problem with it. However, my tRPC API sometimes takes a full 10 seconds to return a response. My API performs fairly simple CRUD operations against a Cloud Firestore backend.
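One way to check whether the slow responses line up with cold starts, rather than with slow queries, is to have the handler report whether it is the first request its instance has served and how long its own body took. This is a framework-agnostic TypeScript sketch, not tRPC or Vercel API: `handleRequest`, `doWork`, and the `TimingInfo` shape are hypothetical, and it assumes, as is typical for Node.js serverless runtimes, that a warm instance keeps its module-level state between requests.

```typescript
// Assumption: module scope runs once per function instance, and warm
// instances are reused, so this flag is only true for the first request
// an instance handles (i.e. the request that triggered the cold start).
let isColdStart = true;

interface TimingInfo {
  coldStart: boolean;
  handlerMs: number;
}

// Hypothetical stand-in for the real CRUD work (in practice an async
// Firestore call).
function doWork(): number {
  let total = 0;
  for (let i = 0; i < 1000; i++) {
    total += i;
  }
  return total;
}

// Hypothetical handler wrapper: records whether this call was cold and how
// long the handler body took, then logs both as a single JSON line.
function handleRequest(): TimingInfo {
  const coldStart = isColdStart;
  isColdStart = false;

  const start = Date.now();
  doWork();
  const handlerMs = Date.now() - start;

  const info: TimingInfo = { coldStart, handlerMs };
  console.log(JSON.stringify(info));
  return info;
}
```

Called twice in the same process, the first call reports `coldStart: true` and the second `coldStart: false`. Once deployed, the JSON lines show up in the function logs: if cold requests take around 10 seconds while `handlerMs` stays small, the time is most likely going into starting the function and loading its modules; if `handlerMs` is also large on cold requests, the first Firestore call is a more likely suspect.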

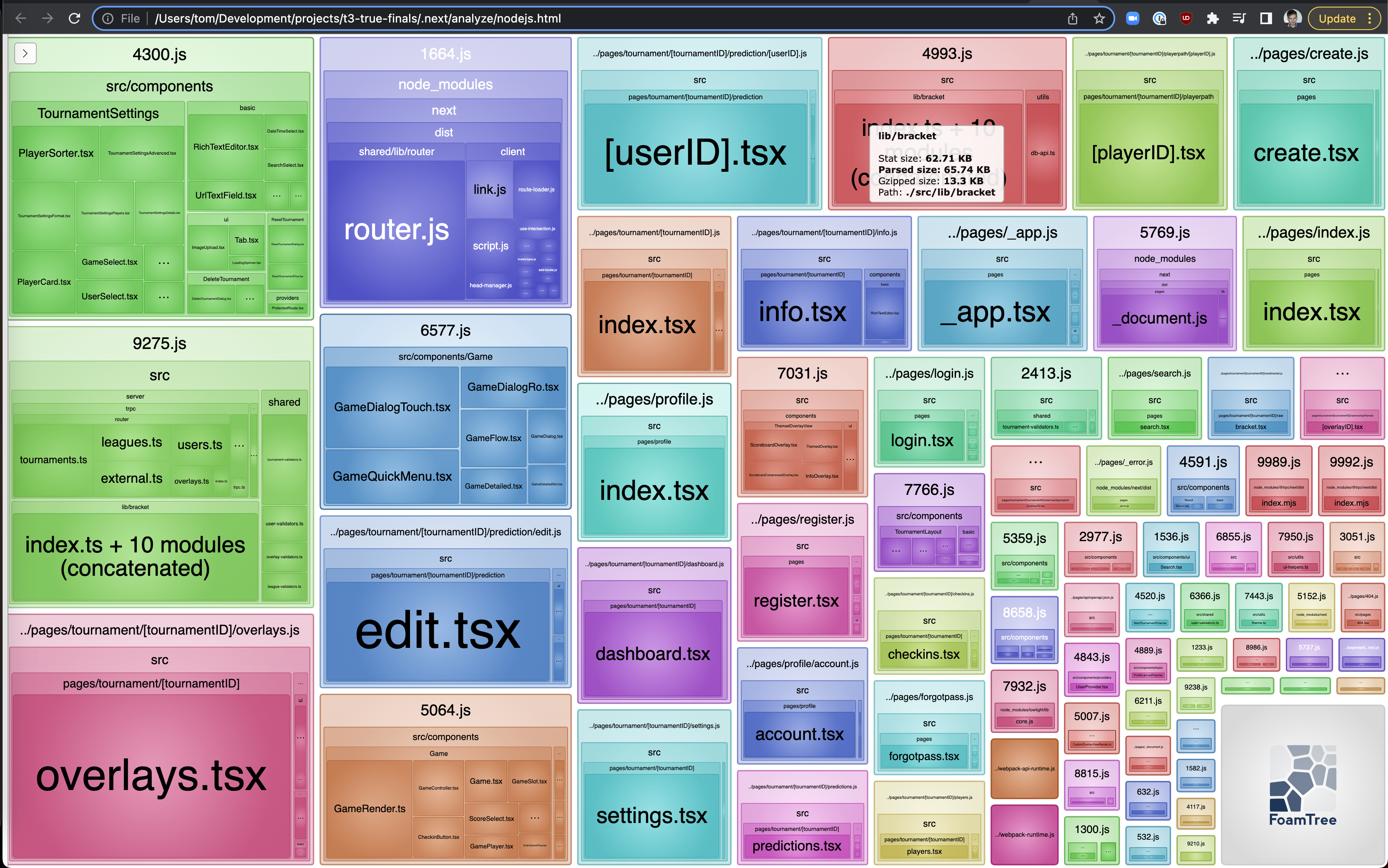

I'm not really sure how to debug this. I got the webpack analyzer working and the results are... confusing:

```
info - Collecting page data
info - Generating static pages (48/48)
info - Finalizing page optimization

Route (pages)                                                      Size  First Load JS
┌ ○ /                                                           6.34 kB         425 kB
├   /_app                                                           0 B         319 kB
├ ○ /404                                                          504 B         319 kB
├ λ /api/[...trpc]                                                  0 B         319 kB
├ λ /api/blocked                                                    0 B         319 kB
├ λ /api/openapi.json                                               0 B         319 kB
├ λ /api/trpc/[trpc]                                                0 B         319 kB
├ ○ /create                                                     3.71 kB         732 kB
├ ○ /forgotpass                                                 2.17 kB         352 kB
├ ○ /login                                                      3.71 kB         350 kB
├ ○ /profile                                                    6.53 kB         355 kB
├ ○ /profile/account                                            6.44 kB         352 kB
├ ○ /register                                                   4.23 kB         354 kB
├ ○ /search                                                     3.43 kB         364 kB
├ ○ /tournament/[tournamentID]                                  3.37 kB         444 kB
├ ○ /tournament/[tournamentID]/checkins                         4.08 kB         432 kB
├ ○ /tournament/[tournamentID]/dashboard                         5.4 kB         433 kB
├ ○ /tournament/[tournamentID]/info                             10.4 kB         461 kB
├ ○ /tournament/[tournamentID]/overlays                         53.4 kB         610 kB
├ ○ /tournament/[tournamentID]/playerpath/[playerID]            5.67 kB         431 kB
├ ○ /tournament/[tournamentID]/players                          3.19 kB         462 kB
├ ○ /tournament/[tournamentID]/prediction/[userID]              1.05 kB         448 kB
├ ○ /tournament/[tournamentID]/prediction/edit                  1.11 kB         448 kB
├ ○ /tournament/[tournamentID]/predictions                      2.97 kB         461 kB
├ ○ /tournament/[tournamentID]/raw/bracket                      3.98 kB         423 kB
├ ○ /tournament/[tournamentID]/raw/overlay/custom/[overlayID]   2.93 kB         330 kB
├ ○ /tournament/[tournamentID]/raw/overlay/themed/[overlayID]    1.2 kB         462 kB
└ ○ /tournament/[tournamentID]/settings                         7.03 kB         696 kB

+ First Load JS shared by all                                                   325 kB
  ├ chunks/framework-1f1fb5c07f2be279.js                                       45.4 kB
  ├ chunks/main-cb03577a9d89cf82.js                                              28 kB
  ├ chunks/pages/_app-880c146f5b0f946f.js                                       244 kB
  ├ chunks/webpack-dd8919a572f3efbe.js                                         1.04 kB
  └ css/74fa4113ed4c0e78.css                                                    6.7 kB
```

For starters, it seems like all of my pages are in the red in terms of 'First Load JS' size. However, again, the only routes I have problems with are the API ones. When I look at the analyzer's block diagrams, I'm even more confused.
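To check "are my bundles too big?" mechanically rather than by color, the route table that `next build` prints can be parsed and compared against a budget. This is a minimal sketch that assumes the row format in the output above (tree character, optional route symbol, route, size, First Load JS); `BUDGET_KB` is a hypothetical number, not a threshold Next.js itself uses, and the helper names are illustrative. Keep in mind that "First Load JS" counts JavaScript sent to the browser, so for the `λ /api/...` rows (0 B of their own) it says little about server-side cold starts.

```typescript
// One parsed row of the `next build` route table.
interface RouteSize {
  route: string;
  sizeKB: number;
  firstLoadKB: number;
}

// Converts a value and unit as printed by the build ("504 B", "6.34 kB")
// into kilobytes (1 kB = 1000 B).
function toKB(value: string, unit: string): number {
  const n = Number(value);
  switch (unit) {
    case "B":
      return n / 1000;
    case "kB":
      return n;
    case "MB":
      return n * 1000;
    default:
      throw new Error(`Unknown unit: ${unit}`);
  }
}

// Matches rows like "├ ○ /create 3.71 kB 732 kB" or "├   /_app 0 B 319 kB".
// Assumption: the symbol column is one of ○ λ ● ƒ, or blank.
const ROUTE_ROW =
  /^\s*[┌├└]\s+(?:[○λ●ƒ]\s+)?(\S+)\s+([\d.]+)\s+(B|kB|MB)\s+([\d.]+)\s+(B|kB|MB)\s*$/u;

function parseRouteLine(line: string): RouteSize | null {
  const m = ROUTE_ROW.exec(line);
  if (m === null) {
    return null; // header, chunk list, or other non-route line
  }
  return {
    route: m[1],
    sizeKB: toKB(m[2], m[3]),
    firstLoadKB: toKB(m[4], m[5]),
  };
}

// Returns the routes whose First Load JS exceeds the budget, largest first.
function overBudget(buildOutput: string, budgetKB: number): RouteSize[] {
  return buildOutput
    .split("\n")
    .map((line) => parseRouteLine(line))
    .filter((r): r is RouteSize => r !== null && r.firstLoadKB > budgetKB)
    .sort((a, b) => b.firstLoadKB - a.firstLoadKB);
}

// Hypothetical budget and a few rows copied from the build output.
const BUDGET_KB = 450;
const sample = [
  "├ ○ /create 3.71 kB 732 kB",
  "├ λ /api/trpc/[trpc] 0 B 319 kB",
  "├ ○ /login 3.71 kB 350 kB",
  "└ ○ /tournament/[tournamentID]/settings 7.03 kB 696 kB",
].join("\n");

for (const r of overBudget(sample, BUDGET_KB)) {
  console.log(`${r.route}: ${r.firstLoadKB} kB (budget ${BUDGET_KB} kB)`);
}
```

With this sample, `/create` and the `settings` page are reported; the others fall under the hypothetical budget.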

I guess my biggest questions are:

1) How do I take the information from the webpack analyzer and act on it?

2) How do I know whether my bundle sizes are too big?

3) Do layouts in `_app.tsx` add to the bundle sizes of the routes in `pages/api`?

4) How can I tell whether tree shaking isn't working?
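For questions 1 and 4, one way to make the analyzer's output actionable is to total module sizes per npm package, so a single heavy dependency stands out; a package that is far larger than the handful of functions you import from it could also hint that tree shaking isn't removing its unused code. This sketch assumes the module list has been exported as an array of `{ name, size }` records, roughly like the `modules` entries in a webpack stats JSON file; the `ModuleStat` type, the helper names, and the sample data are all hypothetical.

```typescript
// Simplified module record. Assumption: roughly the `name` and `size`
// fields of the entries in a webpack stats JSON `modules` array.
interface ModuleStat {
  name: string;
  size: number; // bytes
}

// Maps a module path to the npm package it belongs to, e.g.
// "./node_modules/@scope/pkg/dist/index.js" -> "@scope/pkg".
// Uses the last "node_modules/" segment so nested dependencies are
// attributed to the innermost package; anything else counts as app code.
function packageOf(modulePath: string): string {
  const marker = "node_modules/";
  const idx = modulePath.lastIndexOf(marker);
  if (idx === -1) {
    return "(app code)";
  }
  const parts = modulePath.slice(idx + marker.length).split("/");
  if (parts[0].startsWith("@") && parts.length > 1) {
    return `${parts[0]}/${parts[1]}`;
  }
  return parts[0];
}

// Sums module sizes per package and returns [package, bytes] pairs,
// largest first.
function sizeByPackage(modules: ModuleStat[]): Array<[string, number]> {
  const totals = new Map<string, number>();
  for (const mod of modules) {
    const pkg = packageOf(mod.name);
    totals.set(pkg, (totals.get(pkg) ?? 0) + mod.size);
  }
  return Array.from(totals.entries()).sort((a, b) => b[1] - a[1]);
}

// Made-up sample data for illustration only.
const modulesSample: ModuleStat[] = [
  { name: "./node_modules/@scope/big-sdk/dist/index.js", size: 250000 },
  { name: "./node_modules/@scope/big-sdk/dist/extra.js", size: 50000 },
  { name: "./node_modules/small-util/index.js", size: 4000 },
  { name: "./src/server/api/routers/example.ts", size: 6000 },
];

for (const [pkg, bytes] of sizeByPackage(modulesSample)) {
  console.log(`${pkg}: ${(bytes / 1000).toFixed(1)} kB`);
}
```

In this made-up sample, `@scope/big-sdk` accounts for most of the bytes, which is the kind of entry worth investigating first in a real report.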