
Hmm, I just did

`rclone copy file.dmg r2:sid-experiments/ --s3-upload-cutoff=100M --s3-chunk-size=100M --s3-upload-concurrency=10 -vv` and it transferred a 2GB file just fine 🤔

Here's what I got when I tried that:

Huh, that 400 is interesting

Oh wait

I think you're missing a space after your bucket name haha

OOp

With that issue fixed:

It's weird. It almost seems like somewhere along the line, something just pauses it...

Yeah... it doesn't even start uploading, it looks like

Because what you should be seeing is:

Is there a way to up the bandwidth it can use per chunk? Seems really odd that it is running ~2 MB/s when the connection can run 400+, even if other stuff is running simultaneously.
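For context on what the flags in the command above control: a minimal Python sketch of the part-count and buffer arithmetic. It assumes rclone's size suffixes are binary (`100M` = 100 MiB), the standard S3 multipart limits (at most 10,000 parts, 5 MiB minimum for every part except the last), and that roughly one chunk is buffered per concurrent part upload, which is how rclone's S3 docs describe multipart memory use. `multipart_plan` is a hypothetical helper, not part of rclone.

```python
MiB = 1024 ** 2
GiB = 1024 ** 3

MAX_PARTS = 10_000        # S3 multipart limit on the number of parts
MIN_PART_SIZE = 5 * MiB   # S3 minimum size for every part except the last


def multipart_plan(file_size: int, chunk_size: int, concurrency: int) -> dict:
    """Hypothetical helper: part count and rough in-flight buffer memory for one file."""
    if chunk_size < MIN_PART_SIZE:
        raise ValueError("chunk size is below the 5 MiB S3 minimum")
    parts = -(-file_size // chunk_size)  # ceiling division
    return {
        "parts": parts,
        "within_part_limit": parts <= MAX_PARTS,
        # Assumes roughly one chunk buffered per concurrent part upload.
        "buffer_bytes": chunk_size * concurrency,
    }


# --s3-chunk-size=100M and --s3-upload-concurrency=10 applied to a ~54.779 GiB file
plan = multipart_plan(int(54.779 * GiB), chunk_size=100 * MiB, concurrency=10)
print(plan)  # → {'parts': 561, 'within_part_limit': True, 'buffer_bytes': 1048576000}
```

With 5 MiB chunks the same file would need 11,219 parts, which is over the limit; rclone's S3 docs note that it raises the chunk size automatically for large files of known size, so treat this as a rough estimate rather than what rclone will do exactly.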

Hmm I'm not that familiar with rclone TBH

Do you have a VPN or an app or something that's maybe interfering?

For example, I use Little Snitch, which often does this kind of thing

Nope, or at least not anything that I've enabled. Just running bog-standard Ubuntu.

I have WARP, but it isn't on

Give me your account ID? I'll see what requests you're making

864cdf76f8254fb5539425299984d766

Wut?

Why is it on? The icon shows it is off, and I can't click on it...

Trying to see if I can get it to shut off

So the 1.1.1.1/help page says I'm connected, but warp-cli says I am not

Okay so I only see a few CreateBucket conflicts for this `protomap` bucket, and that's it..

I've seen WARP kill my speed as well

And no other website shows me as being on AS 13335, so I assume it is a bug

Apparently, it is trying to create the protomap bucket, seeing a Conflict from us, and possibly retrying

Might this be related to how I accidentally created a bucket called `protmap` via rclone due to a typo?

Hm, I don't think so? Those would be treated as separate buckets, I think

The requests I see from you are for `protomap` though, not `protmap`

The ones with the Conflict

No, I just meant that I accidentally ran an upload to `protmap`, and instead of throwing an error, it just auto-created a new bucket for it.

But why is it even trying to create one? Clearly it exists...

I also don't see a ListBuckets, so that's very intriguing

It just... thinks it needs a new bucket

Might be my fault? https://forum.rclone.org/t/rclone-copy-is-trying-to-create-new-b2-bucket-with-each-copy-what-am-i-doing-wrong/20530/2

rclone forum

Rclone copy is trying to create new B2 bucket with each copy, what ...

hello, you left out the version of rclone but based on the go version it looks very old. so best of update and test again https://rclone.org/downloads/#script-download-and-install the output would look like rclone v1.53.2 - os/arch: linux/amd64 - go version: go1.15.3 if you post again, you need to post the exact command with debug l...

Apparently the version is very old...

Oh lol

I'm on 1.61.1 FWIW

Did it do anything different this time on your end? Still seems to get stuck...

Nope, I still see a CreateBucket that conflicts from you

Huh...

Any other good way to upload a 54 GB file in a decent amount of time?

I'll try making an rclone forum post about it

FWIW I'm on macOS, but I would be very surprised if that matters

Okay, interestingly, I looked at my own logs and I see a CreateBucket from rclone as well

But it doesn't conflict obv

Wait a second

All this probably means nothing to you, I'm sorry

Do you have this 54G object in your bucket already?

Or like, an old version or something

No:

planet.pmtiles: Need to transfer - File not found at Destination

I have a similar file: map.pmtiles, but it isn't the same size, nor the same name

Just to verify, a CreateBucket on a bucket name that exists would return an error, right?

Yep yep

https://forum.rclone.org/t/repeated-createbucket-requests-while-attempting-to-copy-file/36259 Tell me if I missed something, or said something wrong

rclone forum

Repeated CreateBucket Requests while attempting to copy file

What is the problem you are having with rclone? When attempting to copy a 54.779 GiB local file to the remote with --s3-upload-concurrency higher than 2, rclone continuously sends CreateBucket requests for a bucket that does exist. Because this bucket already exists, the remote returns an conflict, which rclone seems to handle silently, and then...

Okay so I've been comparing your account's logs with mine, and I realized I made one mistake: rclone isn't retrying PutBucket (I didn't look at the timestamps earlier).

In my case, it gets back a 409 from R2 for the PutBucket, then proceeds to start a multipart upload. In your case, it seems to just not do anything after the PutBucket 409
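The expected sequence described here (PutBucket returns 409, the client shrugs and moves on to the multipart upload) can be sketched as a small decision function. In AWS S3, CreateBucket on an existing bucket answers 409 with an error code such as `BucketAlreadyOwnedByYou` (yours) or `BucketAlreadyExists` (someone else's); which code R2 sends is not confirmed in this thread. The function name and return strings are hypothetical and are not rclone's code. Separately, rclone's S3 backend has a `--s3-no-check-bucket` flag that skips this check-or-create step entirely.

```python
from typing import Optional


def next_step_after_create_bucket(status: int, error_code: Optional[str] = None) -> str:
    """Hypothetical decision logic after a CreateBucket (PutBucket) response."""
    if 200 <= status < 300:
        return "CreateMultipartUpload"  # bucket was created; start the upload
    if status == 409 and error_code == "BucketAlreadyOwnedByYou":
        return "CreateMultipartUpload"  # bucket already exists and is ours; carry on
    if status == 409 and error_code == "BucketAlreadyExists":
        return "fail: bucket name is owned by someone else"
    return f"fail: unexpected status {status}"


print(next_step_after_create_bucket(409, "BucketAlreadyOwnedByYou"))  # CreateMultipartUpload
```

The failing case in this thread looks like the client stalling after the first branch that should have returned `CreateMultipartUpload`, which is why the later messages look for something local delaying that next step.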

I'm now going to try to create a 54G file and see what happens

So does it only make one CreateBucket then?

Yeah, sorry, you might want to correct your forum post.

I think I just saw multiple attempts from you and didn't notice that the timestamps were multiple minutes apart

Updated post: https://forum.rclone.org/t/rclone-fails-to-switch-to-multi-part-uploads-when-a-file-is-too-large/36259

rclone forum

Rclone fails to switch to multi-part uploads when a file is too large

What is the problem you are having with rclone? When attempting to copy a 54.779 GiB local file to the remote with --s3-upload-concurrency higher than 2, rclone attempts to PutBucket a single time. When it fails(because the file is too large), it seems to just stop, instead of attempting a multi-part upload. rclone does not provide any visual i...

I'm creating a file as large as yours now, and my first naive guess is that it might not have anything to do with R2 at all, maybe rclone is checksumming it or something.

But this is just a guess, waiting to see what I find
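To put the checksum guess in numbers: rclone's S3 backend docs describe computing an MD5 of the input before uploading (which `--s3-disable-checksum` turns off) and warn that this can delay the start of large uploads. A minimal Python sketch of such a pre-upload pass streams the file in fixed-size blocks, so memory stays flat but every byte has to be read once before the first part goes out. The 200 MB/s sequential read rate in the estimate is an assumed HDD figure, not a measurement.

```python
import hashlib


def md5_of_file(path: str, block_size: int = 8 * 1024 * 1024) -> str:
    """Stream the file through MD5 one block at a time (constant memory)."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        while block := f.read(block_size):
            digest.update(block)
    return digest.hexdigest()


def hash_pass_seconds(file_size: int, read_bytes_per_s: float) -> float:
    """Lower bound on the delay: the whole file has to be read once."""
    return file_size / read_bytes_per_s


if __name__ == "__main__":
    # ~54.779 GiB at an assumed 200 MB/s sequential read rate
    size = int(54.779 * 1024 ** 3)
    print(f"{hash_pass_seconds(size, 200e6) / 60:.1f} minutes")  # 4.9 minutes
```

At that assumed rate the hash pass alone takes about five minutes, the same order of magnitude as the ~4:40 wait reported further down, and a slower disk stretches it proportionally.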

Did it actually start uploading? Usually it starts within a second or two, at least if you don't have the bug I have

mkfile is still creating that file haha

Aha, it's stuck for me as well now

`head -c 50000000000 /dev/random > lol.file`

Hm... It has been for 40s now

Well now I feel stupid

And also FWIW R2 hasn't gotten a CreateMultipartUpload for my account either, so this may be something local going on

Okay, it began after like a minute

Have you tried waiting that long?

I think I tried 1:30, but I'll try again with the new settings

If it is FS related (still a guess), it might be longer for you, depending on the speed of your disk

I'm on an HDD, so probably

I'll try for 5 minutes

It worked, and it only took about 4:40...

I'll try with 50

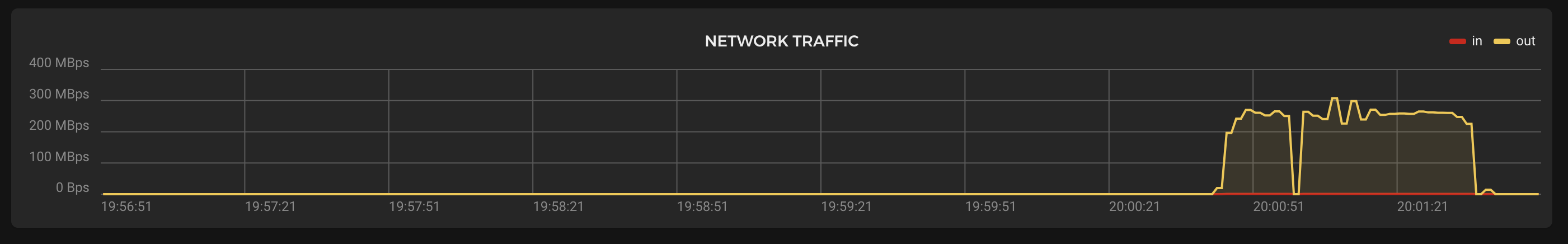

Aw yeah, 165 MB/s!!!

Huh, it ran fast for like 10 seconds, but now it’s running at half a MB/s…

Actually, it’s still slowing down

84 KB/s

Is this towards the end?

Like after it uploaded all parts?

No, I’m at 8%

Running at 7 KB

4

Still slowing down

I almost feel like it uploaded a few parts, and then got rate-limited…

At 200 years ETA

I think it's just dead now...

Anything on your end?

No, I just see a bunch of UploadParts, which is expected

But none of them have finished yet

Ah, a bunch of them just finished

Is it still down at 4KB/s?

Oh, so it's just measuring upload speed really weird?

No, it came back up for a second, but now it is back down to 200 KB/s

Hmm not entirely sure, but does your speed go up and down?

Hm yeah maybe it does measure it wrong

Yeah, it seems to only count it as "uploading" when it is generating new Multiparts. Right now it is at 700 B/s

But it shouldn't be taking that long, your parts are like 100 MB each

Weird...

How many parts are you at? Like 20-30?

It's way too slow

62

They come in 5-10 minute spurts

Maybe it's slow to finalize an upload?

Do you have a way to measure network usage outside of rclone?

I'm at 2.8 MiB/s

Hm, there are a few things I want to try.

1. Upload at a different time

2. Create a bucket in a different location

3. Warp on/off

(Location control for CreateBucket lands tomorrow 🤫)

I'm seeing the same pattern. Both of our buckets are in EEUR (Frankfurt) though

But mine doesn't dip that much

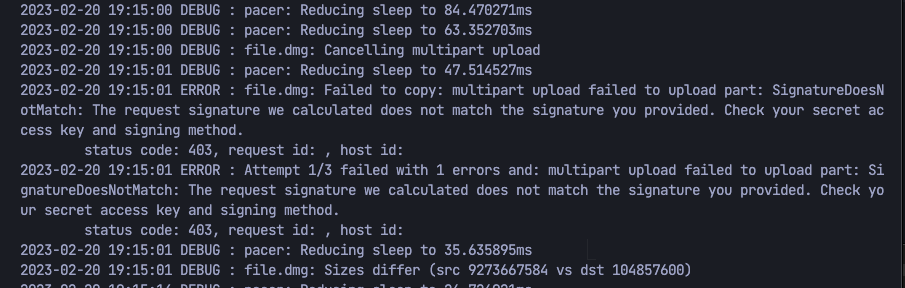

Got some new logs...

Rate limited, increasing sleep to 1.28s

pacer: low level retry 1/2 (error RequestCanceled: request context canceled

caused by: context canceled)

And it restarted itself...

Same, wtf
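The `Rate limited, increasing sleep to 1.28s` line comes from rclone's pacer, which lengthens the pause between requests when the remote pushes back and shortens it again after successes. A generic Python sketch of that idea (multiplicative increase on a rate-limit signal, gradual decay on success, clamped to a range) is below. The 10 ms floor, 2 s ceiling and factor of 2 are illustrative assumptions; seven doublings from 10 ms happen to land on 1.28 s, but this is not a statement of rclone's exact schedule.

```python
class BackoffPacer:
    """Illustrative pacer: lengthen the sleep when rate limited, shorten it on success."""

    def __init__(self, min_sleep: float = 0.01, max_sleep: float = 2.0,
                 attack: float = 2.0, decay: float = 2.0) -> None:
        self.min_sleep = min_sleep  # assumed 10 ms floor
        self.max_sleep = max_sleep  # assumed 2 s ceiling
        self.attack = attack        # multiply the sleep by this on a rate-limit signal
        self.decay = decay          # divide the sleep by this after a success
        self.sleep = min_sleep

    def on_rate_limited(self) -> float:
        self.sleep = min(self.sleep * self.attack, self.max_sleep)
        return self.sleep

    def on_success(self) -> float:
        self.sleep = max(self.sleep / self.decay, self.min_sleep)
        return self.sleep


pacer = BackoffPacer()
for _ in range(7):
    print(f"sleep after rate limit: {pacer.on_rate_limited():g}s")  # ends at 1.28s
```

The clamp matters: without a ceiling, a long run of rate-limit responses would push the sleep past any useful value, and without decay the client would stay slow long after the pressure is gone.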

If you can wait till tomorrow, I'll try this on a bucket in ENAM as well, just for my sanity

In the meantime, if you're curious... I wouldn't stop you from creating a bucket in ENAM and trying this there

Sure! Could be interesting to see.

Nice, mine just borked entirely

In ENAM? Also, to make sure, using a VPN should work? Or is there a flag I can flip to get the location picker?

Oh no, I haven't tried ENAM yet. I was talking about the one I started in Frankfurt

Yeah VPN for now, LocationConstraint from tomorrow! (hopefully)

If you want to create one, I can check where the bucket is

Starting tomorrow you'll be able to `GetBucketLocation` as well
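For reference, the S3 API requests a location by sending a `CreateBucketConfiguration` body with a `LocationConstraint` element on CreateBucket, and GetBucketLocation answers with the same element. A minimal Python sketch that builds and reads those bodies with the standard library; using an R2 region code such as `enam` as the constraint value is an assumption based on this thread, not something confirmed here.

```python
import xml.etree.ElementTree as ET

S3_NS = "http://s3.amazonaws.com/doc/2006-03-01/"


def create_bucket_body(location: str) -> bytes:
    """CreateBucket request body asking for a specific location."""
    root = ET.Element("CreateBucketConfiguration", xmlns=S3_NS)
    ET.SubElement(root, "LocationConstraint").text = location
    return ET.tostring(root)


def parse_location(response_body: bytes) -> str:
    """Extract the value from a GetBucketLocation-style <LocationConstraint> response."""
    return ET.fromstring(response_body).text or ""


print(create_bucket_body("enam").decode())
```

Given the caveat below, a constraint like this would only be honoured the first time a bucket name is used.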

One major caveat right now (which is why we aren't going to be making a lot of noise yet) is that once you create a bucket with some name, deleting and recreating it will not respect region hints / VPNs

So it's like one bucket, one location, forever

Do you have a list of colos that work for ENAM? I'm struggling to get my VPN to actually route through any colos in the Middle East, so I want to know how far I can overshoot...

Ashburn's one

I don't have a list handy though

I don't understand what you mean though

Oh... Eastern North America? I thought it was Egypt, North Africa and the Mediterranean...

Haha no North America yeah

Sorry I didn't realize these region codes are Cloudflare specific

Newark work?

Yeah that should be cool too

Two things. I can't seem to access the settings for the thread, but you might want to lock it so that no one else can hop in and read it.

Secondly, is this going to be permanent?

Ok got it. Is https://864cdf76f8254fb5539425299984d766.r2.cloudflarestorage.com/enamtest in the right place?

Hmm, I can only "Close and Lock" it. No big deal though, there's going to be public docs about it as well. We're not hiding it, we just don't want to "advertise" location constraints yet because they're not very useful (at least in my opinion) till this caveat goes away

Yep this one's in Chicago

Nice!

To answer your second question: the location is permanent, yeah

No, I mean, is there any plans to make it so that at some point, you would be able to change it? I know you say it won't be immediately possible, but will it ever be?

Change it after creation? Or change location after a delete+recreate

Either?

Eventually yeah, we do want to always try and respect the location constraint, regardless of whether or not you've "used" a bucket name before

Change it after creation, without deleting it is MUCH harder though haha

But that's the ultimate goal, to move data around so locations don't matter at all. Your data is always where you need it

So it won't be replicated, but more auto-migrating? Will it be possible to have it replicate too?

Like, if I have a very large number of requests coming from multiple places in the world, having it auto-migrate would be nice, but it would be even nicer to have multiple closer copies to the end users.

ENAM seems to be having the same issue btw

Right, TBH this kind of thing just doesn't exist so it's hard for me to really judge what we'd end up doing. Maybe both? Maybe neither?

Replication in cloud storage?

Or on  specifically?


Oh, if this does end up happening it'll likely be opaque for users, so if I understand your question correctly, it'll probably be CF-only

Hm so this ENAM business is interesting

I was referring to you saying that "this kind of thing just doesn't exist". Did you mean that CF doesn't have any similar products to base a model off of, or that no one in-industry has done it before?

Oh oh I meant at CF. I don't actually know if anyone else has done it

That's what our "Supercloud" thingie is about

But anyway I'm going to look into this slowdown, this is not expected

So is it looking like an R2 issue, or an rclone issue?

Yeah, looks that way

Although to be fair we haven't tried anything other than rclone

Okay one last thing I did before leaving for the day: I uploaded a 12G file from a Hetzner server and that doesn't seem to dip either (I'm attributing the dip in the screenshot to a measurement problem)

My work computer was also getting ~25MB/s up with WARP on. Went up and down, but never went that low.

Do you mind telling me where you are? (feel free to decline / DM me instead)

Do you need my ISP too?

Ha yeah your ISP is what would be most useful, I can ask our network team tomorrow to see if there’s weird stuff going on there

Telia/Arelion 5G Home Broadband

Hey @sdnts, any update on this issue?

Hey no not yet, but I've asked people

I'm guessing you're still seeing this, right?

Yeah. I received a response on the forum post suggesting that I disable checksum generation and reduce the chunk size. I still get the same errors with those changes. The person who responded guessed that the long wait might be rclone waiting for confirmation that the chunk had settled. Does that sound plausible?

Huh, how do they make sure that the chunk has "settled"?

Looks like just a successful response. So basically, rclone uploads the entire chunk, and then it hangs for a minute or however long until R2 actually provides a response to the request.

That's just a guess on our part though

I see. Sorry I got a bit distracted with the location hint bug, but I'll follow up on this

No worries!

Oh hey, just to add to the info you have: now it stalls when I don't have any upload-related config flags. This used to work (really slowly), but now it doesn't.

Do you have any defaults set in your config directly?

I think this should behave the same because rclone's defaults (even if your config doesn't have them) should kick in

No. I specifically nuked my config and then set it up from scratch

Hey, I'm back on this and want to get to the bottom of this. There doesn't seem to be a network problem in Denmark, so I guess back we go to R2.

One thing that's intriguing to me is how you say that we take a while to respond after the upload is complete, and I want to look into that

Should I retry it now? I have a few settings I can tweak/test from the forum, if that might work?

Sure! Please let me know what you find

It did something different. I got about 5 seconds per chunk, but each chunk is very small, so it still takes a very long time.

Okay so at this point I can all but guarantee that rclone's speed measurement isn't accurate.

IIRC your speed fluctuates up and down, and goes really low after a part is uploaded (at least according to rclone)

I'm not a Linux person, but is there a way to see the actual data transfer speeds from your computer and see if they actually do go up and down as well?

I'm on my personal laptop now and even connecting to Denmark via a VPN, I can't repro.

I see the rclone speed measurement going up and down, but I'm on macOS and the network up speed is consistently ~30MB/s up

Meanwhile rclone fluctuates from 15-5

I think it's just doing (data transferred / time elapsed)

So the longer an upload takes, the lower it'll keep going
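The (data transferred / time elapsed) hypothesis can be illustrated by comparing a cumulative average with a rate over a short sliding window. The progress samples below are hypothetical, chosen to mimic bytes being counted in bursts when new parts are read (as described earlier in the thread); which formula rclone actually uses is not established here.

```python
def cumulative_rate(samples):
    """Total bytes / total elapsed time, from (time_s, bytes_so_far) samples."""
    (t0, b0), (t1, b1) = samples[0], samples[-1]
    return (b1 - b0) / (t1 - t0)


def windowed_rate(samples, window_s):
    """Bytes counted during the last `window_s` seconds, divided by that span."""
    t_end = samples[-1][0]
    recent = [(t, b) for t, b in samples if t >= t_end - window_s]
    (t0, b0), (t1, b1) = recent[0], recent[-1]
    return (b1 - b0) / (t1 - t0) if t1 > t0 else 0.0


# Hypothetical progress (seconds, MB counted): one burst, a long gap, another burst.
samples = [(0, 0), (10, 500), (20, 500), (30, 500), (40, 500), (50, 500)]
print(cumulative_rate(samples), windowed_rate(samples, 10))  # 10.0 0.0
samples.append((60, 1000))
print(round(cumulative_rate(samples), 2), windowed_rate(samples, 10))  # 16.67 50.0
```

If a tool counts bytes as it reads parts off disk rather than as they leave the network interface, its number can swing like this while the link itself runs at a steady rate, which is one way rclone's figure and an OS network monitor could disagree.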

My other hunch was that maybe our file type detection is causing delays, so I tried uploading a `.pmtiles` file, but no luck

Oh I should mention my bucket is in Frankfurt (and I'm in Berlin)

Your case specifically is puzzling to me because from how you explain rclone's behaviour, it almost sounds like R2 waits a bit before sending back a 200. R2's Worker just streams your upload to our storage nodes, encrypting it as it goes, so there's really no reason why we'd wait before sending back a response. That could be plausible if we were buffering your file in memory or something, but we don't (can't)
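The streaming-versus-buffering point can be made concrete with a minimal Python sketch: the body is processed in fixed-size blocks as it arrives and each block is forwarded immediately, so memory stays at one block regardless of part size and there is nothing left to do once the last block is written. The SHA-256 step is only a stand-in for per-block work (it is not encryption), and `stream_part` and its sink are hypothetical; this is not R2's implementation.

```python
import hashlib
import io
from typing import BinaryIO, Callable, Tuple


def stream_part(body: BinaryIO, sink: Callable[[bytes], None],
                block_size: int = 64 * 1024) -> Tuple[int, str]:
    """Forward a request body block by block; never hold more than one block.

    Returns (bytes forwarded, SHA-256 of the body) once the last block is sent,
    which is the point at which a streaming server could respond.
    """
    digest = hashlib.sha256()
    total = 0
    while block := body.read(block_size):
        digest.update(block)  # stand-in for per-block work such as encryption
        sink(block)           # hand the block onward immediately
        total += len(block)
    return total, digest.hexdigest()


forwarded = []
size, checksum = stream_part(io.BytesIO(b"x" * 200_000), forwarded.append)
print(size, len(forwarded))  # 200000 4
```

A buffering design would instead read the whole part into memory before doing any of this, which is the kind of server-side delay the "settling" theory would need.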

File type information is also extracted from the first few bytes of your file, so again, no reason why any part number > 1 would show this kind of slowdown
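Sniffing a type from leading bytes only ever looks at the start of the object, which is why it can't explain slowdowns on later parts. A minimal Python sketch with a few well-known signatures (PNG, gzip, ZIP); the table and function names are illustrative and are not R2's detection logic.

```python
from typing import Optional

SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\x1f\x8b": "application/gzip",
    b"PK\x03\x04": "application/zip",
}


def sniff_content_type(first_bytes: bytes,
                       default: str = "application/octet-stream") -> str:
    """Guess a MIME type from the first bytes of an object."""
    for magic, mime in SIGNATURES.items():
        if first_bytes.startswith(magic):
            return mime
    return default


def sniff_multipart(part_number: int, part_data: bytes) -> Optional[str]:
    """Only part 1 starts at offset 0, so only part 1 is worth sniffing."""
    if part_number != 1:
        return None
    return sniff_content_type(part_data[:16])


print(sniff_multipart(1, b"PK\x03\x04rest-of-archive"))  # application/zip
print(sniff_multipart(62, b"PK\x03\x04"))                # None
```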

I actually have one more theory, but I'll wait for your answer to "does your actual network up speed fluctuate as well?" before thinking more about it

Checking the System Monitor, it does fluctuate, but it generally stays below 3 MB/s. It hit 4 MB/s a single time, and then went straight back down to 2 MB/s.

Well, that doesn’t sound too fluctuate-y?

You sure you paid your Internet bill 😛

It's literally the best (and basically the only good) option right now. The neighbors across the street have fiber-to-the-home, but I'm stuck with 5G broadband, which I think fluctuates based on the weather outside.

If this isn't obvious: I'm just completely out of ideas here :/

I forget if you've tried something other than rclone yet, like a JS AWS SDK script or something. If not, that could also be something to try.

Sorry these network-kind of issues are just really hard to debug because there are so many variables
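As a non-rclone comparison point, a minimal Python sketch using boto3 (the AWS SDK for Python, rather than the JS SDK suggested above) against R2's S3-compatible endpoint. The environment variable names are placeholders, `region_name="auto"` follows Cloudflare's published examples but should be treated as an assumption, and `part_size_for` is a hypothetical helper that grows the part size so a large file stays under the 10,000-part limit. boto3 is imported inside `upload` so the helper can be used without it installed.

```python
import os

MiB = 1024 ** 2
MAX_PARTS = 10_000


def part_size_for(file_size: int, preferred: int = 100 * MiB) -> int:
    """Hypothetical helper: >= preferred, rounded up to whole MiB, keeping parts <= 10,000."""
    needed = -(-file_size // MAX_PARTS)       # smallest size that fits in 10,000 parts
    size = max(preferred, needed)
    return -(-size // MiB) * MiB              # round up to a whole MiB


def upload(path: str, bucket: str, key: str, concurrency: int = 10) -> None:
    """Multipart-upload one file to R2 through its S3-compatible endpoint."""
    import boto3  # imported here so part_size_for works without boto3 installed
    from boto3.s3.transfer import TransferConfig

    account_id = os.environ["R2_ACCOUNT_ID"]  # placeholder variable names
    client = boto3.client(
        "s3",
        endpoint_url=f"https://{account_id}.r2.cloudflarestorage.com",
        aws_access_key_id=os.environ["R2_ACCESS_KEY_ID"],
        aws_secret_access_key=os.environ["R2_SECRET_ACCESS_KEY"],
        region_name="auto",
    )
    chunk = part_size_for(os.path.getsize(path))
    config = TransferConfig(
        multipart_threshold=chunk,  # use multipart once the file exceeds one part
        multipart_chunksize=chunk,
        max_concurrency=concurrency,
    )
    client.upload_file(path, bucket, key, Config=config)
```

Usage would look like `upload("planet.pmtiles", "protomap", "planet.pmtiles")`, with the file and bucket names taken from this thread. If this shows the same per-part stalls, that points away from rclone.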

I gave up, and now I’m just slowboating it. Estimated 8 hours for a 50 GB upload
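As a sanity check, the 8-hour estimate lines up with the ~2 MB/s that System Monitor showed earlier (decimal units, taking the 50 GB and 8 h figures at face value):

```latex
\[
\frac{50 \times 10^{9}\ \text{B}}{8 \times 3600\ \text{s}}
  = \frac{5 \times 10^{10}\ \text{B}}{28\,800\ \text{s}}
  \approx 1.74 \times 10^{6}\ \text{B/s} \approx 1.7\ \text{MB/s}
\]
```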