Hi, I'm having issues setting up xformers on an A5000 GPU in SD Dreambooth.

Whenever I click Generate Class Images, I get:

Exception generating concepts: No operator found for memory_efficient_attention_forward with inputs: query : shape=(1, 2, 1, 40) (torch.float32) key : shape=(1, 2, 1, 40) (torch.float32) value : shape=(1, 2, 1, 40) (torch.float32) attn_bias : p : 0.0 flshattF is not supported because: xFormers wasn't build with CUDA support dtype=torch.float32 (supported: {torch.bfloat16, torch.float16}) tritonflashattF is not supported because: xFormers wasn't build with CUDA support dtype=torch.float32 (supported: {torch.bfloat16, torch.float16}) triton is not available requires A100 GPU cutlassF is not supported because: xFormers wasn't build with CUDA support smallkF is not supported because: xFormers wasn't build with CUDA support max(query.shape[-1] != value.shape[-1]) > 32 unsupported embed per head: 40

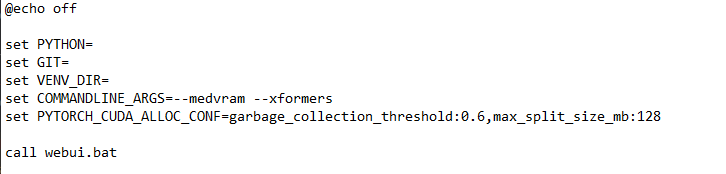

I've set everything up following your YouTube tutorial here: https://www.youtube.com/watch?v=Bdl-jWR3Ukc&t=158s
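The error lists, for each attention operator, the reasons xformers rejected it. The following toy model covers only the constraints quoted in that message. It is a hypothetical helper, not xformers' actual dispatch code. It shows why changing dtype or other settings can't help here: once the build lacks CUDA support, every operator is rejected.

```python
# Hypothetical toy model of the constraints quoted in the error message.
# This is NOT xformers' real dispatcher; it only mirrors the listed reasons.
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Inputs:
    dtype: str            # e.g. "float32" or "float16"
    head_dim: int         # last dim of query/value ("embed per head")
    cuda_built: bool      # was xformers compiled with its CUDA kernels?
    triton_available: bool = False


def rejection_reasons(inp: Inputs) -> Dict[str, List[str]]:
    """Per operator, the reasons it cannot run (empty list means usable)."""
    reasons: Dict[str, List[str]] = {
        "flshattF": [], "tritonflashattF": [], "cutlassF": [], "smallkF": [],
    }
    if not inp.cuda_built:
        # Every operator in the message lists this reason.
        for name in reasons:
            reasons[name].append("xFormers wasn't built with CUDA support")
    for name in ("flshattF", "tritonflashattF"):
        if inp.dtype not in ("bfloat16", "float16"):
            reasons[name].append(f"dtype={inp.dtype} (supported: bfloat16, float16)")
    if not inp.triton_available:
        reasons["tritonflashattF"].append("triton is not available")
    if inp.dtype != "float32":
        reasons["smallkF"].append(f"dtype={inp.dtype} (supported: float32)")
    if inp.head_dim > 32:
        reasons["smallkF"].append(f"unsupported embed per head: {inp.head_dim}")
    return reasons


def usable_operators(inp: Inputs) -> List[str]:
    """Operators with no rejection reasons, in the order listed above."""
    return [name for name, why in rejection_reasons(inp).items() if not why]


print(usable_operators(Inputs("float32", 40, cuda_built=False)))  # []
print(usable_operators(Inputs("float16", 40, cuda_built=True)))   # ['flshattF', 'cutlassF']
```

In this model, a CUDA-enabled build with SD's head size of 40 would still leave operators available. That is why the thread below focuses on swapping the xformers build rather than tweaking generation settings.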

Generate the class images with txt2img; it's much faster and better.

With or without the `--`?

I think it's like this, can you test?

Does not work.

With the `--`.

There is no `--` in the command.

Trying without the `--`. I thought that was how the args are separated.

Nope.

Does not work.

Let me show you.

You're writing it incorrectly.

oh

I see now

ahahah

Yup

I was being stupid

fingers crossed this works

Then I'll try training with Dreambooth again, save some checkpoints, and then use the XYZ plot to pick among the checkpoints.

I think Dreambooth still wouldn't work on 8 GB :D

but it's worth a try, let me know.

will do

I'm just finding that RunPod is harder than running it locally on Windows,

but I don't get close to the image quality your SD is producing

On txt2img I'm still getting the same error:

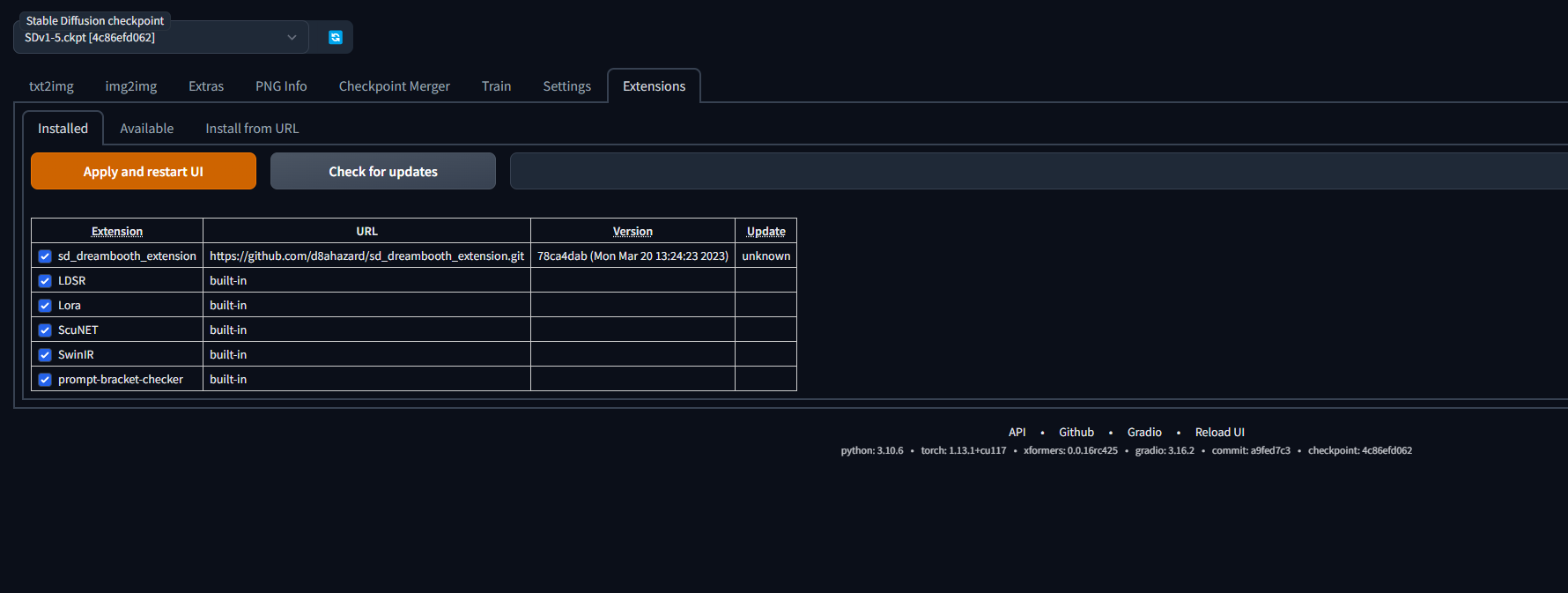

What could be wrong? I'm using SD 1.5.ckpt, and txt2img probably has nothing to do with Dreambooth.

NotImplementedError: No operator found for memory_efficient_attention_forward with inputs: query : shape=(2, 4096, 8, 40) (torch.float16) key : shape=(2, 4096, 8, 40) (torch.float16) value : shape=(2, 4096, 8, 40) (torch.float16) attn_bias : <class 'NoneType'> p : 0.0 cutlassF is not supported because: xFormers wasn't build with CUDA support flshattF is not supported because: xFormers wasn't build with CUDA support tritonflashattF is not supported because: xFormers wasn't build with CUDA support triton is not available requires A100 GPU smallkF is not supported because: xFormers wasn't build with CUDA support dtype=torch.float16 (supported: {torch.float32}) max(query.shape[-1] != value.shape[-1]) > 32 unsupported embed per head: 40

Time taken: 1.82s

Torch active/reserved: 2230/2246 MiB, Sys VRAM: 3577/24257 MiB (14.75%)

python: 3.10.6 • torch: 1.13.1+cu117 • xformers: 0.0.17+6d62b0e.d20230322 • gradio: 3.16.2 • commit: a9fed7c3 • checkpoint: 4c86efd062
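The version footer is a useful clue. `torch: 1.13.1+cu117` is a CUDA 11.7 build of PyTorch. The xformers version, by contrast, carries a local label (`+6d62b0e.d20230322`) instead of being a plain release number. My assumption, not confirmed in the thread, is that this points to a locally compiled xformers without its CUDA kernels, which would match "xFormers wasn't build with CUDA support". The hypothetical helpers below just split the footer and pull out those labels:

```python
from typing import Dict, Optional

# Footer line copied verbatim from the thread.
FOOTER = ("python: 3.10.6 • torch: 1.13.1+cu117 • xformers: 0.0.17+6d62b0e.d20230322 "
          "• gradio: 3.16.2 • commit: a9fed7c3 • checkpoint: 4c86efd062")


def parse_footer(footer: str) -> Dict[str, str]:
    """Split 'name: value • name: value ...' into {name: value}."""
    fields = (part.strip() for part in footer.split("•"))
    return dict(field.split(": ", 1) for field in fields if ": " in field)


def local_label(version: str) -> Optional[str]:
    """Return the PEP 440 local-version label (text after '+'), or None."""
    _, plus, label = version.partition("+")
    return label if plus else None


info = parse_footer(FOOTER)
print(info["torch"], "->", local_label(info["torch"]))        # torch's CUDA build tag
print(info["xformers"], "->", local_label(info["xformers"]))  # non-release label on xformers
```

A release such as `0.0.17.dev476` has no local label, so `local_label` returns `None` for it.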

I'm new to this, so apologies if I ask pretty basic questions.

No luck with Dreambooth on a 3070 Ti with 8 GB of VRAM.

Quick question: I can download LoRA files from Civitai and use them without having to invoke them in the prompt. How can I do that with the LoRA model I'm training?

Also, my model's eyes come out super blue and red, and I'm not sure why. I took the model's photos, ran face restoration on them, and then used the face-restored photos as my dataset.

I'm trying to get Dreambooth up and running on RunPod this morning, and I can't seem to get it working again.

Did something change?

OK, looks like that card isn't supported.

Install a different xformers version.

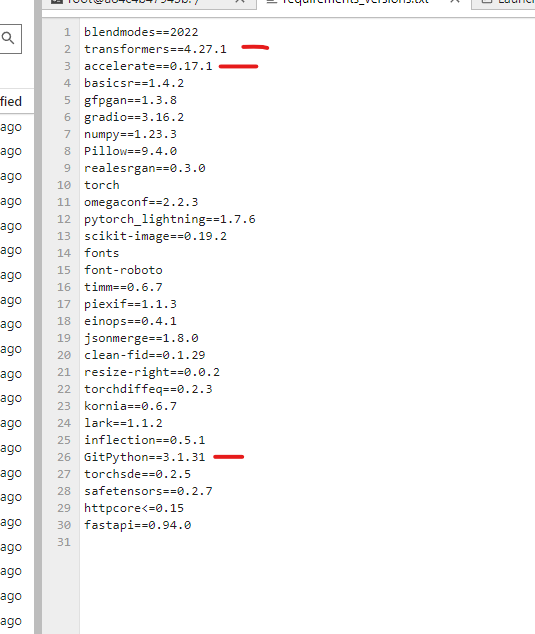

Let me tell you the version.

Try this one, 0.0.17.dev476:

`pip install xformers==0.0.17.dev476`
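The pin must be written without a space after `==` (`xformers==0.0.17.dev476`). Otherwise the shell hands pip two separate arguments, `xformers==` and `0.0.17.dev476`. After installing, run a quick check with the web UI's venv Python to confirm which version that environment actually sees. This is a minimal standard-library sketch. `PINNED` is taken from the thread, and the helper names are hypothetical:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

PINNED = "0.0.17.dev476"  # version suggested in the thread


def installed_version(dist: str = "xformers") -> Optional[str]:
    """Version of `dist` visible to *this* interpreter, or None if absent."""
    try:
        return version(dist)
    except PackageNotFoundError:
        return None


def matches_pin(installed: Optional[str], pinned: str = PINNED) -> bool:
    """True only if the installed version string equals the pin exactly."""
    return installed == pinned


if __name__ == "__main__":
    found = installed_version()
    print(f"xformers installed: {found!r}, matches pin: {matches_pin(found)}")
```

If this reports something like `0.0.17+6d62b0e.d20230322`, the interpreter you ran it with is still seeing the old build.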

Will do it right now, sir.

I see.

That means auto-loaded .pt files.

I've updated the versions as well

so I am not sure what is happening

I usually get blue eyes too :D I think it's related to the prompts.

Your xformers version is probably not compatible.

Activate the venv,

then delete (uninstall) xformers.
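Activating the venv matters because pip must act on the same interpreter the web UI uses. One way to make the uninstall/reinstall step hit the right environment is to call pip through `sys.executable -m pip`, assuming the script is launched with the venv's Python. The version pin comes from earlier in the thread, and the helper names are hypothetical. By default the sketch only prints the commands; it runs them only when `dry_run=False`:

```python
import subprocess
import sys
from typing import List


def reinstall_commands(pinned: str) -> List[List[str]]:
    """pip commands bound to the interpreter running this script (the active venv)."""
    pip = [sys.executable, "-m", "pip"]
    return [
        pip + ["uninstall", "-y", "xformers"],    # remove the current xformers build
        pip + ["install", f"xformers=={pinned}"],  # install the pinned release, no space after ==
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop on the first failing step


if __name__ == "__main__":
    run(reinstall_commands("0.0.17.dev476"))  # pass dry_run=False to actually execute
```

Binding pip to `sys.executable` avoids the common mistake of uninstalling from a system Python while the web UI keeps loading the old build from its venv.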