
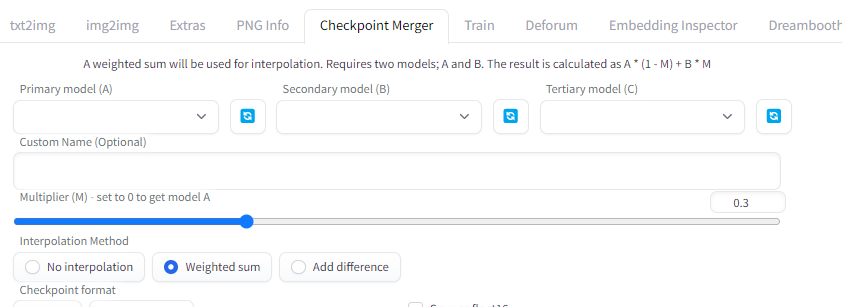

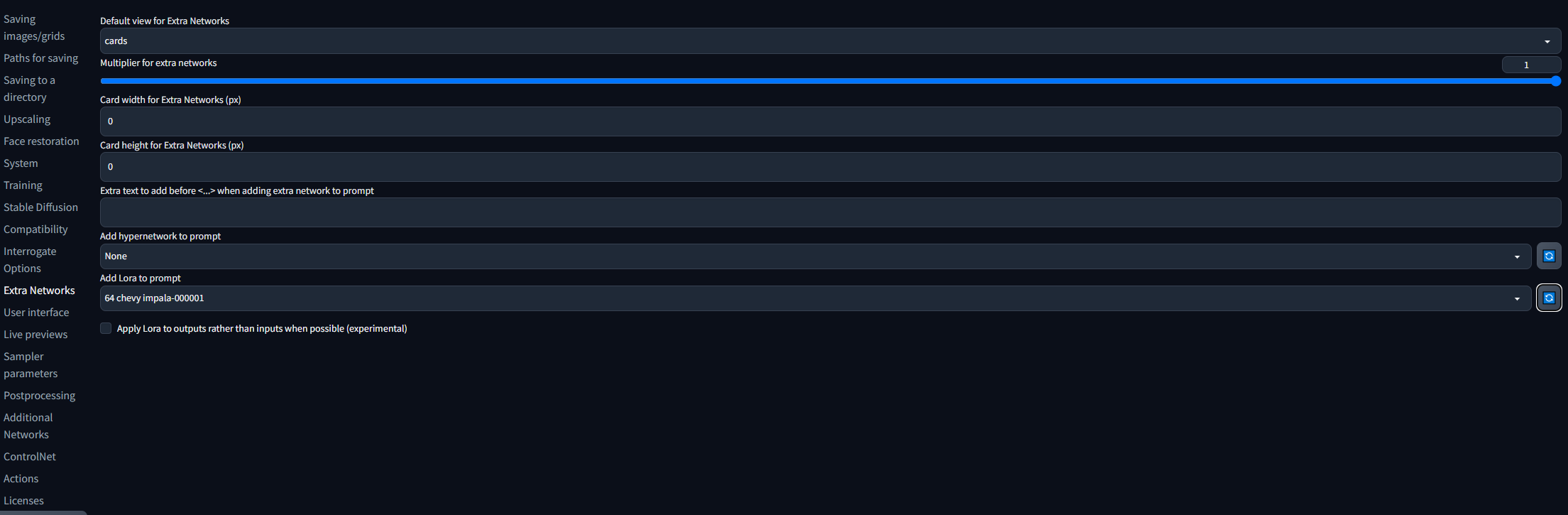

Does your LoRA training tutorial (https://www.youtube.com/watch?v=mfaqqL5yOO4) cover how to make sure LoRA training doesn't break other models that have trained tokens in them?

I notice that with textual inversion, merging checkpoints or LoRAs into another model results in low quality. I did my LoRA training with kohya. I also tried changing the LoRA dim/alpha, but the quality was still not good when I used my trained tokens from other models at the same time as the LoRA, even though the LoRA was properly trained or extracted and I made sure to lower the LoRA weight and the CFG value.

So I was wondering whether the webui DreamBooth extension with LoRA actually gives a different result, one that keeps the quality of other models intact along with the tokens we have trained into them. When I did DreamBooth with Shivam's repo (his actual repo, not the DreamBooth extension), each training is fine individually, but as soon as I train both training sets together in one model, the quality when using them at the same time is not good. @Furkan Gözükara SECourses
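For context on the dim/alpha and LoRA weight settings mentioned above, here is a minimal sketch of how a LoRA merge is commonly described: the low-rank update `up @ down` is scaled by `alpha / dim` and by the user-chosen weight, then added to the base layer. The function names and the tiny matrices are hypothetical and for illustration only; this is not kohya's or the webui extension's actual code.

```python
def matmul(a, b):
    """Plain-Python matrix product of two nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_lora(base, up, down, alpha, multiplier=1.0):
    """Return base + multiplier * (alpha / dim) * (up @ down).

    Assumption: dim (the LoRA rank) is the number of rows of `down`.
    """
    dim = len(down)
    scale = alpha / dim
    delta = matmul(up, down)
    return [
        [w + multiplier * scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


# Toy 2x2 layer with a rank-1 LoRA (made-up numbers).
base = [[1.0, 0.0], [0.0, 1.0]]
up = [[1.0], [2.0]]
down = [[0.5, 0.5]]

for multiplier in (1.0, 0.5):
    merged = merge_lora(base, up, down, alpha=1.0, multiplier=multiplier)
    print(multiplier, merged)
```

Under this description, the merged layer is shared by every prompt, so any other trained token that relies on the same weights sees the shifted values too. Lowering the weight only shrinks the shift proportionally; it does not confine it to the LoRA's own concept.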

YouTube: SECourses - Software Engineering Courses

Our Discord: https://discord.gg/HbqgGaZVmr. Ultimate guide to LoRA training. If I have been of assistance to you and you would like to show your support for my work, please consider becoming a patron on https://www.patreon.com/SECourses


Playlist of Stable Diffusion Tutorials, #Automatic1111 and Google Colab Guides, DreamBooth, Textual In...
