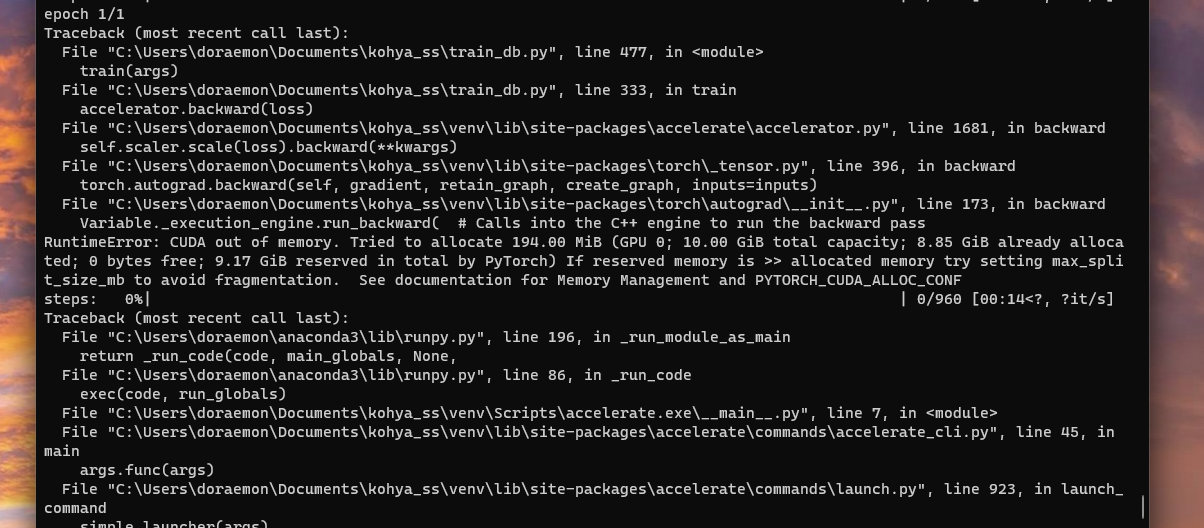

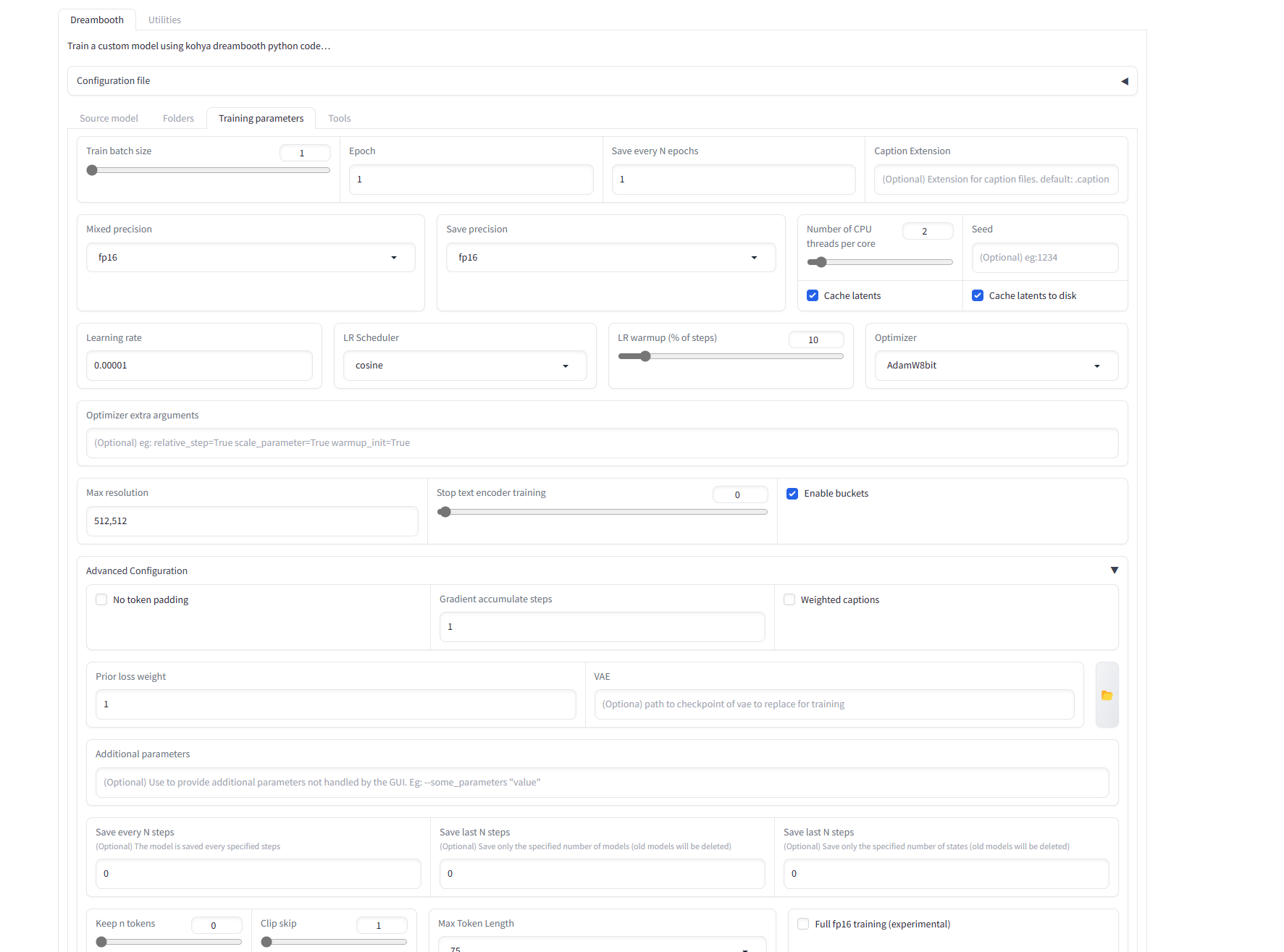

Does anyone know what Stable Diffusion by Automatic1111 is? Why do we need it if I already have Stable Diffusion in kohya_ss?

https://www.patreon.com/SECourses
